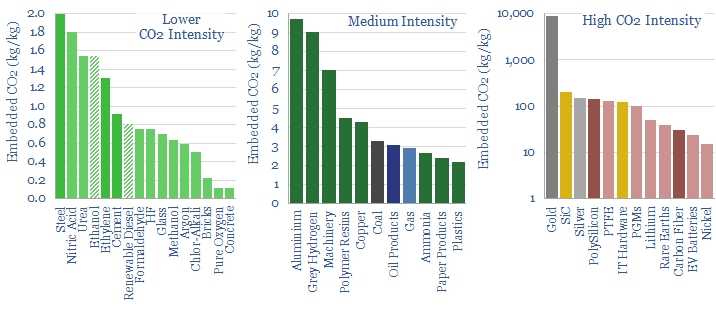

Thunder Said Energy is a research firm, focused on economic opportunities in the energy transition. Our work spans new energies, conventional energies and decarbonizing industries. You can search for keywords below. Or view our research by category, across Wind, Solar, Batteries, Vehicles, Biofuels, CCS, Coal, CO2 Intensity, Digital, Downstream, Energy Demand, Energy Efficiency, Hydrogen, LNG, Metals, Materials, Natural Gas, Nature-based solutions, Nuclear, Oil, Plastics, Power Grids, Shale and Novel Technologies.

Written Insights

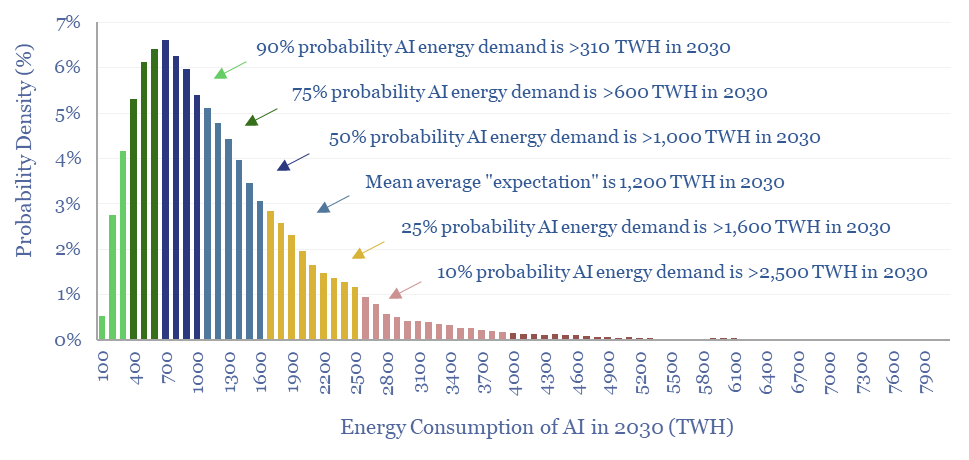

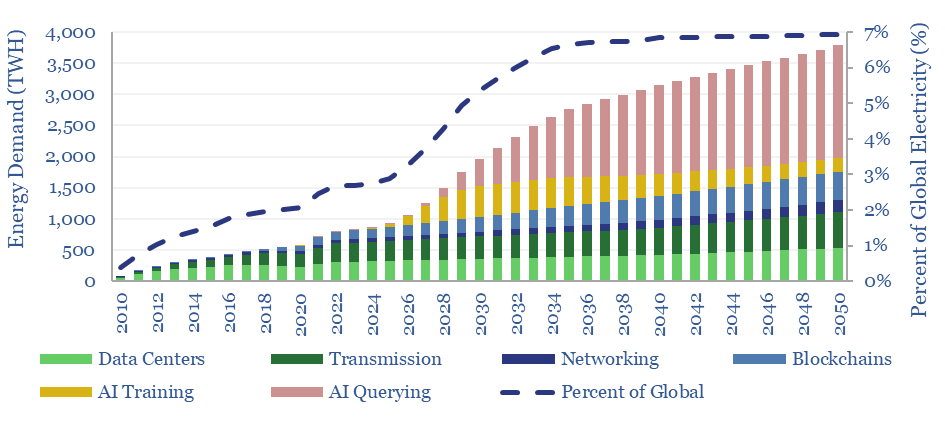

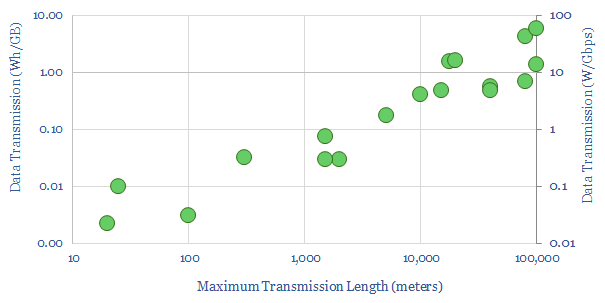

Rising energy demands of AI are now the biggest uncertainty in all of global energy. To understand why, this 17-page note explains AI computing from first principles, across transistors, DRAM, GPUs and deep learning. GPU efficiency will inevitably increase, but compute increases faster. AI most likely uses 300-2,500 TWH in 2030, with a base case of 1,000 TWH.

Read the Report?

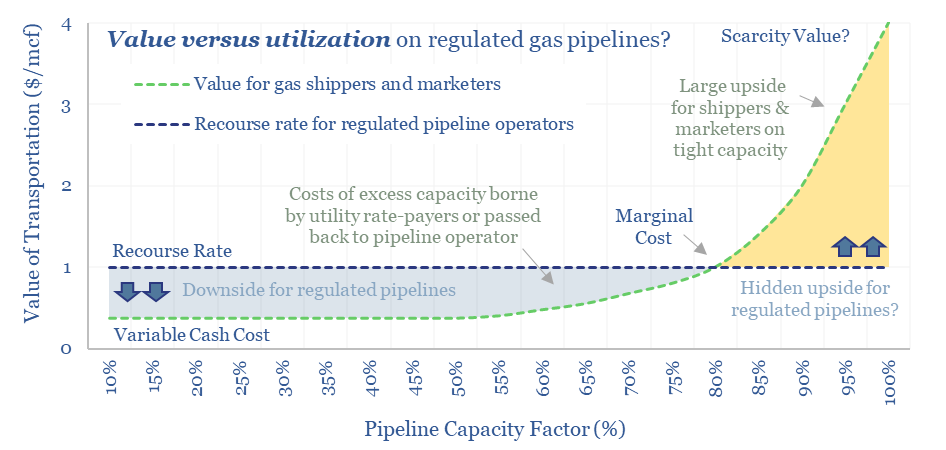

FERC regulations are surprisingly interesting!! In theory, gas pipelines are not allowed to have market power. But increasingly, they do have it: gas use is rising, on grid bottlenecks, volatile renewables and AI; while new pipeline investments are being hindered. So who benefits here? Answers are explored in this report.

Read the Report?

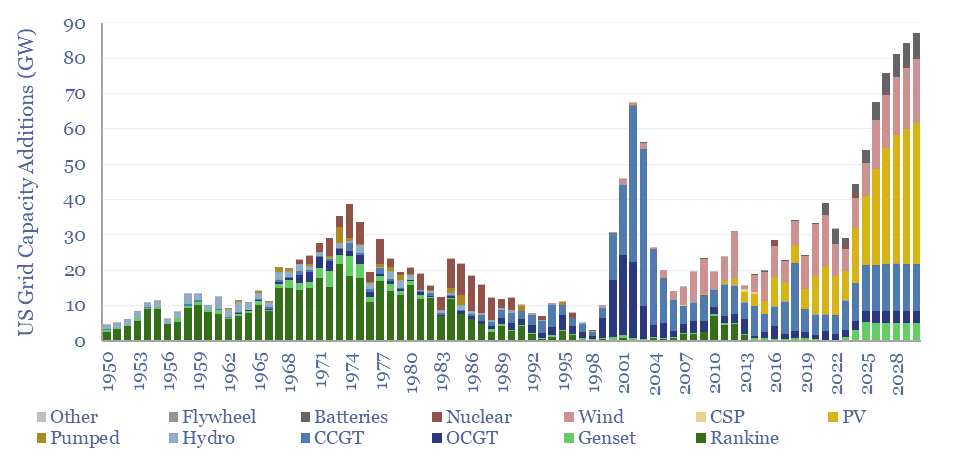

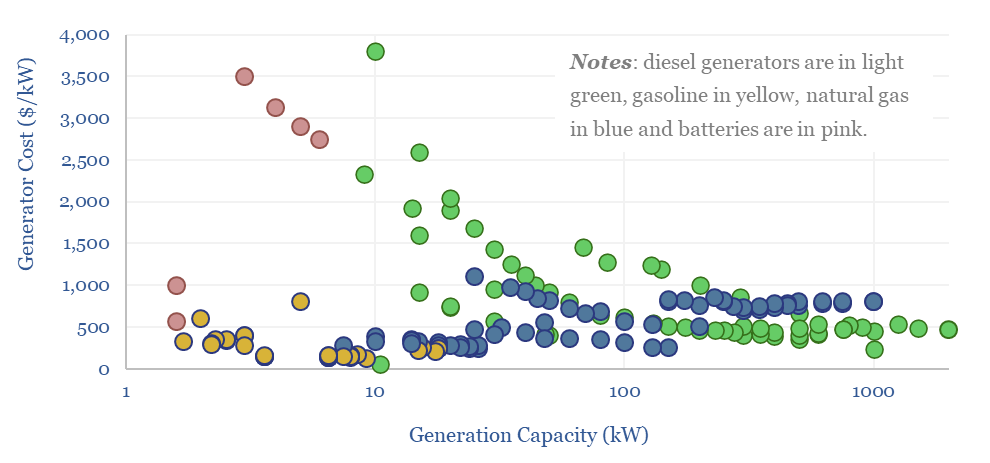

The power demands of AI will contribute to the largest growth of new generation capacity in history. This 18-page note evaluates the power implications of AI data-centers. Reliability is crucial. Gas demand grows. Annual sales of CCGTs and back-up gensets in the US both rise by 2.5x? This is our most detailed AI report to date.

Read the Report?

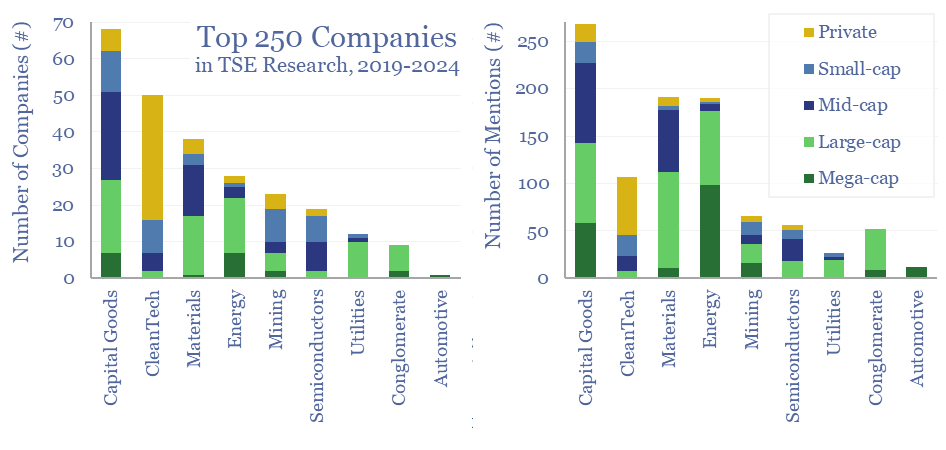

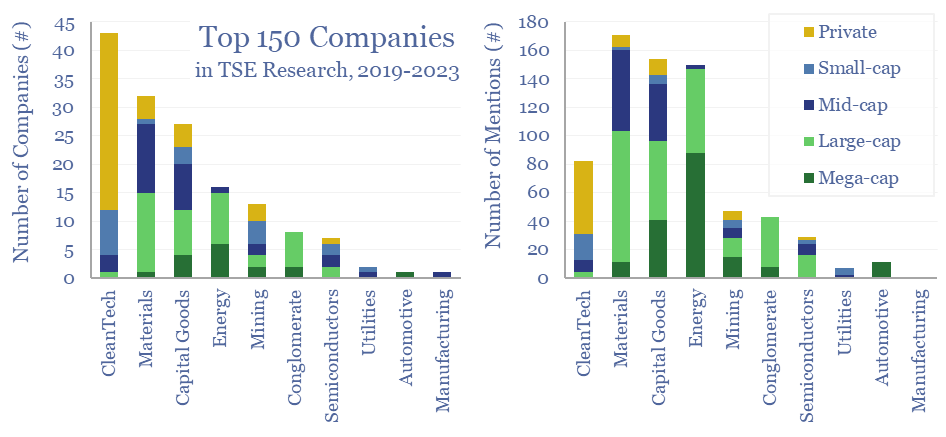

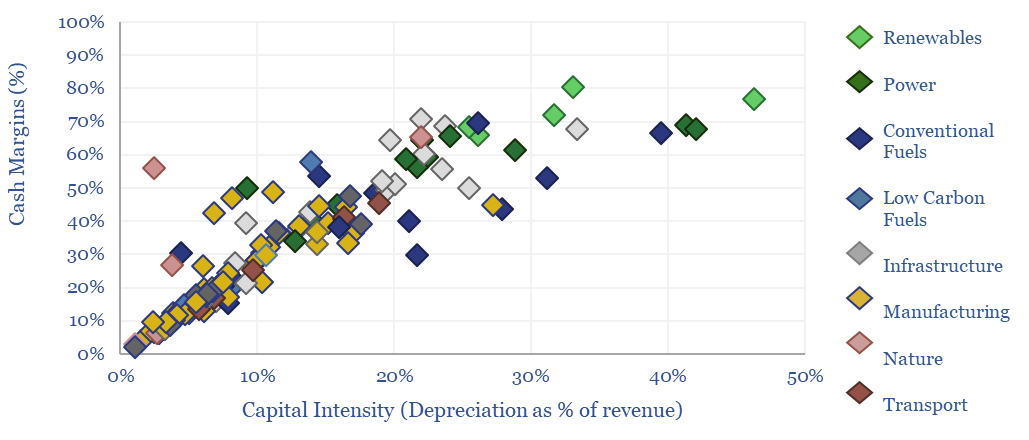

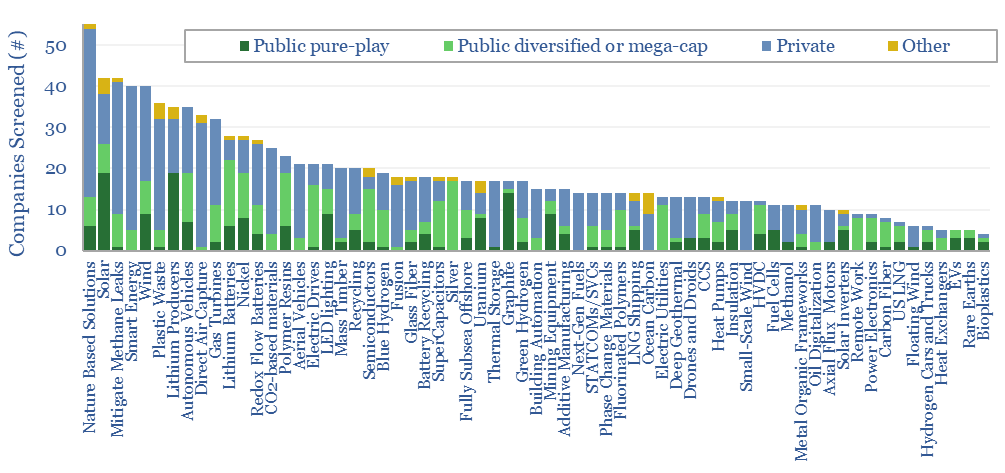

This 11-page note summarizes the key conclusions from our energy transition research in 1Q24 and across 1,400 companies that have crossed our screens since 2019. Volatility is rising. Power grids are bottlenecked. Hence what stands out in capital goods, clean-tech, solar, gas value chains and materials? And what is most overlooked?

Read the Report?

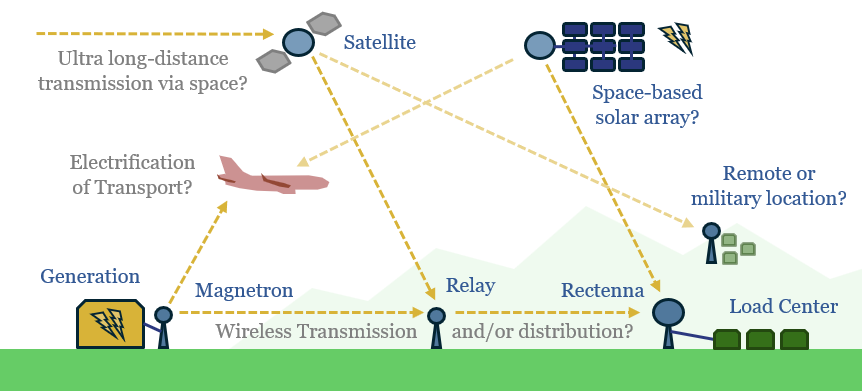

What if large quantities of power could be transmitted via the 2-6 GHz microwave spectrum, rather than across bottlenecked cables and wires? This 12-page note explores the technology, advantages, opportunities, challenges, efficiencies and costs. We still fear power grid bottlenecks.

Read the Report?

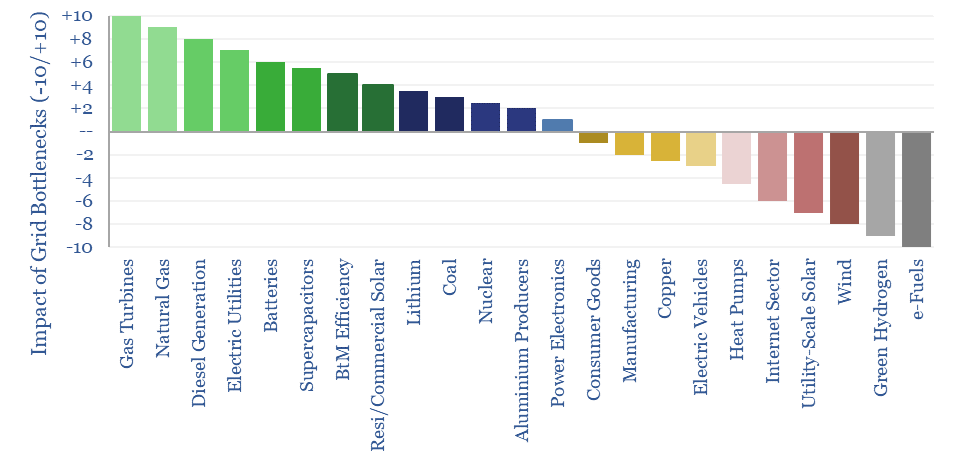

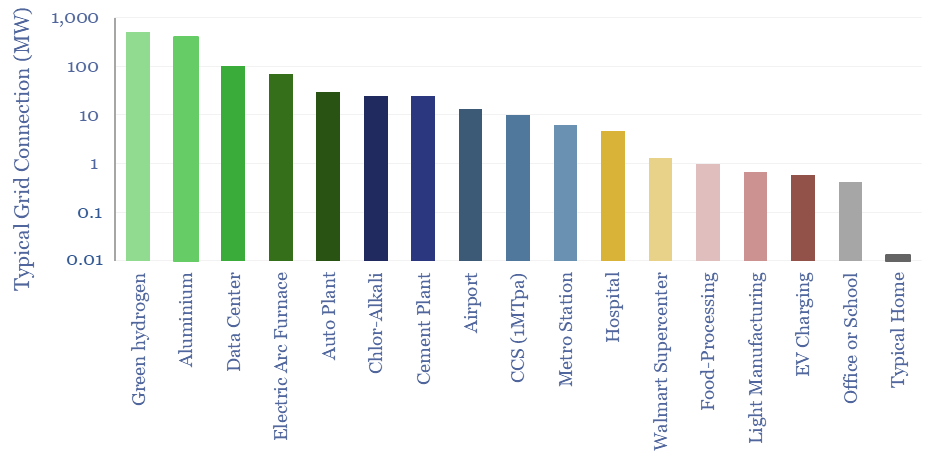

What if the world is entering an era of persistent power grid bottlenecks, with long delays to interconnect new loads? Everything changes. Hence this 16-page report looks across the energy and industrial landscape, to rank the implications across different sectors and companies.

Read the Report?

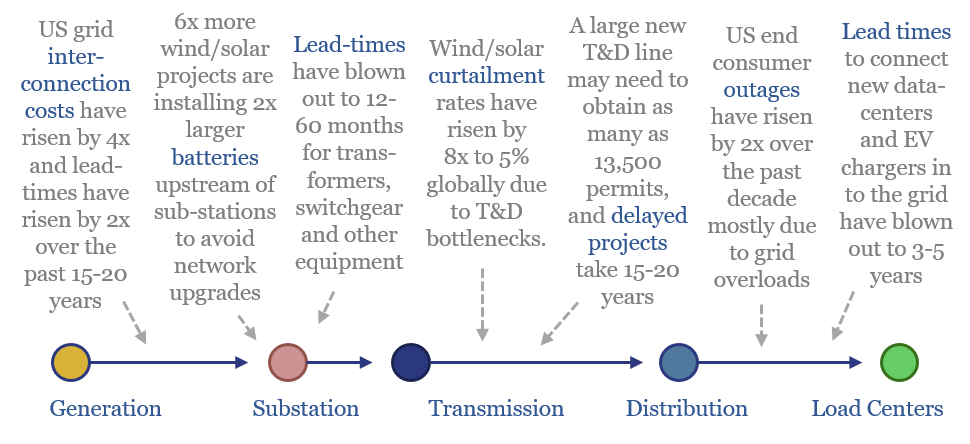

Power grids will be the biggest bottleneck in the energy transition, according to this 18-page report. Tensions have been building for a decade. They are invisible unless you are looking. And the tightness could last a decade. Further acceleration of renewables may be thwarted. And we are re-thinking grid back-ups.

Read the Report?

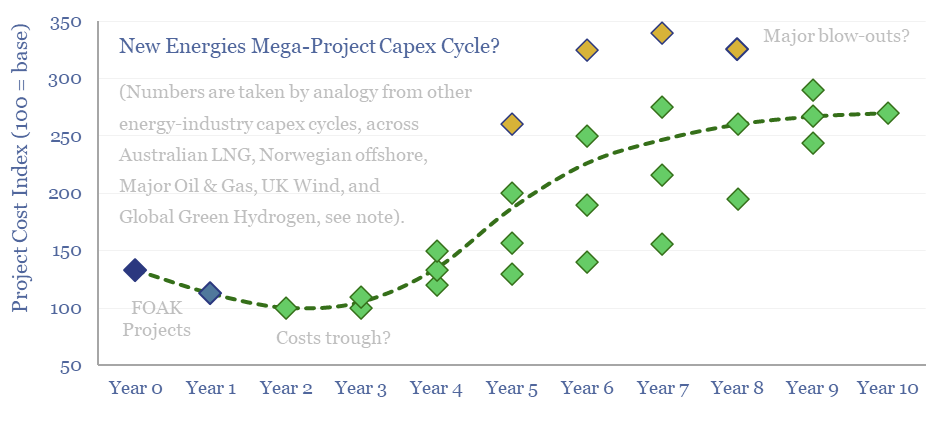

Energy transition is the largest construction project in human history. But building in boom times is associated with 2-3x cost inflation. This 10-page note reviews five case studies of prior capex booms, and argues for accelerating FIDs, even in 2024. The outlook for project developers depends on their timing? And who benefits across the supply chain?

Read the Report?

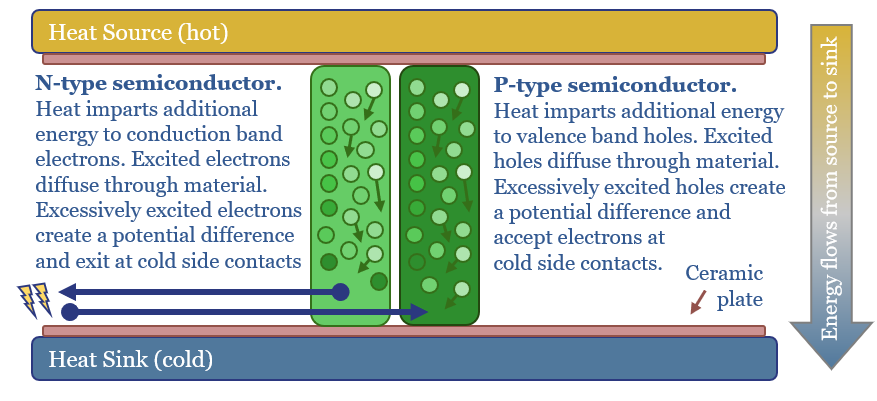

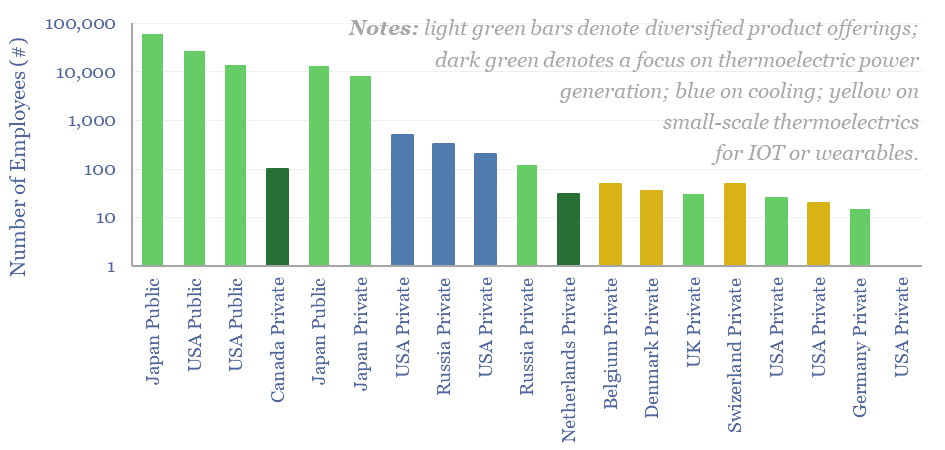

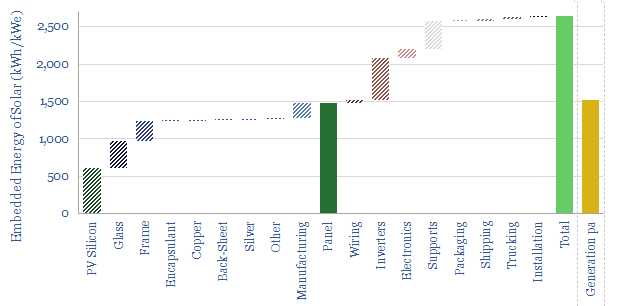

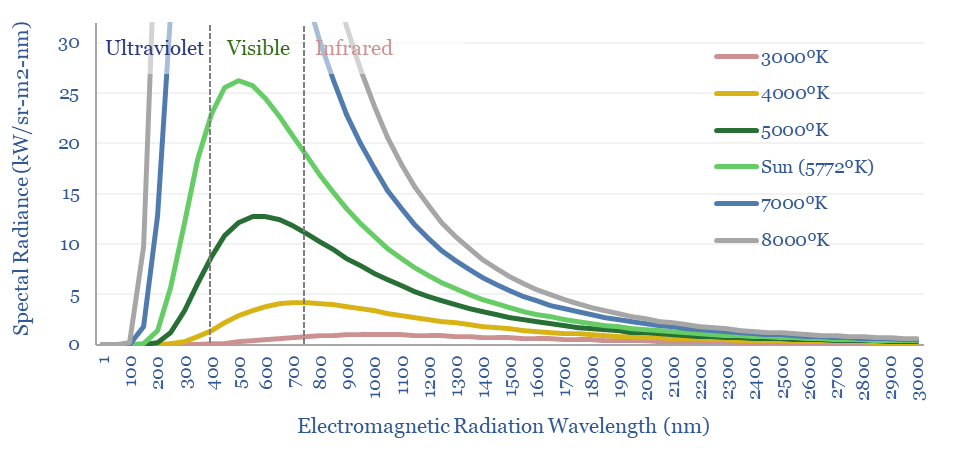

Solar semiconductors have changed the world, converting light into clean electricity. Hence can thermoelectric semiconductors follow the same path, converting heat into electricity with no moving parts? This 14-page report reviews the opportunity for thermoelectric generation in the energy transition, challenges, efficiency, costs and companies.

Read the Report?

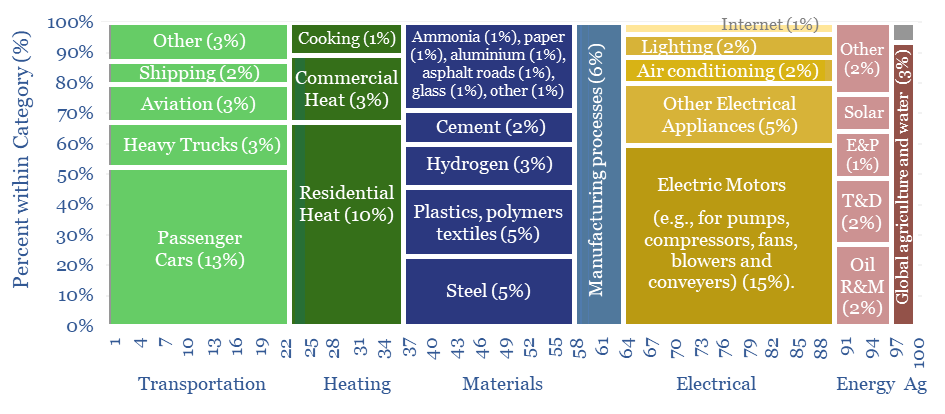

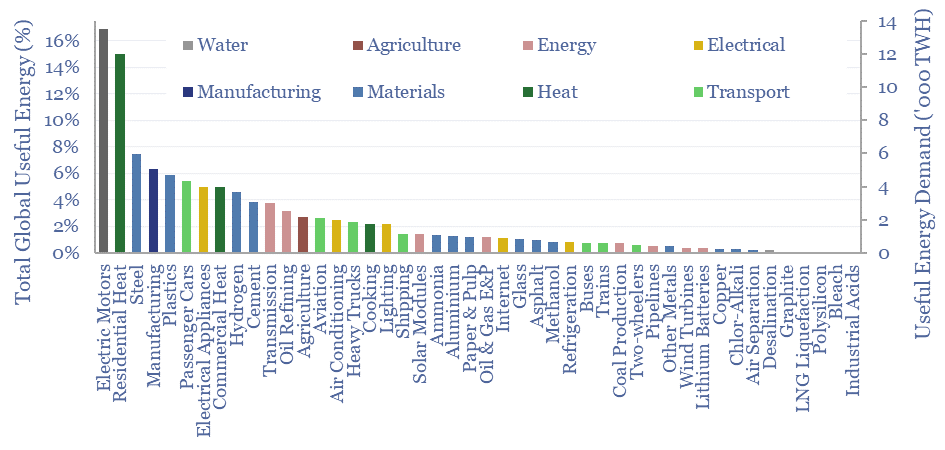

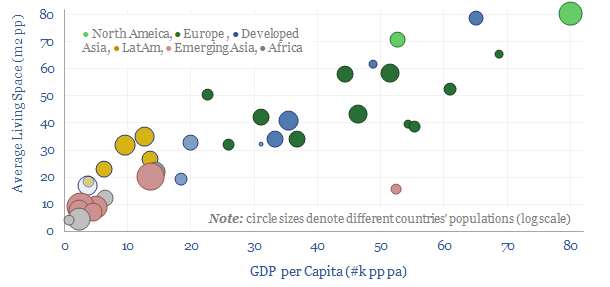

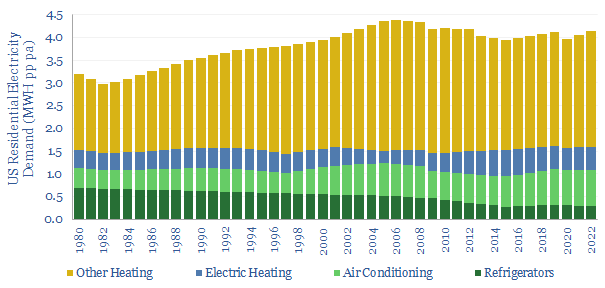

We have attempted a detailed breakdown of global energy demand across 50 categories, to identify emerging opportunities in the energy transition, and suggesting upside to our energy demand forecasts? This 12-page note sets out our conclusions and is intended a useful reference.

Read the Report?

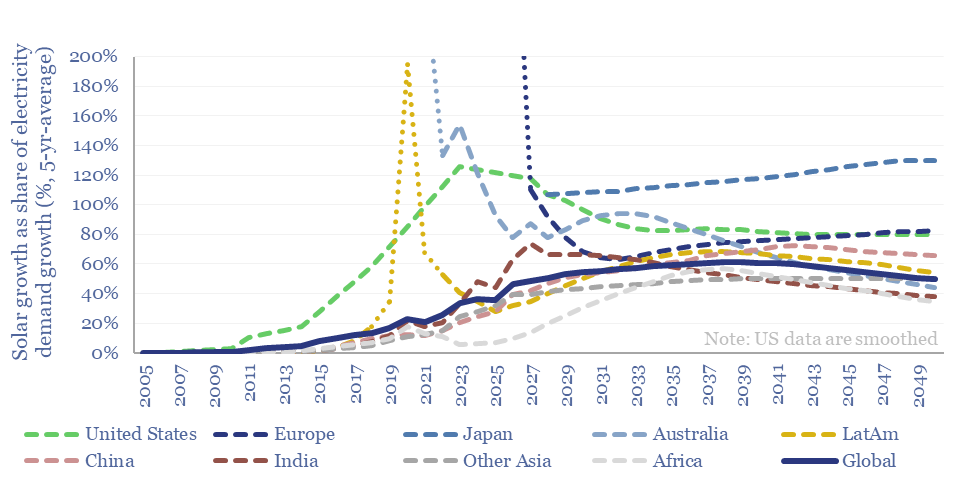

How much new solar can the world absorb in a given year? And are core markets such as the US now maturing? This 15-page note refines our solar forecasts using a new methodology. Annual solar adds will likely plateau at 50-100% of total electricity demand growth in most regions. What implications and adaptation strategies?

Read the Report?

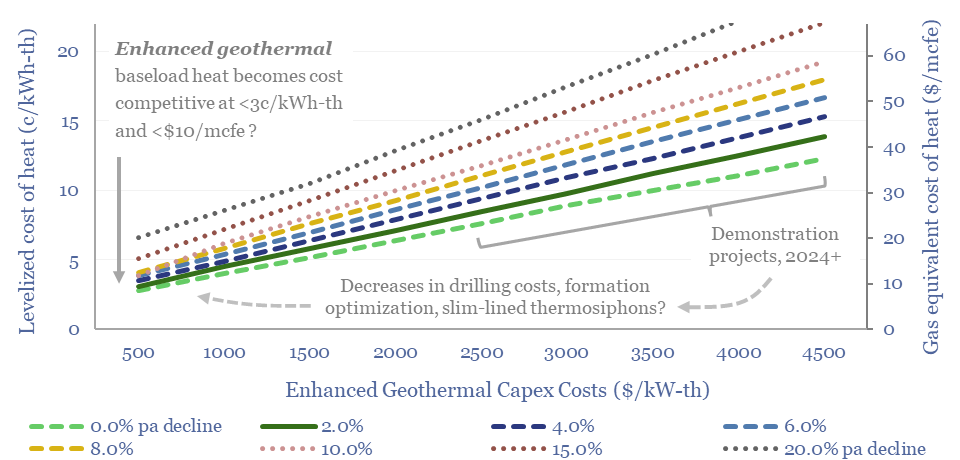

Momentum behind enhanced geothermal has accelerated 3x in the past half-decade, especially in energy-short Europe, and as pilot projects have de-risked novel well designs. This 18-page report re-evaluates the energy economics of geothermal from first principles. Is there a path to cost-competitive, zero-carbon baseload heat?

Read the Report?

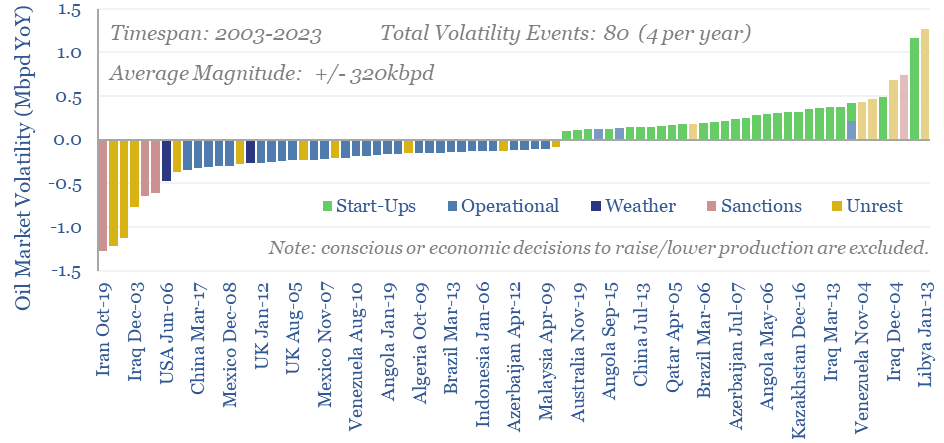

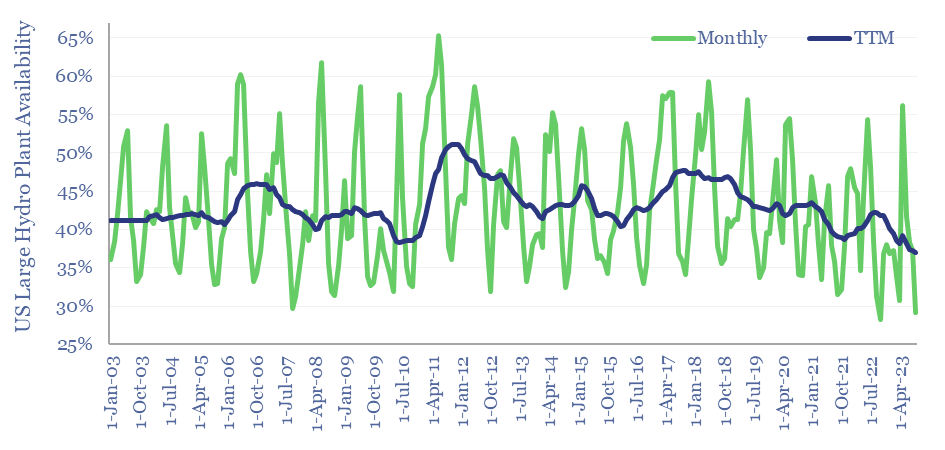

Oil markets endure 4 major volatility events per year, with a magnitude of +/- 320kbpd, on average. Their net impact detracts -100kbpd. OPEC and shale have historically buffered out the volatility, so annual oil output is 70% less volatile than renewables’ output. This 10-page note explores the numbers and the changes that lie ahead?

Read the Report?

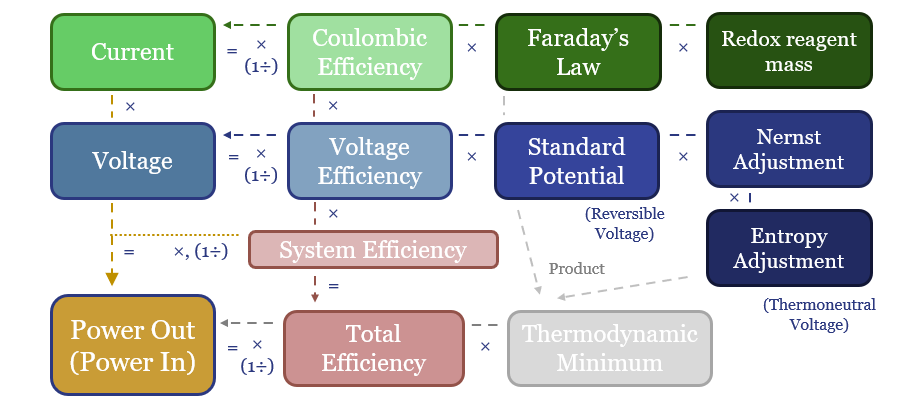

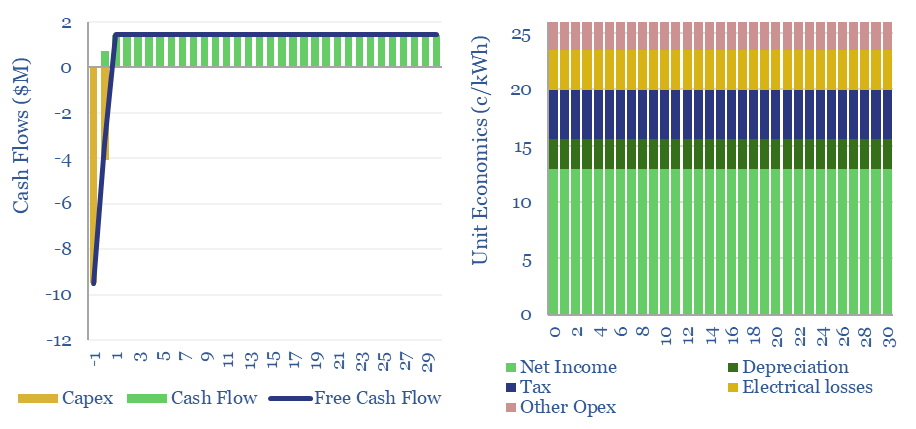

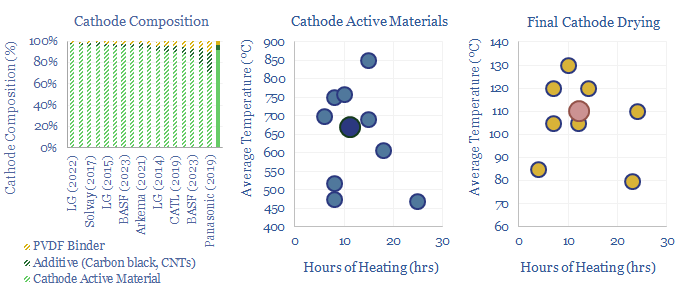

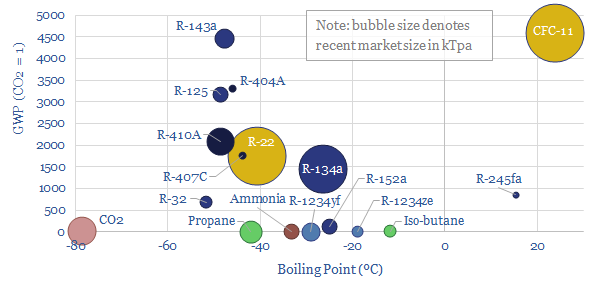

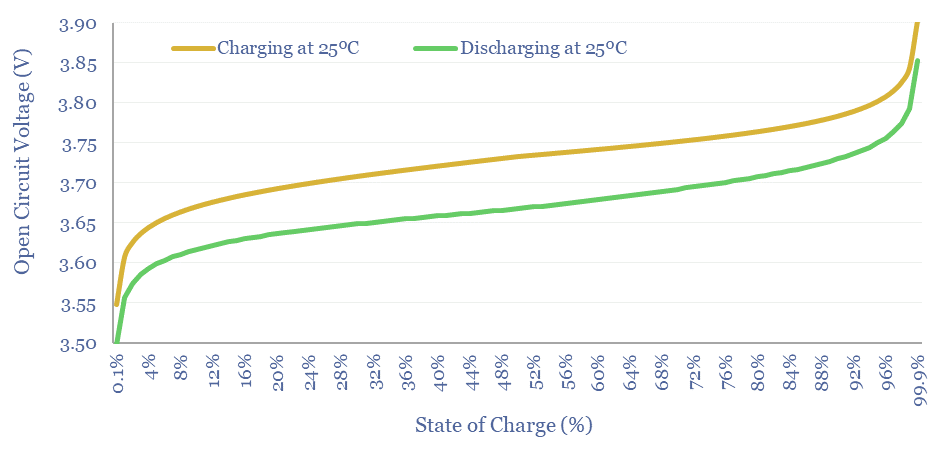

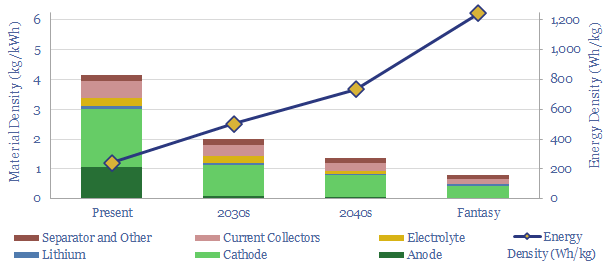

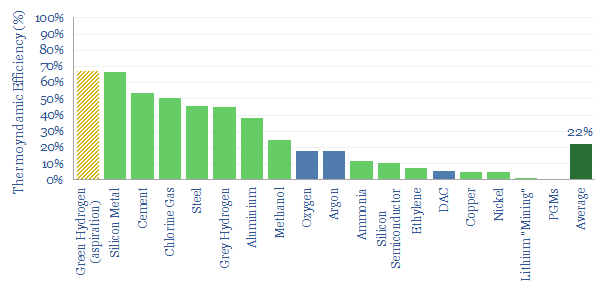

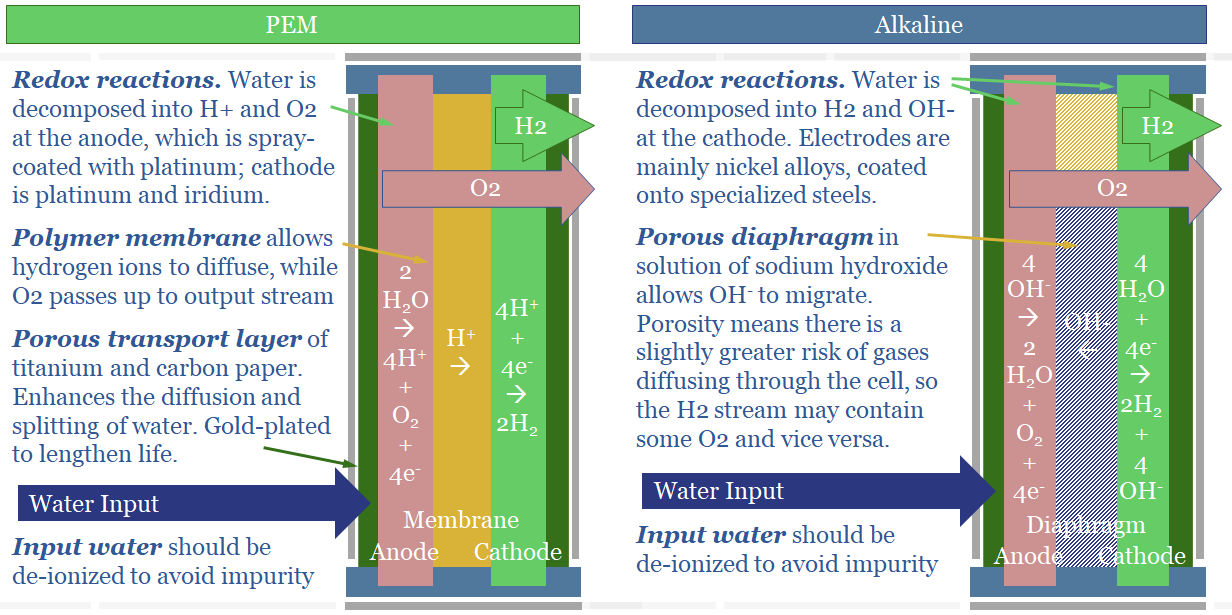

Batteries, electrolysers and cleaner metals/materials value chains all hinge on electrochemistry. Hence this 19-page note explains the energy economics from first principles. The physics are constructive for lithium and next-gen electrowinning, but perhaps challenge green hydrogen aspirations?

Read the Report?

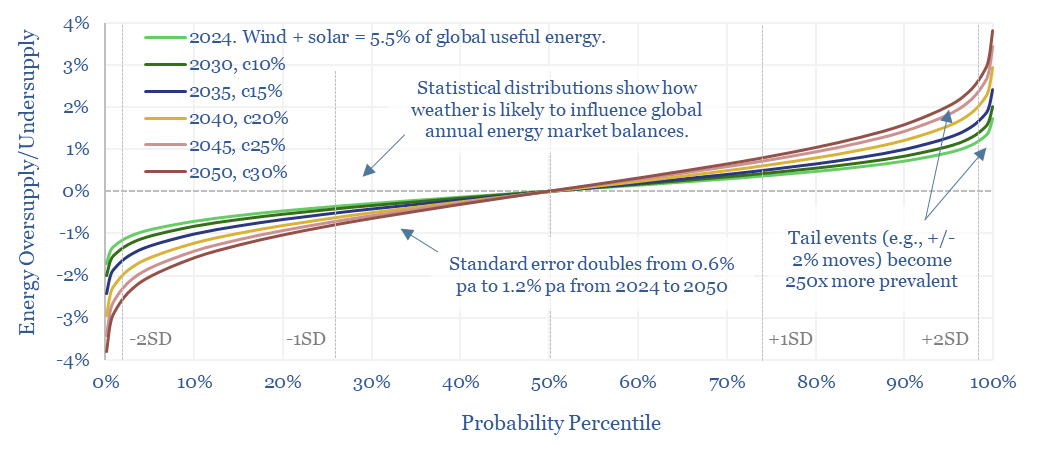

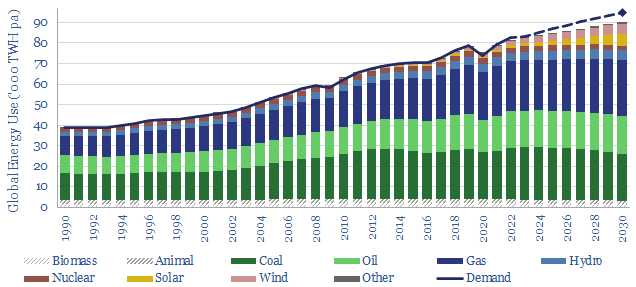

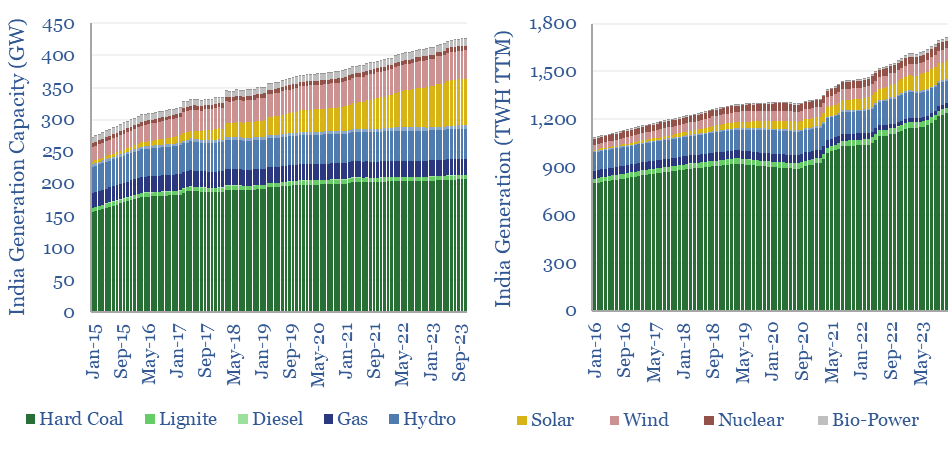

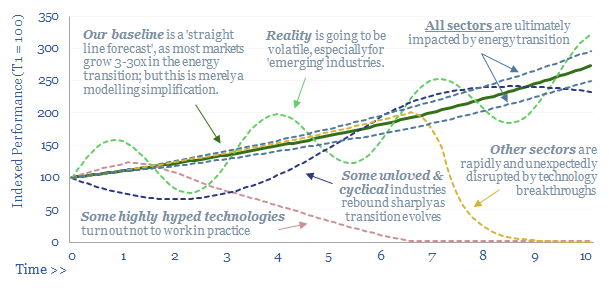

This 14-page note predicts a staggering increase in global energy market volatility, which doubles by 2050, while extreme events that sway energy balances by +/- 2% will become 250x more frequent. A key reason is that the annual output from wind, solar and hydro all vary by +/- 3-5% each year, while wind and solar will ramp from 5.5% to 30% of all global energy. Rising volatility can be a kingmaker for midstream companies? What other implications?

Read the Report?

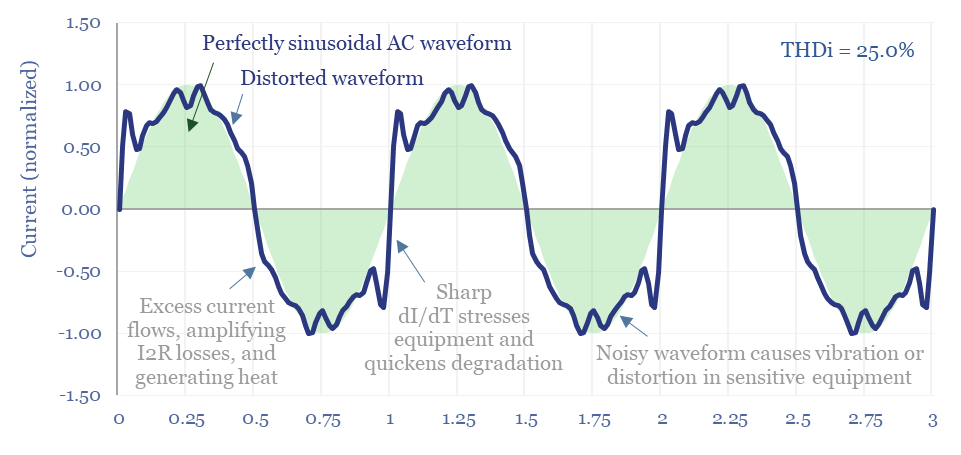

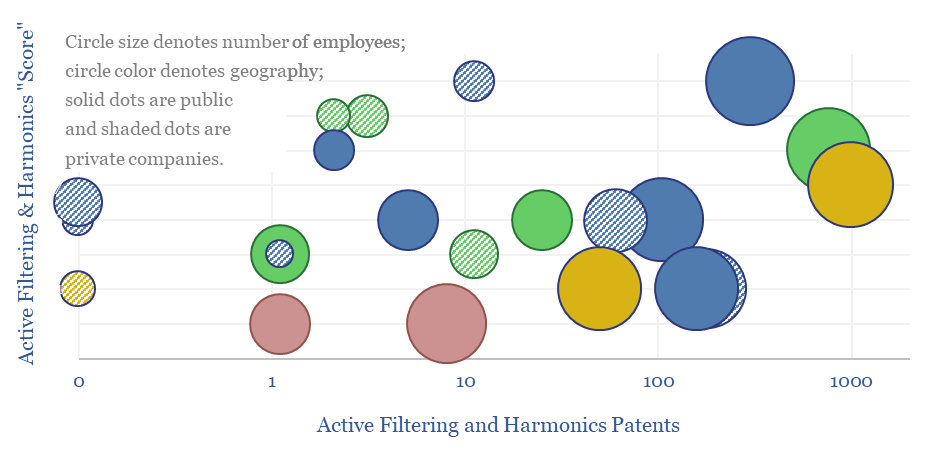

The $1bn pa harmonic filter market likely expands by 10x in the energy transition, as almost all new energies and digital technologies inject harmonic distortion to the grid. This 17-page note argues for premiumization in power electronics, including around solar, and screens for who benefits?

Read the Report?

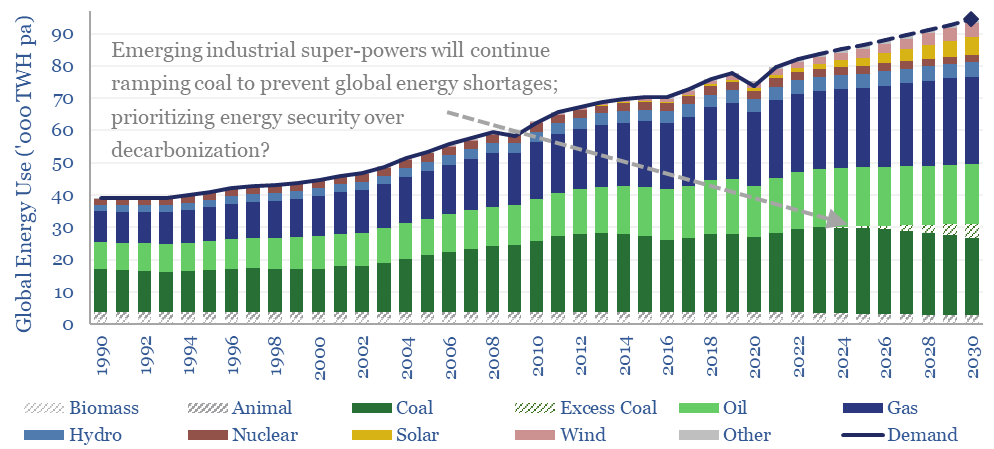

Navigating the energy transition in 2024 requires focusing in upon bright spots, because global energy priorities are shifting. Emerging nations are ramping coal to avoid energy shortages. Geopolitical tensions are escalating. So where are the bright spots? This 14-page note makes 10 predictions for 2024.

Read the Report?

150 core companies have been mentioned 700 times across all of our research since 2019; within a broader list of 1,300 total companies exposed to energy transition, diversified by geography, by size and by segment. This 12-page note draws conclusions about the most mentioned companies in our energy transition research.

Read the Report?

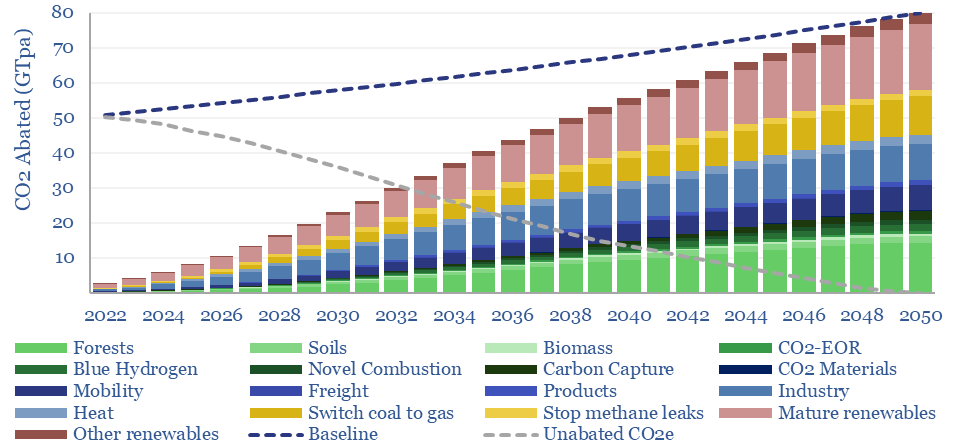

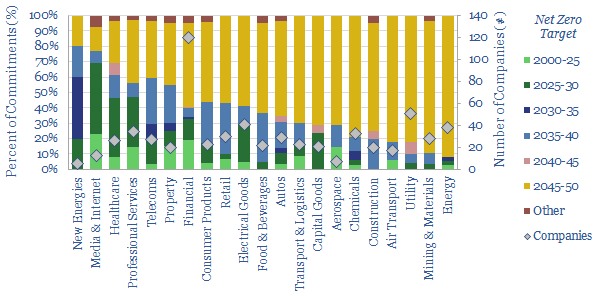

What is the most likely route to net zero by 2050, decarbonizing a planet of 9.5bn people, 50% higher energy demand, and abating 80GTpa of potential CO2? Net zero is achievable. But only with pragmatism. This 20-page report summarizes the best opportunities, resultant energy mix, bottlenecks for 30 commodities, and changes to our views in 2023.

Read the Report?

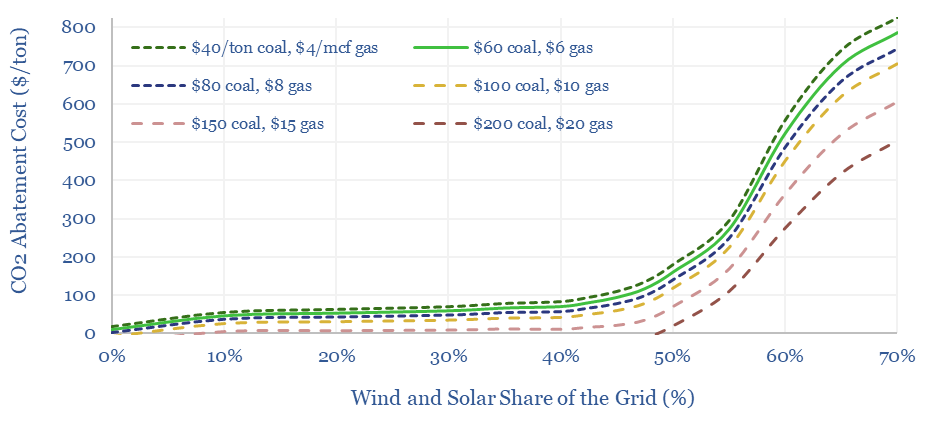

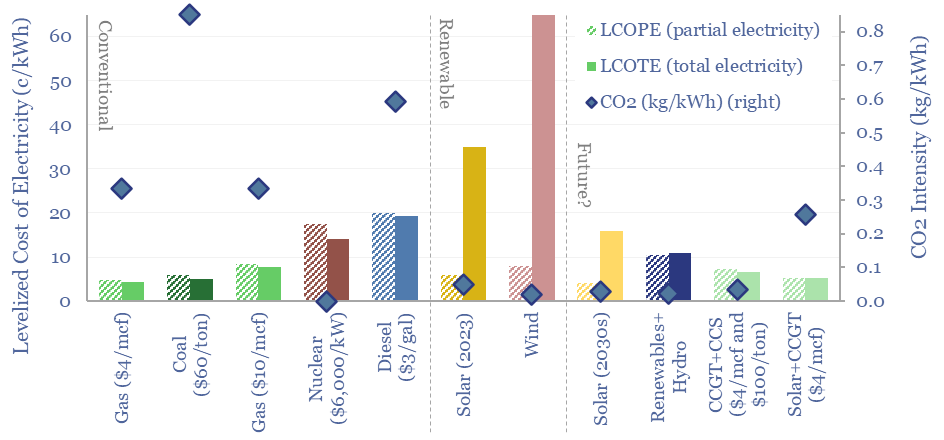

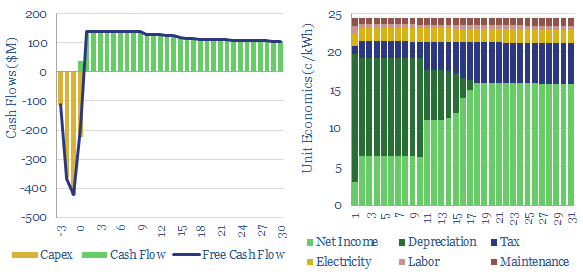

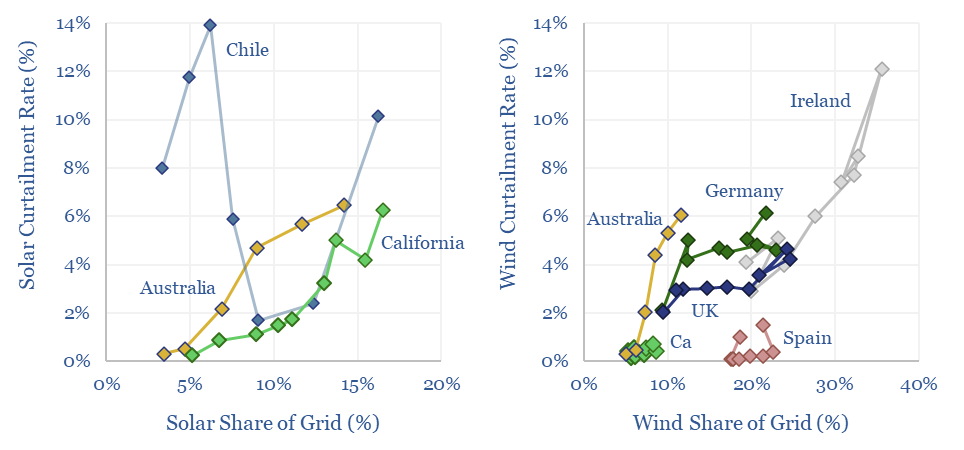

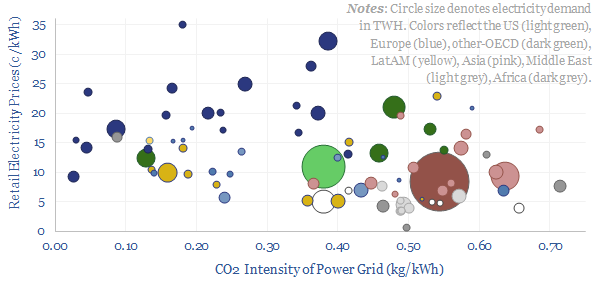

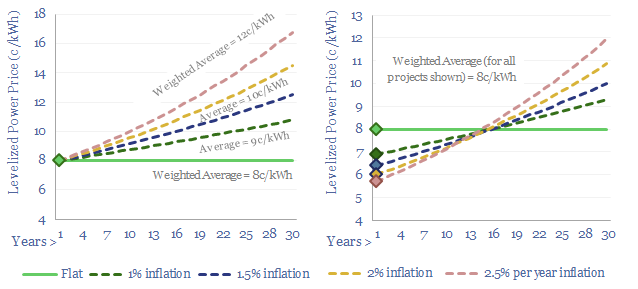

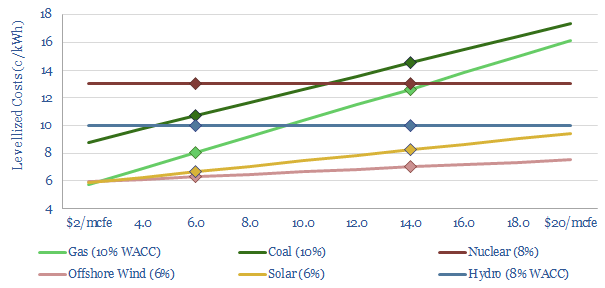

The costs of decarbonizing by ramping up solar and wind depend on the context. But our best estimate is that solar and wind can reach 40% of the global grid for a $60/ton average CO2 abatement cost. This is relatively low. Yet it raises retail electricity prices from 10c/kWh to 12c/kWh. This 7-page note explores the numbers.

Read the Report?

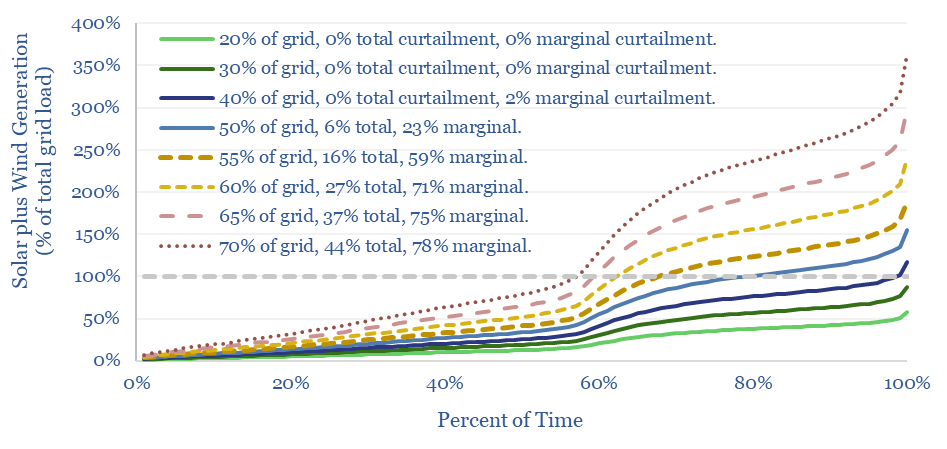

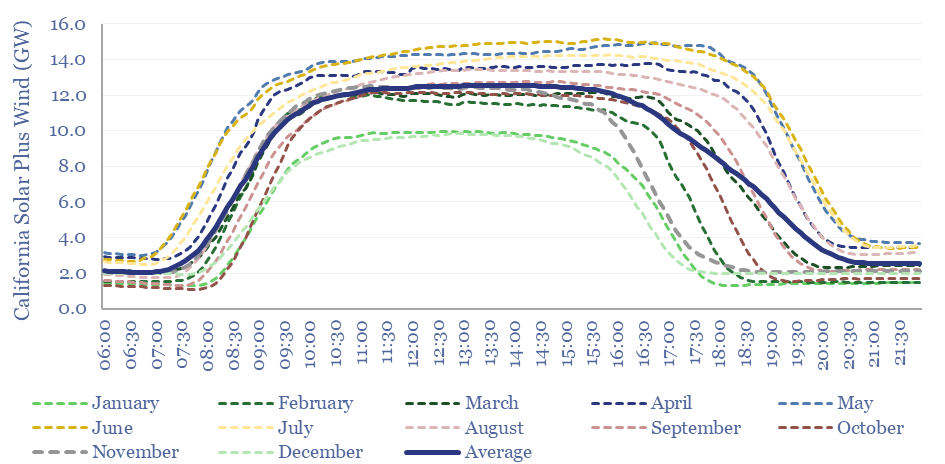

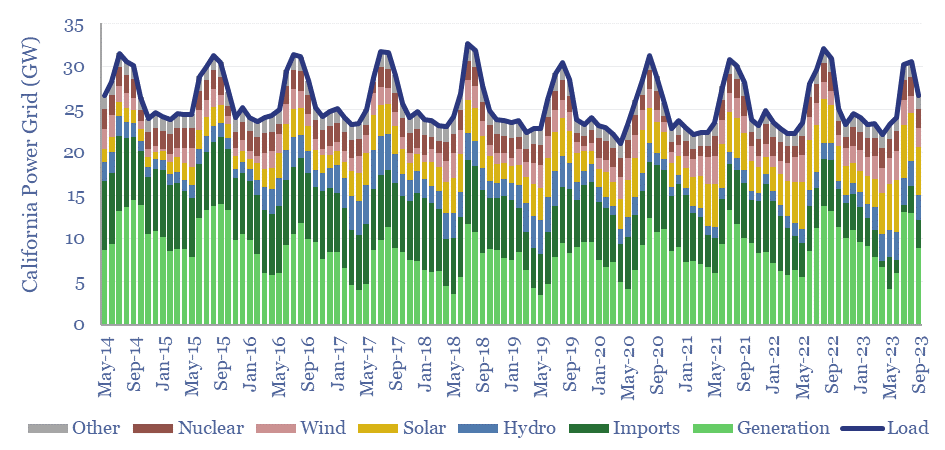

Wind and solar peak at 50-55% of power grids, without demand-shifting and batteries, before their economics become overwhelmed by curtailment rates and backup costs. More in wind-heavy grids. Less in solar heavy grids. This 12-page note draws conclusions from the statistical distribution of renewables’ generation across 100,000 x 5-minute grid intervals.

Read the Report?

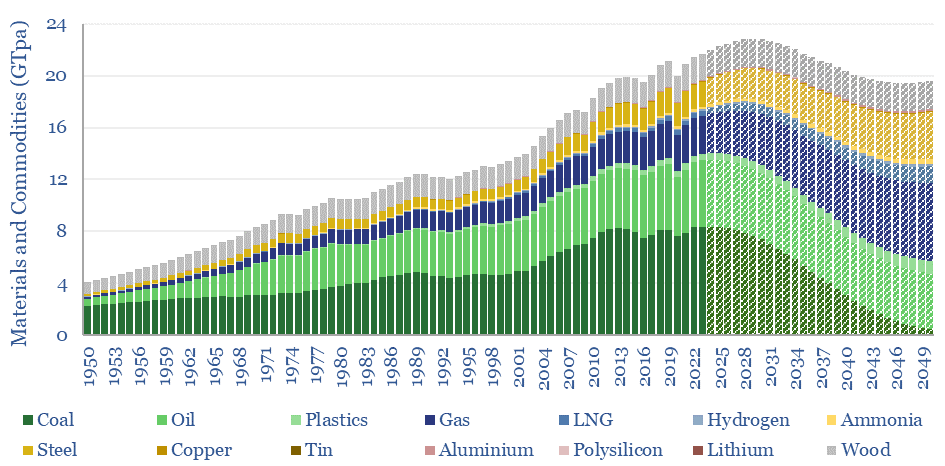

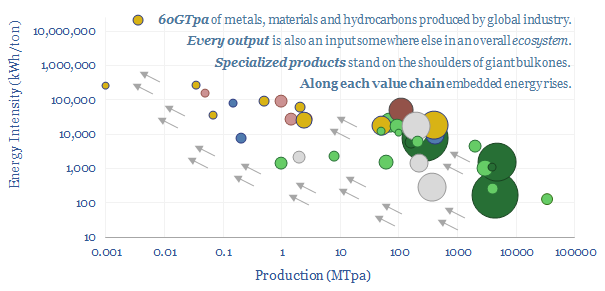

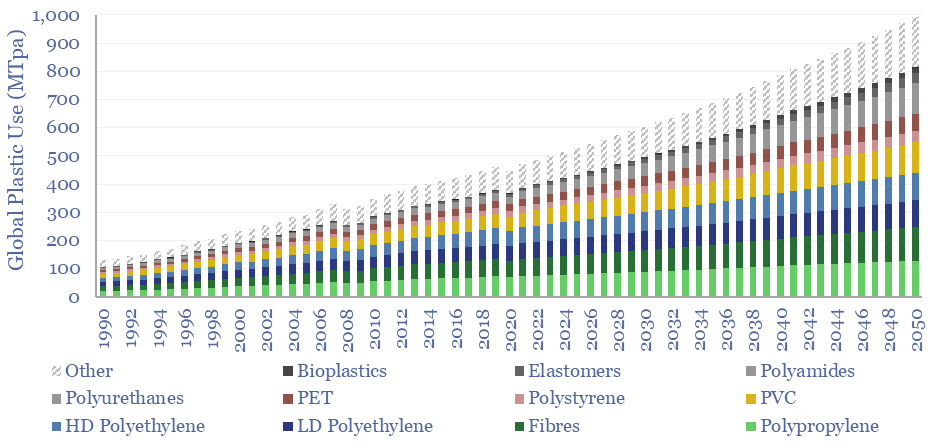

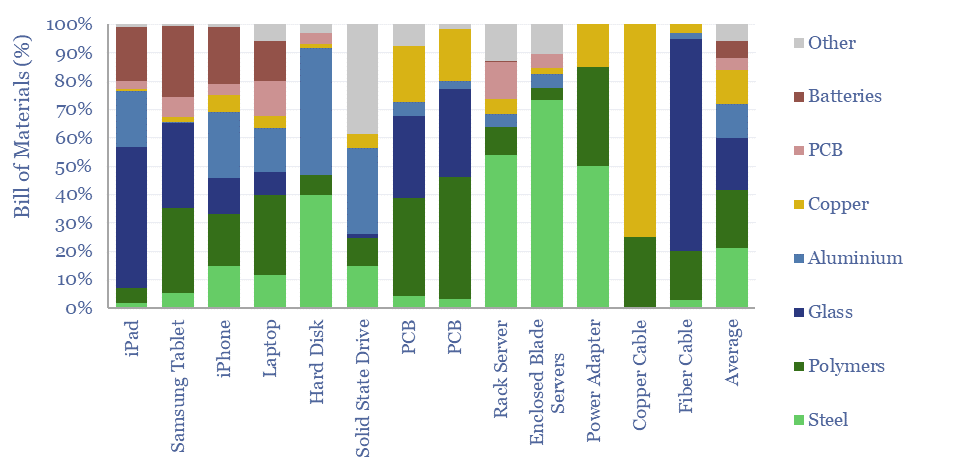

This 15-page note evaluates 10 commodity disruptions since the Stone Age. Peak demand for commodities is just possible, in total tonnage terms, as part of the energy transition. But it is historically unprecedented. And our plateau in tonnage terms is a doubling in value terms, a kingmaker for gas, plastics and materials. Outlooks for 30 major commodities are reviewed.

Read the Report?

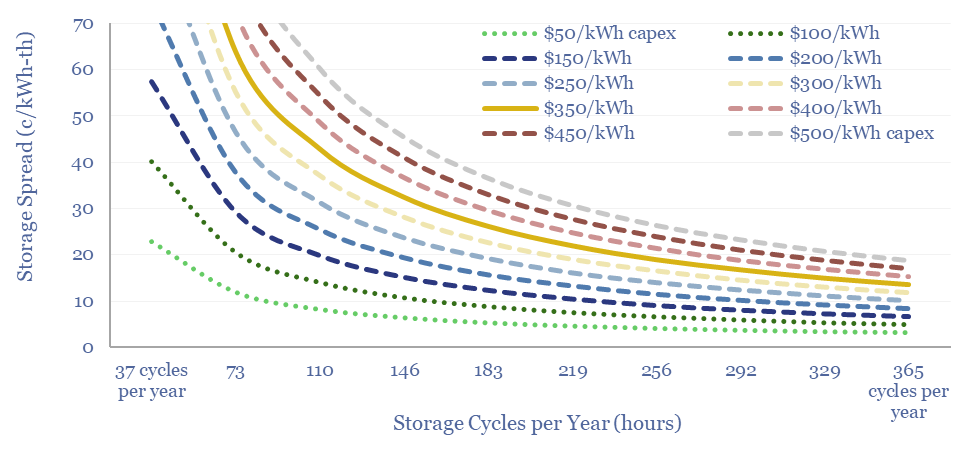

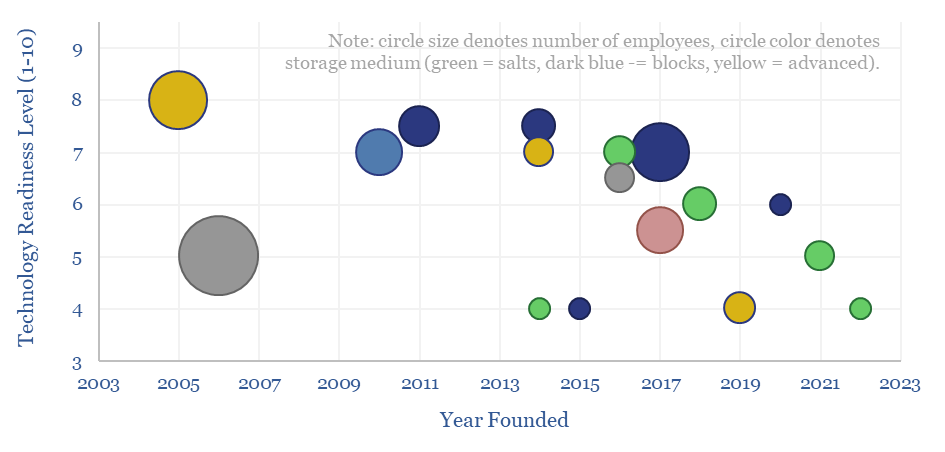

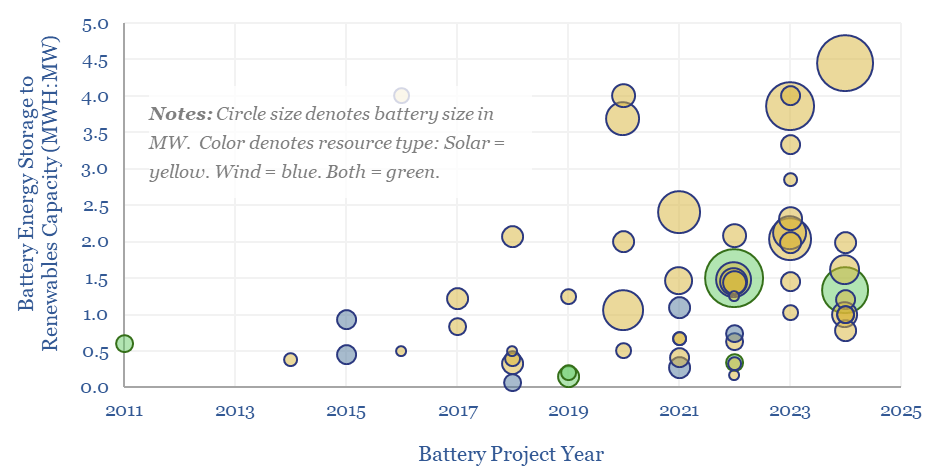

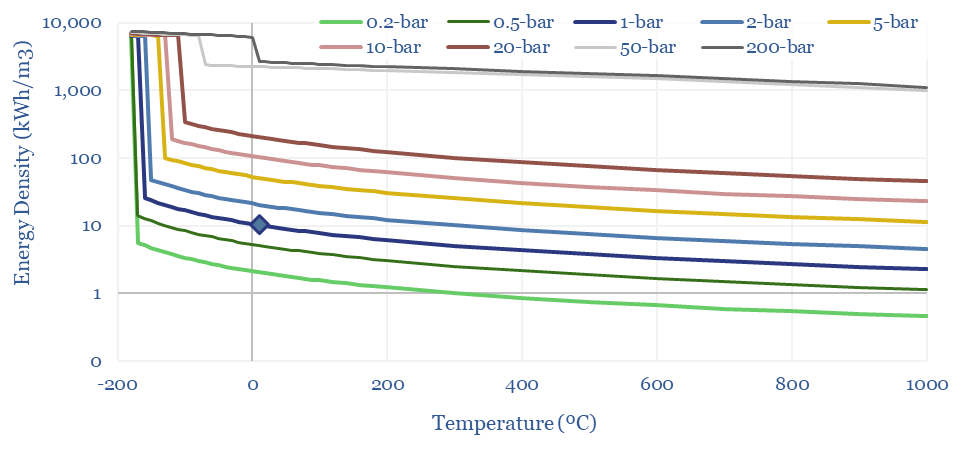

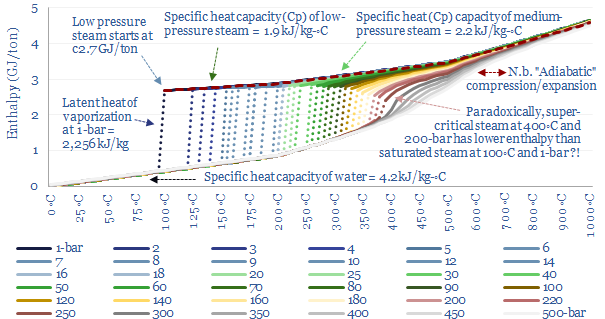

Thermal energy storage will outcompete other batteries and hydrogen for avoiding renewable curtailments and integrating more solar? Overlooked advantages are discussed in this 21-page report, plus a fast-evolving company landscape. What implications for solar, gas and industrial incumbents?

Read the Report?

The levelized cost of partial electricity (LCOPE) is very different from the levelized cost of total electricity (LCOTE). This 21-page note explores the distinction. It suggests renewables will peak at 30-60% of power grids? And gas is particularly well-placed as a back-up, set to surprise, by entrenching at 30-50% of renewables-heavy grids?

Read the Report?

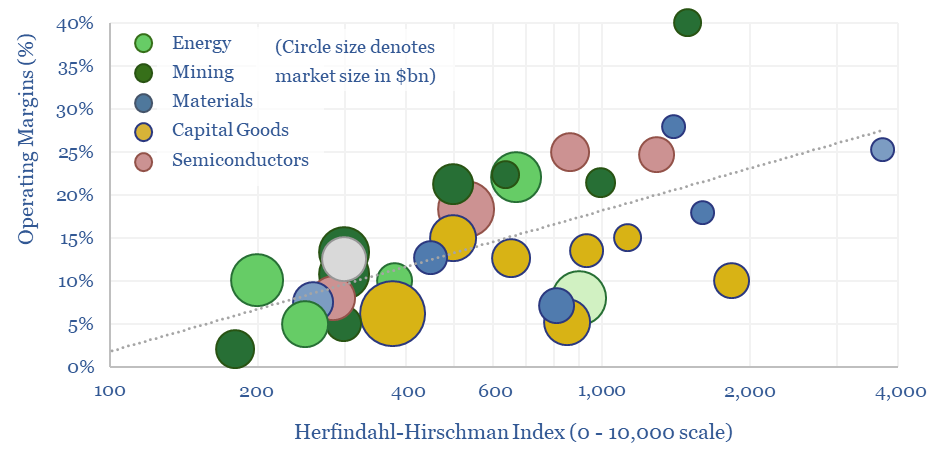

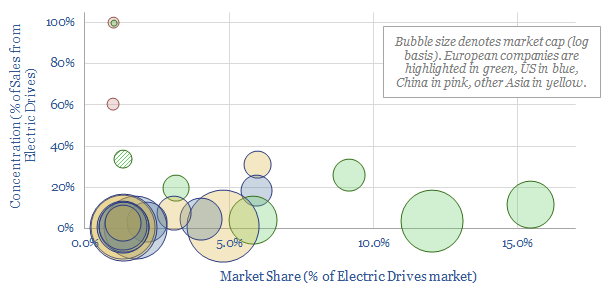

We have assessed over 100 markets in energy, materials, manufacturing and decarbonization across our research. More concentrated industries achieve higher margins across the cycle. But not always. This 10-page report draws out seven rules of thumb around market concentration to help decision-makers.

Read the Report?

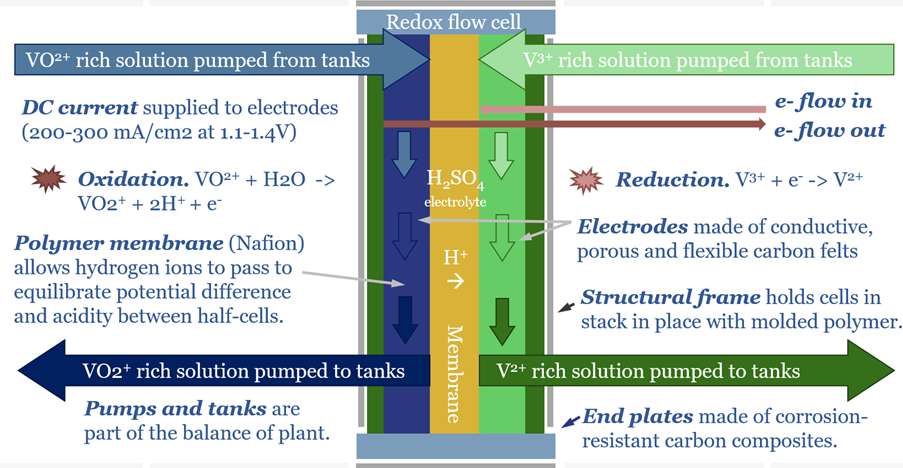

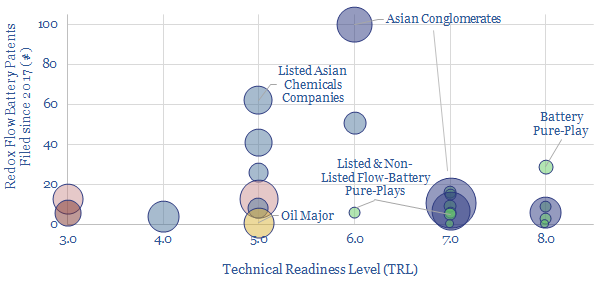

Redox flow batteries have 6-24 hour durations and require 15-20c/kWh storage spreads. They will increasingly compete with lithium ion batteries in grid-scale storage. Does this unlock a step-change for peak renewables penetration? Or create 3-30x upside for total global Vanadium demand? This 15-page note is our outlook.

Read the Report?

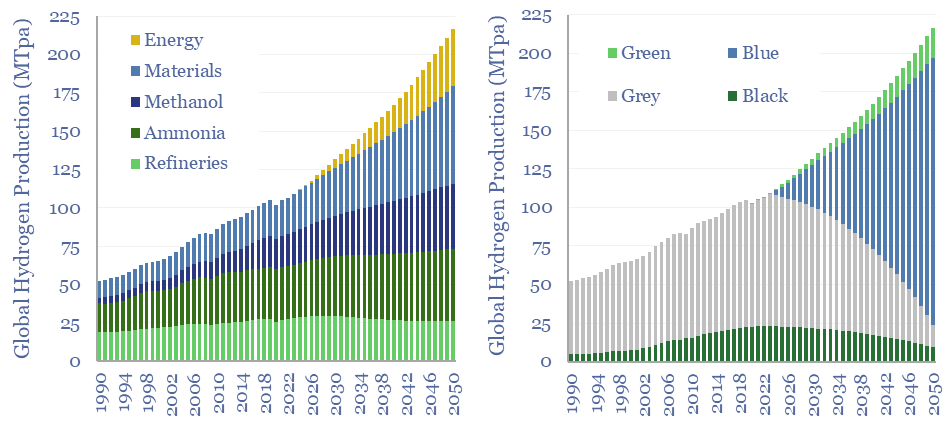

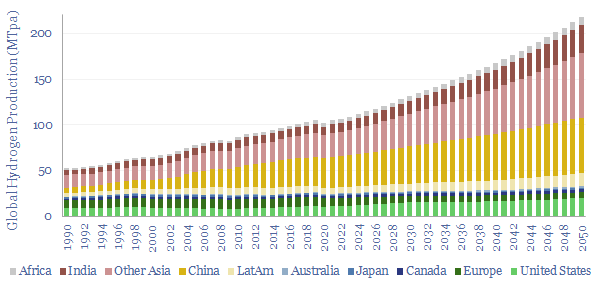

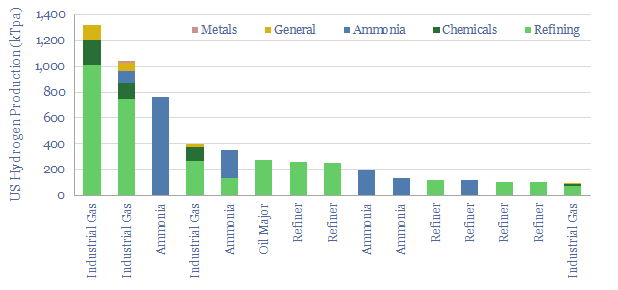

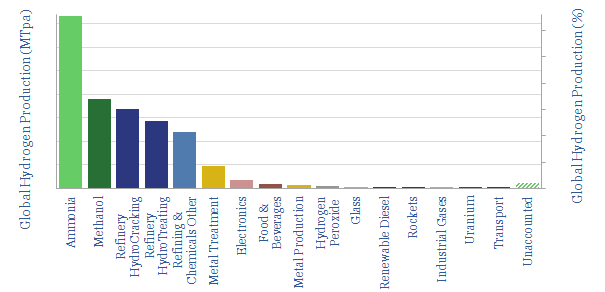

110MTpa of hydrogen is produced each year, emitting 1.3GTpa of CO2. We think the market doubles to 220MTpa by 2050. This is c60% ‘below consensus’. Decarbonization also disrupts 80% of today’s asset base. Our outlook varies by region. This 17-page note explores the evolution of hydrogen markets and implications for industrial gas incumbents?

Read the Report?

Semiconductors underpin solar panels, electric vehicles and electronics. Hence this 20-page note aims to explain semiconductor physics from first principles: their conductivity and resistance, their use in devices, plus implications for materials value chains and the energy transition itself?

Read the Report?

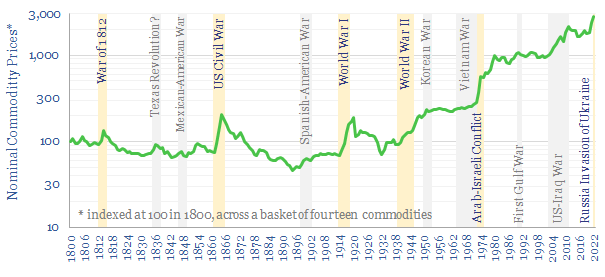

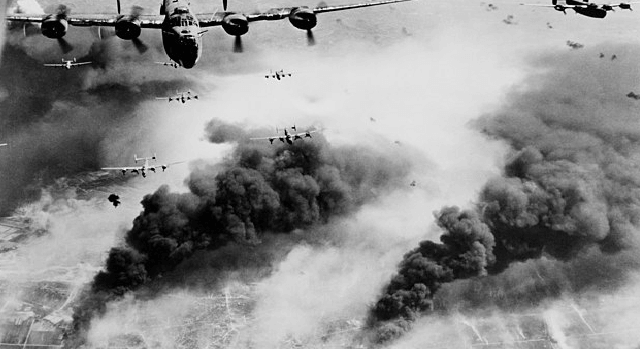

This 10-page note charts how fourteen commodities were affected, across a dozen conflicts, going back to 1800. During major conflicts, 95% of commodities saw higher prices. The average commodity doubled. There is a strong role for commodities hedging portfolios and even entire nations against conflicts.

Read the Report?

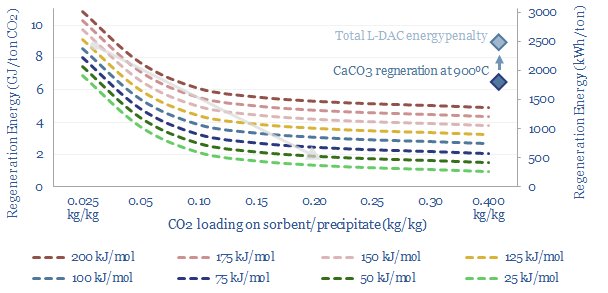

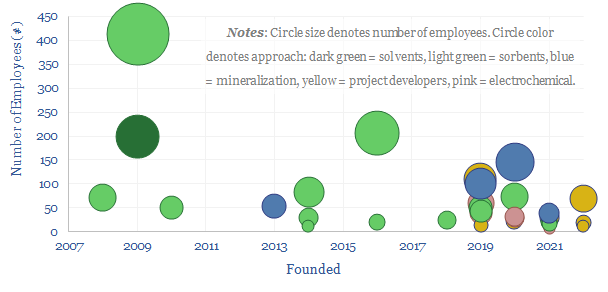

A new wave of DAC companies has been emerging rapidly since 2019, targeting 50-90% lower costs and energy penalties than incumbent S-DAC and L-DAC, potentially reaching $100/ton and 500kWh/ton in the 2030s. Five opportunities excite us and warrant partial de-risking in this 19-page report. Could DAC even beat batteries and hydrogen in smoothing renewable-heavy grids?

Read the Report?

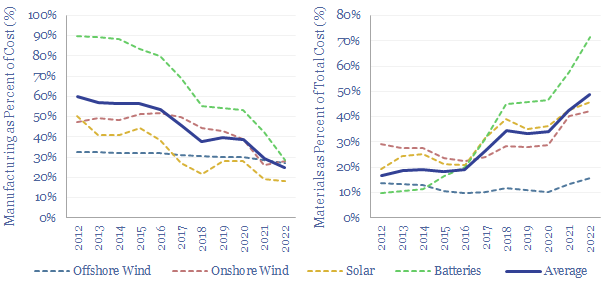

Over the past decade, costs have deflated by 85% for lithium ion batteries, 75% for solar and 25% for onshore wind. Now new energies are entering a new era. Future costs are mainly determined by materials. Bottlenecks matter. Deflation is slower. Even higher-grade materials are needed to raise efficiency. This 14-page note explores the new age of materials, how much new energies deflation is left, and who benefits?

Read the Report?

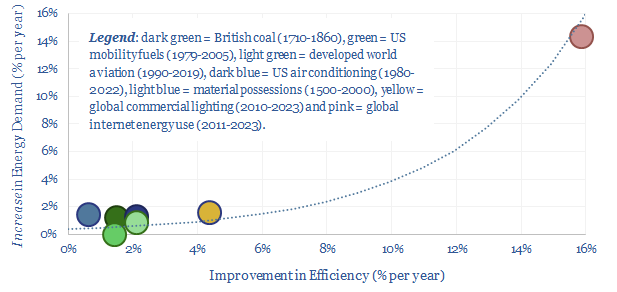

Using a commodity more efficiently can cause its demand to rise not fall, as greater efficiency opens up unforeseen possibilities. This is Jevons' Paradox. Our 16-page report finds it is more prevalent than we expected. Efficiency gains underpin 25% of our roadmap to net zero. To be effective, commodity prices must also rise and remain high, otherwise rebound effects will raise demand.

Read the Report?

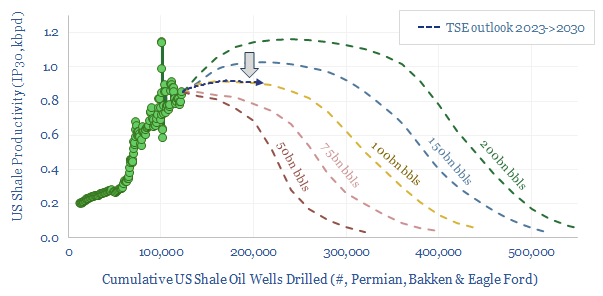

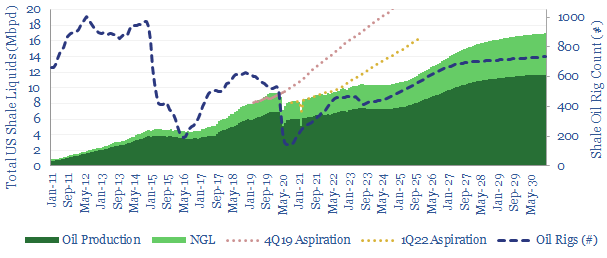

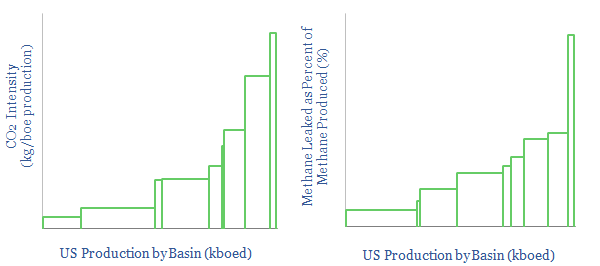

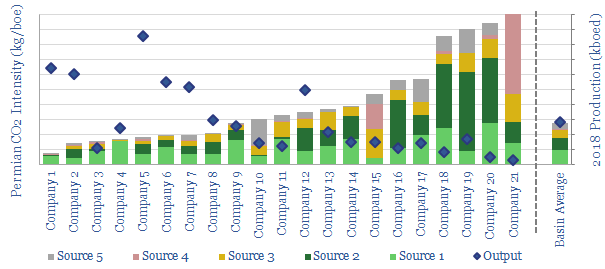

This 17-page note makes the largest changes to our shale forecasts in five years, on both quantitative and qualitative signs that productivity growth is slowing. Productivity peaks after 2025, precisely as energy markets hit steep undersupply. We still see +1Mbpd/year of liquids potential through 2030, but it is back loaded, and requires persistently higher oil prices?

Read the Report?

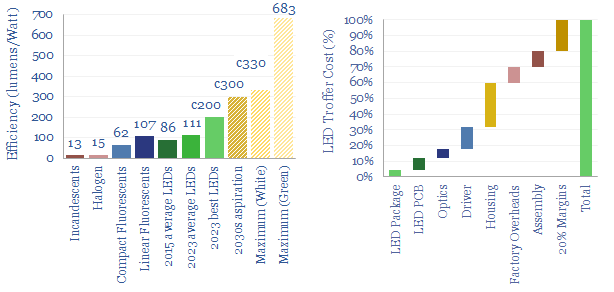

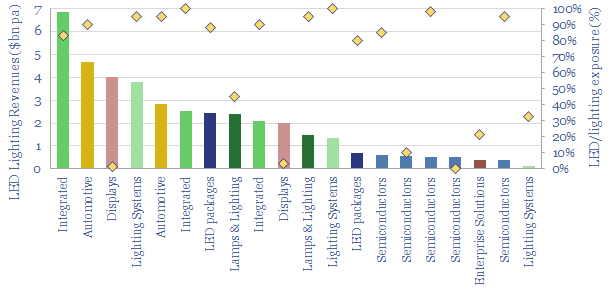

Lighting is 2% of global energy, 6% of electricity, 25% of buildings’ energy. LEDs are 2-20x more efficient than alternatives. Hence this 16-page report presents our outlook for LEDs in the energy transition. We think LED market share doubles to c100% in the 2030s, to save energy, especially in solar-heavy grids. But demand is also rising due to ‘rebound effects’ and use in digital devices.

Read the Report?

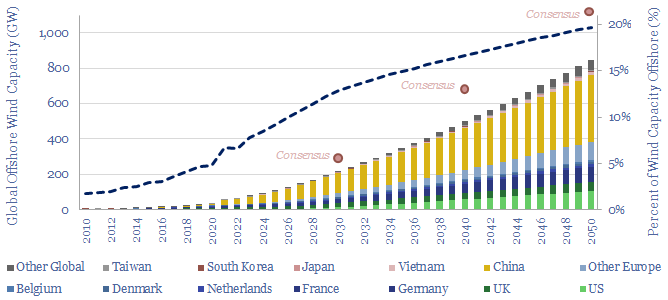

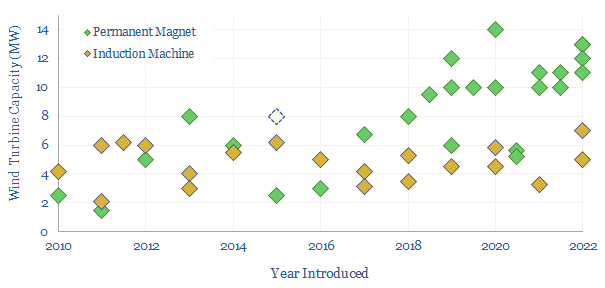

This 14-page report re-visits our wind industry outlook. Our long-term forecasts are reluctantly being revised downwards by 25%, especially for offshore wind, where levelized costs have reinflated by 30% to 13c/kWh. Material costs are widely blamed. But rising rates are the greater evil. Upscaling is also stalling. What options to right this ship?

Read the Report?

This 14-page report explores whether global industrial activity is set to become ever more concentrated in a few advantaged locations, especially the US Gulf Coast, China and the Middle East. Industries form ecosystems. Different species cluster together. Elsewhere, you can no more re-shore a few select industries than introduce dung beetles onto the moon. These mega-trends matter for valuations.

Read the Report?

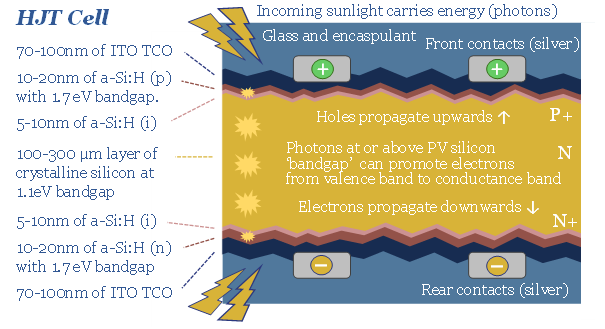

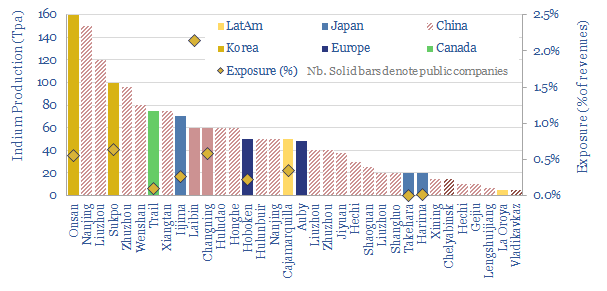

HJT solar modules are accelerating, as they are efficient and easy to manufacture. But HJT could also be a kingmaker for Indium, used in transparent and conductive thin films (ITO). Our forecasts see primary Indium demand rising 4x by 2050. This 16-page note explores the costs and benefits of using ITO in HJTs, and who benefits as solar cells evolve?

Read the Report?

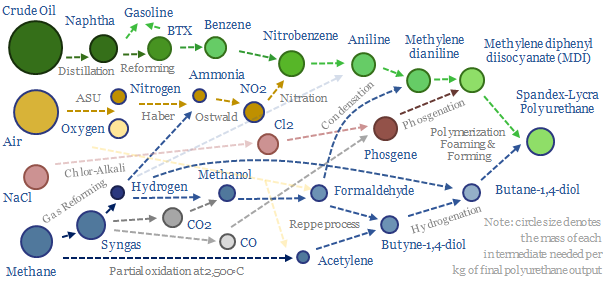

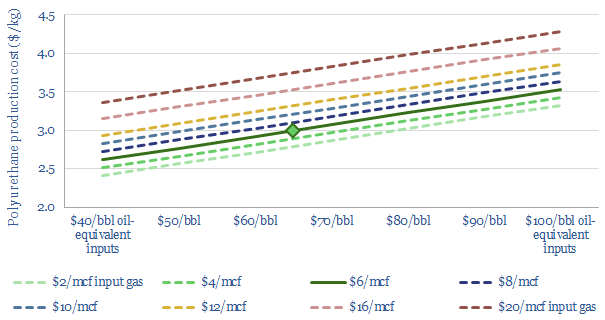

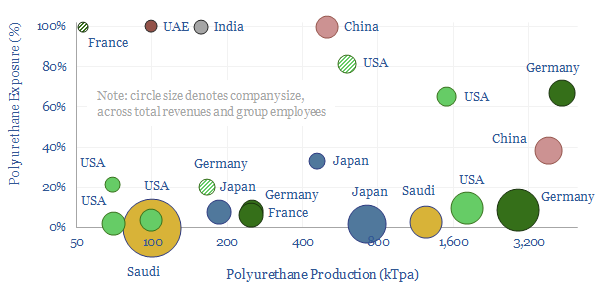

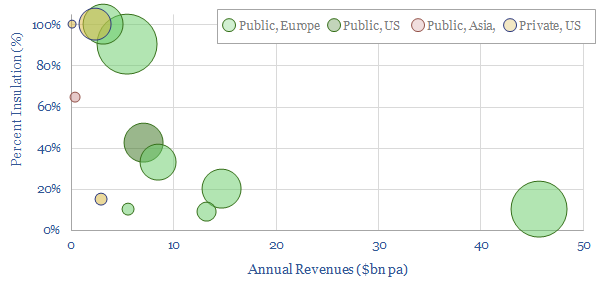

Polyurethanes are elastic polymers, used for insulation, electric vehicles, electronics and apparel. This $75bn pa market expands 3x by 2050. But could energy transition double historically challenging margins, by freeing up feedstock supplies? This 13-page note builds a full mass balance for the 20+ stage polyurethane value chain and screens 20 listed companies.

Read the Report?

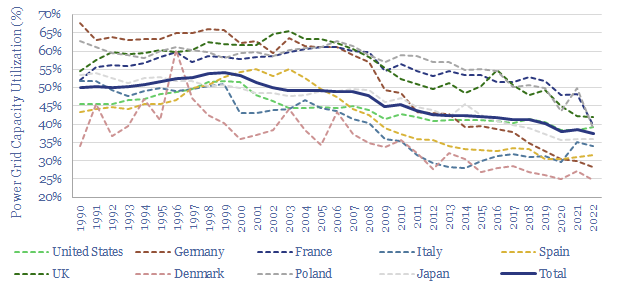

There is an economic paradox where shifting towards lower cost supply sources can cause inflation in the total costs of supply. Renewable-heavy grids are subject to this paradox, as they have high fixed costs and falling utilization. As power prices rise, there are growing incentives for self-generation. Energy transition requires a balanced, pragmatic approach.

Read the Report?

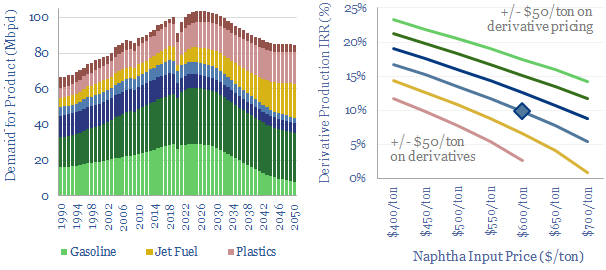

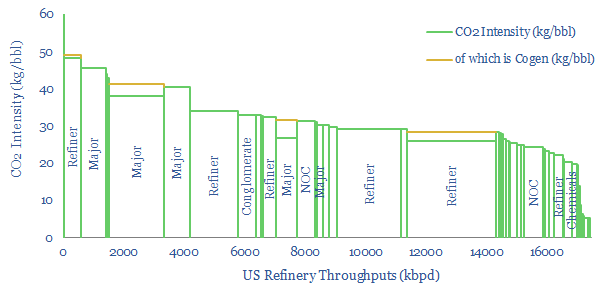

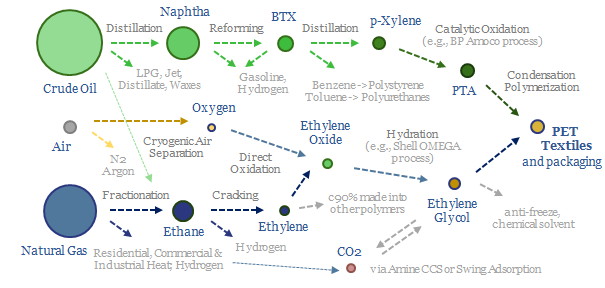

Oil markets are transitioning, with electric vehicles displacing 20Mbpd of gasoline by 2050, while petrochemical demand rises by almost 10Mbpd. So it is often said oil refiners should 'become chemicals companies'. It depends. This 18-page report charts petrochemical pathways and sees opportunity in chemicals that can absorb surplus BTX.

Read the Report?

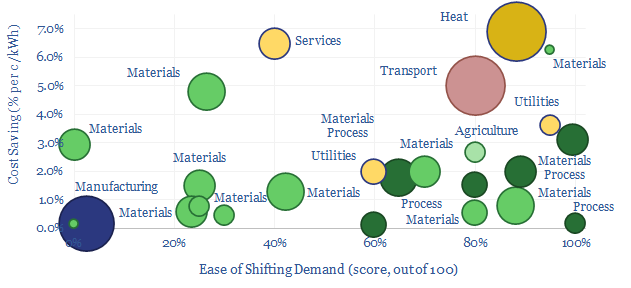

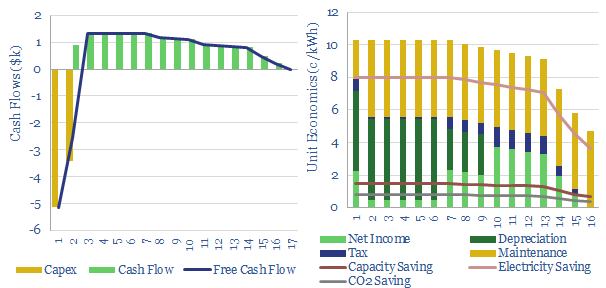

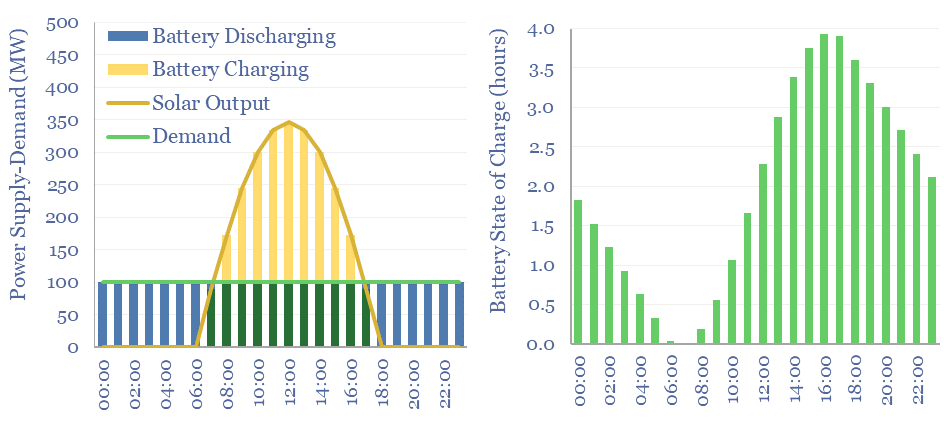

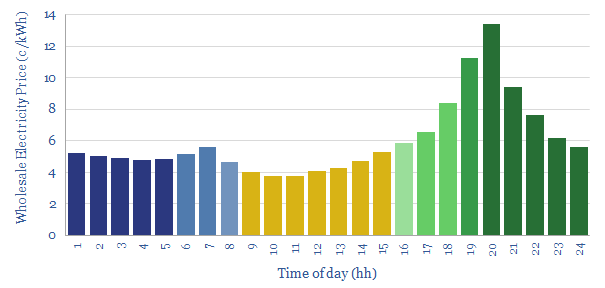

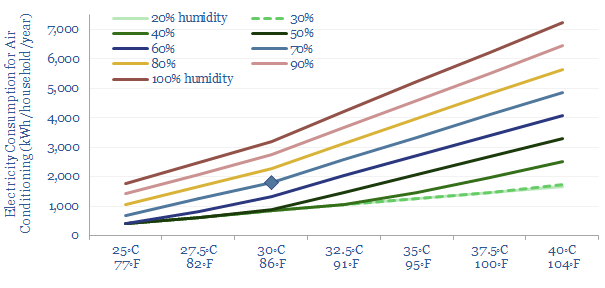

Some industries can absorb low-cost electricity when renewables are over-generating and avoid high-cost electricity when they are under-generating. The net result can lower electricity costs by 2-3c/kWh and uplift ROCEs by 5-15% in increasingly renewables-heavy grids. This 14-page note ranges over 10,000 demand shifting opportunities, to identify who can benefit most.

Read the Report?

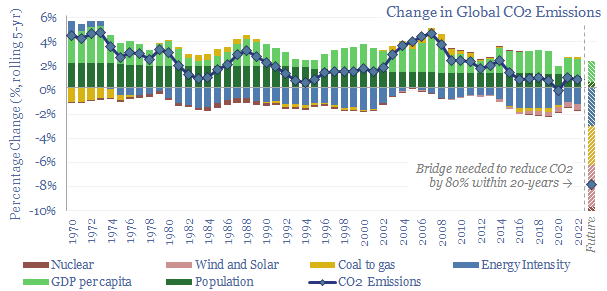

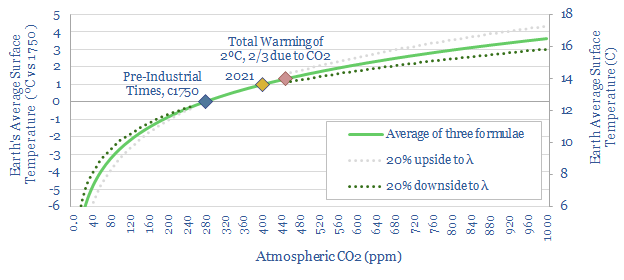

This 16-page report breaks down global CO2 emissions, across six causal factors and 28 countries-regions. Global emissions rose at +0.7% pa in 2017-22, unchanged on 2012-17, even as global income growth slowed by -0.5% pa. Depressing. The biggest reason is underinvestment in gas. Reaching net zero requires vast acceleration in renewables, infrastructure, nuclear, gas and nature.

Read the Report?

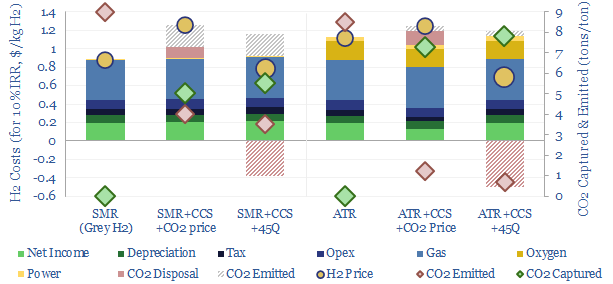

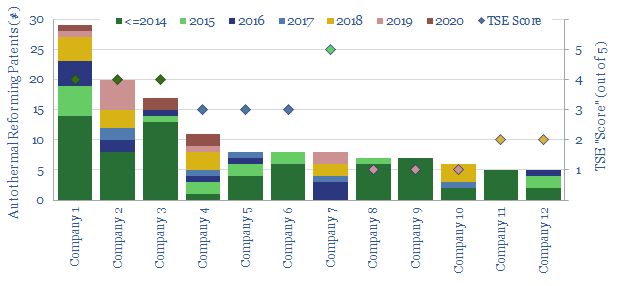

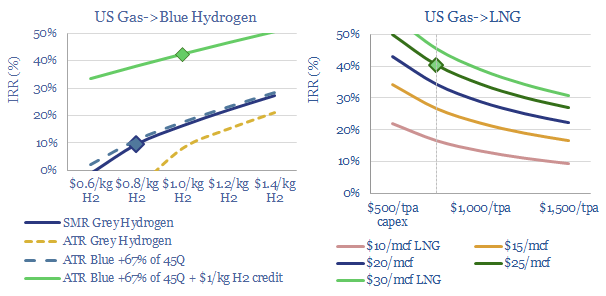

Blue hydrogen value chains are gaining momentum. Especially in the US. So this 16-page note contrasts steam-methane reforming (SMR) versus autothermal reforming (ATR). Each has merits and challenges. ATR looks excellent for clean ammonia. While the IRA creates CCS upside for today’s SMR incumbents, across industrial gases, refining and chemicals.

Read the Report?

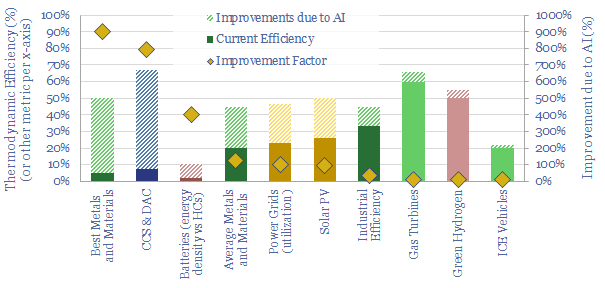

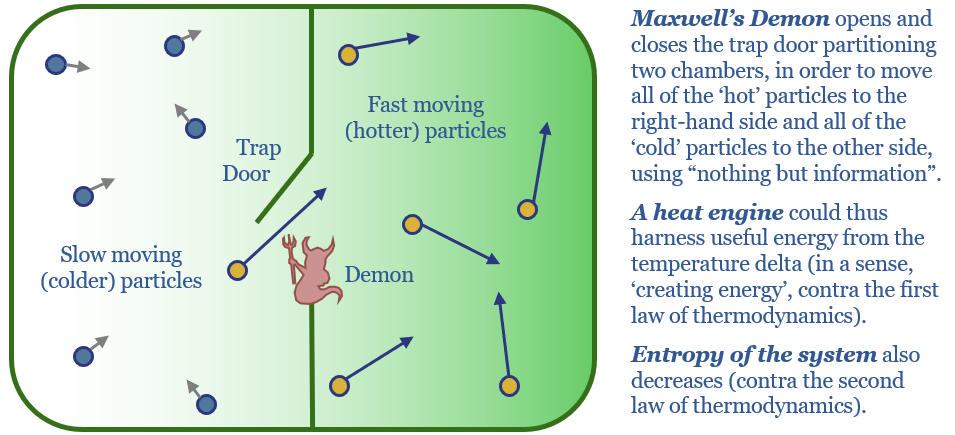

AI will be a game-changer for global energy efficiency, saving 10x more energy than it consumes directly, closing 'thermodynamic gaps' where 80-90% of all primary energy is wasted today. Leading corporations will harness AI to lower costs and accelerate decarbonization. This 19-page note explores opportunities.

Read the Report?

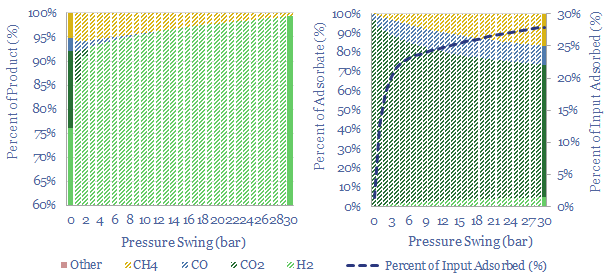

Swing Adsorption separates gases, based on their differential loading onto zeolite adsorbents at varying pressures. Today, tens of thousands of PSA plants purify hydrogen, biogas, polymers, nitrogen/oxygen and possibly in the future, can capture CO2? This 16-page note explores PSA technology for industrial gases.

Read the Report?

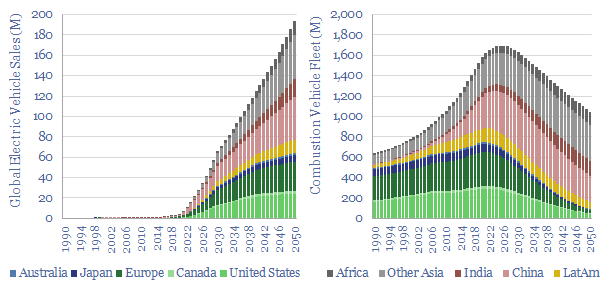

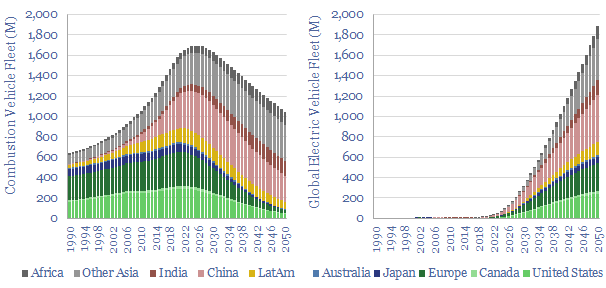

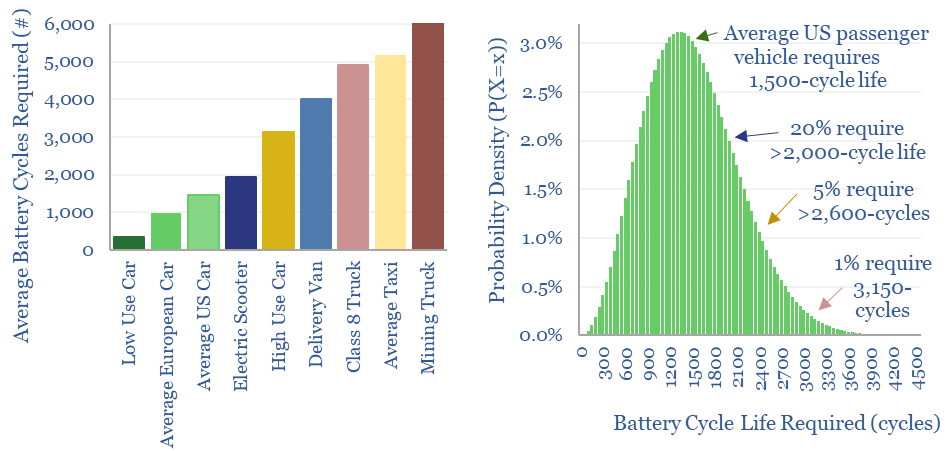

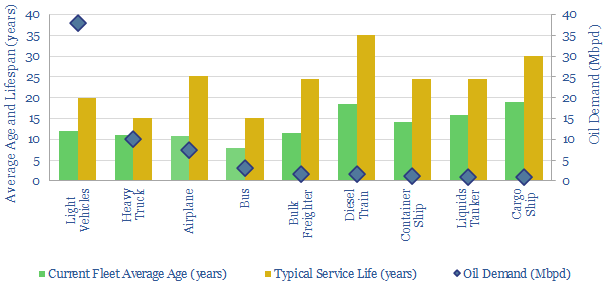

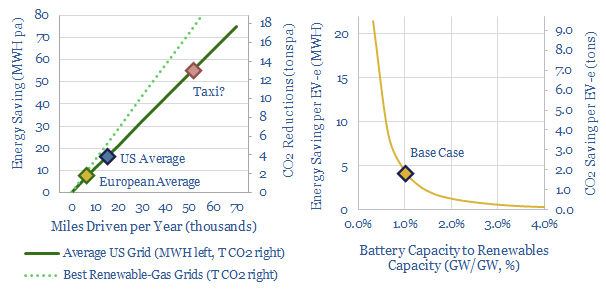

Electric vehicles are a world-changing technology, 2-6x more efficient than ICEs, but how quickly will they ramp up to re-shape global oil demand? This 14-page note finds surprising ‘stickiness’. Even as EV sales explode to 200M units by 2050 (2x all-time peak ICE sales), the global ICE fleet may only fall by 40%. Will LT oil demand surprise to the upside or downside?

Read the Report?

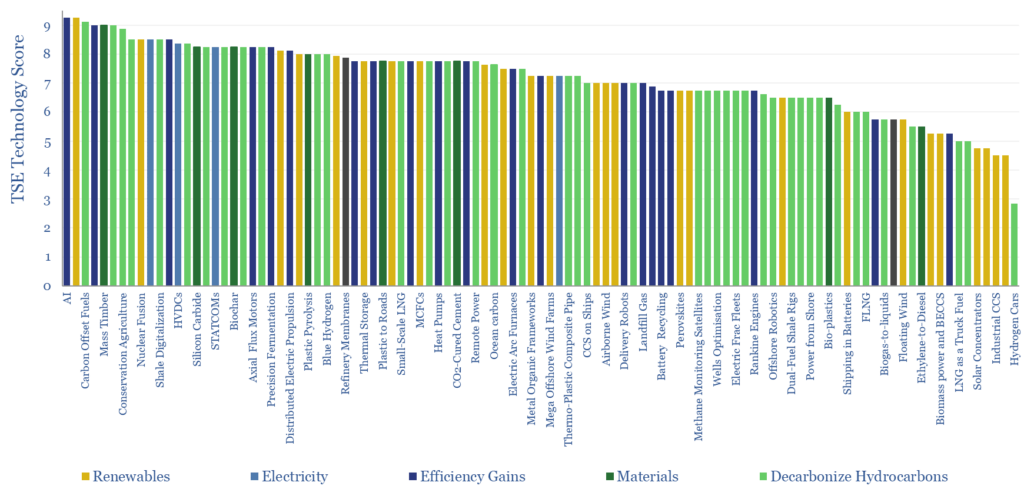

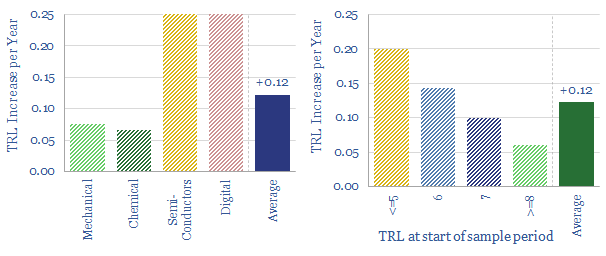

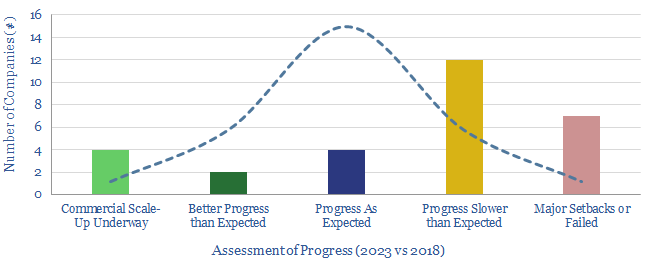

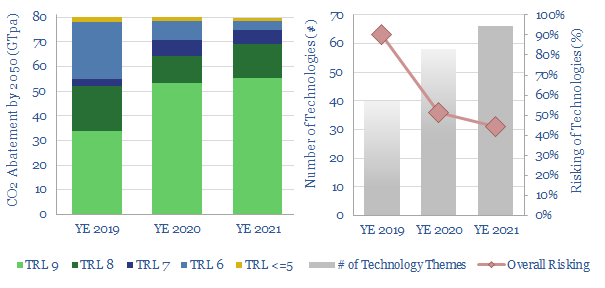

This 12-page note looks back over 5-years of energy technology research. Progress has often been slower than we expected. Maturing early-stage technology takes 20-30 years. Progress slows as work shifts from the lab to the real world. We wonder whether 2050 will look more like 2023 than many expect; or if decarbonization must rely more on today’s mature technologies?

Read the Report?

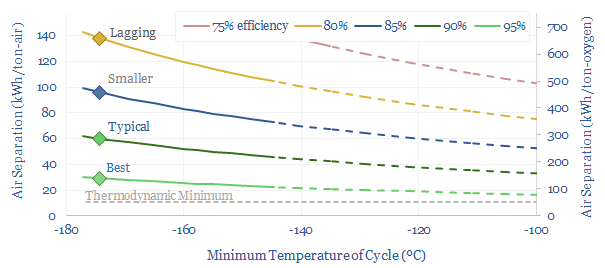

Cryogenic air separation is used to produce 400MTpa of oxygen, plus pure nitrogen and argon; for steel, metals, ammonia, wind-solar inputs, semiconductor, blue hydrogen and Allam cycle oxy-combustion. Hence this 16-page report is an overview of industrial gases. How does air separation work? What costs, energy use and CO2 intensity? Who benefits amidst the energy transition?

Read the Report?

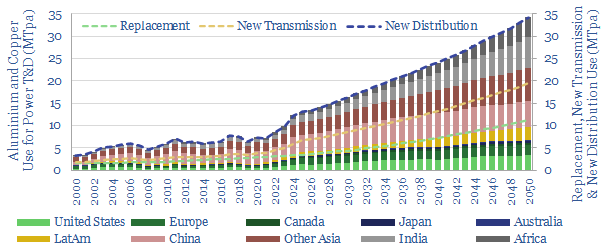

Power grid circuit kilometers need to rise 3-5x in the energy transition. This trend directly tightens global aluminium markets by over c20%, and global copper markets by c15%. Slow recent progress may lead to bottlenecks, then a boom? This 12-page note quantifies the rising demand for circuit kilometers, grid infrastructure, underlying metals and who benefits?

Read the Report?

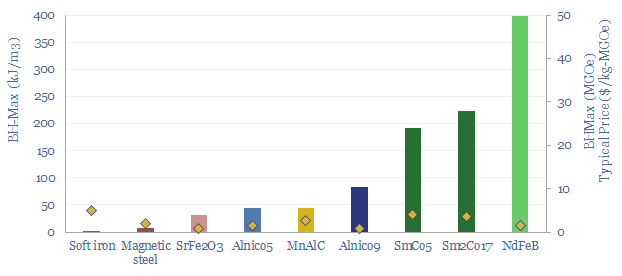

Electric currents create magnetic fields. Moving magnets induce electric currents. These principles underpin 95% of global electricity. 50% of wind turbines and 95% of electric vehicles use permanent magnets with Rare Earth metals. This 15-page overview of magnets covers key concepts and controversies for energy transition.

Read the Report?

Energy Market Models

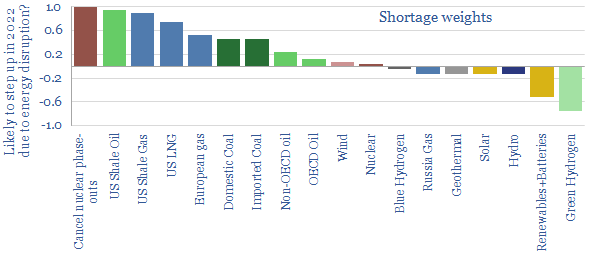

This global energy supply-demand model combines our supply outlooks for coal, oil, gas, LNG, wind and solar, nuclear and hydro, into a build-up of useful global energy balances in 2022-30. We fear chronic under-supply. This is masked by economic weakness in 2023, rises to 3% shortages in 2025, and 5% shortages in 2030. Numbers can be stress-tested in the model.

Download the Model?

Global plastic is estimated at 470MTpa in 2022, rising to at least 800MTpa by 2050. This data-file is a breakdown of global plastic demand, by product, by region and by end use, with historical data back to 1990 and our forecasts out to 2050. Our top conclusions for plastic in the energy transition are summarized.

Download the Model?

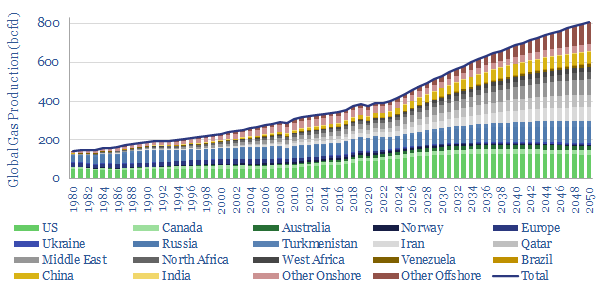

Our roadmap to 'Net Zero' requires doubling global gas production from 400bcfd to 800bcfd, as a complement to wind, solar, nuclear and other low-carbon energy. Reserve replacement must exceed 100% and the global RP ratio halves to 25-years. What do you have to believe?

Download the Model?

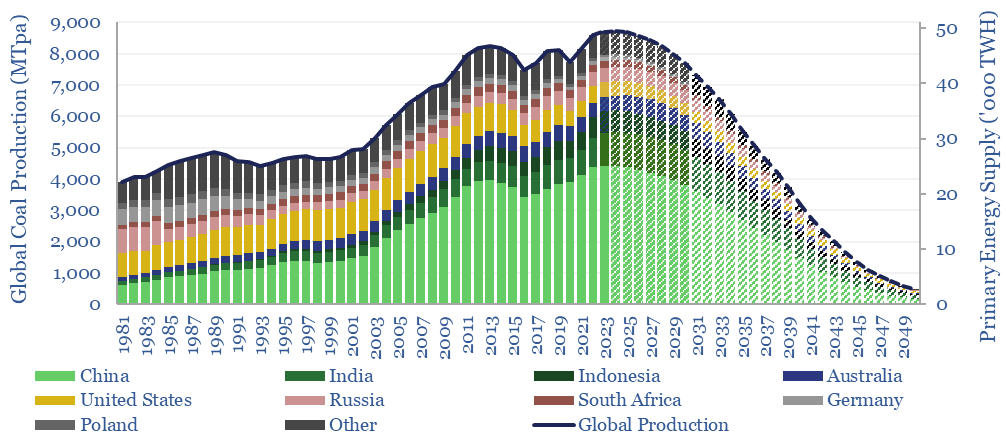

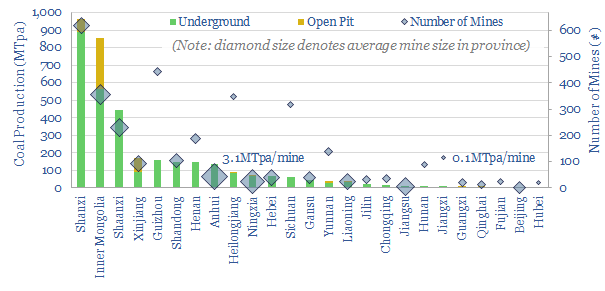

Our models of the energy transition ease coal production back from a new all-time peak of 8.3GTpa in 2022 to 0.5GTpa by 2050. This is sheer fantasy without a vast scale-up of wind, solar, gas and nuclear. This model breaks down coal production by country.

Download the Model?

This model sets out our US shale production forecasts by basin. It covers the Permian, Bakken and Eagle Ford, as a function of the rig count, drilling productivity, completion rates, well productivity and type curves. US shale likely adds +1Mbpd/year of production growth from 2023-2030, albeit flatlining in 2024, then re-accelerating on higher oil prices?

Download the Model?

This data-file forecasts the energy consumption of the internet, rising from 800 TWH in 2022 to 2,000 TWH in 2030 and 3,750 TWH by 2050. The main driver is the energy consumption of AI, plus blockchains, rising traffic, and offset by rising efficiency. Input assumptions to the model can be flexed. Underlying data are from technical papers.

Download the Model?

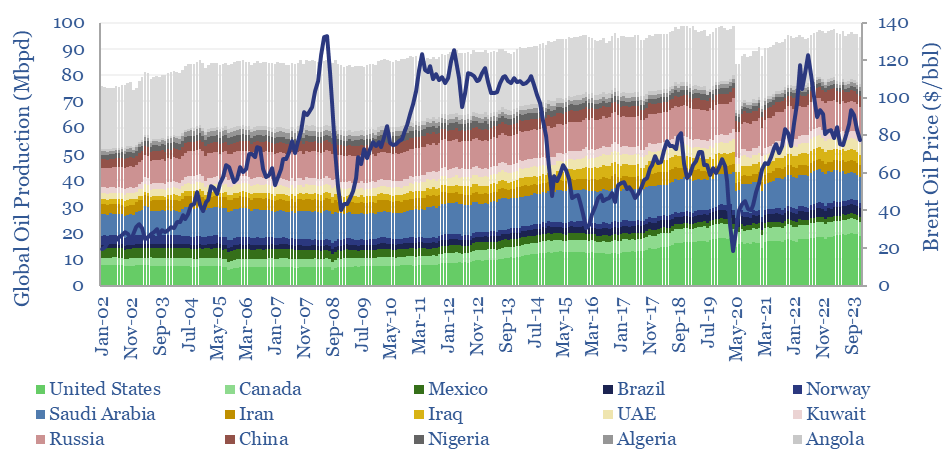

Global oil production by country by month is aggregated across 35 countries that produce 80kbpd of crude, NGLs and condensate, explaining >96% of the global oil market. Production has grown by +1Mbpd/year in the past two-decades, led by the US, Iraq, Russia, Canada. Oil market volatility is usually low, at +/- 1.5% per year, of which two-thirds is down to conscious decisions.

Download the Model?

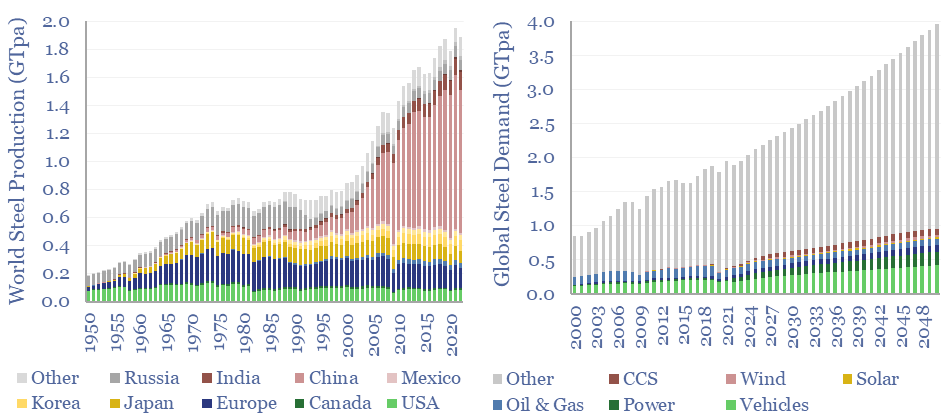

Global steel supply-demand runs at 2GTpa in 2023, having doubled since 2003. Our best estimate is that steel demand rises another 80%, to 3.6GTpa by 2050, including due to the energy transition. Global steel production by country is now dominated by China, whose output exceeds 1GTpa, which is 8x the #2 producer, India, at 125MTpa.

Download the Model?

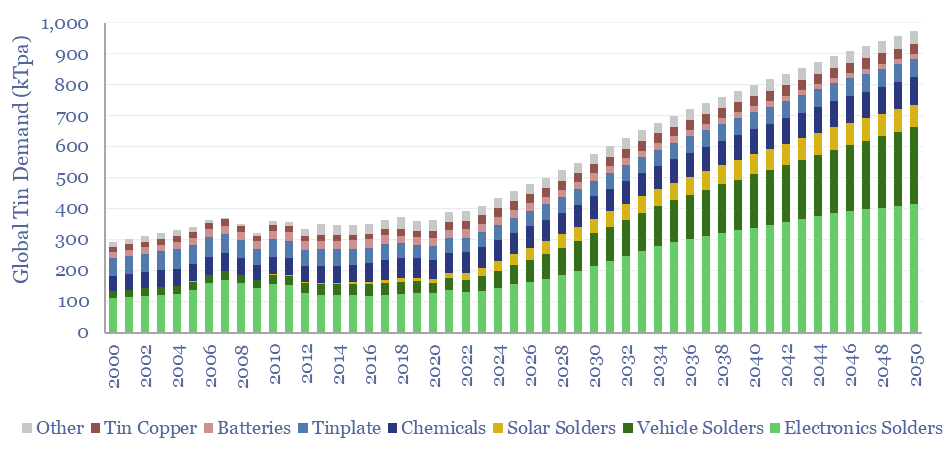

Global tin demand stands at 400kTpa in 2023 and rises by 2.5x to 1MTpa in 2050 as part of the energy transition. 50% of today's tin market is for solder, which sees growing application in the rise of the internet, rise of EVs and rise of solar. Global tin supply and demand can be stress-tested in the model.

Download the Model?

Global production of hydrogen is around 110MTpa in 2023, of which c30% is for ammonia, 25% is for refining, c20% for methanol and c25% for other metals and materials. This data-file estimates global hydrogen supply and demand, by use, by region, and over time, with projections through 2050.

Download the Model?

Polysilicon is a highly pure, crystalline silicon material, used predominantly for photovoltaic solar, and also for 'chips' in the electronics industry. Global polysilicon capacity is estimated to reach 1.65MTpa in 2023, and global polysilicon production surpasses 1MTpa in 2023. China now dominates the industry, approaching 90% of all global capacity.

Download the Model?

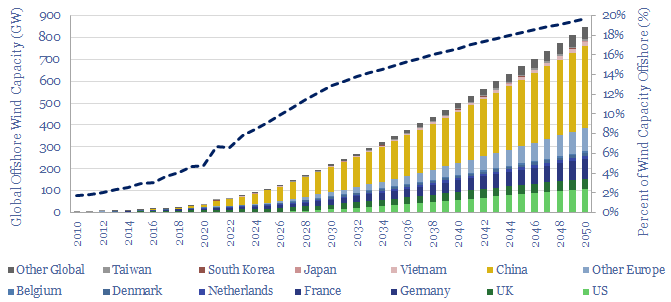

Global offshore wind capacity stood at 60GW at the end of 2022, rising at 8GW pa in the past half decade, comprising 7% of all global wind capacity, and led by China, the UK and Germany. Our forecasts see 220GW of global offshore wind capacity by 2030 and 850GW by 2050, which in turn requires a 15x expansion of this market.

Download the Model?

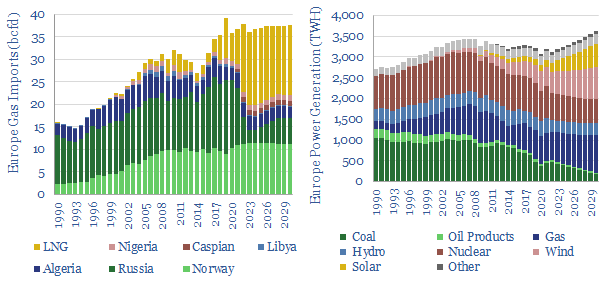

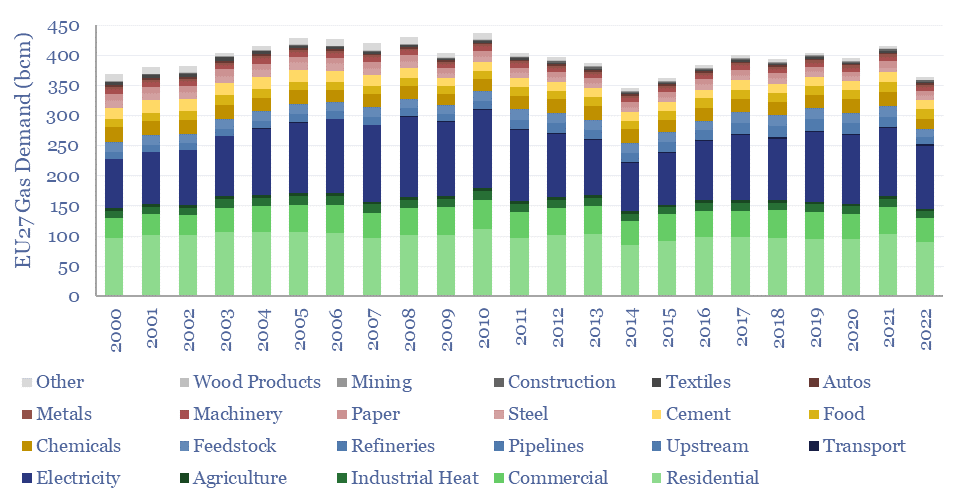

European gas and power markets will look better-supplied than they truly are in 2023-24. A dozen key input variables can be stress-tested in the data-file. Overall, we think Europe will need to source over 15bcfd of LNG through 2030, especially US LNG.

Download the Model?

We have modeled the global light vehicle fleet, light vehicle sales by region, and the world's shift from internal combustion engines (ICEs) towards electric vehicles (EVs) through 2050. Our base case model sees almost 200M EV sales by 2050, and a c40% decline to around 1bn combustion vehicles in the world's fleet by 2050.

Download the Model?

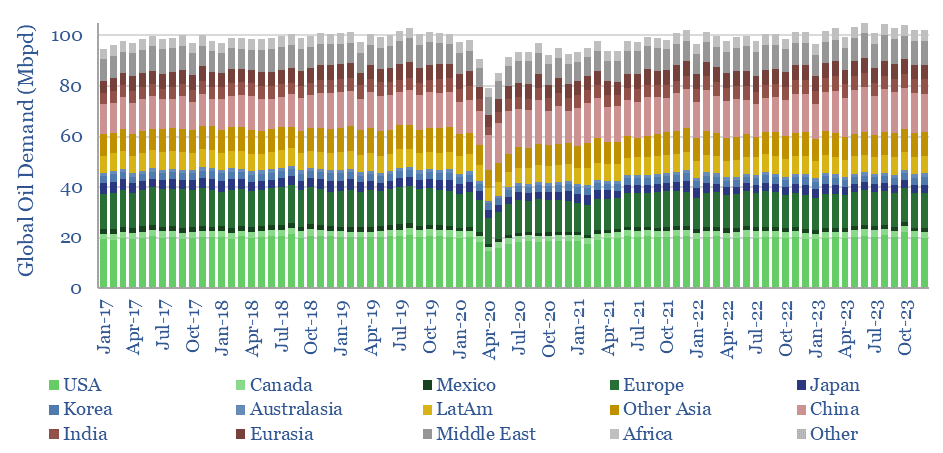

This data-file breaks down global oil demand, country-by-country, product-by-product, month-by-month, across 2017-2022. The goal is to summarize the effects of COVID, and the subsequent recovery in oil markets. Global oil demand is hitting new highs, even though several product categories are still not fully recovered.

Download the Model?

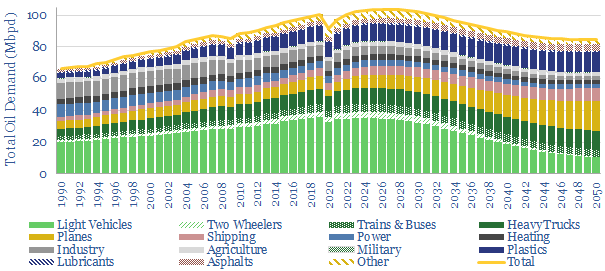

This model forecasts long-run oil demand to 2050, by end use, by year, and by region; across the US, the OECD and the non-OECD. We see demand gently rising through the 2020s, peaking at 104Mbpd in 2025-27, then gently falling to 85Mbpd by 2050 in the energy transition.

Download the Model?

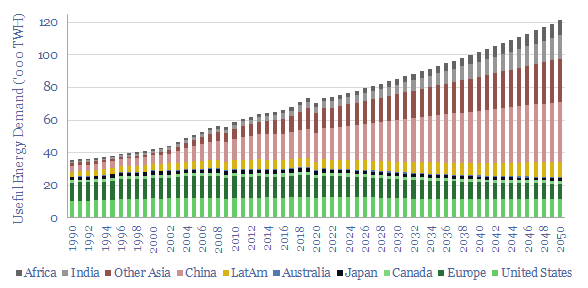

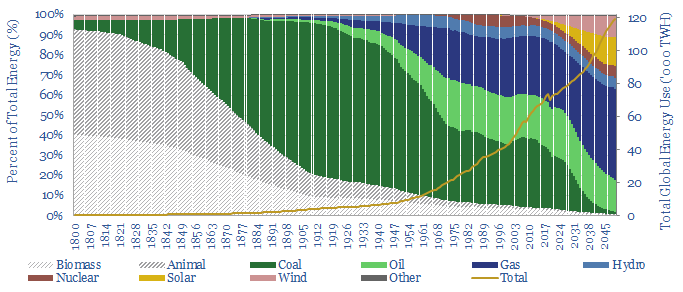

This model captures global energy demand by region through 2050, rising from 70,000 TWH in 2019-22 to 120,000 MWH in 2050. Demand rises c2% pa. Energy use per global person rises at 1% pa from 9.3 MWH pp pa to 12.6 MWH pp pa. Meeting human civilization's energy needs is crucial to the energy transition.

Download the Model?

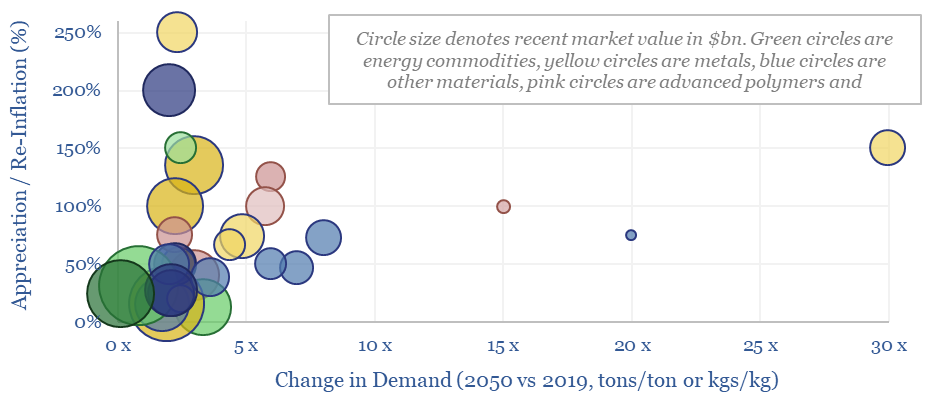

This data-file summarizes our latest thesis on ten commodities with upside in the energy transition. The average one will see demand rise by 3x and price/cost appreciate or re-inflate by 100%. The data-file contains a 6-10 line summary of our work into each commodity.

Download the Model?

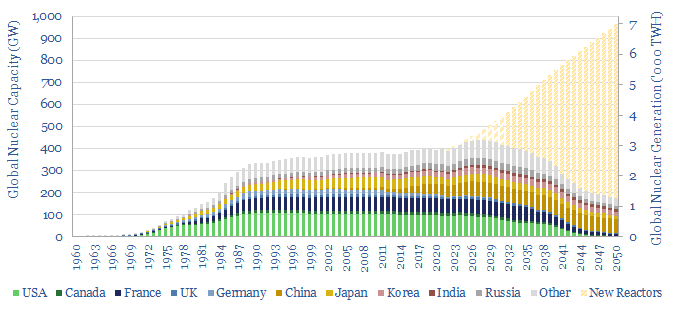

How much nuclear capacity would need to be constructed in our roadmap to net zero? This breakdown of global nuclear capacity forecasts that 30 GW of new reactors must be brought online each year through 2050, if the nuclear industry was to ramp up to 7,000 TWH of generation by 2050, which would be 6% of total global energy. There is a precedent. Delaying shutdowns helps too.

Download the Model?

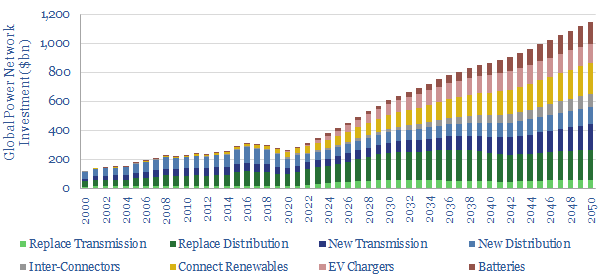

Global investment into power networks averaged $280bn per annum in 2015-20, of which two-thirds was for distribution and one-third was for transmission. Amazingly, these numbers step up to $600bn in 2030, >$1trn in the 2040s and can be as large as all primary energy investment.

Download the Model?

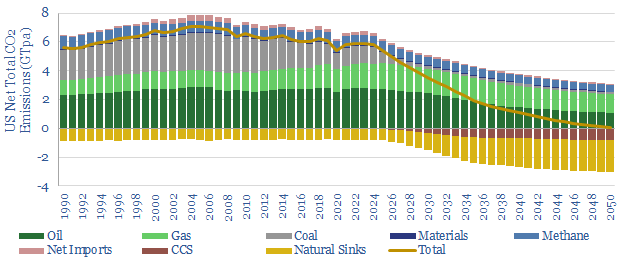

The US consumes 25,000 TWH of primary energy per year, which equates to 13,000 TWH of useful energy, and emits 6GTpa of CO2. This model captures our best estimates for what a pragmatic and economical decarbonization of the US will look like, reaching net zero in 2050, with energy consumption at 11,500 TWH per year.

Download the Model?

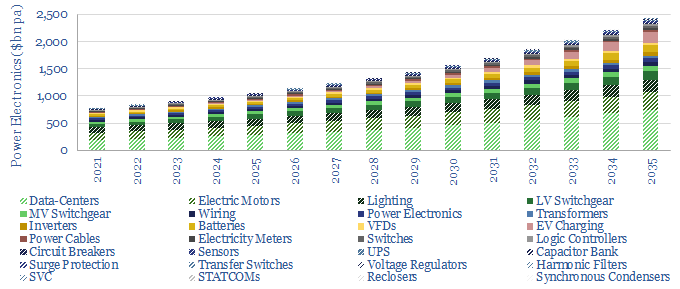

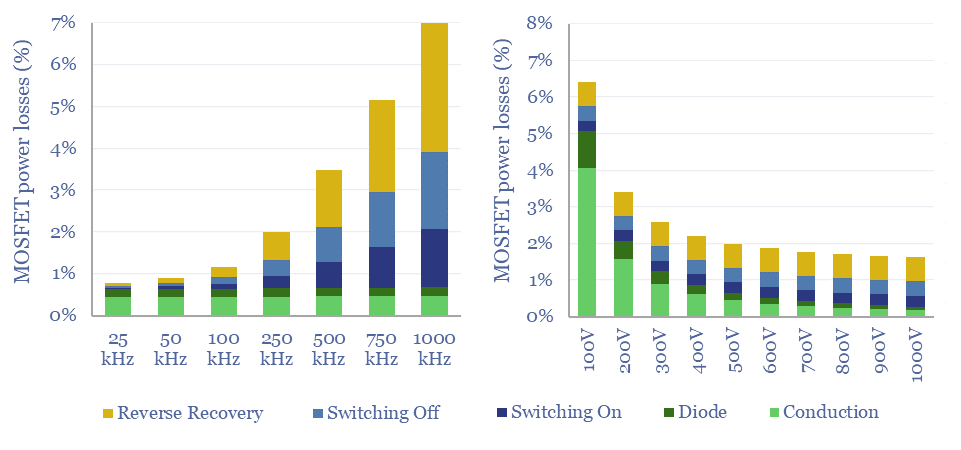

We describe c15 problems incurred by industrial and commercial power consumers. Many will require additional investment as renewables replace the large rotating generators of traditional power grids. Hence we see the market for commercial and industrial power electronics trebling from $360bn pa in 2021 to $1trn pa by 2035.

Download the Model?

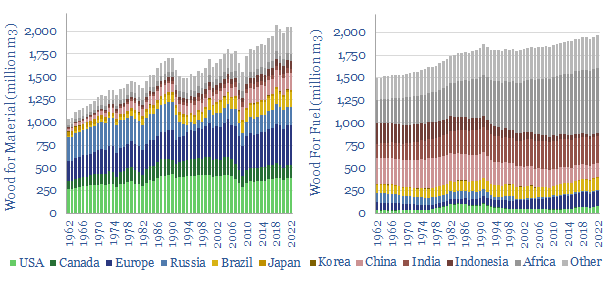

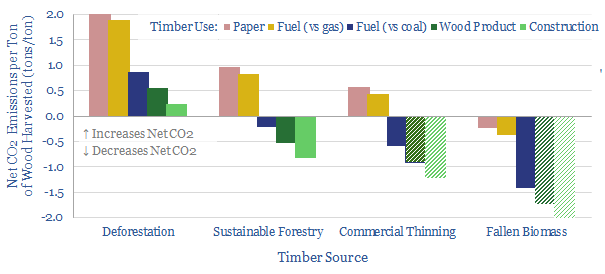

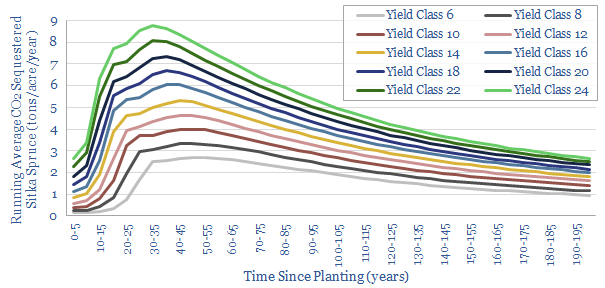

This data-file quantifies global wood production, country-by-country, back to 1960, across energy, pulp and longer-lasting materials. Overall, wood energy has declined from 11% of the world's primary energy mix in 1960 to c4% today, but it remains stubbornly high in less-developed countries, amplifying deforestation.

Download the Model?

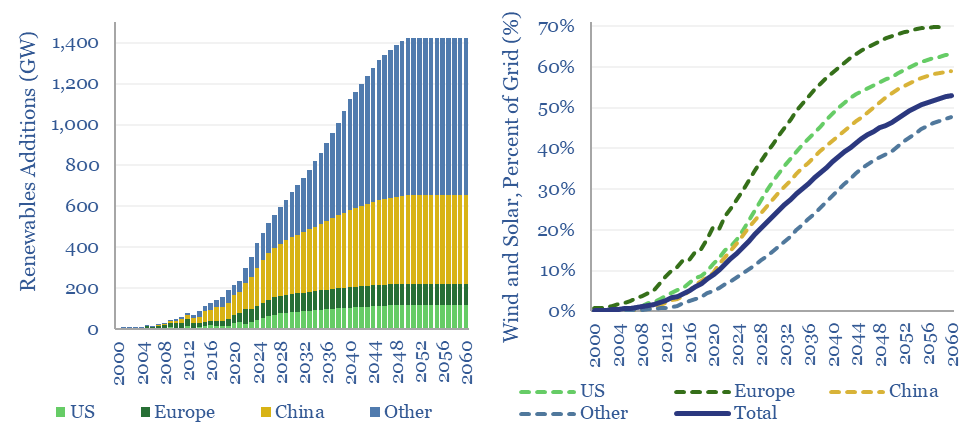

This model aims to calculate global wind and solar capacity additions. How many GW of new capacity would be needed for renewables to reach c25% of the global energy mix by 2050, up from 4% in 2021? In total energy terms, this means a 10x scale up, to 30,000 TWH of useful wind+solar energy in 2050. Gross global wind and solar capacity additions will surpass +1,000 GW by 2040.

Download the Model?

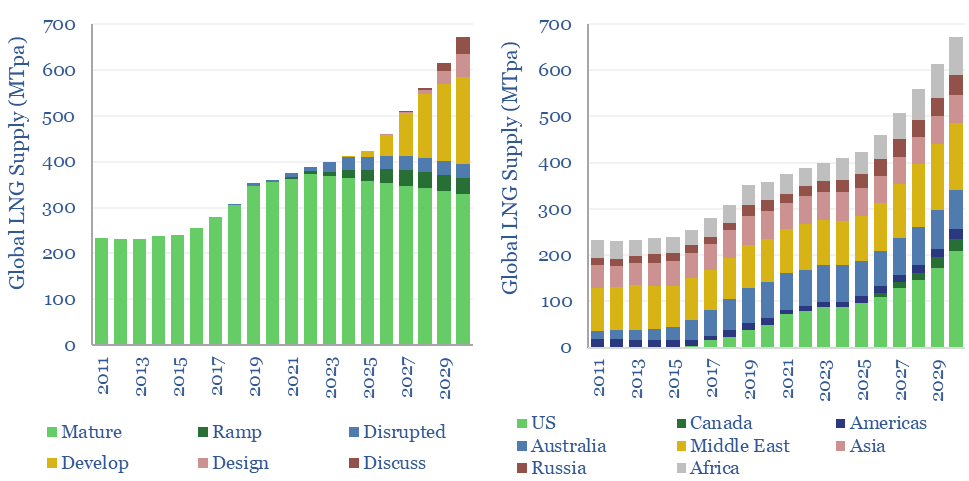

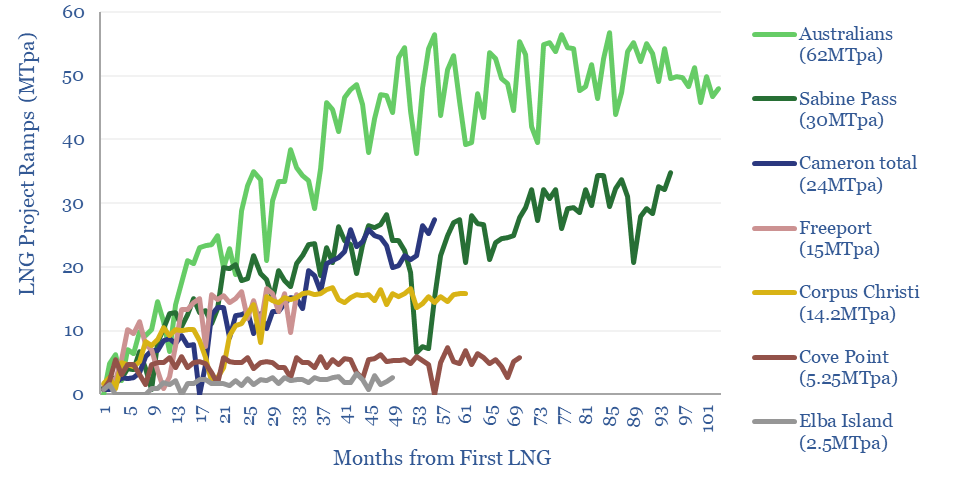

Global LNG output ran at 400MTpa in 2023. This model estimates global LNG production by facility across 140 LNG facilities. Our latest forecasts are that global LNG demand will rise at an 8% CAGR, to reach 670MTpa by 2030, for an absolute growth rate of +40MTpa per year, but the growth is back-end loaded.

Download the Model?

This model breaks down 2050 and 2100's global energy market, based on a dozen input assumptions. You can 'flex' these, to see how it will affect future oil, coal and gas demand, as well as global CO2 emissions. We reach 'net zero' by 2050. Even as fossil fuel demand rises 18%, gas demand trebles and renewables also reach c16%.

Download the Model?

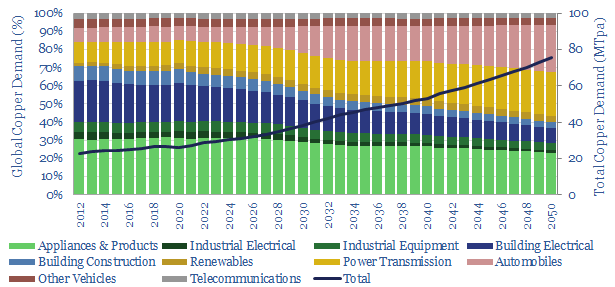

This data-file estimates global copper demand as part of the energy transition, rising from 28MTpa in 2022 to 70MTpa in our base case scenario. The largest contributor is the electrification of transport. You can stress test half-a-dozen key input variables in the model.

Download the Model?

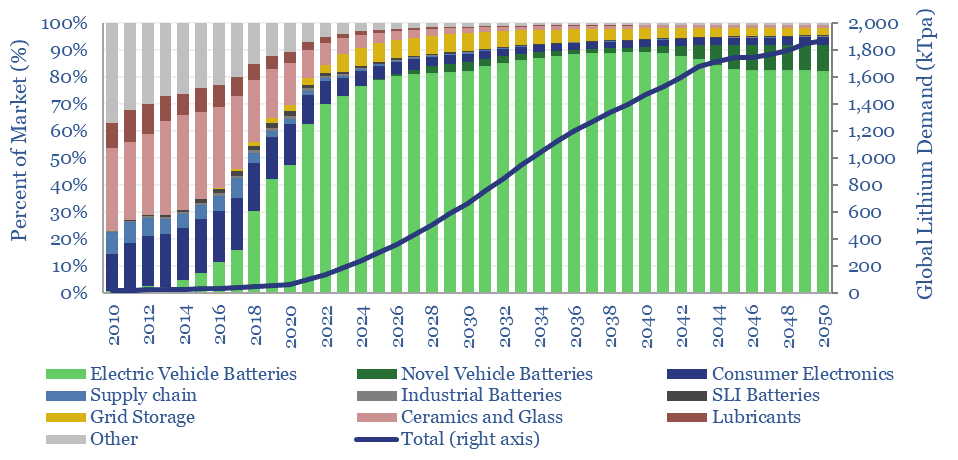

This data-file estimates global demand for lithium as part of the energy transition. The market has already trebled from 23kTpa in 2010 to 65kTpa in 2020, while we see the ascent continuing to 500kTpa in 2030 and almost 2MTpa in 2050. 90% is driven by transport. Global reserves suffice to cover the demand.

Download the Model?

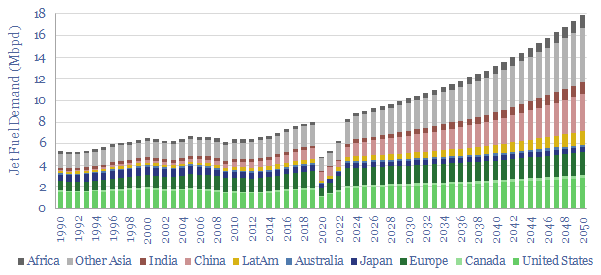

Jet fuel demand ran at 8Mbpd in 2019, the last year before COVID, and could rise to 18Mbpd by 2050, as global population rises 25%, jet fuel demand per capita doubles and fuel economy per aviation mile rises by 20%. This data file breaks down jet fuel demand by region, including our forecasts through 2050, which can be stress-tested, and feed into our global oil demand models.

Download the Model?

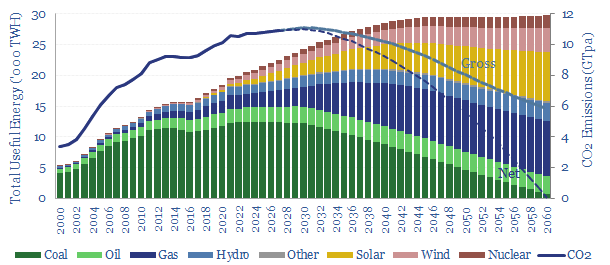

In our base case scenario, total useful energy demand rises 1.5x by 2050, gas demand rises 3.5x to displace coal, wind and solar reach 50% of the final electricity grid, while gross CO2 emissions fall back from 10.5GTpa in 2022 to 8GTpa, and the remainder must be met by (a) waiting until 2060 (b) CCS and (c) CO2 removals.

Download the Model?

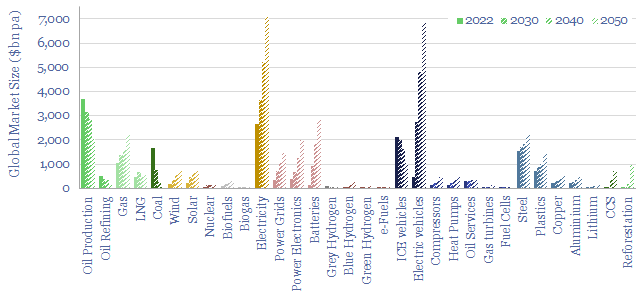

This data-file contains energy transition market sizing analysis, for hydrocarbons, new energies, capital goods and materials in $bn pa, integrating over 1,000 items of energy transition research and our latest roadmap to net zero. In aggregate, energy, materials and transition-related markets double from $25 trn pa to $50 trn pa.

Download the Model?

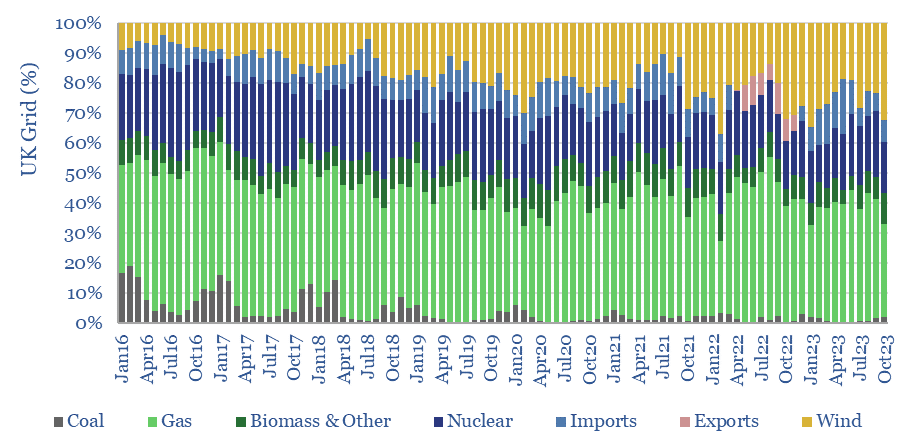

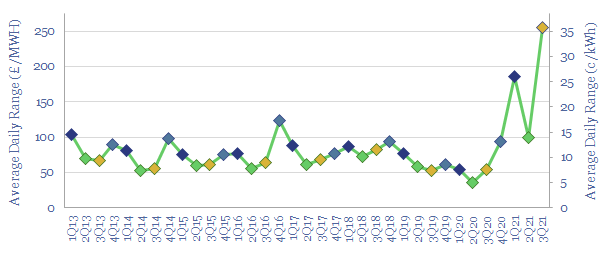

This data-file contains the output from some enormous data-pulls, evaluating UK grid power generation by source, its volatility, and the relationship to hourly traded power prices. We conclude the grid is growing more expensive and volatile, with the increasing share of wind.

Download the Model?

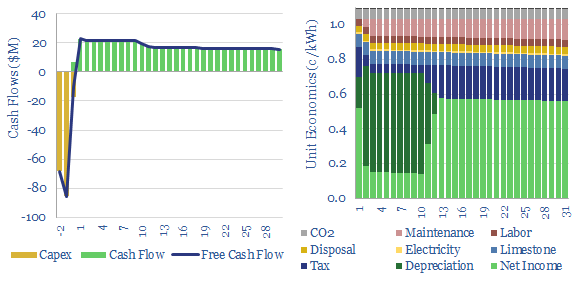

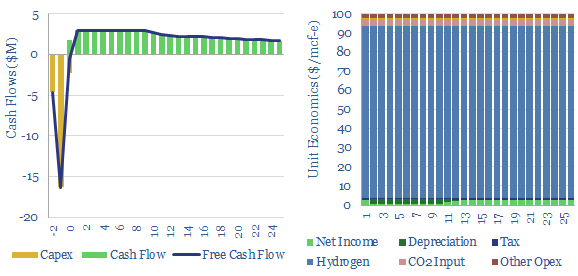

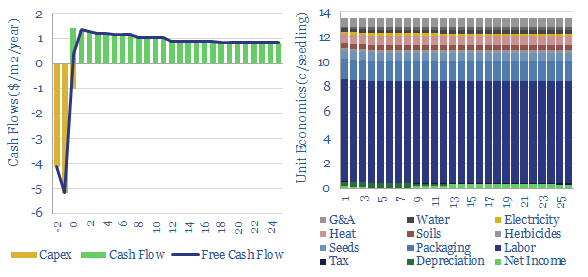

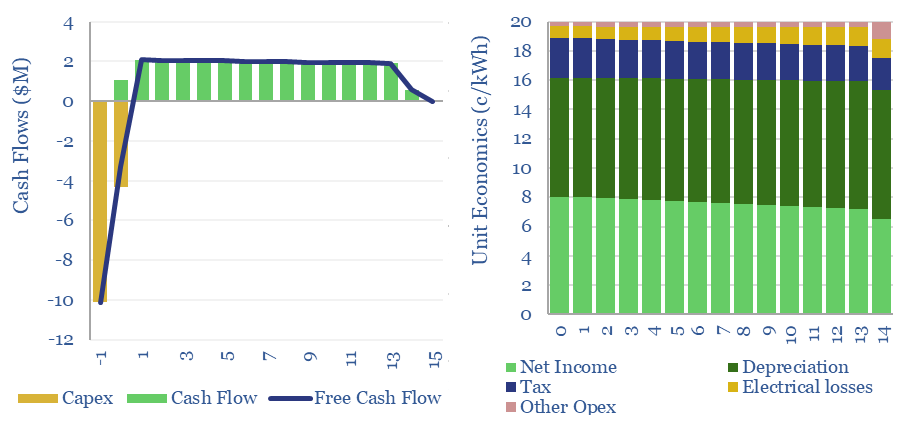

Economic Models

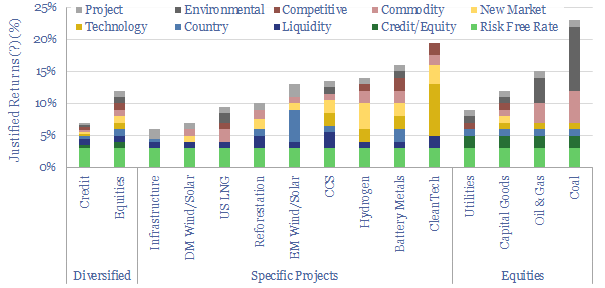

This data-file provides an overview of energy economics, across 175 different economic models constructed by Thunder Said Energy, in order to put numbers in context. This helps to compare marginal costs, capex costs, energy intensity, interest rate sensitivity, and other key parameters that matter in the energy transition.

Download the Model?

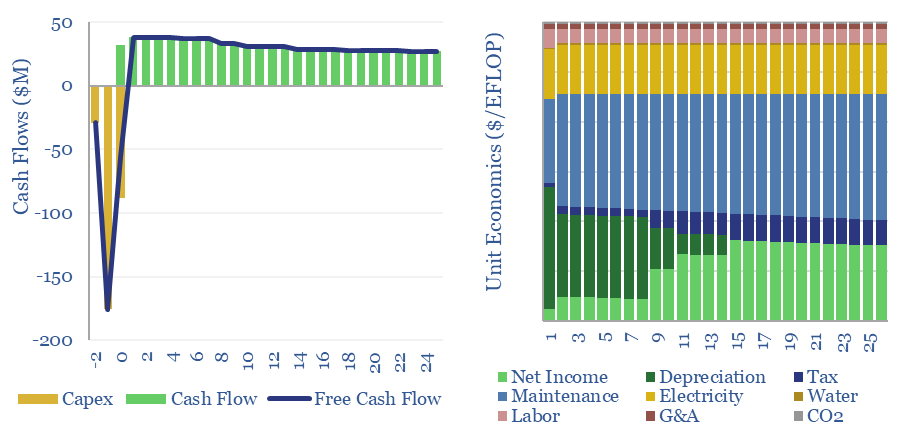

The capex costs of data centers are typically $10M/MW, with opex costs dominated by maintenance (c40%), electricity (c15-25%), labor, water, G&A and other. A 30MW data-center must generate $100M of revenues for a 10% IRR, while an AI data center in 2024 may need to charge $3M/EFLOP of compute.

Download the Model?

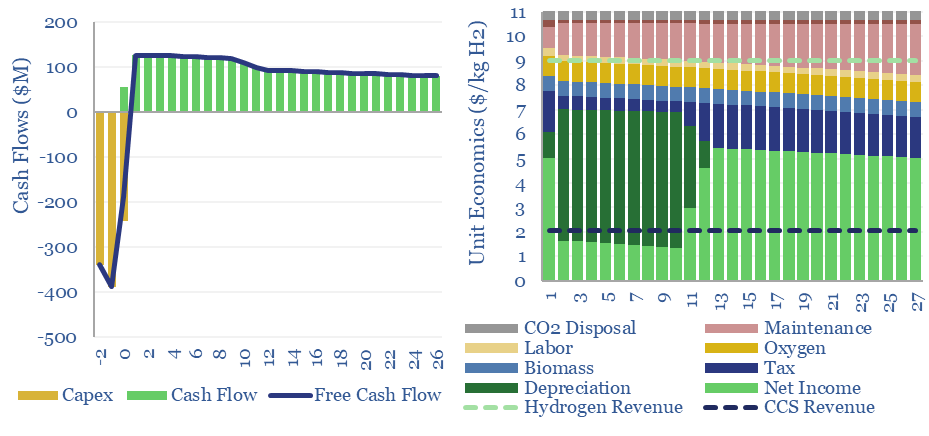

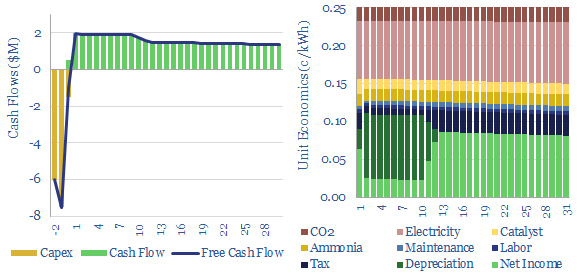

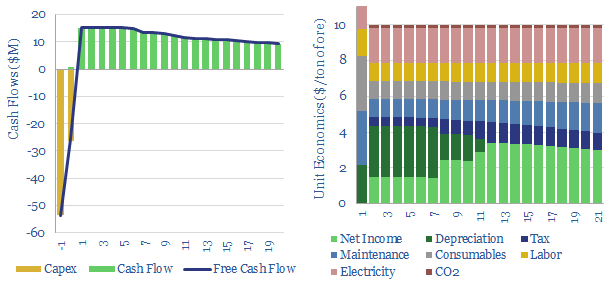

Woody biomass can be converted into clean hydrogen via gasification. If the resultant CO2 is sequestered, each ton of hydrogen may be associated with -20 tons of CO2 disposal. The economies of hydrogen from biomass gasification require $11/kg-e revenues for a 10% IRR on capex of $3,000/Tpa of biomass, or lower, with CO2 disposal incentives.

Download the Model?

Our base case estimates for Compressed Air Energy Storage costs require a 26c/kWh storage spread to generate a 10% IRR at a $1,350/kW CAES facility, with 63% round-trip efficiency, charging and discharging 365 days per year. Our numbers are based on top-down project data and bottom up calculations, both for CAES capex (in $/kW) and CAES efficiency (in %) and can be stress-tested in the model. What opportunities?

Download the Model?

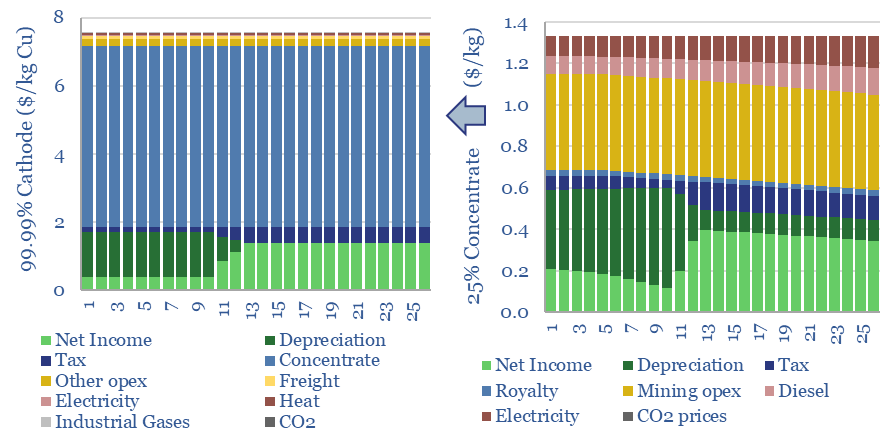

The economic cost of copper production is build up from first princples in this model, from mine, to concentrator, to smelter to 99.99% pure copper cathodes. Our base case is $7.5/kg copper cathode, with 4 tons/ton CO2 intensity, after starting from an 0.57% ore grade. Numbers vary sharply and can be stress-tested in the data-file.

Download the Model?

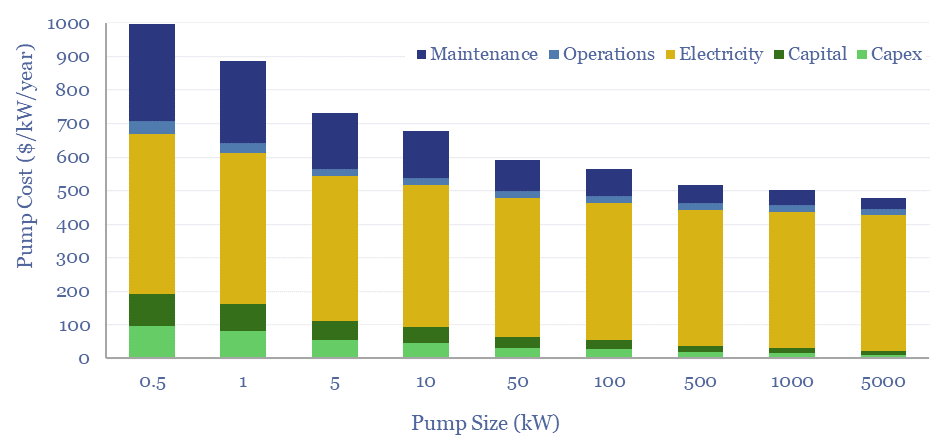

Total pump costs can be ballparked at $600/kW/year of power, of which 70% is electricity, 20% operations and maintenance, 10% capex/capital costs. But the numbers vary. Hence this data-file breaks down the capex costs of pumps, other pump costs, pump energy consumption and the efficiency of pumps from first principles.

Download the Model?

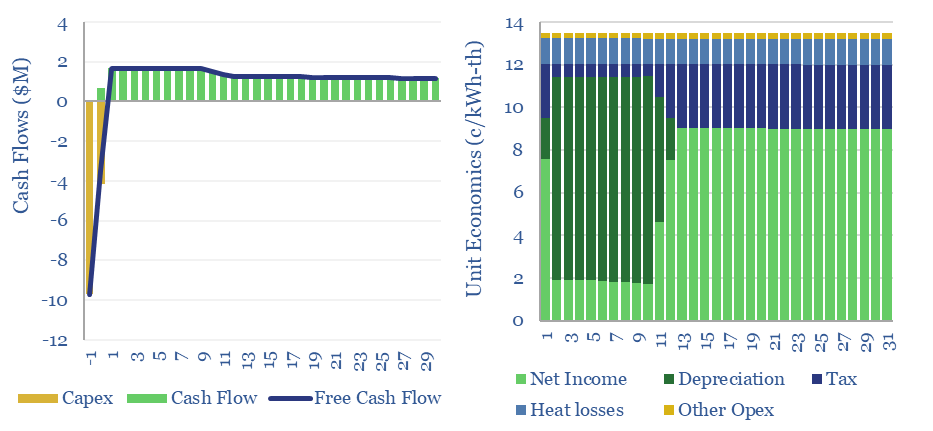

This data-file captures the costs of thermal energy storage, buying renewable electricity, heating up a storage media, then releasing the heat for industrial, commercial or residential use. Our base case requires 13.5 c/kWh-th for a 10% IRR, however 5-10 c/kWh-th heat could be achieved with lower capex costs.

Download the Model?

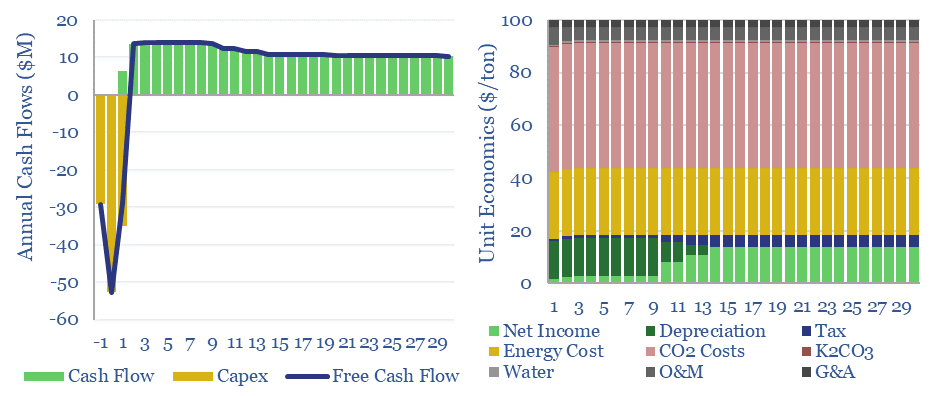

Hot potassium carbonate is a post-combustion CCS technology that bypasses the degradation issues of amines, and can help to decarbonize power, BECCS and cement plants. We think costs are around $100/ton and energy penalties are 30-50%. Potassium carbonate CCS can be stress-tested in this data-file, across 50 inputs.

Download the Model?

Redox flow battery costs are built up in this data-file, especially for Vanadium redox flow. In our base case, a 6-hour battery that charges and discharges daily needs a storage spread of 20c/kWh to earn a 10% IRR on $3,000/kW of up-front capex. Longer-duration redox flow batteries start to out-compete lithium ion batteries for grid-scale storage.

Download the Model?

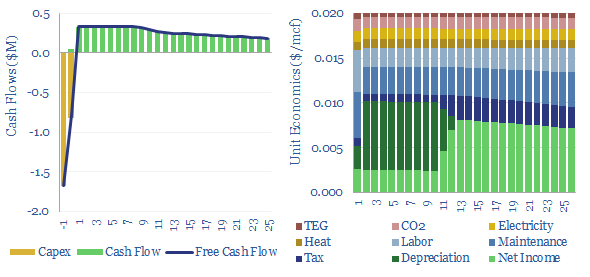

Gas dehydration costs might run to $0.02/mcf, with an energy penalty of 0.03%, to remove around 90% of the water from a wellhead gas stream using a TEG absorption unit, and satisfy downstream requirements for 4-7lb/mmcf maximum water content. This data-file captures the economics of gas dehydration, to earn a 10% IRR off $25,000/mmcfd capex.

Download the Model?

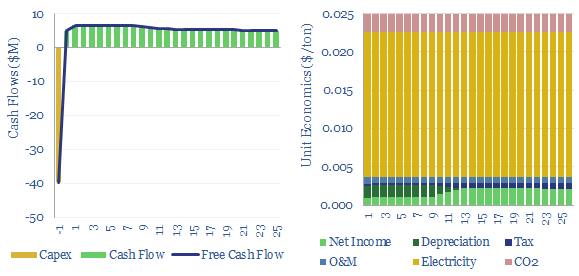

Fans and blowers comprise a $7bn pa market, moving low-pressure gases through industrial and commercial facilities. Typical costs might run at $0.025/ton of air flow to earn a return on $200/kW equipment costs and 0.3kWh/ton of energy consumption. 3,000 tons of air flow may be required per ton of CO2 in a direct air capture plant.

Download the Model?

Gas fractionation separates out methane from NGLs such as ethane, propane and butane. A full separation uses up almost 1% of the input gas energy and 4% of the NGL energy. The costs of gas fractionation require a gas processing spread of $0.7/mcf for a 10% IRR off $2/mcf input gas, or in turn, an average NGL sales price of $350/ton. Costs of gas fractionation vary and can be stress tested in this model.

Download the Model?

Hydrogen peroxide production costs run at $1,000/Tpa, to generate a 10% IRR at a greenfield production facility, with c$2,000/Tpa capex costs. Today's market is 5MTpa, worth c$5bn pa. CO2 intensity runs to 3 kg of CO2 per kg of H2O2. But lower-carbon hydrogen could be transformational for clean chemicals?

Download the Model?

This model estimates the levelized cost of offshore wind at 13c/kWh, to generate a 7% IRR off of capex costs of $4,000/kW and a utilization factor of 40-45%. Each $400/kW on capex adds 1c/kWh and each 1% on WACC adds 1.3 c/kWh to offshore wind levelized costs.

Download the Model?

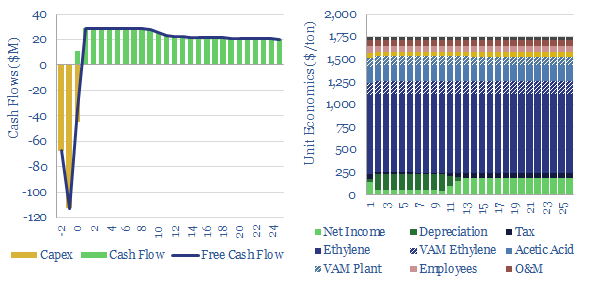

Propylene oxide production costs average $2,000/ton ($2/kg) in order to derive a 10% IRR at a newbuild chemicals plant with $1,500/Tpa in capex. 80% of the costs are propylene and hydrogen peroxide inputs. 60-70% of this $25bn pa market is processed into polyurethanes. CO2 intensity is 2 tons of CO2 per ton of PO today, but there are pathways to absorb CO2 by reaction with PO and possibly even create carbon negative polymers.

Download the Model?

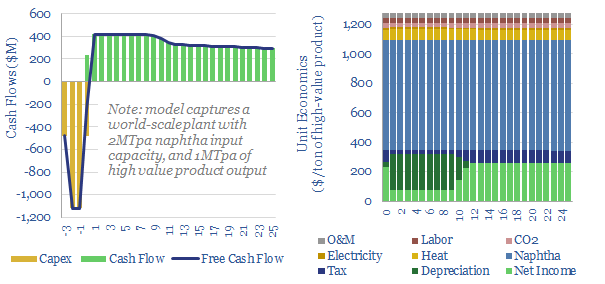

Naphtha cracking costs $1,300/ton for high value products, such as ethylene, propylene, butadiene and BTX aromatics, to derive a 10% IRR constructing a greenfield naphtha cracker, with $1,600/Tpa capex. CO2 intensity averages 1 ton of CO2 per ton of high value products. This data-file captures the economics for naphtha cracking, a cornerstone of the modern materials industry.

Download the Model?

Acetylene production costs are broken down in this data-file, estimated at $1,425/ton for a 10% IRR on a petrochemical facility that partially oxidizes the methane molecule. CO2 intensity is over 3 kg/kg. Up to 12MTpa of acetylene is produced globally for welding and as a petrochemical building block.

Download the Model?

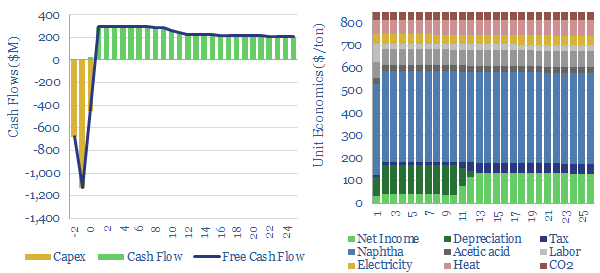

A PTA price of $800-850/ton is needed to earn a 10% IRR on a new, integrated petrochemical facility, catalytically reforming naphtha into paraxylene, then oxidizing the paraxylene into purified terephthalic acid, with upfront capex cost of $1,300/Tpa. Feedstock costs, energy prices and CO2 prices matter too.

Download the Model?

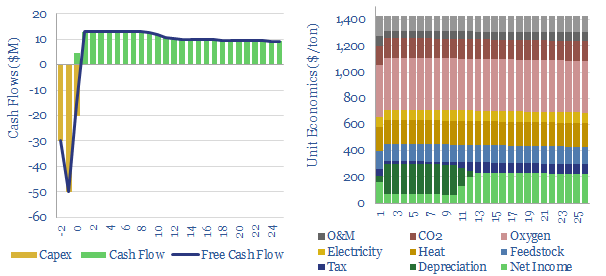

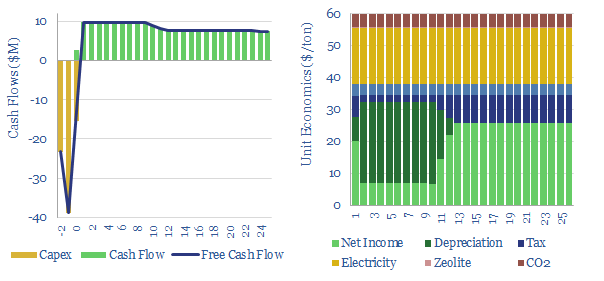

Pressure swing adsorption purifies gases according to their differing tendencies to adsorb onto adsorbents under pressure. Pressure swing adsorption costs $0.1/kg when separating pure hydrogen from reformers, and $2-3/mcf when separating bio-methane from biogas. Our cost breakdowns include capex, opex, maintenance, zeolite replacement, compression power and CO2 costs.

Download the Model?

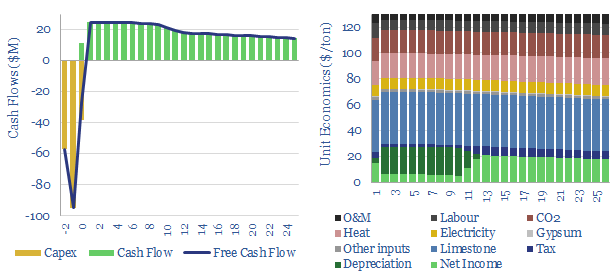

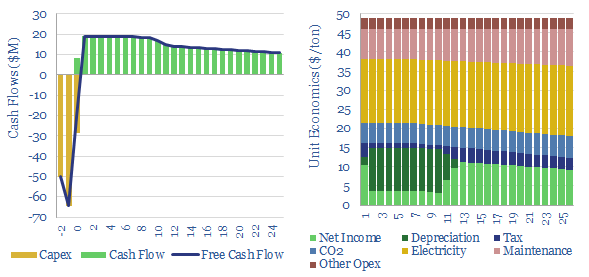

This data-file captures cement costs, based on inputs, capex and energy economics. A typical cement plant requires a cement price of $130/ton for a 10% IRR, on capex costs of $200/Tpa, energy intensity of 1,000 kWh/ton and CO2 intensity of 0.9 tons/ton. Cement costs can be stress tested in the data-file.

Download the Model?

Electrostatic precipitator costs can add 0.5 c/kWh onto coal or biomass-fired electricity prices, in order to remove over 99% of the dusts and particulates from exhaust gases. Electrostatic precipitators cost $50/kWe of up-front capex to install. Energy penalties average 0.2%. These systems are also important upstream of CCS plants.

Download the Model?

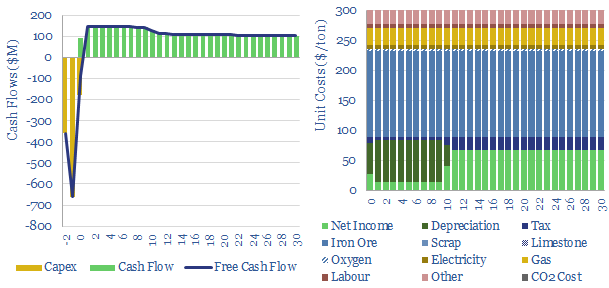

Direct reduced iron (DRI) is produced by reacting iron ore with H2-CO syngas, fueled by natural gas, in over 150 facilities worldwide. Direct reduction iron costs $300/ton, consuming 3,000kWh/ton of energy and CO2 intensity of 0.6 tons/ton. The process can be decarbonized via low-carbon hydrogen in the syngas, as the world strives towards decarbonized steel.

Download the Model?

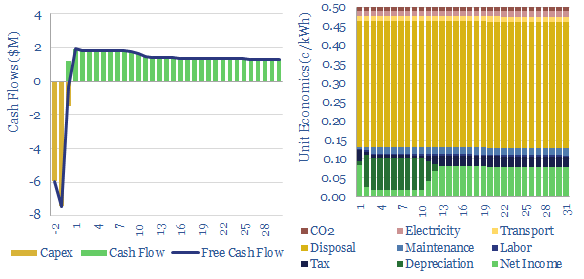

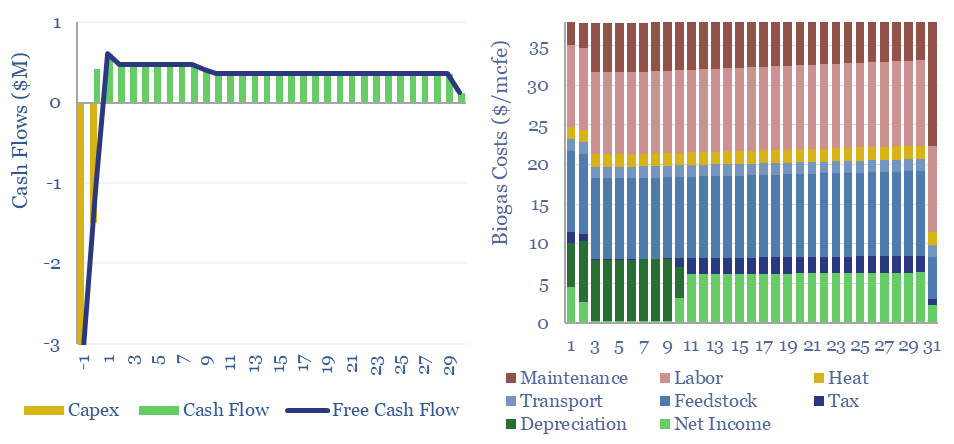

Biogas costs are broken down in this economic model, generating a 10% IRR off $180M/kboed capex, via a mixture of $16/mcfe gas sales, $60/ton waste disposal fees and $50/ton CO2 prices. High gas prices and landfill taxes can make biogas economical in select geographies. Although diseconomies of scale reward smaller projects?

Download the Model?

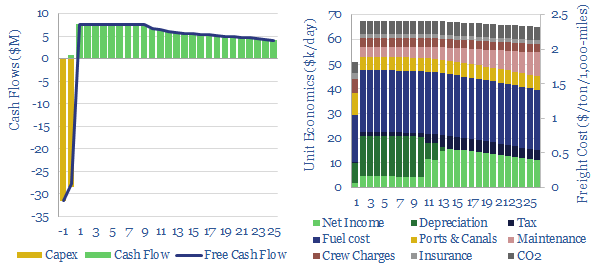

Bulk carriers move 5GTpa of commodities around the world, explaining half of all seaborne global trade. This model is a breakdown of bulk shipping cost. We estimate a cost of $2.5 per ton per 1,000-miles, and a CO2 intensity of 5kg per ton per 1,000-miles. Marine scrubbers increasingly earn their keep and uplift IRRs from 10% to 12% via fuel savings.

Download the Model?

This data-file captures selective catalytic reduction costs to remove NOx from the exhaust gas of combustion boilers and burners. Our base case estimate is 0.25 c/kWh at a combined cycle gas plant, which equates to $4,000/ton of NOx removed. Capex costs, operating costs, coal plants and marine fuels can be stress-tested in the model.

Download the Model?

This data-file captures the costs of producing different grades of silicon carbide: from materials grade SiC ($1,500/ton marginal cost, 5 tons/ton CO2 intensity) through to SiC wafers that are used in the electronics industry ($30M/ton, 200 tons/ton?). SiC semiconductor remains opaque.

Download the Model?

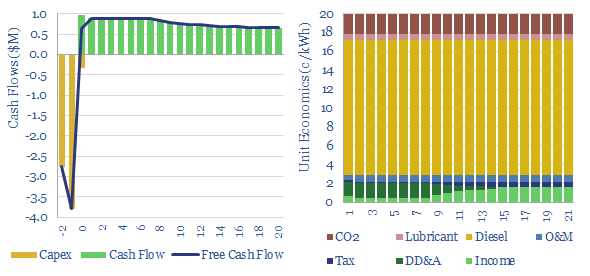

A multi-MW scale diesel generator requires an effective power price of 20c/kWh, in order to earn a 10% IRR, on c$700/kW capex, assuming $70 oil prices and c150km trucking of oil products to the facility. Economics can be stress-tested in the Model-Base tab.

Download the Model?

This economic model captures the costs of capturing CO2 using membranes, with a base case of $50/ton to earn 10% IRRs on early commercial deployments, and a possibility of deflating to $20/ton in next-generation membranes. This requires $50/m2 membranes, with 100-2,500 GPU permeance and 125-200x selectivity.

Download the Model?

This data-file captures the costs of flue gas desulfurization, specifically the costs of SO2 scrubbers, used to remove SO2 from the exhaust of coal- or distillate- fueled boilers and burners. We think a typical scrubber will remove 95% of the SO2 from the flue gas, but requires a >1c/kWh surcharge on electricity sales in order to earn a 10% IRR.

Download the Model?

The Sabatier process combines CO2 and hydrogen to yield synthetic natural gas using a nickel catalyst at 300-400C. A gas price of $100/mcf is needed for a 10% IRR, energy penalties exceed 75% and CO2 abatement cost is $2,000/ton?

Download the Model?

The US plants over 1.3bn tree seedlings per year. Especially pine. These seedlings are typically 8-10 months old, with heights of 25-30mm, root collars of 5mm, and total mass of 5-10 grams, having been grown by dedicated producers. This data-file captures the costs of tree seedlings, to support afforestation, reforestation or broader forestry.

Download the Model?

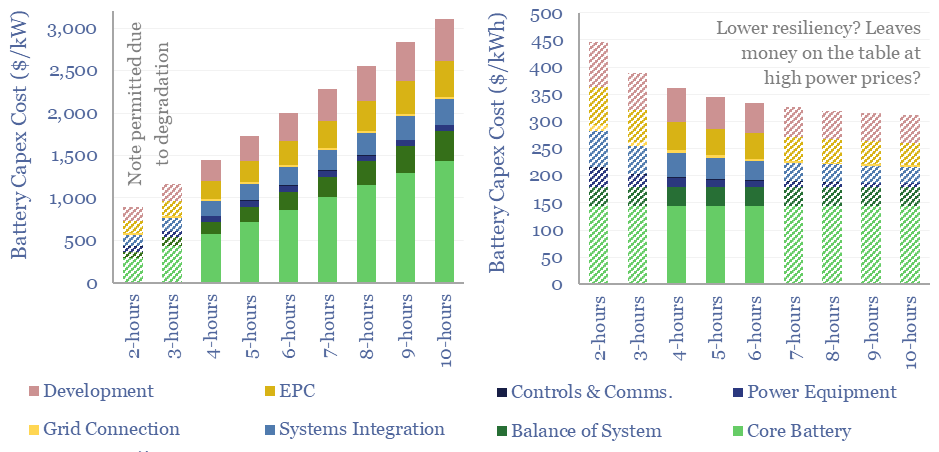

Grid-scale batteries are envisaged to store up excess renewable electricity and re-release it later. Grid-scale battery costs are modeled at 20c/kWh in our base case, which is the 'storage spread' that a LFP lithium ion battery must charge to earn a 10% IRR off $1,200/kW installed capex costs. Other batteries can be compared in the data-file.

Download the Model?

This model captures the economics of a CO2 carrier, i.e., a large marine vessel, carrying liquefied CO2, at -50ºC temperature and 6-10 bar pressure, for CCS. A good rule of thumb is seaborne CO2 shipping costs are $8/ton/1,000-miles. Shipping rates of $100k/day yield a 10% IRR on a c$150M tanker.

Download the Model?

Polyurethane production costs are estimated at $2.5-3.0/kg in our base case model, which looks line-by-line across the inputs and outputs, of a complex, twenty stage production process, which ultimately yields spandex-lycra fibers. Costs depend on oil, gas and hydrogen input prices.

Download the Model?

Ethylene vinyl acetate is produced by reacting ethylene with vinyl acetate monomer. This data-file estimates production costs, with a marginal cost between $1,500-2,000/ton, and a total embedded CO2 intensity of 3.0 tons/ton. EVA comprises 5% of the mass of a solar panel and could be an important solar bottleneck.

Download the Model?

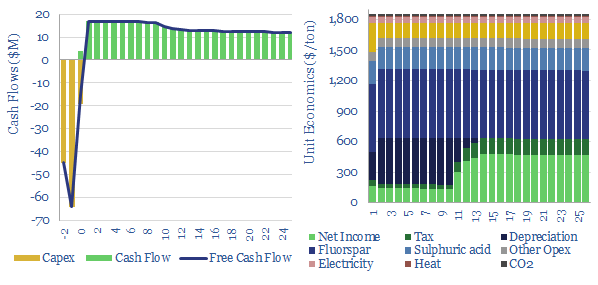

Hydrogen fluoride is a crucial commodity chemical. This model captures its production from acid-grade fluorspar and sulfuric acid. We think marginal costs are around $1,850/ton, in order to earn a 10% IRR on a production facility costing $4,000/Tpa, while the fully loaded CO2 intensity is around 0.75 tons/ton.

Download the Model?

Global production of nitric acid is 60MTpa, in a $25bn pa market, spanning c500 production facilities. This data-file estimates a marginal cost of $350/ton HNO3 and a CO2 intensity averaging 1.8 tons/ton. There are feedback loops where gas shortages could result in fertilizer and metal shortages.

Download the Model?

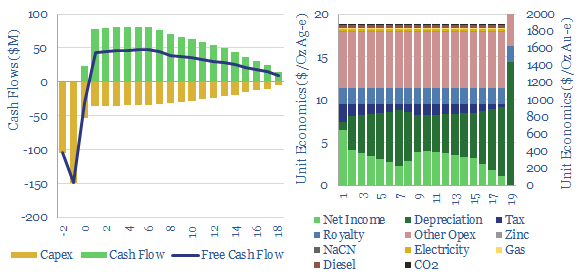

This data-file captures the marginal cost of silver and gold production, at an integrated mining-refining operation. In our base case, a 10% IRR requires a silver price of $17/Oz and a gold price of $1,750/Oz, while the energy and CO2 intensities are an eye watering 100-150 tons/ton and 9,000 tons/ton, respectively. Numbers vary widely on ore grade.

Download the Model?

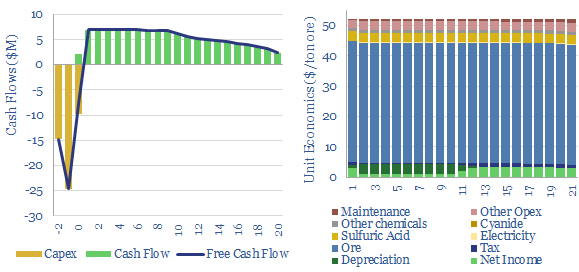

This data-file captures the energy economics of leaching in the mining industry, especially the costs of heap leaching, for the extraction of copper, nickel, gold, silver, other precious metals, uranium, and Rare Earths. The data-file allows you to stress test costs in $/ton of ore, $/ton of metal, capex, opex, chemicals costs, energy intensity and CO2 intensity.

Download the Model?

Electrowinning costs and energy economics are built up in this data-file. A charge of $900/ton is required to earn a 10% IRR on a $3,000/kTpa plant with a median energy consumption of 2-3 MWH/ton. Although this will vary metal by metal.

Download the Model?

What are the costs of hydrogen from coal gasification? This model looks line-by-line, across different plant configurations, aggregating data from technical papers. Black hydrogen costs $1-2/kg. But CO2 intensity is very high, as much as 25 tons/ton. It can possibly be decarbonized resulting in semi-clean hydrogen costing c$2.5/kg.

Download the Model?

The costs of froth flotation are aggregated in this data-file, building up the typical capex costs (in $/Tpa), energy costs (in kWh/ton) and other opex lines (in $/ton) of one of the most important processes for the modern metals and materials industry. A good rule of thumb is $10/ton costs to concentrate a material by over 4x.

Download the Model?

Hydrogen cyanide is a chemical intermediate, used for making perspex, nylon-6,6 and sodium cyanide, which in turn is a crucial chemical for extracting gold and silver from precious metal ores. Marginal costs are usually $1,500-1,650/ton and CO2 intensities are 2-3 tons/ton.

Download the Model?

Marginal costs of a HPHT project in the UK North Sea are captured via modeling Shell's 40kboed Jackdaw project, FID'ed in 2022. A $7/mcf marginal cost results mostly from high hurdle rates associated with project complexity. CO2 intensity has been lowered to c14kg/boe, we think.

Download the Model?

Bio-coke is a substitute for coal-coke in steel-making and other smelting operations. We model it will cost c$450/ton, c50% more than coal-coke, but saves 2 - 2.5 tons/ton of CO2. Abatement costs can be as low as $70/ton. Although not always, and there are comparability issues.

Download the Model?

Mining crushing-grinding costs are typically $10/ton of ore, breaking 3-10cm rubble into 30-100 micron powders. Capex averages $20/Tpa and energy cost averages 20kWh/ton.

Download the Model?

This data-file assesses pumped hydro costs, to back up wind and solar. A typical project has 0.5GW of capacity, 12-hours storage duration, 80% efficiency, and capex costs of $2,250/kW. Thus it requires a 25c/kWh storage spread, in order to generate a 10% IRR.

Download the Model?

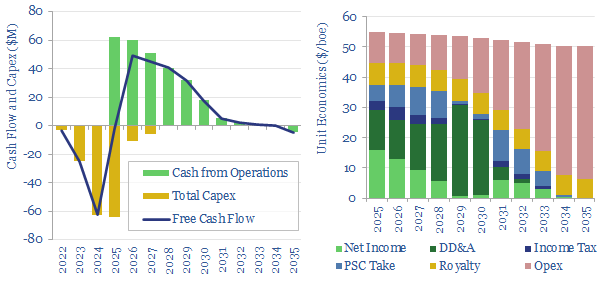

What is the marginal cost of offshore oil and gas? This data-file captures a small project, off Africa, with $15/boe development cost, $15/boe opex, 70% fiscal take. Break-even is at $35-45/bbl. But a $90/bbl forward curve may be needed for definitive go-ahead.

Download the Model?

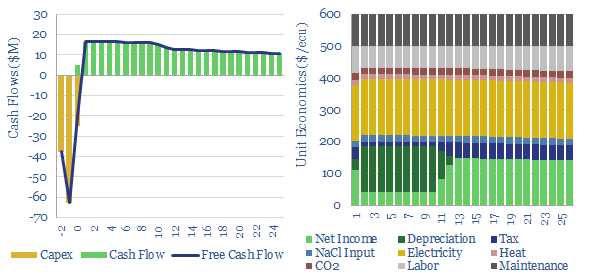

This data-file captures chlor-alkali process economics, to produce 80MTpa of chlorine and 90MTpa of caustic soda. Our base case requires $600 per ecu for a 10% IRR and a growth project costing $600/Tpa. Electricity is 45% of cash cost. CO2 intensity is 0.5 tons/ton. Interestingly, chlor-alkali plants can demand shift.

Download the Model?

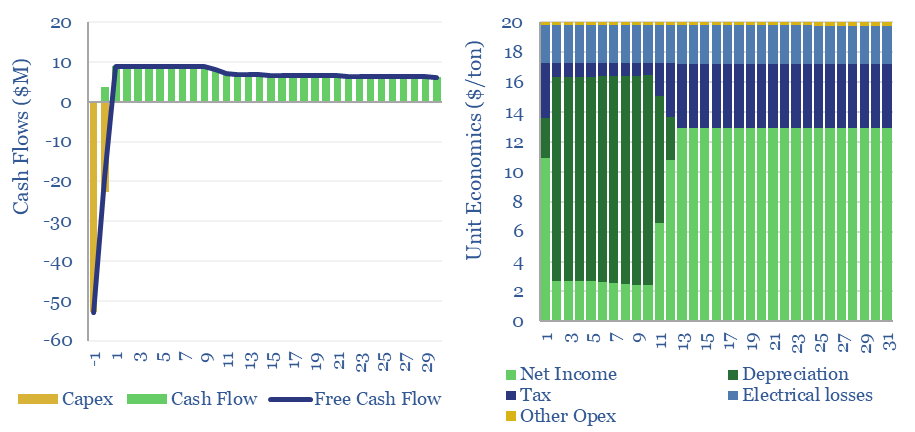

This model captures the economics of power factor correction via installing capacitor banks upstream of inductive loads. A 10% IRR is derived from a system costing $30/kVAR, reducing real power losses by 0.5%, thus saving on 8c/kWh electricity prices (75% of savings), $3.5/kW demand charges (15%) and a $20/ton CO2 price (10%).

Download the Model?

Technology Screens and Company Screens

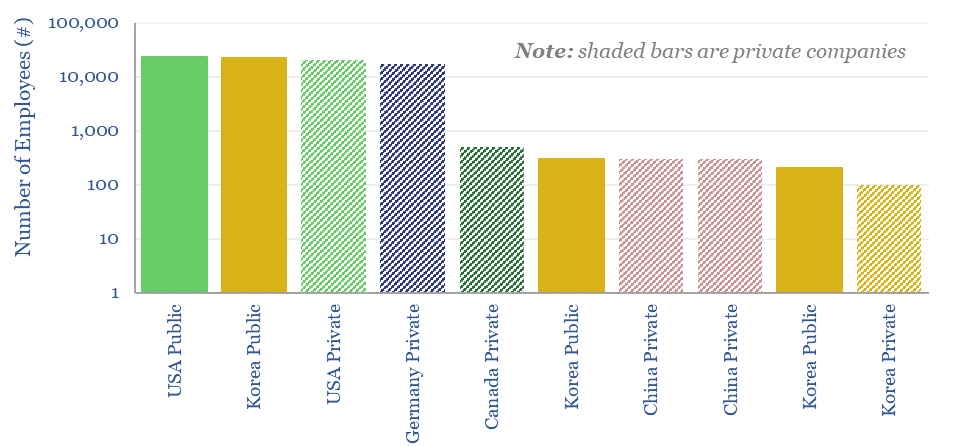

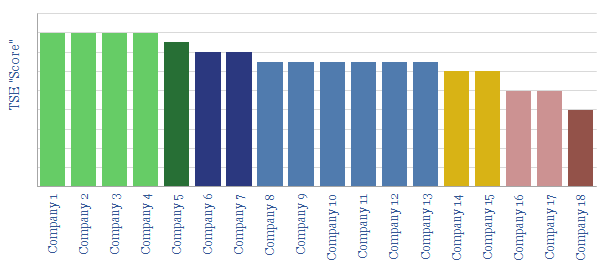

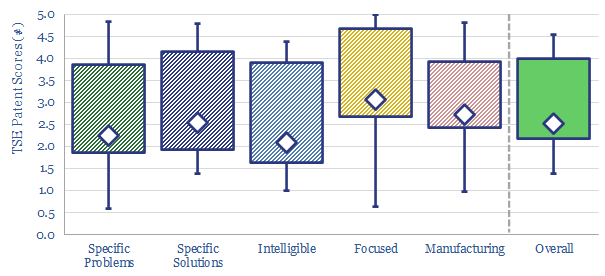

This database contains a record of every company that has ever been mentioned across Thunder Said Energy's energy transition research, as a useful reference for TSE's clients. The database summarizes over 3,000 mentions of 1,400 energy transition companies, their size, focus and a summary of our key conclusions, plus links to further research.

Download the Screen?

What is the market concentration by industry in energy, mining, materials, semiconductors, capital goods and other sectors that matter in the energy transition? The top five firms tend to control 45% of their respective markets, yielding a ‘Herfindahl Hirschman Index’ (HHI) of 700.

Download the Screen?

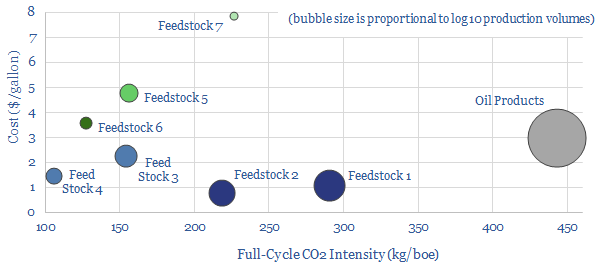

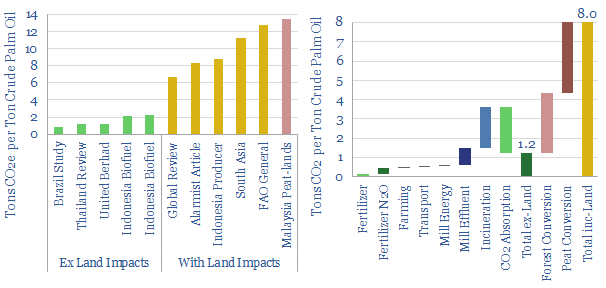

Biofuels are currently displacing 3.5Mboed of oil and gas. But they are not carbon-free, and their weighted average CO2 emissions are only c50% lower. This data-file breaks down the biofuels market across seven key feedstocks, to help identify which opportunities can scale for the lowest costs and CO2, versus others that require further technical progress.

Download the Screen?

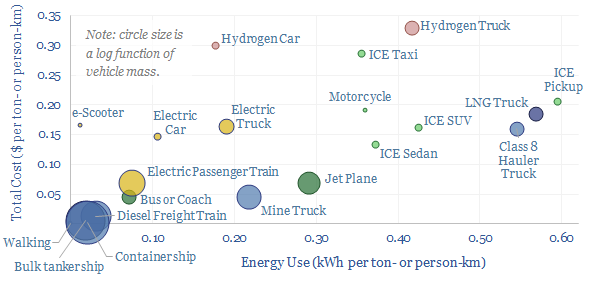

Vehicles transport people and freight around the world, explaining 70% of global oil demand, 30% of global energy use, 20% of global CO2e emissions. This overview summarizes all of our research into vehicles, and key conclusions for the energy transition.

Download the Screen?

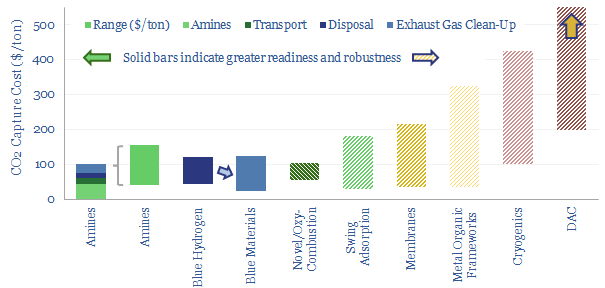

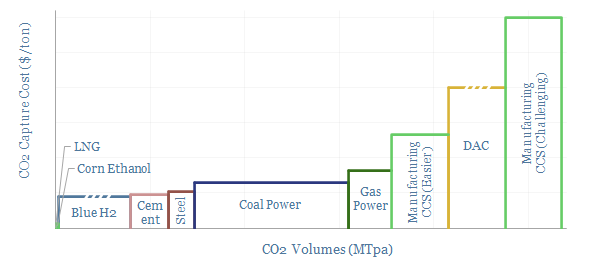

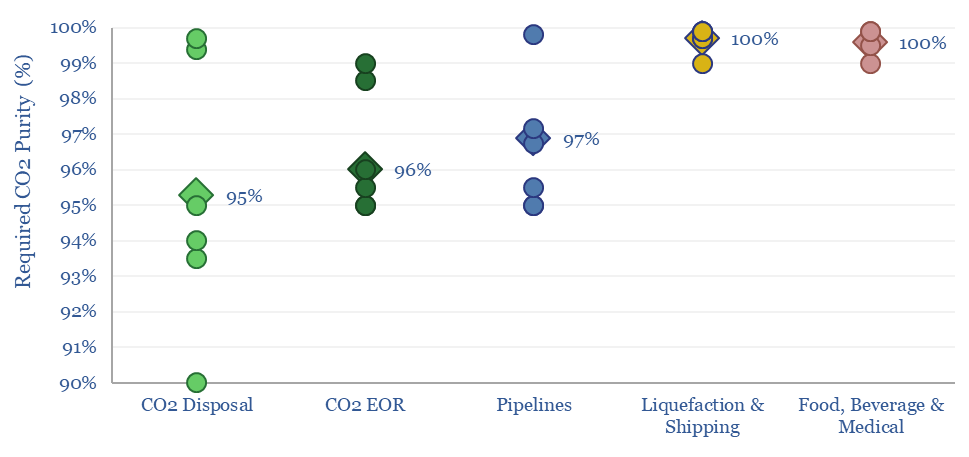

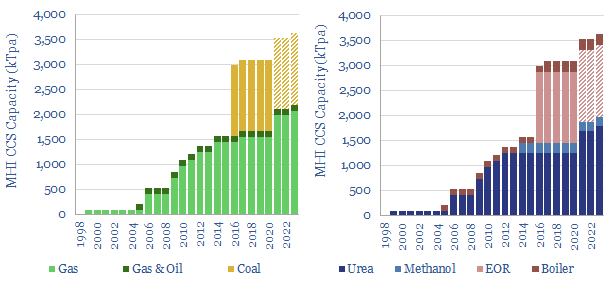

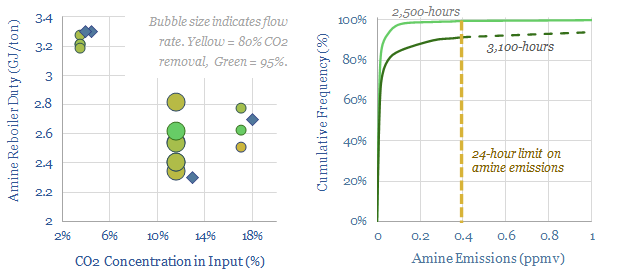

Carbon capture and storage (CCS) prevents CO2 from entering the atmosphere. Options include the amine process, blue hydrogen, novel combustion technologies and cutting edge sorbents and membranes. Total CCS costs range from $80-130/ton, while blue value chains seem to be accelerating rapidly in the US. This article summarizes the top conclusions from our carbon capture and storage research.

Download the Screen?

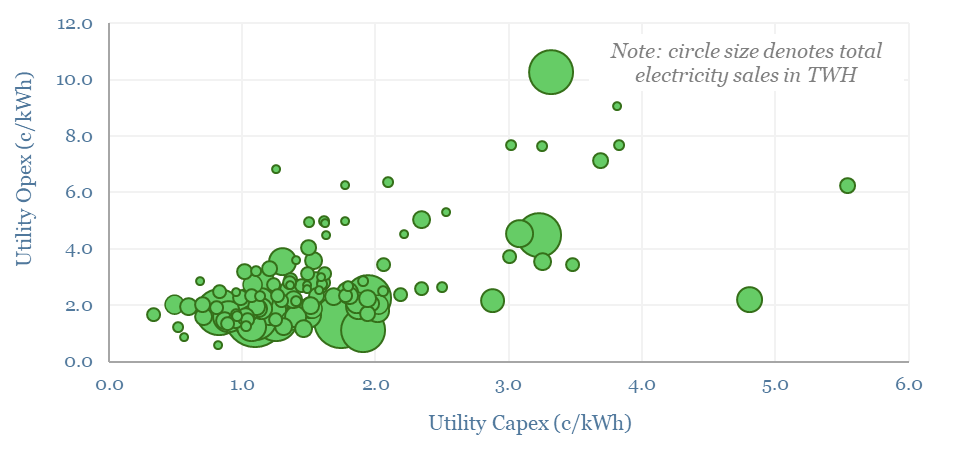

This data-file evaluates transmission and distribution costs, averaging 7c/kWh in 2024, based on granular disclosures for 200 regulated US electric utilities, which sell 65% of the US's total electricity to 110M residential and commercial customers. Costs have doubled since 2005. Which utilities have rising rate bases and efficiently low opex?

Download the Screen?

This data-file is a screen of leading companies in vapor deposition, manufacturing the key equipment for making PV silicon, solar, AI chips and LED lighting solutions. The market for vapor deposition equipment is worth $50bn pa and growing at 8% per year. Who stands out?

Download the Screen?

Thermoelectric devices convert heat directly into electricity, or conversely provide localized cooling/heating by absorbing electricity. This data-file screens leading companies in thermoelectrics, their product specifications, applications and underlying calculations for thermoelectric efficiency.

Download the Screen?

50 companies make conductive silver pastes to form the electrical contacts in solar modules. This data-file tabulates the compositions of silver pastes based on patents, averaging 85% silver, 4% glass frit and 11% organic chemicals. Ten companies stood out, including a Korean small-cap specialist.

Download the Screen?

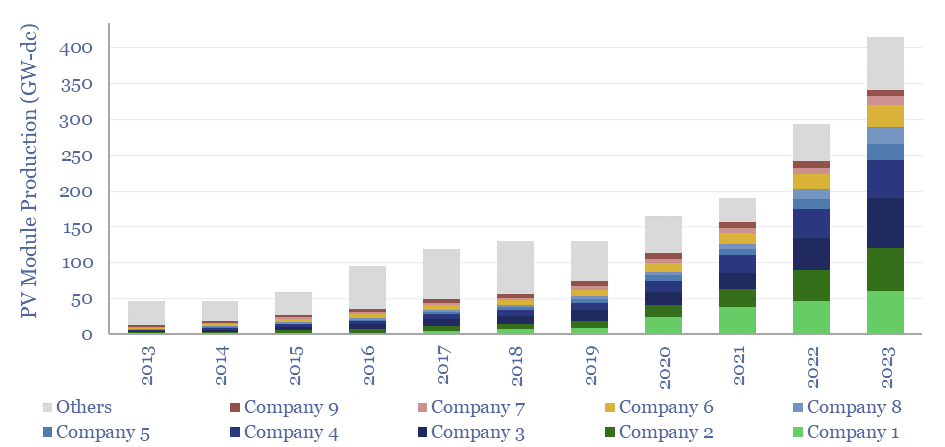

The world produced over 400GW of solar modules in 2023, which is up 10x from a decade ago. This data-file breaks down solar module production by company and over time, comparing the companies by solar module selling prices ($/kW), margins (%), efficiency (%), transparency, and technology development.

Download the Screen?

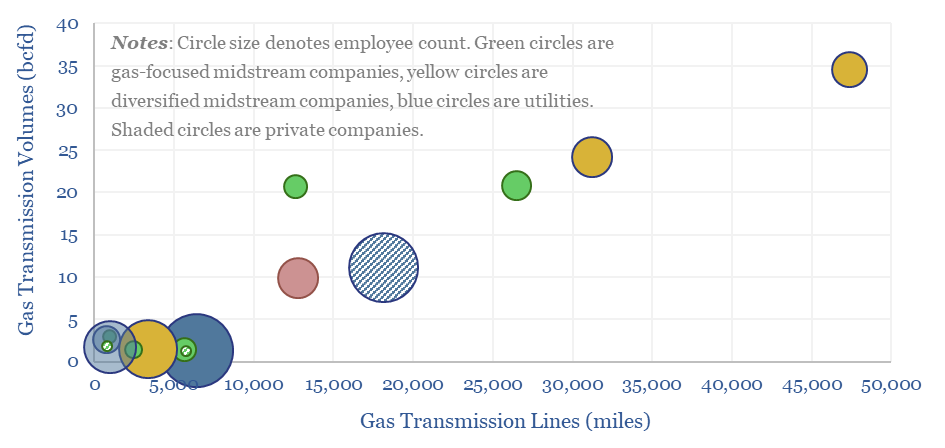

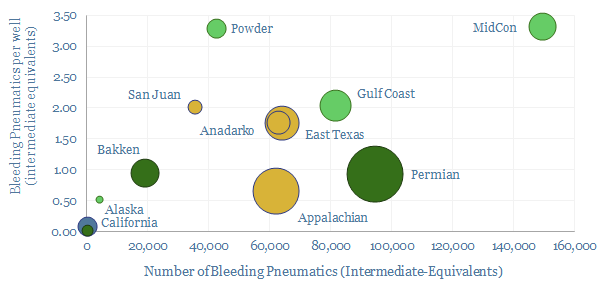

This data-file aggregates granular data into US gas transmission, by company and by pipeline, for 40 major US gas pipelines which transport 45TCF of gas per annum across 185,000 miles; and for 3,200 compressors at 640 related compressor stations.

Download the Screen?

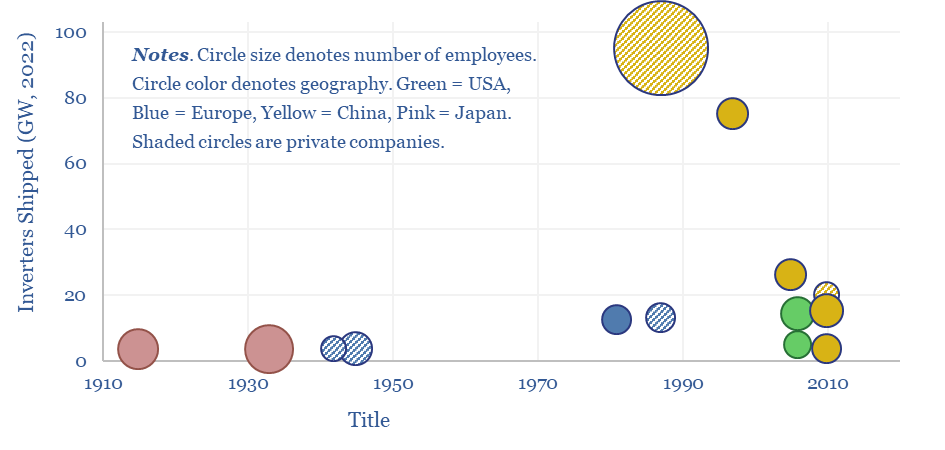

This data-file tracks some of the leading solar inverter companies and inverter costs, efficiency and power electronic properties. As China now supplies 85% of all global inverters, at 30-50% lower $/W pricing than Western companies, a key question explored in the data-file is around price versus quality.

Download the Screen?

This data-file screens 20 leading companies in harmonic filters, tabulating their size, geography, ownership details, patent filings and a description of their offering. Active harmonic filters reduce total harmonic distortion below 5%, with 97% efficiency, within 5 ms. Half a dozen companies stood out in our screen, including one large, listed Western capital goods company.

Download the Screen?

This data-file is a screen of thermal energy storage companies, developing systems that can absorb excess renewable electricity, heat up a storage medium, and then re-release the heat later, for example as high-grade steam or electricity. The space is fast-evolving and competitive, with 17 leading companies progressing different solutions.

Download the Screen?

Leading direct air capture companies (DAC companies) are assessed in this data-file, aggregating company disclosures, project disclosures and other data from patents and technical papers. The landscape is evolving particularly rapidly, trebling in the past half-decade, especially towards novel DAC solutions.

Download the Screen?

20 leading companies in LED lighting are compared in this data-file, mostly mid-caps with $2-10bn market cap and $1-8bn of lighting revenues, listed in the US, Europe, Japan, Taiwan. Operating margins averaged 8% in 2022, due to high competition, fragmentation and inorganic activity. The value chain ranges from LED semiconductor dyes to service providers installing increasingly efficient lighting systems as part of the energy transition.

Download the Screen?

35 indium producers are screened in this data-file, as our energy transition outlook sees primary demand rising 4x from 900 tons in 2022 to over 3.5ktons in 2050, for uses in HJT solar cells and digital devices. 60% of global supply is produced by 20 Chinese companies. But five listed materials companies in Europe, Canada, Japan and Korea also stand out.

Download the Screen?

This data-file is a screen of leading companies in polyurethane production, capturing 80% of the world's 25MTpa market, across 20 listed companies and 3 private companies. We see growing demand for polyurethanes -- especially for insulation, electric vehicles and consumer products -- while there is also an exciting prospect that EVs displace reformates from the gasoline pool, helping to deflate feedstock costs. So who benefits?

Download the Screen?

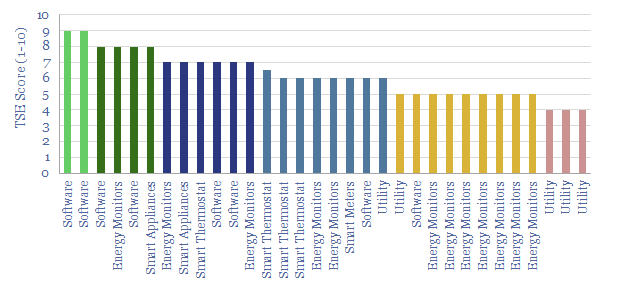

Demand shifting flexes electrical loads in a power grid, to smooth volatility and absorb more renewables. This database scores technical potential and economical potential of different electricity-consuming processes to shift demand, across materials, manufacturing, industrial heat, transportation, utilities, residential HVAC and commercial loads.

Download the Screen?

10MTpa of hydrogen is produced in the US, of which 40% is sold by industrial gas companies, 20-25% is generated on site at refineries, 20% at ammonia plants and 15-20% in chemicals/methanol. This datafile breaks down US hydrogen production by facility. Owners of existing steam methane reforming units may readily be able to capture CO2 and benefit from CO2 disposal credits under the US Inflation Reduction Act?

Download the Screen?

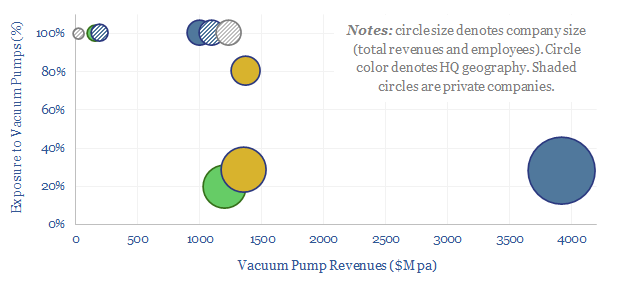

The global market for vacuum pumps is worth $15bn per year, with growing importance for making semiconductors, solar panels and AI chips. This data-file reviews ten leading companies in vacuum pumps, including one European-listed capital goods leader, a European pure-play and a Japanese-listed pure-play.

Download the Screen?

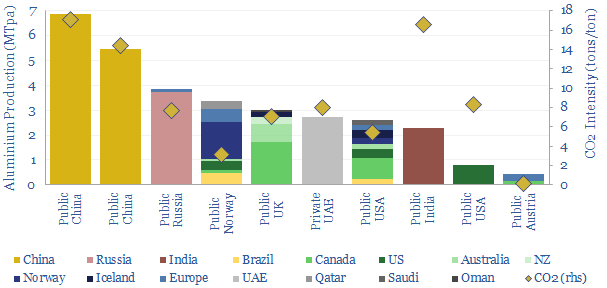

Leading aluminium producers are reviewed in this data-file, across ten companies, producing half of the world's global output. Scale ranges 1MTpa to 7MTpa. CO2 intensity of primary aluminium production ranges from 3 tons/ton to 17 tons/ton, in aggregate across these companies.

Download the Screen?

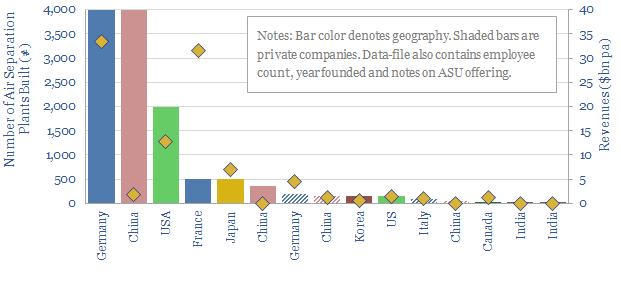

Over $100bn pa of industrial gases and $5-6bn pa of cryogenic air separation plants are produced each year. This data-file is a screen of leading industrial gas companies and cryogenic air separation companies, breaking down their market share (number of ASUs constructed) history, geography, sales and headcounts.

Download the Screen?

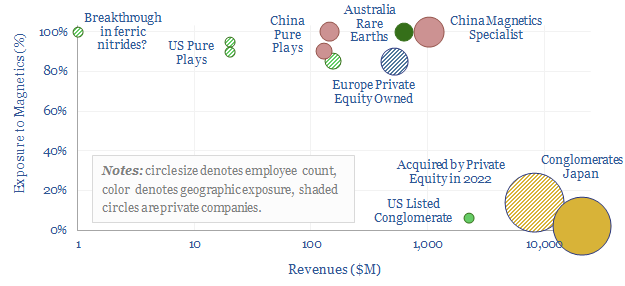

The global magnet industry is fragmented across hundreds of suppliers, including 800 in Asia-Pacific. The total market is worth $20bn pa. The purpose of this data-file is to highlight a dozen leading magnet companies, including producers of permanent magnets, Rare Earth magnets (e.g., NdFeB), ferrites and other magnetic components.

Download the Screen?

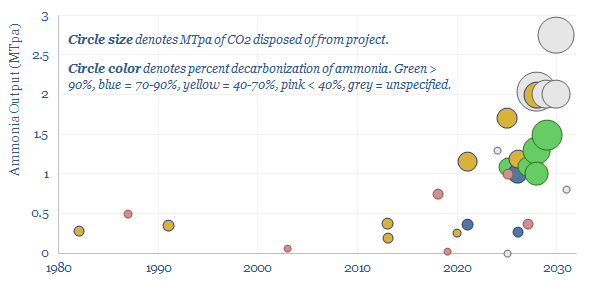

This data-file captures a sample of 30MTpa of blue ammonia projects from 1980 to 2030, including their location, companies, timings (year of FID, year of start-up), their sizes (in MTpa), their CO2 reductions (in %), their capex costs (in $M, where disclosed) and the implied capex costs ($/Tpa). We have also summarized each project with 3-10 lines of text.

Download the Screen?

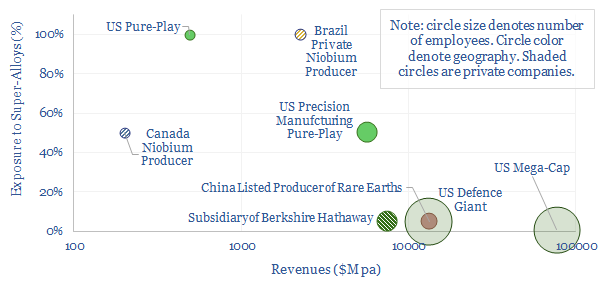

This data-file is a screen of leading companies in super-alloys, covering US pure-plays, mega-caps in industrials and defence, and emerging world producers of Rare Earth metals. In each case, we have included our notes and observations.

Download the Screen?

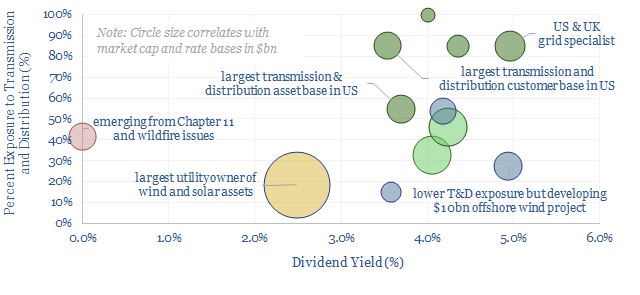

The average US electric utility has 25 GW of generation, 15,000-miles of power transmission, 100,000 miles of distribution, 8M customers, 3.5% dividend yields and 6.5% long-term target growth. We wonder if there is upside on expanding power grids? A dozen companies are in our screen.

Download the Screen?

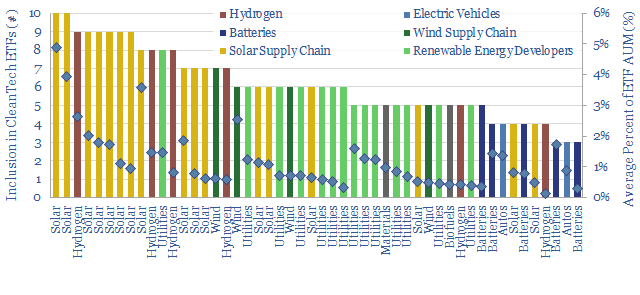

Which stocks are most considered to be energy transition stocks? To answer this question, we have aggregated the holdings of ten well-known energy transition ETFs and clean tech ETFs, in early 2023.

Download the Screen?

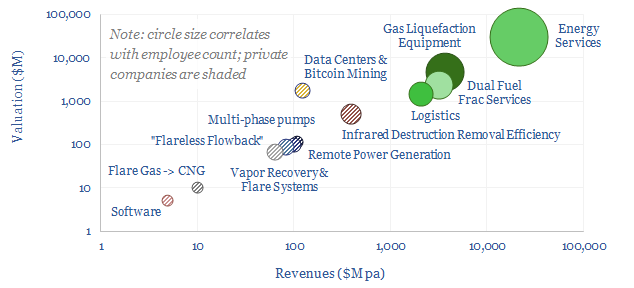

This data-file is a screen of companies that can reduce routine flaring and reduce the ESG impacts of unavoidable residual flaring. The landscape is broad, ranging from large, listed and diversified oil service companies with $30bn market cap to small private analytics companies with

Download the Screen?

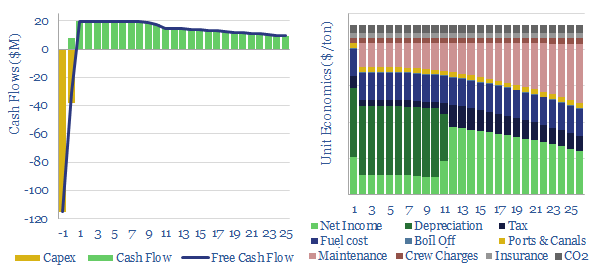

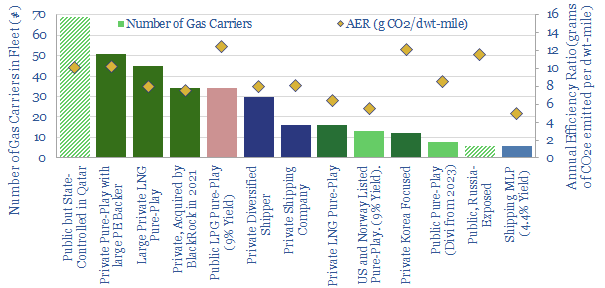

This data-file is a screen of LNG shipping companies, quantifying who has the largest fleet of LNG carriers and the cleanest fleet of LNG carriers (i.e., low CO2 intensity). Many private companies are increasingly backed by private equity. Many public companies have dividend yields of 4-9%.

Download the Screen?

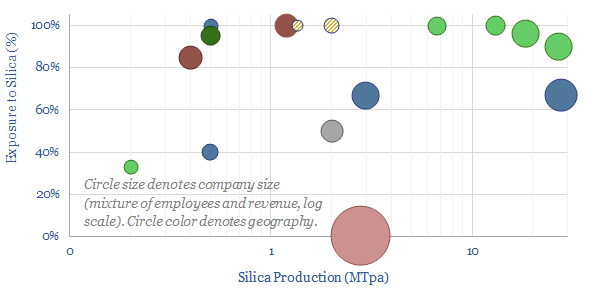

Highly pure silica sand, with well over 95% SiO2 content and less than 0.6% iron oxide, is an important resource used in making glass, metal foundries, "proppant" for hydraulic fracturing in the oil and gas industry and making high-grade silicon (for chips and PV silicon). The market is 350MTpa. This data-file is a screen of the world's largest silica sand producers.

Download the Screen?

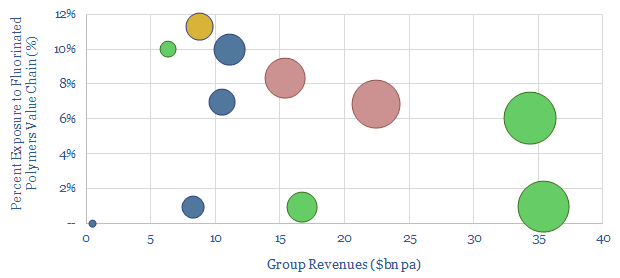

This data-file is a screen of companies in the fluorinated polymer value chain. It captures ten large Western companies, with exposure to producing fluorspar, refining fluorspar into hydrofluoric acid (HF) and/or further processing into fluorinated polymers, which matter increasingly in the energy transition.

Download the Screen?

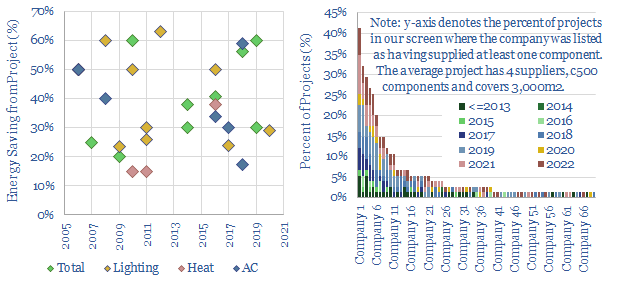

High-quality building automation typically saves 30-40% of the energy needed for lighting, heating and cooling a building. This matters amidst energy shortages, and reduces payback times on $100-500k up-front capex. This data-file aggregates case studies of KNX energy savings, and screens 70 companies, from Capital Goods giants to private pure-plays.

Download the Screen?

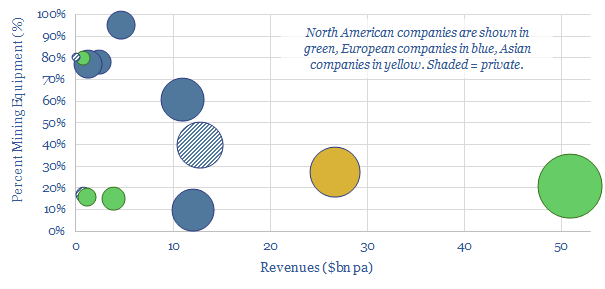

This data-file is an overview of mining equipment companies. For each company, we have noted its location, size, age, number of employees, number of patents, latest revenues, operating margins, exposure to the mining equipment industry, and a few short summary sentences. Where possible, we have also broken down the company's revenues by end-market or by commodity.

Download the Screen?

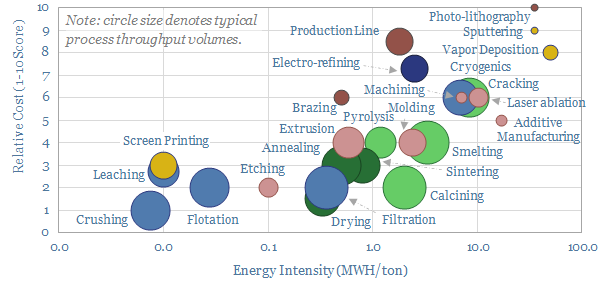

An of overview of manufacturing methods is given in this data-file. Costs are 70% correlated with energy intensity, ranging from well below 0.3 MWH/ton to well above 7MWH/ton. The lowest cost techniques take place at huge throughput in the mining industry, while the most intricate are used in semiconductor.

Download the Screen?

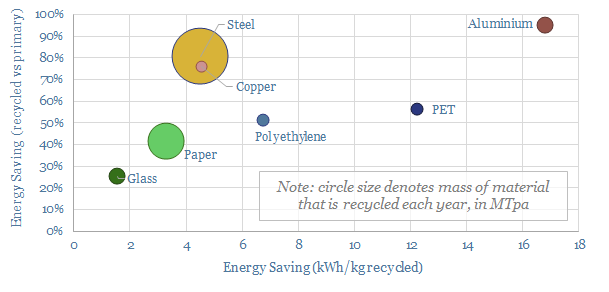

1GTpa of material is recycled globally, across steel, paper, glass, plastics and other metals. On average, 35% of these materials are produced from recycled feeds, saving 70% of the energy and CO2, with upside in the Energy Transition.

Download the Screen?

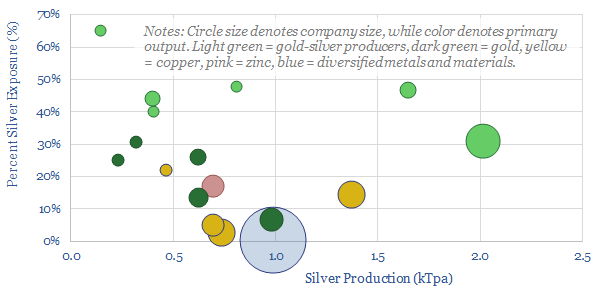

Half of the world's 28kTpa global silver market is controlled by 17 public companies, with silver output ranging from 0.1 - 2.0 kTpa, and co-producing gold, copper or other metals. This data-file is a screen of silver producers, in order to identify leading companies.

Download the Screen?

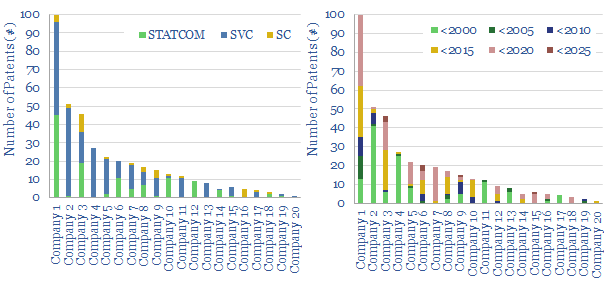

This data file looks for leading companies in STATCOMs and SVCs by aggregating all Western patents that refer in their title, abstract or claims to "STATCOMs", "Static VAR Compensators", or similar. ABB (now part of Hitachi), Siemens Energy and GE stand out as Western leaders in a concentrated space, although competition is growing.

Download the Screen?

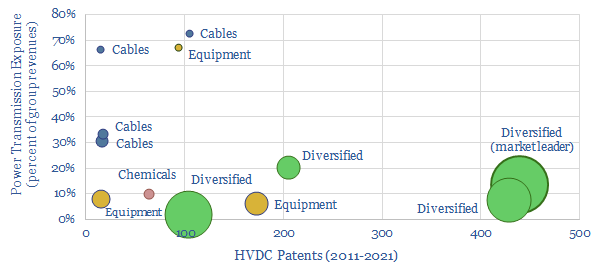

The global HVDC market is $10bn pa, and it might typically cost c€100-600 M to connect a large and remote renewables project to the grid or run a small HVDC inter-connector. This data-file reviews the market leaders in HVDC, based on 5,500 patents. A dozen companies stand out, with c$40bn of combined revenues from power transmission projects.

Download the Screen?

This data-file screens 15 companies that are developing graphite mines, plus downstream refining facilities, to upgrade their output into highly pure spheronized graphite that can be used as an anode material for lithium ion batteries, such as in electric vehicles.

Download the Screen?

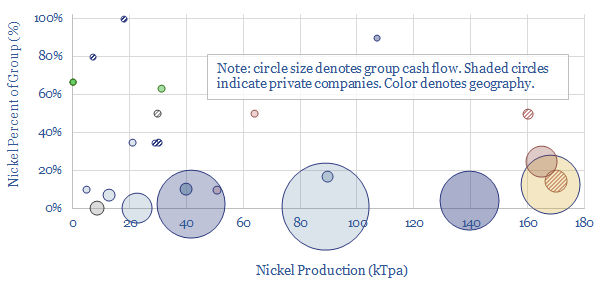

25 companies dominate the world's nickel production, although the supply chain is heavily split between battery-grade materials, Class I metals, and lower-grade products. Each company is summarized, according to its size and asset base. CO2 intensity varies by a very wide 10x margin, from sub-10 tons/ton nickel to 100 tons/ton.

Download the Screen?

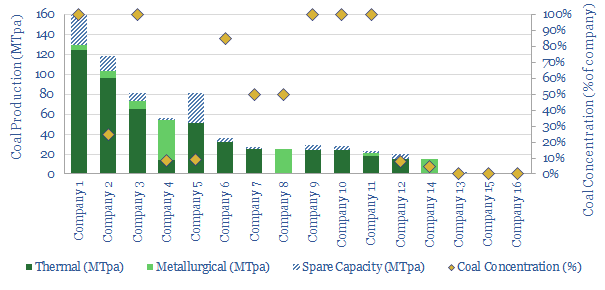

In 2022-25, bizarrely, we could be in a market where deployment of important energy transition technologies is being held back by energy shortages and metals shortages, which both pull on the demand for coal. This data-file screens fifteen of the largest Western coal producers.

Download the Screen?

Power MOSFETs are an energy transition technology, the building block behind inverters, DC-DC converters, EV drive trains, EV chargers and other renewables-battery interfaces. Hence this data-file is a screen of companies making power MOSFETs, especially new and higher-efficiency devices using Silicon Carbide as the semi-conductor.

Download the Screen?

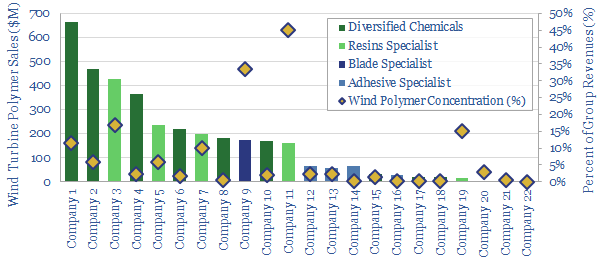

This data-file tabulates details for 20 companies that make epoxy- or polyurethane resins and adhesives, especially those that feed into the construction of wind turbines. We think there are 5 public companies ex-China with 5-35% exposure to this sub-segment of the wind industry.

Download the Screen?

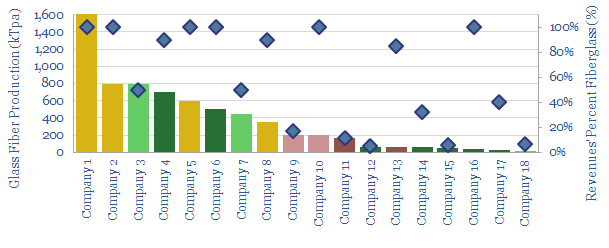

This data-file aims to provide an overview of the world's largest glass fiber manufacturers, quantifying their market share, and summarizing their offering. Covered companies include China Jushi, Owens Corning, Saint Gobain-Vetrotrex, Johns Manville and smaller Europeans.

Download the Screen?

This data-file profiles leading companies and products in the space of axial flux motors, with an average power density of almost 8kW/kg, which is 10x higher than a typical AC induction motor in heavy industry. Leading companies are profiled, based on reviewing over 1,200 patents.

Download the Screen?

This data-file outlines the top twenty companies producing variable frequency drives to precisely control electric motors. The top three companies are European capital goods players. High-quality VFDs may protect against growing competition from China.

Download the Screen?

This data-file profiles a dozen companies that make thermal insulation materials, as 50-75% of all buildings standing today will likely need insulation upgrades on the road to 'net zero', while the pace of progress should be amplified in times of energy shortages.

Download the Screen?

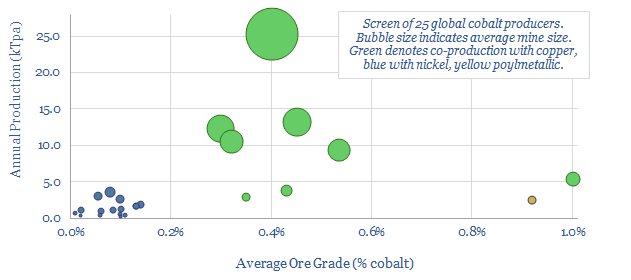

Our global decarbonization models burn through the world's entirely terrestrial cobalt resources. Hence this data-file reviews c25 mines around the world, and the resultant positions of 25 global cobalt producers. All cobalt is produced alongside copper or nickel, but some companies are more cobalt-exposed.

Download the Screen?

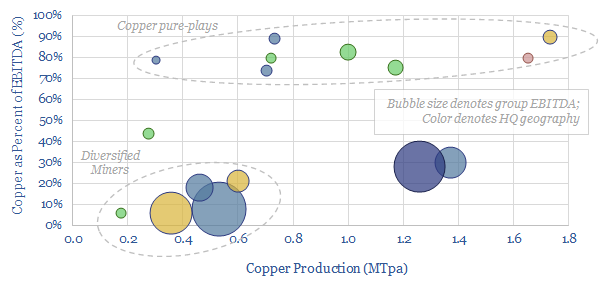

This data-file is a screen of the world's largest copper miners and producers, covering 16 companies that produce half of all global output. The average company produces around 0.8MTpa, has a 30-year reserve life, and derives 30% of its EBITDA from copper.

Download the Screen?

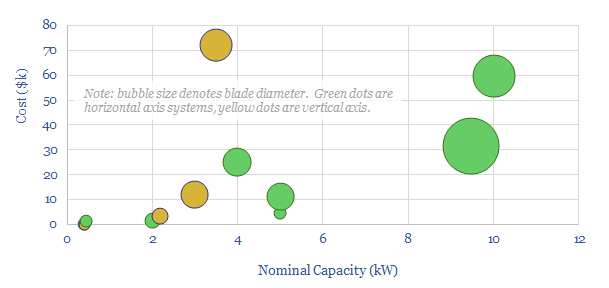

This screen compares the offerings of a dozen small-scale wind turbine providers, with power ratings below 30kW, for residential energy generation. Costs range from $1,000-6,000/kW. The three key challenges are performance, relaibility and cost.

Download the Screen?

This data-file tabulates our subjective opinions on c20 different heat pump companies, based on their consumer reviews, pricing, reliability, efficiency, company size, models, integration, and visual/acoustic properties. We conclude heat pumps are opaque and must be selected carefully.

Download the Screen?

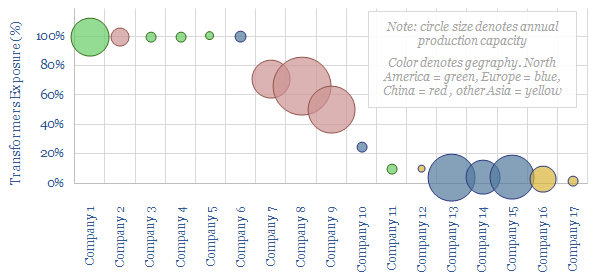

This data-file aims to tabulate helpful data on the grid-scale transformer industry, covering the sizes (tons), costs ($/kW) and companies in the space. Margin pressure looks challenging, amidst material re-inflation, and a competitive set of capital goods giants and emerging Chinese companies.

Download the Screen?

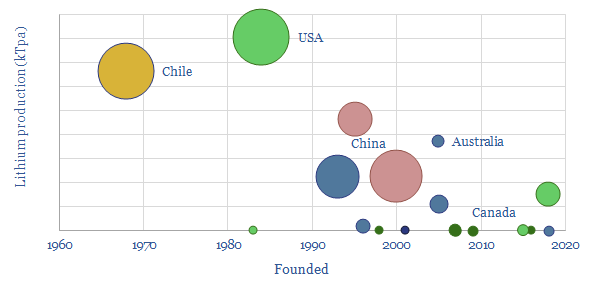

This data-file captures c20 lithium producers, their output (in kTpa), their size and their recent progress. Eight companies effectively control 90% of global supply. 3 out of 12 earlier-stage companies underwent restructurings in 2020, illustrating risks, but also potential future supply shortages.

Download the Screen?

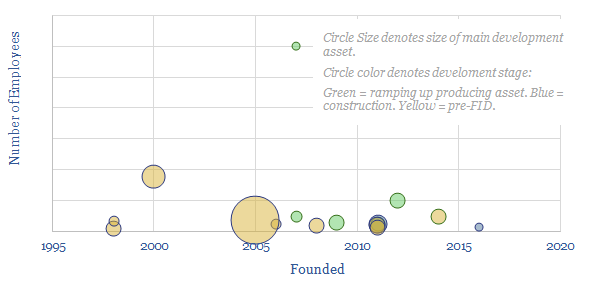

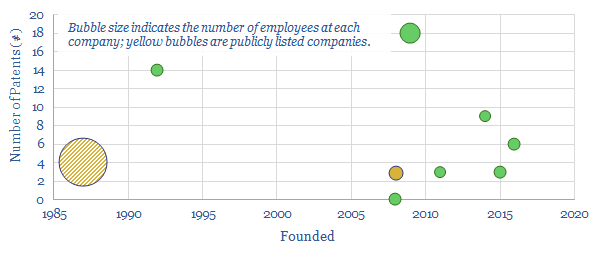

This data-file summarizes the details of c15 companies aiming to commercialise low-carbon electro-fuels, using power-to-liquids technologies, and their progress to-date. The average company was founded in 2015, with 5 patents and 15 employees. Although this is skewed towards 3-4 leaders.

Download the Screen?

This data-file gives an overview of gas sweetening and treatment processes. The main method is chemical absorption using amines. We estimate that a mid-size facility of 500mmcfd must levy a $0.15/mcf cost and emits 3.5kg/boe to take out c7% H2S and CO2. Other processes are compared.

Download the Screen?

This screen tabulates details of almost twenty leading companies in the production and commercialization of biochar. The average company was founded in 2012, has 8 employees and 1.2 patents, showing an early-stage and competitive space.

Download the Screen?

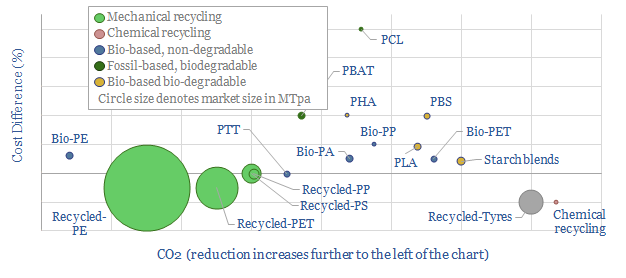

This data-file captures 17 plastic products derived from mechanical recycling, biologically-sourced feedstocks or that is bio-degradable. The 'greenest" plastics are c30% lower in CO2 than conventional plastics, but around 2x more costly.

Download the Screen?

This screen assesses a dozen companies sequestering CO2 by farming seaweeds and kelp. The area is fast-growing but early-stage. The average company was founded in 2017 and employs 10 people. Commercial products include foods, animal feeds, fertilizers, plastics and even distilled spirits.

Download the Screen?

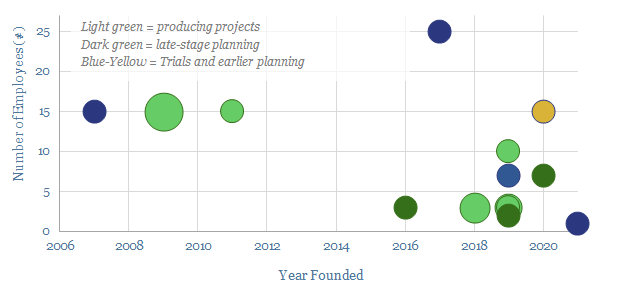

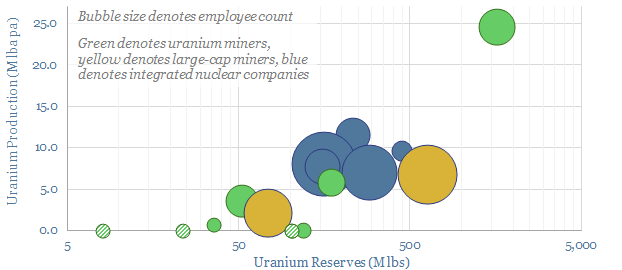

We have screened c20 uranium miners, assessing each company's production, reserves, asset base, size and recent news flow. 10 are publicly listed. Our market outlook is that firm uranium supply may be running 25% short of the level required on our roadmap to net zero.

Download the Screen?

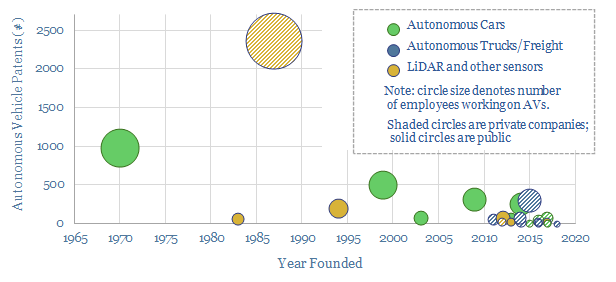

We have screened 25 leading companies in autonomous vehicles (public and private), tabulating their technical progress and proposals for Level 4-5 autonomy. 75% of the companies were founded in the last decade. Leaders are focused on freight, cars, taxis and LiDAR sensing.

Download the Screen?

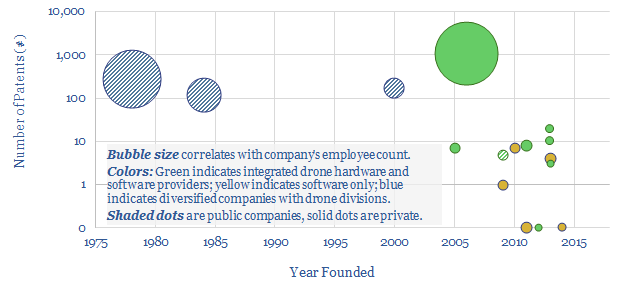

This data-file is a simple screen of companies manufacturing drones and commercializing drone software. It includes 12 private companies and 4 public companies. For each company, we have tabulated their history, geography, number of patent filings and a short description.

Download the Screen?

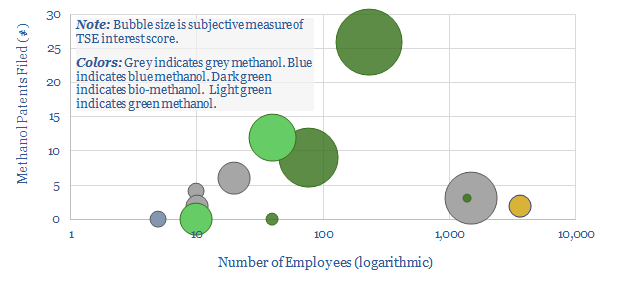

This data-file tabulates details of companies in the methanol value chain. For incumbents, we have quantified market shares. For technology providers, we have simply tabulated the numbers of patents filed. For newer, lower-carbon methanol producers, we have compiled a screen to assess leading options.

Download the Screen?

This screen tracks companies that can improve productivity of agricultural land (so more land is available for reforestation) or increase CO2 uptake rates of plants. It includes large-cap seed and crop protection companies, through to biotech firms, through to indoor farms that achieve 350-400x higher yields per acre.

Download the Screen?

Smart meters and smart devices are capable of transmitting and receiving real-time consumption data and instructions. This data-file tracks 40 leading companies, mostly at the venture and growth stages. They help lower demand, smooth grid volatility and encourage appliance upgrades.

Download the Screen?

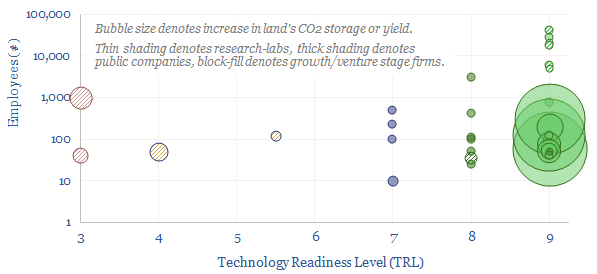

This data-file assesses who has the leading technology for producing industrial hydrogen, but especially blue hydrogen from auto-thermal reformers, after reviewing public disclosures and 750 patents. Companies include Air Liquide, Air Products, Casale, Haldor Topsoe, Johnson Matthey, KBR, Linde, Thyssenkrupp.

Download the Screen?

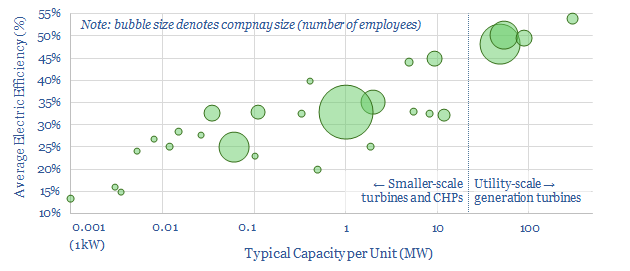

This data-file profiles 30 leading companies in gas turbines and CHPs, from mega-caps such as GE, Siemens and Mitsubishi, down to small-caps and private companies with exciting new technologies. Case studies are also presented, with details on turbine installations.

Download the Screen?

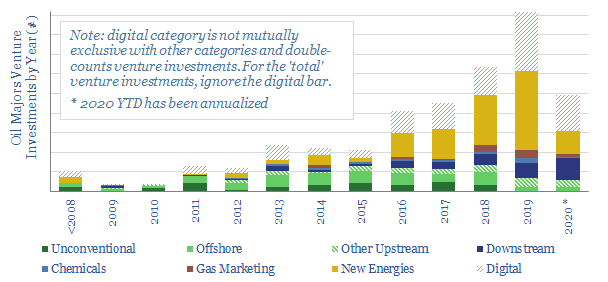

This database tabulates c300 venture investments, made by 9 of the leading Oil Majors. Their strategy is increasingly geared to advancing new energies, digital technologies and improving mobility. Different companies are compared and contrasted, including the full list of venture investments over time.

Download the Screen?

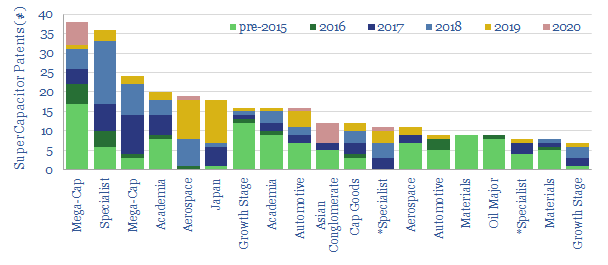

This data-file screens for the 'top twenty' technology leaders in super-capacitors, by assessing c2,000 Western patents filed since 2013. The screen comprises capital goods conglomerates, materials companies, an Oil Major with exposure and specialist companies improving SC energy density.

Download the Screen?

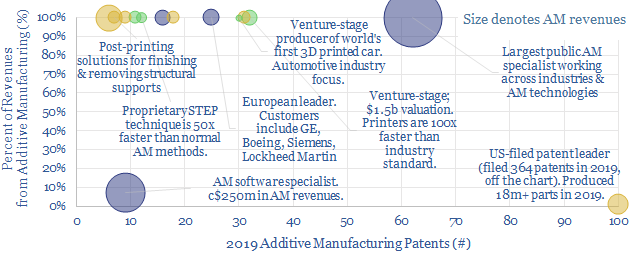

This data-file tabulates 5,500 patents into additive manufacturing (3D printing), in order to identify technology leaders. Patent filings over time show a sharp acceleration, making AM one of the fastest growth areas for the energy transition. We profile 14 concentrated specialists, plus broader Cap Goods and Materials companies.

Download the Screen?

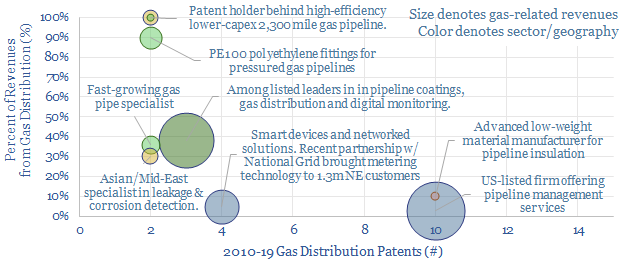

This data-file tracks 800 patents innovating pipeline transportation of natural gas, to screen for exciting technologies and companies. 6 publicly listed firms and 6 venture-stage start-ups stood out from the analysis, commercialising next-generation materials, monitoring methods and optimizing gas distribution.

Download the Screen?

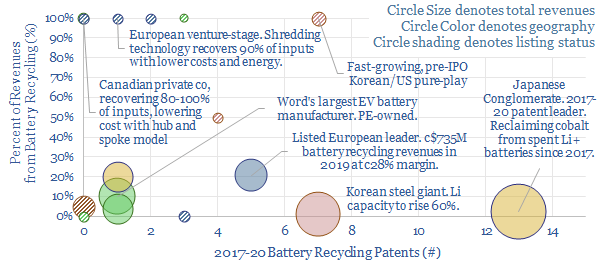

This data-file tracks over 6,000 patents filed into battery recycling technology, escalating at a 15% CAGR since 2000. 18 technology leaders are profiled ex-China, including 6 global, large-cap listed companies and 10 private companies, including some exciting, early-stage concepts to improve material recovery and costs.

Download the Screen?

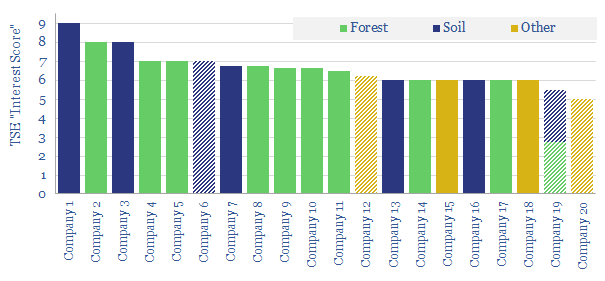

This data-file screens twenty companies measuring and verifying nature-based carbon offsets, in forests and soils. It includes 5 leading private companies at the cutting edge. Traditionally cumbersome, manual methodologies have evolved rapidly, towards technology-driven, real-time remote sensing, to enable the scale-up of nature-based CO2 offsets.

Download the Screen?

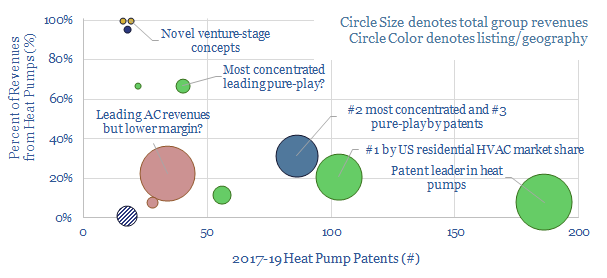

Heat pumps can halve the CO2 intensity of residential heating. Hence we have screened for the leading companies, focusing in upon 4,000 Western-centric patents from 2017-19. The space is competitive. 7 public companies and 4 private companies stand out, with concentrated exposure to the theme.

Download the Screen?

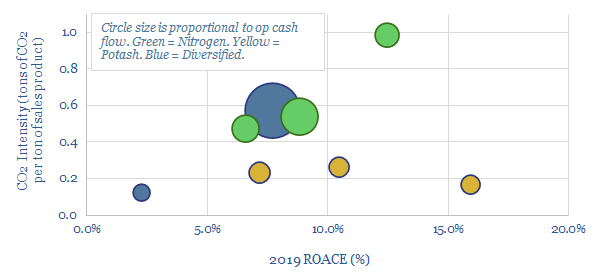

This data-file screens the large, listed fertilizer companies, comparing their CO2 intensity, ROACE, cash flow and recent patent filings. The industry could be disrupted by the rise of conservation agriculture, eroding thee 186MTpa global fertilizer market, which also comprises c1% of global emissions.

Download the Screen?

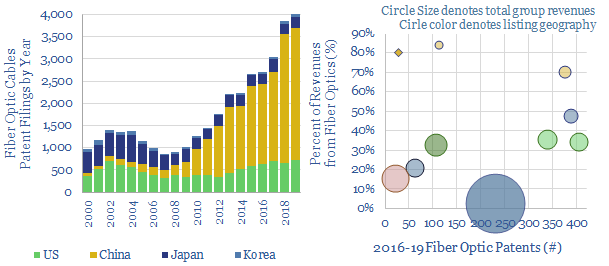

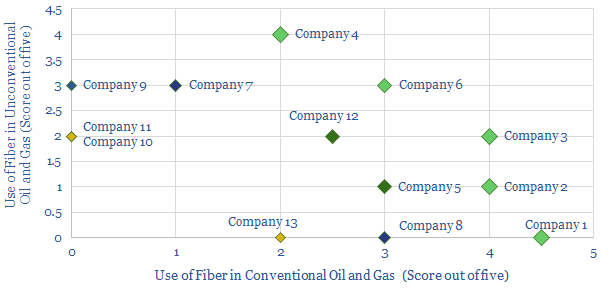

This data-file screens for the technology leaders in fiber-optic cables, which are crucial for the digitization of industries and the world's structural shift towards remote-working, based on screening 37,000 patents. Revenues and market shares are summarized for the leaders.

Download the Screen?

We have compiled a database of 25 leading companies in Redox Flow Batteries, by looking across 1,237 patents since 2017. Exciting progress is visible, with technical maturity rapidly progressing, demonstration facilities under construction and a promise of cost-competitive, long-life, energy storage.

Download the Screen?

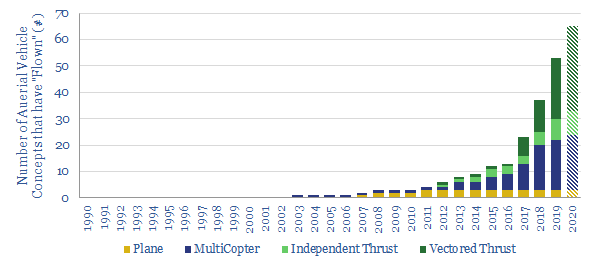

We have updated our database of over 100 companies, which have already flown c50 aerial vehicles (aka "flying cars"), to identify the leading contenders. We categorize each vehicle by fuel type, speed, range, fuel economy and credibility. The data strongly imply aerial vehicles taking off in the 2020s.

Download the Screen?

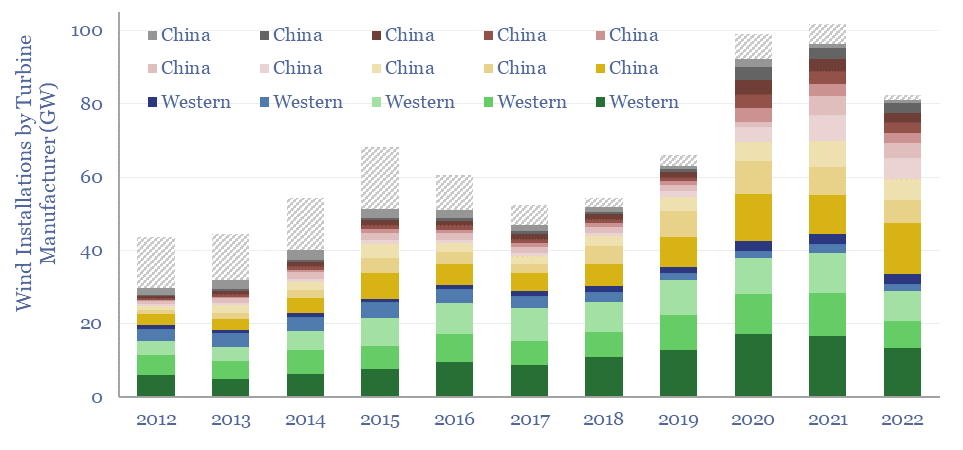

This data-file tracks wind turbine manufacturers, their market shares and their margins. By 2022, fifteen companies account for 98% of global wind turbine installations. This includes large Western incumbents, and a growing share for Chinese entrants, which now comprise over half of the total market, limiting sector-wide operating margins to c3%.

Download the Screen?

This data-file assesses the outlook for 30 plastic pyrolysis companies, operating (or constructing) 100 plants around the world, which use chemical processes to turn waste plastic back into oil. The data-file has been updated in 2023, concluding that the theme is 'on track', but segmented between leaders and setbacks.

Download the Screen?

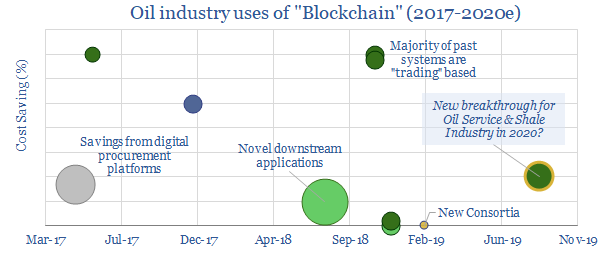

This datafile tabulates ten examples of deploying Blockchain in the oil and gas industry since 2017; including companies and cost savings. Most prior examples are in trading. For 2020, we are particularly excited by the broadening of Blockchain technologies into the procurement industry, which can deflate shale costs.

Download the Screen?

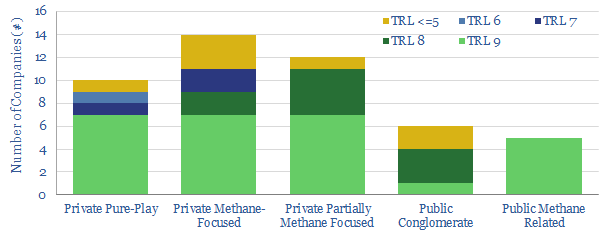

This data-file screens the methods available to monitor for methane emissions. Notes and metrics are tabulated. Emerging methods, such as drones and trucks are also scored, based on technical trials. The best drones can now detect almost all methane leaks >90% faster than traditional methods. c34 companies at the cutting edge are screened.

Download the Screen?

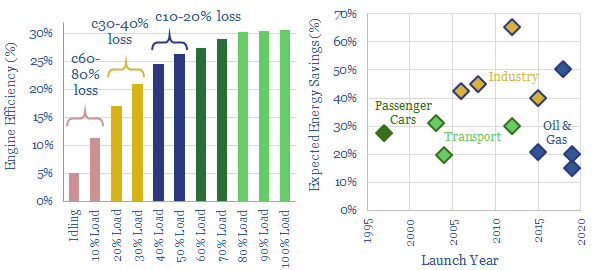

Gas and diesel engines can be 30-80% less efficient when idling, or running at low loads. This is the rationale for hybridizing engines with backup batteries. Industrial applications are increasing, achieving 30-65% efficiency gains, across multiple industries. In 2018-19, the biggest new horizon has been in oil and gas, including hybrid rigs, supply vessels, construction vessels and even LNG plants.

Download the Screen?

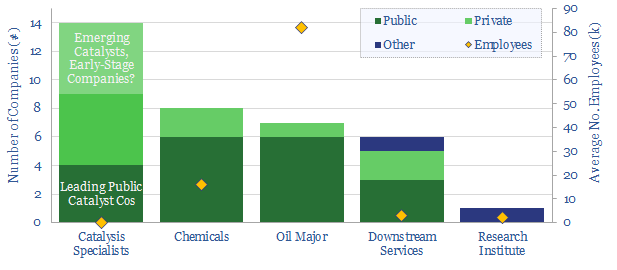

This data-file tabulates headline details of c35 companies commercialising catalysts for the refining industry, in order to improve conversion efficiencies and lower CO2 emissions. Five early-stage private companies stand out, while we also profile which Majors have recently filed the most patents to improve downstream catalysis.

Download the Screen?

This data-file is a global hydrogen market breakdown, disaggregating the 110MTpa market (mainly ammonia, methanol and refining), how it is met via different production technologies, and our estimates of those technologies' costs (in $/kg) and CO2 intensities (in kg/kg or tons/ton).

Download the Screen?

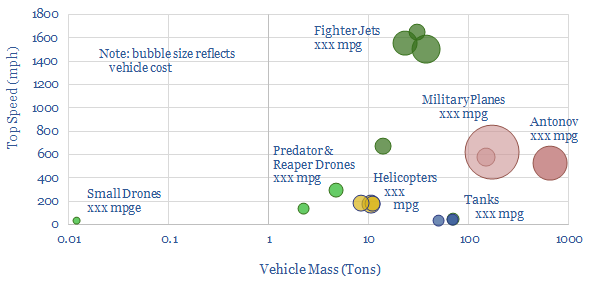

Swarms of drones are emerging as the most devastating military weapon of the 21st century. This was evidenced by the recent attack on Saudi oil infrastructure. But drones' impact on 0.7Mbpd of global military oil demand could be even more devastating. This data-file quantifies their fuel economy at >1,000 mpge compared to today's fighter jets, tanks, helicopters and planes that achieve

Download the Screen?

What if CO2 was not a waste product, but a valuable commercial feedstock? We have assessed the top 27 companies at the cutting edge, commercialising CO2 into next-generation plastics, foams, concretes, specialty chemicals and agricultural products. Each company is assessed in detail. 13 are particularly exciting. 21 are start-ups. Aramco, Chevron, Repsol also screen well.

Download the Screen?

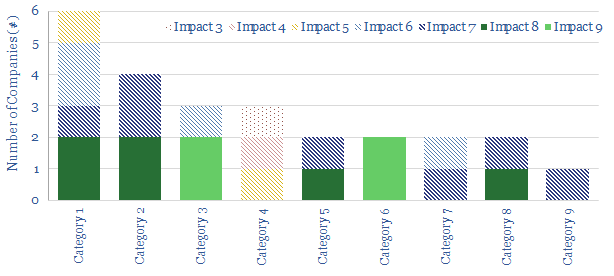

Over 20 next-generation subsea robotics concepts are presented. These electric solutions are increasingly autonomous, they reside subsea and can conduct more thorough inspection/intervention work. Inspection is 2-6x faster, and maintenance costs can be halved, yielding savings of $0.5-1/boe at a typical field. The data-file also summarizes the leading Majors and Service Companies in the space.

Download the Screen?

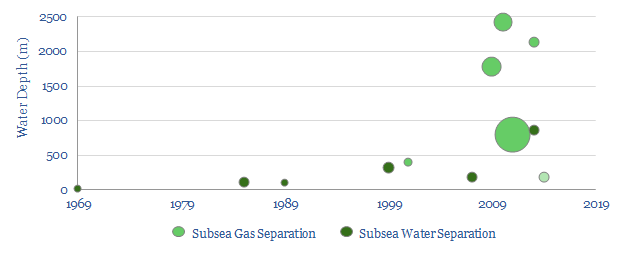

This database covers all 14 subsea separation projects across the history of the oil industry, going back to the "dawn of subsea" in 1969. The technology has been elusive, with just a handful of applications, the largest of which is 2.3MW. This could change, with the pre-salt partners pioneering an unprecedented 6MW facility at Mero.

Download the Screen?

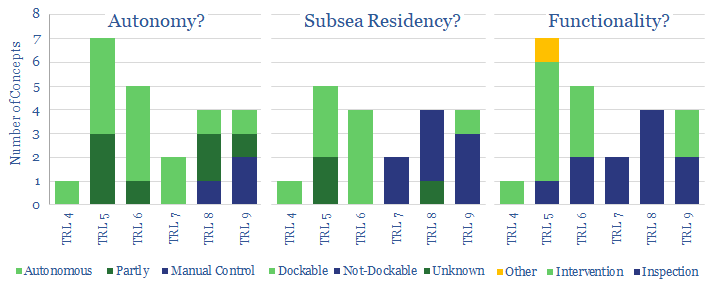

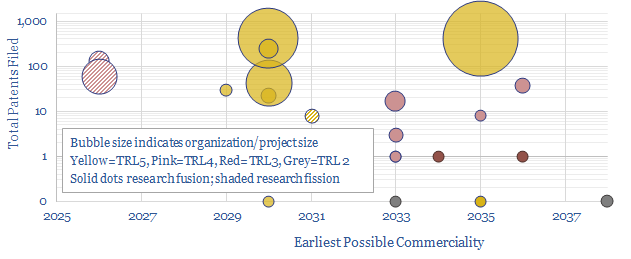

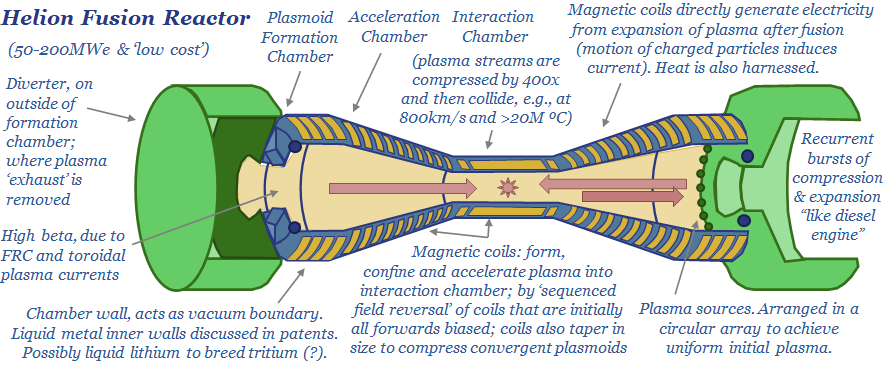

Can next-generation nuclear technologies realistically be factored into long-run forecasts of energy markets or energy-transition? The impacts of nuclear fusion would be vast, and several companies are making exciting progress, but no facility in our sample has yet surpassed TRL6, achieved an "energy gain" or system stability beyond c10 mS - 2mins.

Download the Screen?

This data-file quantifies the leading companies in Distributed Acoustic Sensing (DAS), the game-changing technology for enhancing shale and conventional oil industry productivity. Operators are screened from their patents and technical papers. Services are screened based on their size and their technology.

Download the Screen?

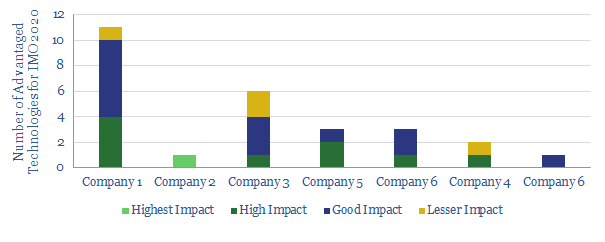

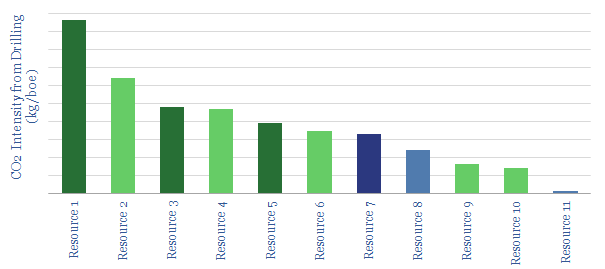

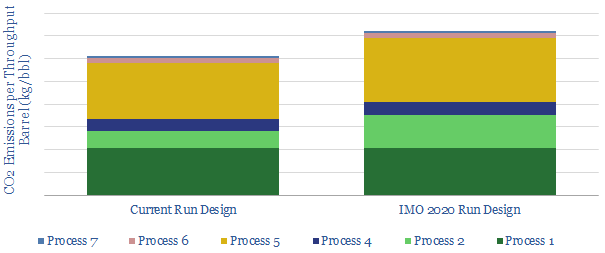

We review the top, proprietary technologies that we have seen from analysing patents and technical papers, to capitalise on IMO 2020 sulphur regulation, across the world's leading integrated oil companies.

Download the Screen?

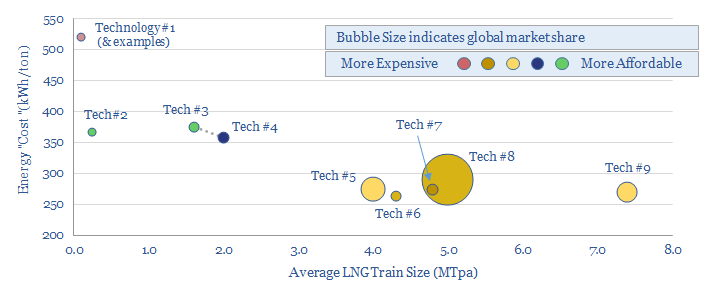

This data-file is an overview of different LNG liquefaction technologies: APCI, APX, Optimised Cascade, Fluid Cascade, DMR, SMR, PRICO and MMLS. A typical LNG liquefaction plant has energy intensity of 280kWh/ton, consuming 5% of the input gas entering the plant, with 20kg/boe of Scope 1&2 CO2 intensity. But efficient and electric-drive compression can lower these penalties by at least 50-75%.

Download the Screen?

Breakthrough Technologies