Everybody knows that “AI data centers use round-the-clock power”. Yet in fact, one of the biggest power challenges for AI data centers is precisely that they do not use round-the-clock power. They incur large load transients that cannot be handled by batteries, power grids or most generation sources. This 15-page report explores data center load profiles, which may require 5-10x more capacitors.

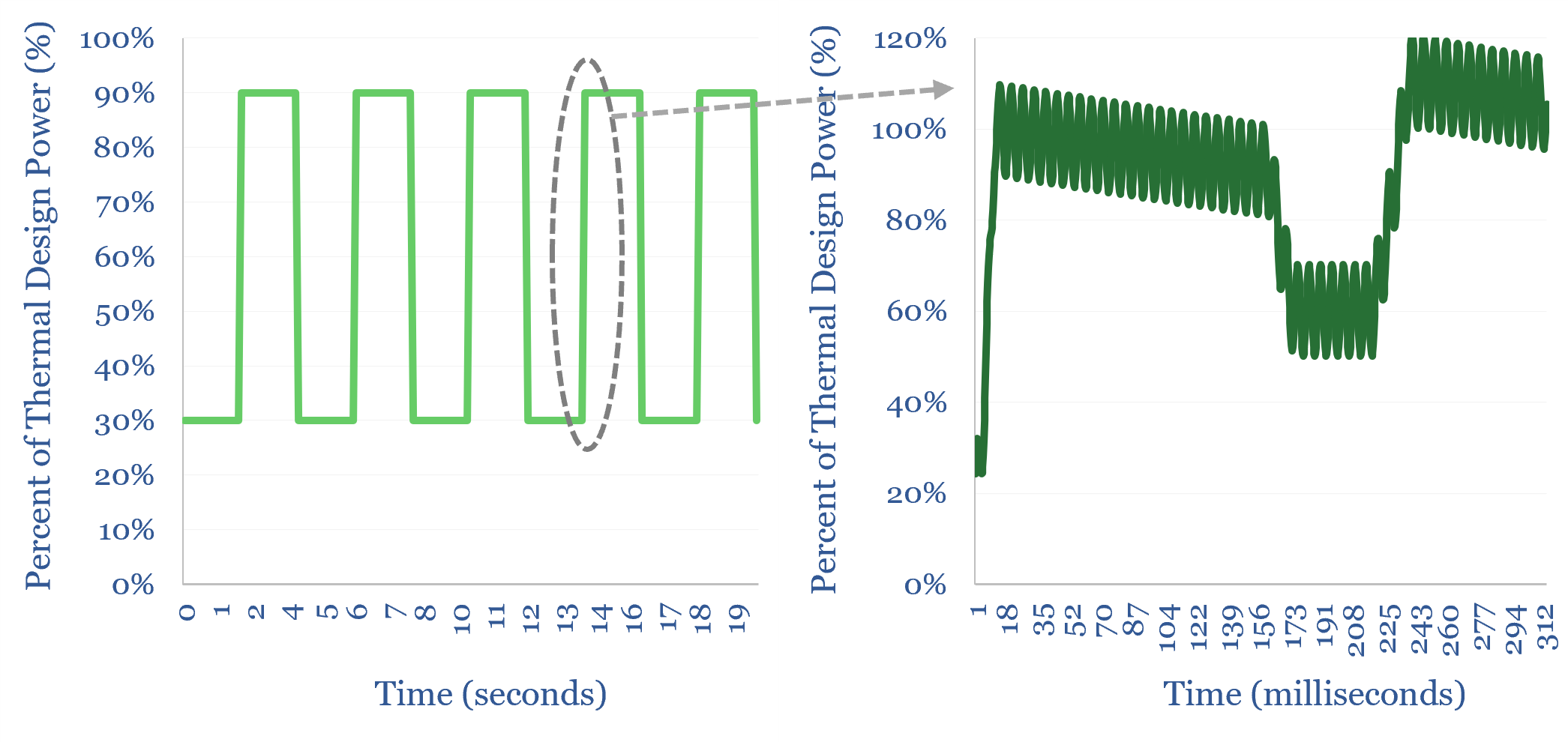

At a granular level, looking second by second or millisecond by millisecond, the key issue is that AI data centers do not draw round-the-clock power, but incur rapid load transients. Examples from technical papers, and reasons for these load transients, are discussed on pages 2-3.

These load profiles would quickly trip conventional power generation, wear out lithium ion batteries, or potentially destabilize entire grids, for the reasons on page 4.

Stepping down the voltage from a medium-voltage input at, say, 15kV to individual chip circuits at 1V is a complex, cascaded process at an AI data-center, covered on pages 5-7.
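To see why the step-down has to be cascaded, it helps to look at the current implied at each voltage level for a fixed amount of power (I = P / V). The sketch below is illustrative only: the intermediate stage voltages and the 1 kW load are assumptions chosen for the arithmetic, not figures from the report, and conversion losses are ignored.

```python
# Illustrative sketch: current at each stage of a hypothetical step-down cascade.
# ASSUMPTIONS (not from the report): the intermediate voltages and the 1 kW load.
STAGE_VOLTAGES_V = [15_000, 480, 48, 12, 1]  # only 15 kV and 1 V appear in the text
LOAD_POWER_W = 1_000.0                       # hypothetical power delivered to one chip


def stage_currents(power_w, voltages_v):
    """Return the current (A) at each voltage for a fixed power, ignoring losses."""
    return [power_w / v for v in voltages_v]


currents = stage_currents(LOAD_POWER_W, STAGE_VOLTAGES_V)

for volts, amps in zip(STAGE_VOLTAGES_V, currents):
    print(f"{volts:>6} V -> {amps:10.3f} A")
```

Under these assumptions, the same 1 kW that needs well under 0.1 A at 15kV needs 1,000 A at 1V, which is one reason the final conversion stages sit so close to the chips.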

Adding capacitors throughout this cascade can buffer the load transients, and smooth out the input power demands for gas-fired generation sources and broader power grids, per pages 8-10.
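The buffering idea can be put in rough numbers using the energy stored in a capacitor, E = ½·C·V². If a bus is allowed to droop from V_max to V_min while the capacitor covers a load step of ΔP for a duration t, the capacitance needed is C = 2·ΔP·t / (V_max² − V_min²). The sketch below applies this formula; the 10 kW step, 10 ms duration and 48 V to 43 V droop window are hypothetical inputs, not values from the report, and the function name is our own.

```python
# Illustrative sketch: capacitance needed to ride through a load transient.
# ASSUMPTIONS (not from the report): all numeric inputs below are hypothetical.

def required_capacitance_f(step_power_w, duration_s, v_max, v_min):
    """Capacitance (F) that supplies step_power_w for duration_s while the
    bus voltage droops from v_max to v_min, ignoring losses and ESR."""
    if not 0 <= v_min < v_max:
        raise ValueError("need 0 <= v_min < v_max")
    energy_j = step_power_w * duration_s          # energy the capacitor must deliver
    return 2.0 * energy_j / (v_max**2 - v_min**2)  # from E = 0.5 * C * (Vmax^2 - Vmin^2)


STEP_POWER_W = 10_000.0  # hypothetical 10 kW load step
DURATION_S = 0.010       # hypothetical 10 ms transient
V_MAX, V_MIN = 48.0, 43.0  # hypothetical allowed droop on a 48 V bus

capacitance_f = required_capacitance_f(STEP_POWER_W, DURATION_S, V_MAX, V_MIN)
print(f"Required capacitance: {capacitance_f:.3f} F")
```

The formula shows the trade-off: a wider allowed voltage droop or a higher bus voltage reduces the capacitance needed for the same transient energy.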

Some hyper-scalers are likely burning through over $1bn pa of batteries, which are partly being used as a backstop for the load profiles of AI data-centers, when really there should be more capacitors and supercapacitors smoothing out loads at the chips, AC buses and DC buses across these facilities.

Across the $30bn pa global capacitor market, therefore, almost every company is now flagging strong demand within AI data-centers, re-accelerating the market, per pages 11-12.

Leading companies in capacitors, and in AI data-center UPS and power quality solutions for smoothing out data center load profiles, are discussed on pages 13-15.