Solar semiconductors have changed the world, converting light into clean electricity. Can thermoelectric semiconductors follow the same path, converting heat into electricity with no moving parts? This 14-page report reviews the opportunity, challenges, efficiency, costs and companies.

MOSFETs: energy use and power loss calculator?

MOSFETs are fast-acting digital switches, used to transform electricity, across new energies and digital devices. MOSFET power losses are built up from first principles in this data-file, averaging 2% per MOSFET, with a range of 1-10% depending on voltage, switching, on resistance, operating temperature and reverse recovery charges.

MOSFETs and other power transistors matter, as they are the basis for solar inverters, wind converters, electric vehicle traction inverters, AC-DC rectifiers, other DC-DC converters, the power supplies to data-servers, AI and other digital devices.

Transistors are digital switches made of semiconductor materials, which allow one circuit to control another. Our overview of semiconductors explains how a transistor works, from first principles, by depicting a bipolar junction transistor (BJT), which is driven by current.

However, it is better to control a transistor using voltage than current. Ambient electrical fields induce currents that can cause current-driven transistors to misfire. Hence MOSFETs and IGBTs are driven by voltage.

MOSFETs were invented at Bell Labs in 1959 and are now the most used power semiconductor device in the world. Something like 2 x 10^22 transistors have been produced across human history by 2023.

MOSFETs: how do they work?

We are sorry to say it, but it is simply not possible to understand how a MOSFET works, without a basic understanding of voltage, current, conduction band electrons, valence band holes, N-type semiconductor, P-type semiconductor and Fermi Levels. Do not despair! To help decision-makers understand these concepts, we have written an overview of semiconductors and an overview of electricity.

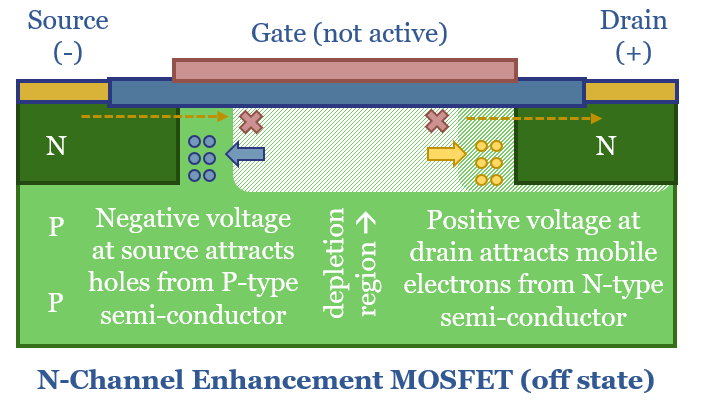

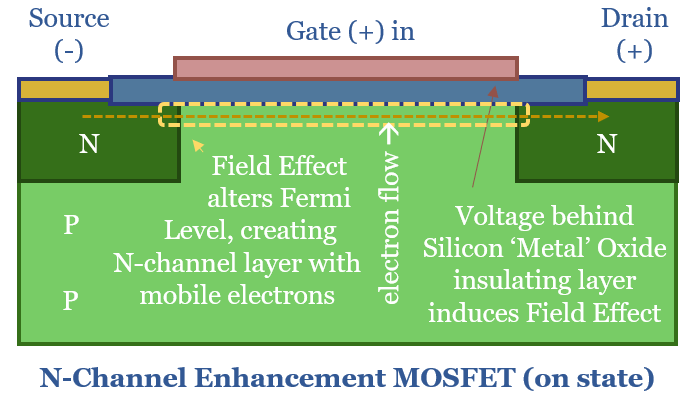

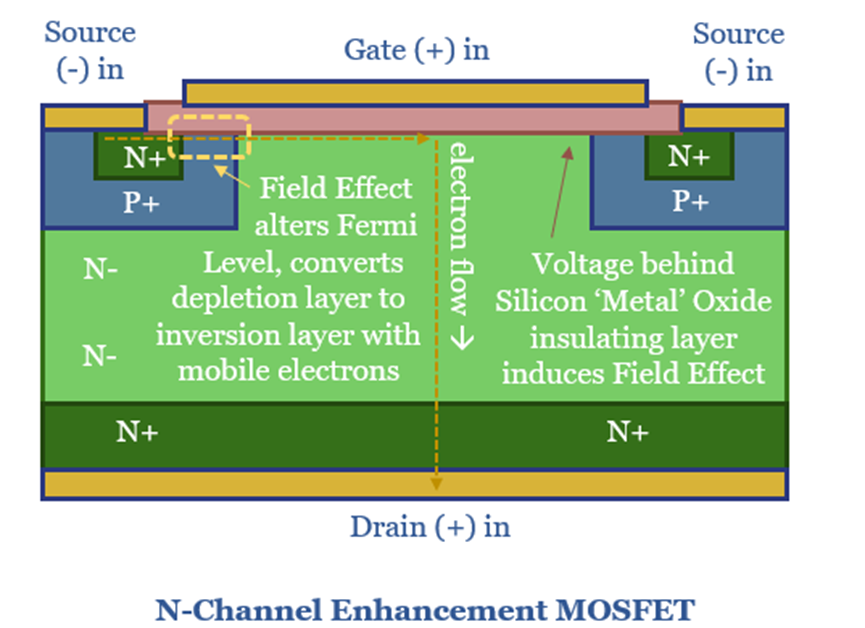

MOSFET stands for Metal Oxide Semiconductor Field Effect Transistor. Actually this is something of a misnomer. The name describes a stack of three layers: a gate electrode (originally metal, although most modern devices use polysilicon), an oxide, and the semiconductor body. The eponymous ‘oxide’ is a highly pure layer of silicon dioxide, grown by oxidizing the silicon to create an insulating layer. In turn, the reason for creating this insulating layer is so that a Field Effect can be induced by a potential difference (voltage) across the gate.

Why can’t current flow through a MOSFET in the off-state? A simplified diagram of an N-channel enhancement MOSFET is shown below. Ordinarily, electrons cannot flow from the source to the drain, due to the PN junction between the body and the drain, which is effectively a reverse-biased diode. A negative voltage at source draws in the mobile holes from the P-type semiconductor. A positive voltage at the drain attracts the mobile electrons in the N-type layer. And this creates a depletion zone where no current can flow, just like in any other diode.

How can current flow through a MOSFET in the on-state? The ‘Field Effect’ occurs when a positive voltage is applied to the gate, raising the Fermi level of the P-type semiconductor. Remember the Fermi Level is the energy level that has a 50% probability of being occupied by an electron. A large enough voltage raises the Fermi Level above the lower bound of the conduction band. Suddenly there is a sea of mobile electrons, forming an N-channel, so that electrons can flow from source to drain.

What power losses in a MOSFET?

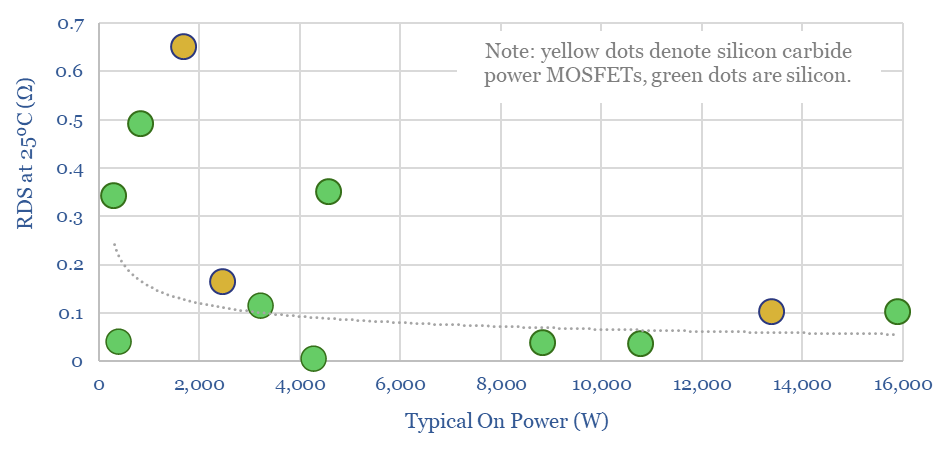

Resistive losses occur when a current flows through a semiconductor. They are proportional to the on-resistance of the semiconductor, and to the square of the current. The on-resistance of different MOSFETs is typically in the range of 0.1-0.6 Ohms, at power ratings of 1-20kW, based on data-sheets from a leading manufacturer, Infineon (as profiled in our screen of SiC and MOSFET companies).

Hence a better depiction of an N-channel enhancement MOSFET follows below. In the chart above, the N-channel through the P-layer is very long and thin, which is going to result in high resistance. Hence in the chart below, the NPN junction is slimmed down, and the on-resistance from source to drain is going to be lower, which helps efficiency.

Raising voltage is also going to reduce I²R conduction losses, because less current is flowing for the same power. However, voltage is limited by a MOSFET’s breakdown voltage. Above this level, the PN junction will fail to block the flow from source to drain, and the MOSFET will be destroyed (avalanche breakdown). The voltage ratings of different MOSFETs are tabulated in our data-file. A clear advantage for silicon carbide power MOSFETs is their higher breakdown voltage, which allows them to be operated at higher voltages across the board, reducing conduction losses.
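To illustrate why higher voltage lowers conduction losses, here is a minimal Python sketch. It is not the data-file's model. The function name and the example inputs (5 kW of throughput, 0.2 Ohms of on-resistance) are assumptions chosen for illustration, with the on-resistance picked from within the 0.1-0.6 Ohm range quoted above.

```python
def conduction_loss_fraction(power_w, voltage_v, r_on_ohm):
    """Fraction of throughput power lost as I^2 * R heating in the on-state."""
    current_a = power_w / voltage_v        # I = P / V
    loss_w = current_a ** 2 * r_on_ohm     # P_loss = I^2 * R
    return loss_w / power_w


# Illustrative inputs only (assumptions, not taken from the data-file)
POWER_W = 5_000
R_ON_OHM = 0.2

for voltage in (200, 400, 800):
    frac = conduction_loss_fraction(POWER_W, voltage, R_ON_OHM)
    print(f"{voltage} V: {frac:.3%} conduction loss")
```

At fixed power and on-resistance, each doubling of voltage halves the current and so cuts the I²R loss to one quarter. In practice, higher-voltage devices tend to have different on-resistances, so this is only a first-order view.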

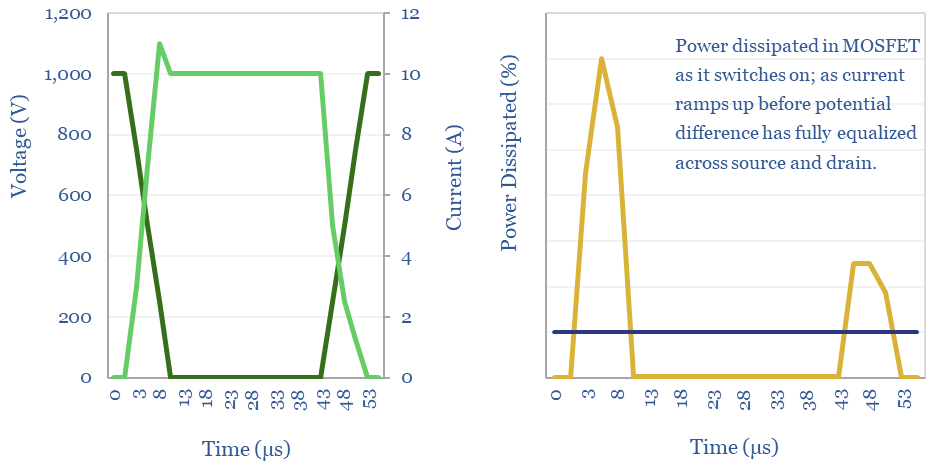

Switching losses are also incurred whenever a MOSFET turns on or off. When the MOSFET is off, there is a large potential difference (voltage) between the source and the drain. When the MOSFET turns on, current flows in while the voltage is still high, which dissipates power. And then the voltage falls when current flow is high, which dissipates power. The same effect happens in reverse when the MOSFET is switched off. These losses add up, as the pulse width modulation in an inverter will often exceed 20kHz frequency. And the latest computer chips run with a clock speed in the GHz. Minimizing switching losses is the rationale for soft switching, being progressed by companies such as Hillcrest.

A reverse recovery loss is also incurred, because every time a MOSFET switches on in a typical bridge circuit, the body diode of the opposing MOSFET, which had been conducting, needs to flip from forward bias to reverse bias. This physically requires sweeping out stored charge carriers, or in other words, requires flowing a current. The reverse recovery loss can often be the largest single loss on a MOSFET.
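The switching and reverse recovery losses described above can be approximated with common first-order textbook formulae, sketched below in Python. These are assumptions for illustration and may differ from the formulae in the data-file: the switching loss assumes a linear (triangular) overlap of voltage and current during each transition, and the reverse recovery loss assumes the full recovery charge is moved across the full voltage on every cycle. All input values are hypothetical.

```python
def switching_loss_w(voltage_v, current_a, t_on_s, t_off_s, freq_hz):
    """Overlap loss: 0.5 * V * I * (t_on + t_off) per cycle, times frequency."""
    energy_per_cycle_j = 0.5 * voltage_v * current_a * (t_on_s + t_off_s)
    return energy_per_cycle_j * freq_hz


def reverse_recovery_loss_w(voltage_v, q_rr_c, freq_hz):
    """Recovery loss: charge Q_rr moved across voltage V once per cycle."""
    return voltage_v * q_rr_c * freq_hz


# Hypothetical operating point (assumptions, not data-sheet values)
V, I, F = 400.0, 10.0, 20_000.0      # volts, amps, hertz
T_ON, T_OFF = 20e-9, 20e-9           # seconds
Q_RR = 1e-6                          # coulombs

p_sw = switching_loss_w(V, I, T_ON, T_OFF, F)
p_rr = reverse_recovery_loss_w(V, Q_RR, F)
print(f"switching loss: {p_sw:.2f} W, reverse recovery loss: {p_rr:.2f} W")
print(f"share of {V * I:.0f} W throughput: {(p_sw + p_rr) / (V * I):.2%}")
```

Both terms scale linearly with switching frequency, which is why losses rise at higher switching speeds. With these particular inputs the reverse recovery term is several times larger than the overlap term, though the balance depends entirely on the device.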

Transistors: IGBTs vs MOSFETs?

IGBT stands for Insulated Gate Bipolar Transistor, which is another transistor design that has been heavily used in solar, wind, electric vehicles and other new energies applications.

An IGBT is effectively a MOSFET coupled with a Bipolar Junction Transistor, to improve the current controlling ability.

IGBTs are generally more expensive than MOSFETs, and can handle higher currents at lower losses. However when switching speeds are high (above 20kHz), MOSFETs have lower losses than IGBTs, because IGBTs have slow turn off speeds with higher tail currents.

Finally, in the past, it was suggested that IGBTs performed better than MOSFETs above breakdown voltages of 400V, although this is now more nuanced, as there are many high-performance MOSFETs with voltages in the range of 600-2,000 V.

The very highest voltage IGBTs and MOSFET modules we have seen are in the range of 6-12 kV. This explains why so much of new energies requires generating at low-medium voltage then using transformers to step up the power for transmission; or conversely using transformers to step down the voltage for manipulation via power electronics modules.

Formulae for the losses in a power MOSFET?

This data-file aims to calculate the power losses of a power MOSFET from first principles, covering I²R conduction losses, voltage drops across the diode, switching losses and reverse recovery losses, so that important numbers can be stress tested.

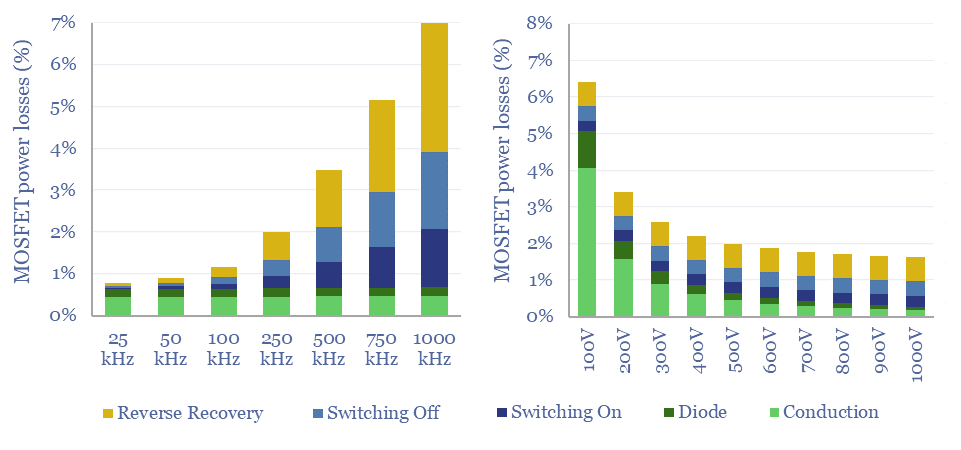

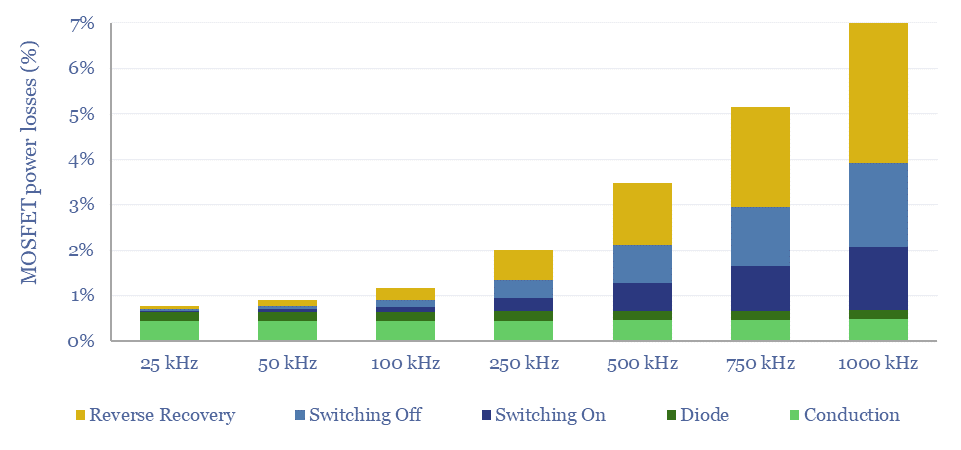

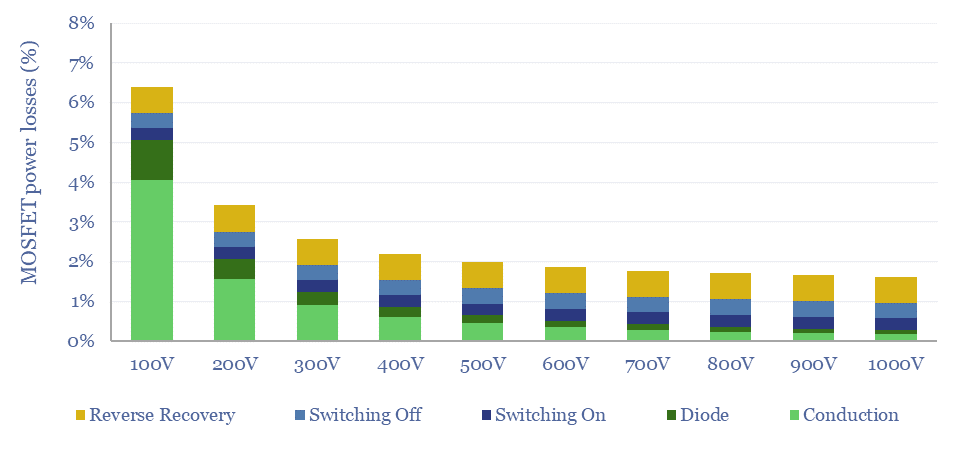

Generally, the losses through a MOSFET will range from 1-10%, with a base case of 2% per MOSFET. These numbers consist of conduction losses, voltage drops across the diode layer, switching losses and reverse recovery charges.

Losses add. Many circuit designs contain multiple MOSFETs, or layers of MOSFETs and IGBTs (example below). Roughly, flowing power through 6 MOSFETs, each at c2% losses, explains why the EV fast-charging topology depicted below might have losses in the range of 10-20%.
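As a rough check on the arithmetic above, the Python sketch below compounds a per-device loss across several devices in series. The function name is hypothetical, and treating every stage as identical and independent is a simplifying assumption.

```python
def cumulative_loss(per_stage_loss, n_stages):
    """Total fractional loss after n identical stages in series."""
    surviving_fraction = (1.0 - per_stage_loss) ** n_stages
    return 1.0 - surviving_fraction


for per_stage in (0.02, 0.03, 0.035):
    total = cumulative_loss(per_stage, 6)
    print(f"6 stages at {per_stage:.1%} each: {total:.1%} total loss")
```

Strictly, losses compound multiplicatively rather than add, although at low loss rates the two are close: six stages at 2% each lose about 11.4%, versus 12% from simple addition. Per-stage losses of 2-3.5% span roughly the 10-20% range quoted above.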

Power losses in a MOSFET rise as a function of higher switching speeds, as calculated in the data-file, shown in the chart below, and for the reasons stated above. High switching speeds produce a higher quality power signal, but are also more energetically demanding.

Power losses in a MOSFET fall as a function of voltage, as calculated in the data-file, shown in the chart below, and for the reasons stated above. That said, lower voltage MOSFETs face less electrically demanding conditions and are less expensive.

Overall, our model is intended as a simple, 30-line calculator to compute the likely power flow, electricity use and losses in a MOSFET. This should enable decision makers to ballpark the loss rates of MOSFETs, and power electronic devices containing them.

However, interaction effects are severe. Drain current, breakdown voltage, gate voltage, temperature, on resistance, reverse recovery charges and all of the switching times depend on one-another. Hence for specific engineering of MOSFETs it is better to consult data-sheets.

Semiconductor manufacturers also stood out in our recent review of market concentration versus operating margins.

Energy Recovery Inc: pressure exchanger technology?

A pressure exchanger transfers energy from a high-pressure fluid stream to a low-pressure fluid stream, and can save up to 60% input energy. Energy Recovery Inc is a leading provider of pressure exchangers, especially for the desalination industry, and increasingly for refrigeration, air conditioners, heat pump and industrial applications. Our technology review finds a strong patent library and moat around Energy Recovery’s pressure exchange technology.

Energy Recovery Inc was founded in 1992. It is headquartered in California and listed on NASDAQ, with 250 employees and $1.3bn of market cap at the time of writing. Financial performance in 2022 yielded $126M of revenues, a 70% gross margin and a 20% operating margin.

The PX Pressure Exchanger is Energy Recovery Inc’s core product. It transfers pressure energy from a high pressure fluid stream to a low pressure fluid stream at 98% efficiency, yielding up to 60% energy savings in specific contexts. The company aims to grow revenues as much as 5x in the next half-decade due to increasing need for global energy efficiency.

Pascal’s Law states that pressure applied to a confined fluid is transmitted undiminished throughout that fluid. Hence bringing a high pressure and low pressure fluid into contact will result in their pressures equalizing, with minimal mixing. This principle is used in pressure exchangers. As a rotor rotates, it brings a low pressure fluid A into contact with a high pressure fluid B, equilibrating their pressure, then discharging fluid A at higher pressure.

For example in a desalination plant, incoming seawater at 1-3 bar of pressure is pressurized up to 40-80 bar using pumps, pushing it across a membrane that is porous to water but not to dissolved salts. Energy remains in this 40-80 bar concentrate stream. It is better to recover this energy than blast it back into the Ocean! Thus pressure exchange can lower the energy requirements of desalination by as much as 60%.
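A first-order sketch of where a saving of up to 60% could come from is given below in Python. It assumes that, without energy recovery, the high-pressure pump must pressurize the entire feed, while with a pressure exchanger the concentrate stream hands its pressure to an equal volume of incoming feed. The recovery ratios (the share of feed that passes through the membrane as fresh water) are hypothetical inputs, and pump efficiencies, booster pumps and friction losses are ignored. The 98% transfer efficiency is the figure quoted earlier for the PX.

```python
def px_energy_saving(recovery_ratio, px_efficiency=0.98):
    """Fraction of high-pressure pumping energy avoided by pressure exchange.

    recovery_ratio: share of feed water that becomes permeate (assumed input).
    px_efficiency: share of concentrate pressure energy passed to the feed.
    """
    concentrate_fraction = 1.0 - recovery_ratio
    return concentrate_fraction * px_efficiency


for recovery in (0.40, 0.45, 0.50):
    saving = px_energy_saving(recovery)
    print(f"recovery ratio {recovery:.0%}: pumping energy saved ~{saving:.0%}")
```

Under these assumptions, a plant recovering 40% of its feed as fresh water leaves 60% of the pressurized flow in the concentrate, and returning 98% of that energy saves just under 60% of the pumping energy.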

Energy Recovery Inc’s patents note that rotary pressure exchangers were first invented in the 1960s, progressed in the 1990s, but prior to its own designs, the company argues that no one had designed efficient and reliable systems, which could run without an external motor to rotate them, achieved by optimizing the shape of the flow channels.

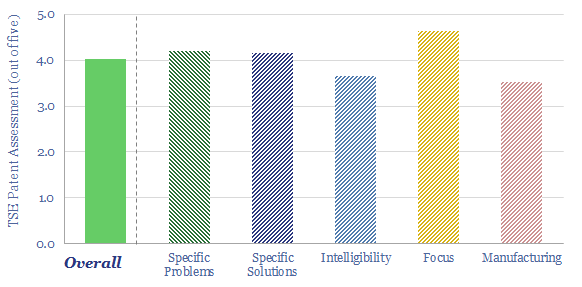

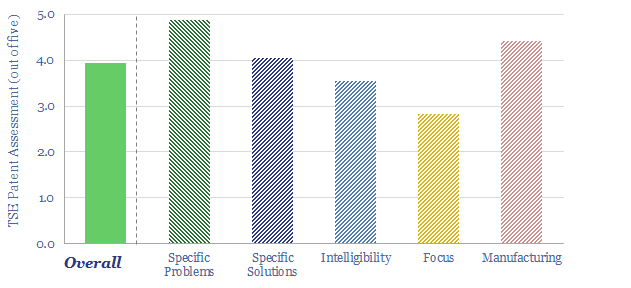

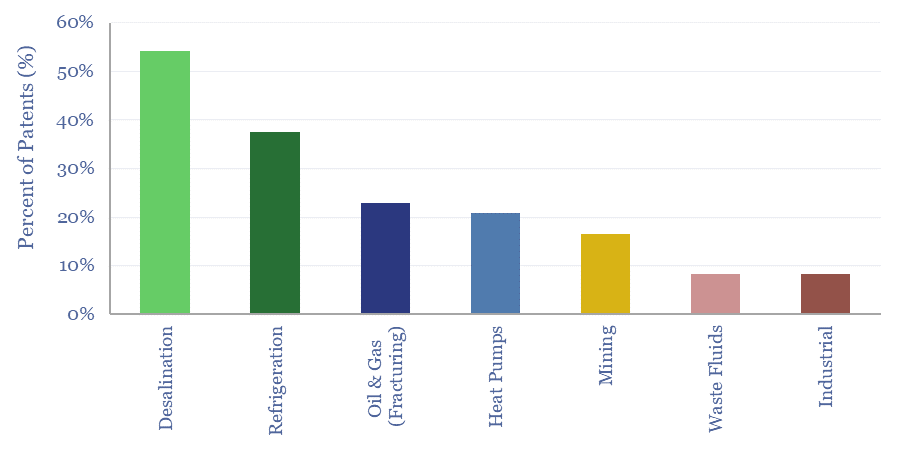

Our technology review found 65 patent families from Energy Recovery Inc. Overall, we think the patent library is high-quality and the company will retain a moat and leadership in the pressure exchange market, based on its patents and historical experience, although some early patents are coming up to expiry. Details are in the data-file.

Desalination has been Energy Recovery’s core market historically. However new markets are emerging, from cryogenic cycles through to applications focused on shale (although the latter requires avoiding the corrosive impacts of sand and debris in fluid streams).

Refrigeration, air conditioning and heat pumps are seen as a growing source of demand. One patent notes that regulation is increasingly phasing out HFCs that can have 13,000x higher GWPs than CO2, and these systems use CO2 as the refrigerant instead. However CO2 based refrigeration cycles have maximum pressures of 1,500 psi or greater, compared to HFC/CFC systems at 200-300 psi. This makes the energy savings from pressure exchange increasingly important. The pressure exchanger siphons away a portion of the evaporated refrigerant and re-pressurizes it using high-pressure refrigerant taken downstream of the heat rejection stage, before the expansion valve stage.

LEDs: seeing the light?

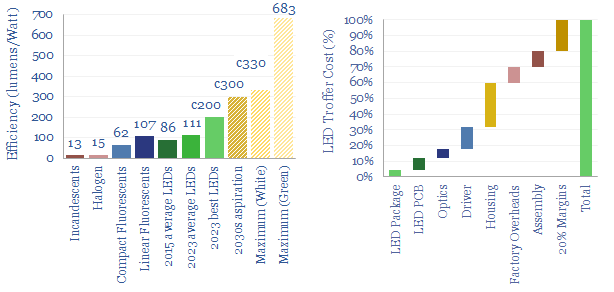

Lighting is 2% of global energy, 6% of electricity, 25% of buildings’ energy. LEDs are 2-20x more efficient than alternatives. Hence this 16-page report is our outlook for LEDs in the energy transition. We think LED market share doubles to c100% in the 2030s, to save energy, especially in solar-heavy grids. But demand is also rising due to ‘rebound effects’ and use in digital devices. We have screened 20 mature and (mostly) profitable pure plays.

LED lighting: leading companies in LEDs?

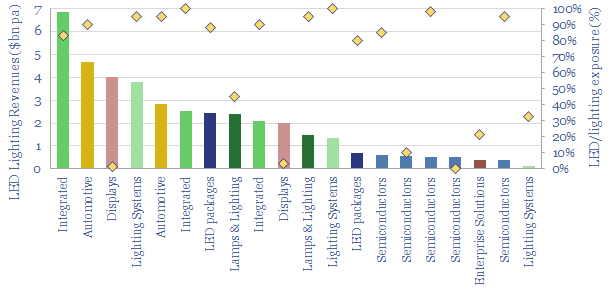

20 leading companies in LED lighting are compared in this data-file, mostly mid-caps with $2-10bn market cap and $1-8bn of lighting revenues, listed in the US, Europe, Japan, Taiwan. In 2022, operating margins averaged 8%, due to high competition, fragmentation and inorganic activity. The value chain ranges from LED semiconductor dies to service providers installing increasingly efficient lighting systems as part of the energy transition.

The global LED industry is worth $80bn per year, with LEDs used in lighting indoor and outdoor spaces, in the automotive industry and to back-light the screens associated with the rise of the internet.

Leading companies in LED lighting range from specialists manufacturing semiconductor dies, to other specialists combining these components with other conductors and phosphors into LED packages, others encasing this product into LED lamps, others adding further drivers and housings to yield LED luminaires, and others ultimately selling entire lighting systems.

Integration versus fragmentation. Some companies are involved in the entire, integrated value chain discussed above, while others specialise in specific parts, e.g., die/phosphor specialists upstream, or enterprise solutions businesses that design and implement overall lighting systems for corporate customers.

This data-file profiles 20 leading companies in LED lighting and the broader lighting industry. For each company, we have quantified size, recent financial metrics (e.g., revenue, operating margins), estimated exposure to the LED lighting industry and tabulated key notes. Backup tabs in the file cover LED costs, LED payback periods and LED efficiency.

Competition is high in the LED lighting industry and the average company reported an 8% operating margin in 2022.

Fragmentation is high, as the largest company in the screen derives just $7bn per annum of LED-related revenues. All 20 companies in our screen generated c$40bn of revenue in 2022.

Company sizing is therefore more skewed towards mid-cap and smaller-cap names than other company screens we have undertaken, with the larger companies in the screen having market caps in the range of $2-10bn.

Acquisition activity in the LED lighting industry has been high, and half a dozen of the companies in our screen have recently changed hands or been spun out. Details are in the data-file. For example, Philips Lighting was IPO’ed as Signify in 2016 and remains the largest integrated LED lighting company in the world.

Industry leaders in LED lighting include listed companies in the Netherlands, Japan, the US, Taiwan, China, Austria and Germany.

AirJoule: Metal Organic Framework HVAC breakthrough?

Montana Technologies is developing AirJoule, an HVAC technology that uses metal organic frameworks, to lower the energy costs of air conditioning by 50-75%. The company is going public via SPAC and targeting first revenues in 2024. Our AirJoule technology review finds strong rationale for next-gen sorbents in cooling, good details in Montana’s patents, and challenges that decision-makers may wish to explore further.

The global HVAC market is worth $355bn per year, and air conditioning consumes 2,000 TWh per year, or 7% of all global electricity.

Each one of the world’s 1.7bn vehicles also has an in-built air conditioner, and in hot/congested conditions, the AC can sap 70% of the range of an electric vehicle.

Typical air conditioners have a coefficient of performance (COP) of 2.5-3.5x, which means that each kWh of electricity inputs will deliver 2.5-3.5 kWh-th of cooling energy.

Cooling energy, in turn, is used to reject heat from air (about one-third of the total) and to reject heat from the inevitable condensation of water, as air cools down (about two-thirds of the total). To reiterate: (a) cooler air can hold less water; (b) hence some water will condense as air is cooled; (c) condensation of water releases energy (called the latent heat of vaporization, and running at 41 kJ/mol, or 640 Wh/liter); (d) this adds to the cooling load that the air conditioner needs to provide; and (e) it does so by a factor of 1-2x more than the cooling load needed to cool down the air in the first place (governed by the specific heat of air, or 1.0 kJ/kg-K). For more details, please see our overview of air conditioning energy demand.
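The unit conversion behind the latent heat figure can be checked with a short Python sketch. The 41 kJ/mol input is from the text above. The molar mass of water (18.015 g/mol) and a density of 1 kg per liter are standard values assumed here, and the function name is hypothetical.

```python
LATENT_HEAT_KJ_PER_MOL = 41.0     # from the text
MOLAR_MASS_G_PER_MOL = 18.015     # water, standard value (assumption)
DENSITY_KG_PER_LITER = 1.0        # liquid water, approximate (assumption)
KJ_PER_WH = 3.6                   # 1 Wh = 3.6 kJ


def latent_heat_wh_per_liter(kj_per_mol=LATENT_HEAT_KJ_PER_MOL):
    """Convert a molar latent heat of water into Wh per liter."""
    kj_per_kg = kj_per_mol / MOLAR_MASS_G_PER_MOL * 1000.0
    wh_per_kg = kj_per_kg / KJ_PER_WH
    return wh_per_kg * DENSITY_KG_PER_LITER


print(f"{latent_heat_wh_per_liter():.0f} Wh/liter")
```

This gives a little over 630 Wh/liter, which is consistent with the rounded 640 Wh/liter quoted above. The exact value depends on temperature.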

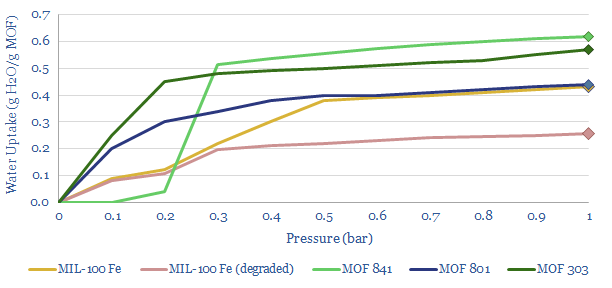

Metal Organic Frameworks are a type of sorbent with a high surface area, able to adsorb water from air. To re-release that water, a vacuum pump can be used to lower the pressure (chart below). Effectively this is a swing adsorption process rather than a cryogenic process.

How does AirJoule technology work? This data-file pieces together details from Montana’s disclosures and patent filings. In particular, the work covers five core patents describing a Latent Energy Harvesting System, using Metal Organic Frameworks to adsorb and re-vaporize atmospheric water; test stable MOFs, and novel methods for manufacturing those MOFs.

What COP for AirJoule? After reflecting the loads of the vacuum pump, other fans and blowers, heat transfer from an adsorption chamber to a desorption chamber, and auxiliary loads, AirJoule is targeting a total electricity use of 60-90 Wh/liter of water. Hence if water is adsorbed, then desorbed, then misted, it will absorb 640Wh/liter as it re-vaporizes (see above). Divide 640Wh/liter by 60-90Wh/liter, and you derive a coefficient of performance of 7-10x. Or in other words, the energy consumption will be 50-75% below a typical air conditioner. More details are in the data-file.
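The arithmetic in the paragraph above can be reproduced with the short Python sketch below. The inputs are the figures quoted in the text (640 Wh/liter of latent heat, 60-90 Wh/liter of electricity, and a conventional COP of 2.5-3.5x). The function names are hypothetical, and this is a sanity check rather than Montana's own calculation.

```python
LATENT_HEAT_WH_PER_LITER = 640.0   # from the text


def cop(electricity_wh_per_liter):
    """Cooling delivered per unit of electricity consumed."""
    return LATENT_HEAT_WH_PER_LITER / electricity_wh_per_liter


def saving_vs_conventional(cop_new, cop_conventional):
    """Fractional cut in electricity for the same cooling load."""
    return 1.0 - cop_conventional / cop_new


for wh_per_liter in (90.0, 60.0):
    print(f"{wh_per_liter:.0f} Wh/liter -> COP of {cop(wh_per_liter):.1f}x")

# Bracket the savings using the rounded 7-10x range and a 2.5-3.5x baseline
print(f"low case:  {saving_vs_conventional(7.0, 3.5):.0%} less electricity")
print(f"high case: {saving_vs_conventional(10.0, 2.5):.0%} less electricity")
```

The low case compares the weakest AirJoule target against the best conventional unit, and the high case does the reverse, which brackets the 50-75% range.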

What are the key challenges for AirJoule technology? We see five thematic challenges for using MOFs and sorbents in cooling systems, and two specific challenges based on reviewing Montana’s patents.

Overall, we think there is very strong potential for next-gen sorbents, and metal organic frameworks, across cooling and other industrial processes, as described in our recent research note here. Key conclusions into Montana’s technology are in our AirJoule technology review below.

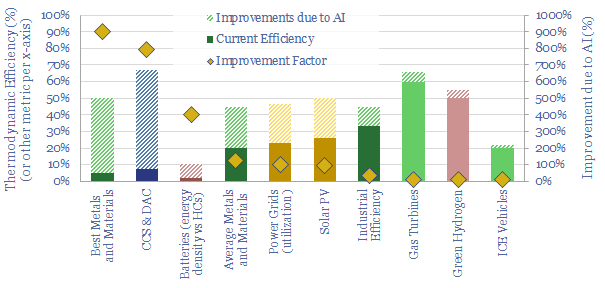

Omniscience: how will AI reshape the energy transition?

AI will be a game-changer for global energy efficiency. It will likely save 10x more energy than it consumes directly, closing ‘thermodynamic gaps’ where 80-90% of all primary energy is wasted today. Leading corporations will harness AI to lower their costs and accelerate decarbonization. This 19-page note explores the opportunities.

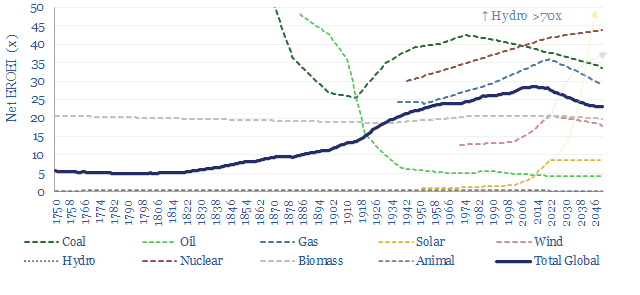

EROEI: energy return on energy invested?

Net EROEI is the best metric for comparing end-to-end energy efficiencies, explored in this 13-page report. Wind and solar currently have EROEIs that are lower and ‘slower’ than today’s global energy mix, stoking upside to energy demand and capex. But future wind and solar EROEIs could improve 2-6x. This will be the make-or-break factor determining the ultimate share of renewables.

Energy efficiency: a riddle, in a mystery, in an enigma?

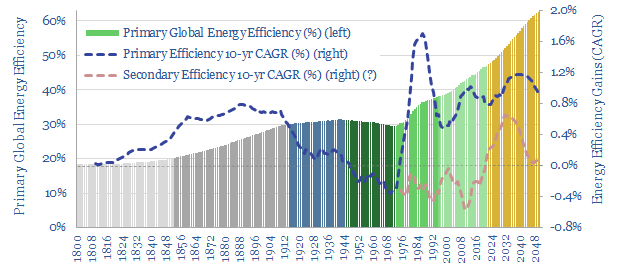

Projections of future global energy demand depend on energy efficiency gains, which are hoped to step up from <1% per year since 1970, to above 3% per year to 2050. But there is a problem. Energy efficiency is vague. And hard to measure. This 17-page report explores three different definitions. We are worried that global energy demand will surprise to the upside as efficiency gains disappoint optimistic forecasts.

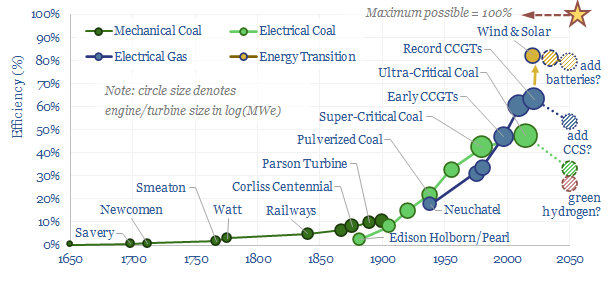

Prime movers: efficiency of power generation over time?

How has the efficiency of prime movers increased across industrial history? This data-file profiles the continued progress in the efficiency of power generation over time, from 1650 to 2050e. As a rule of thumb, the energy system has shifted to become ever more efficient over the past 400 years.

In the early industrial revolution, mechanical efficiency ranged from 0.5-2% at coal-fired steam engines of the 18th and 19th centuries, most famously Newcomen’s 3.75kW steam engine of 1712. This is pretty woeful by today’s standards. Yet it was enough to change the world.

Electrical efficiency started at 2% in the first coal-fired power stations built from 1882, starting at London’s Holborn Viaduct and Manhattan’s Pearl Street Station, rising to around 10% by the 1900s, and to 30-50% at modern coal-fired power generation using pulverised coal, then sub-critical, super-critical and ultra-super-critical steam.

The first functioning gas turbines were constructed in the 1930s, but suffered from high back work ratios and were not as efficient as coal-fired power generation of the time. Gas turbines are inherently more efficient than steam cycles. But realizing the potential took improvements in materials and manufacturing. And the best recuperated Brayton cycles now surpass 60% efficiency in world-leading combined cycle gas turbines.

Renewables, such as wind and solar, offer another step-change upwards in efficiency, and will harness over 80% of the theoretically recoverable energy blowing in the wind and in diffuse sunlight (i.e., relative to the Betz Limit and Shockley-Queisser limit, respectively).

There is a paradox about many energy transition technologies. Long-term battery storage and green hydrogen would depart quite markedly from the historical trend of ever-rising energy efficiency in power cycles. Likewise, there are energy penalties for CCS.

The data-file profiles the efficiency of power generation over time, noting 15 different technologies, their year of introduction, typical size (kW), mechanical efficiency (%), equivalent electrical efficiency (%) and useful notes about how they worked and why they matter.