Groq has developed LPUs for AI inference, which are up to 10x faster and 80-90% more energy efficient than today’s GPUs. This 8-page Groq technology review assesses Groq’s patent moat, LPU costs, the implications for our AI energy models, and whether Groq could ever dethrone NVIDIA’s GPUs.

Groq is a private company founded in 2018 by ex-Google engineers. It is based in Mountain View, California, and has 250 employees. The company raised a $200M Series C in 2021 and a $640M Series D in August 2024, which valued it at $2.8bn.

The Groq LPU is already in use by “leading chat agents, robotics, FinTech, and national labs for research and enterprise applications”. You can try out Meta’s Llama3-8b running on Groq LPUs here.

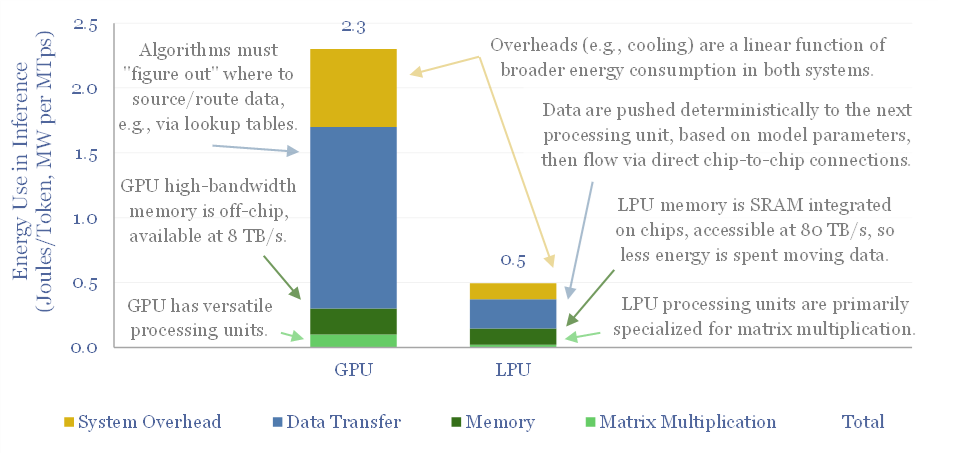

Groq is developing AI inference engines, called Language Processing Units (LPUs), which differ from GPUs in important ways. The key differences are outlined on pages 2-3 of this report.

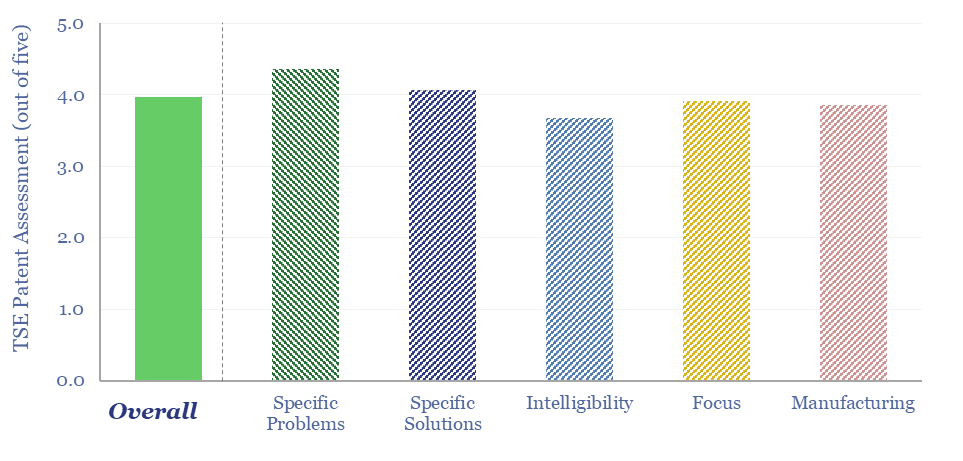

Across our research, we have generally used a five-point framework to determine which technologies we can start de-risking in our energy transition models. For Groq, we found 46 patent families and reviewed ten of them (chart below). Our findings are on pages 4-5.

Our latest published models for the energy consumption of AI assumed an additional 1,000 TWh of electricity use by 2030, within a possible range of 300-3,000 TWh, derived by taking the energy consumption of computing back to first principles. Groq’s impact on these numbers is discussed on pages 6-7.
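To make the sensitivity of these numbers concrete, here is a hypothetical back-of-envelope sketch. It is not the model used in this report. It assumes that “80-90% more energy efficient” means 80-90% less electricity for the same inference work, and it introduces a hypothetical `lpu_share` parameter for the fraction of AI electricity demand that shifts onto LPU-like hardware. Both inputs are illustrative assumptions, not findings.

```python
# Hypothetical back-of-envelope sketch: NOT the report's energy model.
BASE_CASE_TWH = 1_000              # additional AI electricity use by 2030 (base case in the text)
LOW_TWH, HIGH_TWH = 300, 3_000     # possible range quoted in the text


def scaled_demand(base_twh: float, lpu_share: float, energy_saving: float) -> float:
    """Return AI electricity demand (TWh) if a fraction `lpu_share` of the work
    moves to hardware that uses `energy_saving` (0-1) less energy per unit of work.

    Both `lpu_share` and `energy_saving` are hypothetical illustration inputs.
    Total AI workload is held fixed, so any demand response is ignored.
    """
    if not (0.0 <= lpu_share <= 1.0 and 0.0 <= energy_saving <= 1.0):
        raise ValueError("lpu_share and energy_saving must be in [0, 1]")
    # Unshifted demand stays as-is; shifted demand is reduced by the saving.
    return base_twh * (1.0 - lpu_share * energy_saving)


if __name__ == "__main__":
    # Apply the same hypothetical scenarios to the low, base and high cases.
    for base in (LOW_TWH, BASE_CASE_TWH, HIGH_TWH):
        for share in (0.25, 0.5, 1.0):
            print(f"base={base} TWh, share={share:.0%}: "
                  f"{scaled_demand(base, share, 0.8):.0f}-"
                  f"{scaled_demand(base, share, 0.9):.0f} TWh")
```

For example, under these assumptions, moving half of the 1,000 TWh base case onto hardware with an 80% energy saving gives roughly 600 TWh. The sketch deliberately holds total AI workload fixed, so it ignores any change in demand that faster, cheaper inference might cause.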

NVIDIA is currently the world leader in the GPUs underlying the AI revolution, which in turn underpins its $3.6trn market capitalization at the time of writing. So could Groq displace or even dethrone NVIDIA, by analogy with other technology shifts we have seen (e.g., the shift from NMC to LFP in batteries)? Our observations are on page 8.

For our outlook on AI in the energy transition, please see the video below, which summarizes some of the findings across our research in 2024.