-

Energy transition: classic blunders?!

Classic blunders famously include “never start a land war in Asia” and “never go up against a Sicilian when death is on the line”. This video sets out what we believe are the three classic blunders to avoid, both in energy analysis and in the energy transition, based on our own experiences over the…

-

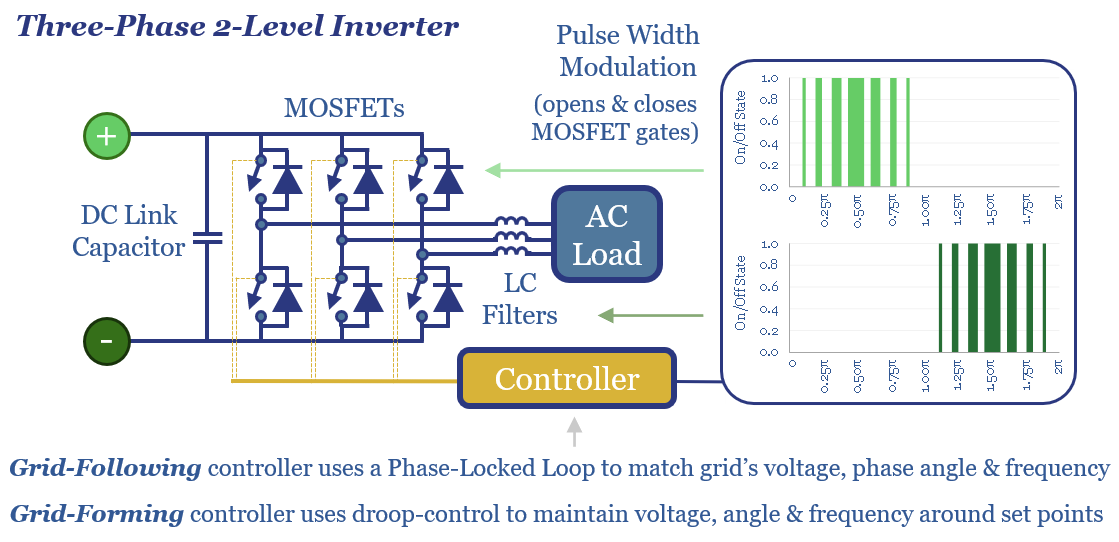

Grid-forming inverters: islands in the sun?

The grid-forming inverter market may soon inflect from $1bn to $15-20bn pa, underpinning most grid-scale batteries and 20-40% of incremental solar and wind. This 11-page report finds that grid-forming inverters cost c$100/kW more than grid-following inverters, which is inflationary, but they integrate more renewables and raise resiliency and efficiency?
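To put the c$100/kW premium and the market inflection in concrete terms, here is a quick back-of-envelope sketch. It assumes the premium scales linearly with inverter kW rating; the 100 MW project size and the function name are hypothetical illustrations, not figures or methods from the report.

```python
# Back-of-envelope: capex premium of grid-forming vs grid-following inverters.
PREMIUM_USD_PER_KW = 100  # c$100/kW premium cited above


def inverter_premium_usd(project_mw: float,
                         premium_usd_per_kw: float = PREMIUM_USD_PER_KW) -> float:
    """Extra inverter capex (USD) for a project of the given rating in MW.

    Assumes (hypothetically) that the premium scales linearly with kW rating.
    """
    return project_mw * 1_000 * premium_usd_per_kw  # MW -> kW, then x USD/kW


# Market growth implied by an inflection from $1bn to $15-20bn pa
growth_low, growth_high = 15 / 1, 20 / 1

print(f"Premium on a hypothetical 100 MW project: ${inverter_premium_usd(100):,.0f}")
print(f"Implied market multiple: {growth_low:.0f}-{growth_high:.0f}x")
```

On these assumptions, a 100 MW project would carry roughly $10M of extra inverter cost, which is the inflationary side of the trade-off described above.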

-

Commodity intensity of global GDP in 30 key charts?

The commodity intensity of global GDP has fallen by around 1.2% per year over the past half-century, as incremental GDP has become more services-oriented. So is this effect adequately reflected in our commodity outlooks? This 4-page report plots past, present and forecast GDP intensity factors for 30 commodities, from 1973 to 2050. Oil is anomalous. And several commodities show rising GDP…
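A decline of around 1.2% per year sounds small, but it compounds. The sketch below assumes a constant annual rate (real history will not be this smooth) and shows that 50 years at that pace leaves intensity at roughly 55% of its starting level.

```python
# Compound effect of a steady annual decline in the commodity intensity of GDP.
ANNUAL_CHANGE = -0.012  # c.-1.2% per year, as cited above
YEARS = 50              # "the past half-century"


def cumulative_ratio(annual_change: float, years: int) -> float:
    """Intensity at the end of the period relative to the start, at a constant annual rate."""
    return (1 + annual_change) ** years


ratio = cumulative_ratio(ANNUAL_CHANGE, YEARS)
print(f"Intensity after {YEARS} years: {ratio:.2f}x the starting level")
```

In other words, a flat-intensity assumption in a commodity outlook would overstate demand materially over multi-decade horizons.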

-

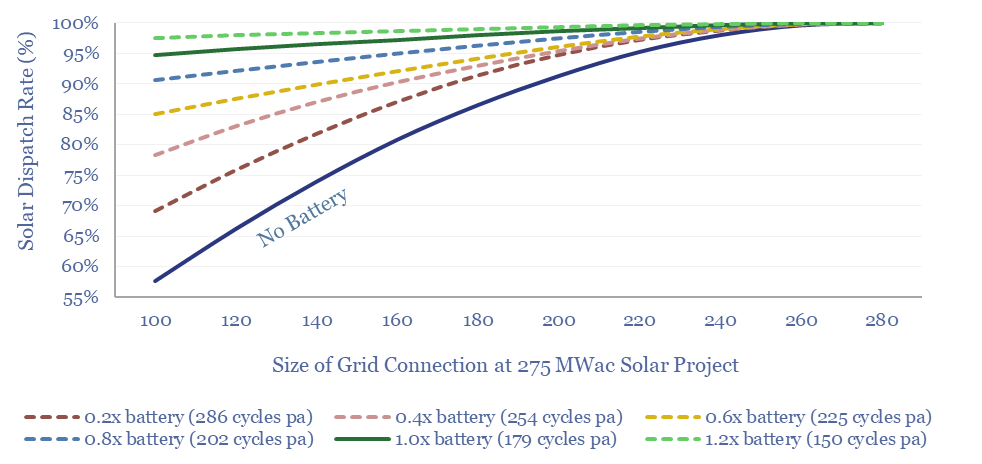

Solar plus batteries: the case for co-deployment?

This 9-page study finds unexpectedly strong support for co-deploying grid-scale batteries together with solar. The resultant output is stable, has synthetic inertia, is easier to interconnect in bottlenecked grids, and can be economically justified. What upside for grid-scale batteries?
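The intuition behind “stable output” can be illustrated with a toy hourly dispatch: the battery stores solar output above a flat target and releases it when clouds push output below that target. This is a minimal sketch, not the study's model. The function name, the flat-target rule and the input profile are all hypothetical, and the sketch ignores round-trip losses, inverter power limits, synthetic inertia and economics.

```python
from typing import List


def firm_solar(solar_mw: List[float], target_mw: float,
               capacity_mwh: float, soc_mwh: float = 0.0) -> List[float]:
    """Toy hourly dispatch: store solar above target, fill shortfalls below it.

    Hypothetical simplifications: 1-hour steps, lossless storage,
    no inverter power limit, no degradation.
    """
    output = []
    for s in solar_mw:
        if s >= target_mw:
            # Store the surplus, limited by remaining headroom;
            # any surplus beyond headroom still flows to the grid.
            charge = min(s - target_mw, capacity_mwh - soc_mwh)
            soc_mwh += charge
            output.append(s - charge)
        else:
            # Discharge to cover the shortfall, limited by stored energy.
            discharge = min(target_mw - s, soc_mwh)
            soc_mwh -= discharge
            output.append(s + discharge)
    return output


# Hypothetical cloudy afternoon, in MW per hour
cloudy = [40, 60, 20, 55, 30, 45]
print(firm_solar(cloudy, target_mw=40, capacity_mwh=50, soc_mwh=10))
# → [40, 40, 40, 40, 40, 40]
```

With a suitably sized battery, the volatile profile is firmed to a flat 40 MW. A smaller battery or a lower starting charge would let some of the variability through.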

-

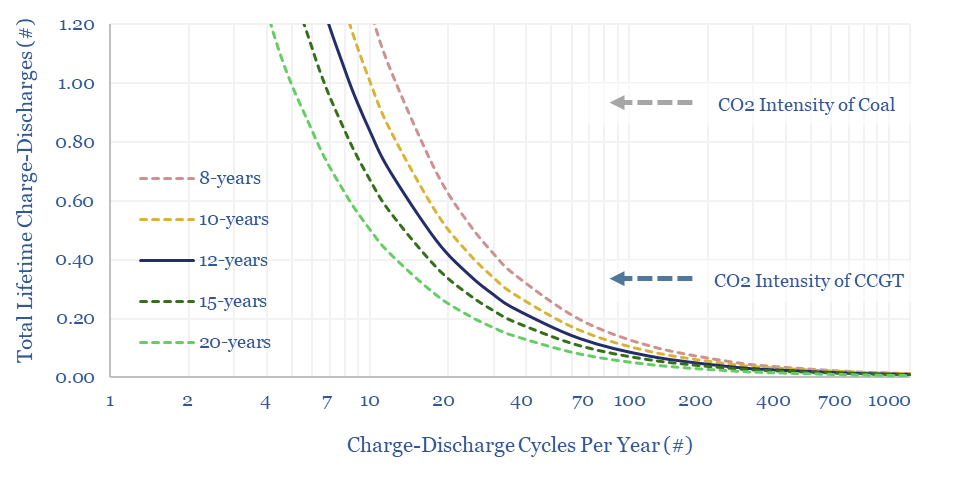

Long-duration storage: dirtier than gas peakers?

The CO2 credentials of long-duration batteries may be as poor as 0.35-2.0 kg CO2/kWh, worse than gas peakers or even coal power. Grid-scale batteries are best deployed in high-frequency applications, to maximize power quality, downstream of renewables. But we were surprised to find that there is almost no net climate benefit from turning…
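One mechanism that helps explain why rarely cycled storage scores badly, and why high-frequency use is preferable, is that fixed embodied manufacturing emissions get spread over fewer delivered kWh. The sketch below is illustrative only: the embodied-CO2 figure, cycle counts and lifetime are hypothetical inputs, not the report's data or methodology, and it ignores charging-related emissions and round-trip losses.

```python
def embodied_kg_per_kwh_delivered(embodied_kg_per_kwh_capacity: float,
                                  cycles_per_year: float,
                                  lifetime_years: float) -> float:
    """Spread embodied CO2 over every kWh the battery discharges in its life.

    Assumes each cycle discharges the full rated capacity (100% depth of
    discharge) and ignores charging emissions and round-trip losses.
    """
    lifetime_throughput = cycles_per_year * lifetime_years  # kWh out per kWh of capacity
    return embodied_kg_per_kwh_capacity / lifetime_throughput


# Hypothetical inputs, for illustration only
EMBODIED = 100  # kg CO2 per kWh of capacity
daily = embodied_kg_per_kwh_delivered(EMBODIED, cycles_per_year=365, lifetime_years=15)
rare = embodied_kg_per_kwh_delivered(EMBODIED, cycles_per_year=20, lifetime_years=15)
print(f"Cycled daily: {daily:.3f} kg/kWh; cycled 20x per year: {rare:.2f} kg/kWh")
```

On these made-up inputs, the same battery carries roughly 18x more embodied CO2 per delivered kWh when cycled 20 times a year rather than daily.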

-

Energy research in the age of AI?

How will AI change the research and investment worlds? Our view is that large language models (LLMs) will soon surpass human analysts in assimilating and summarizing information. Hence this video explores three areas where human analysts can continue to earn their keep, and possibly even help decision-makers beat the ‘consensus engines’.

-

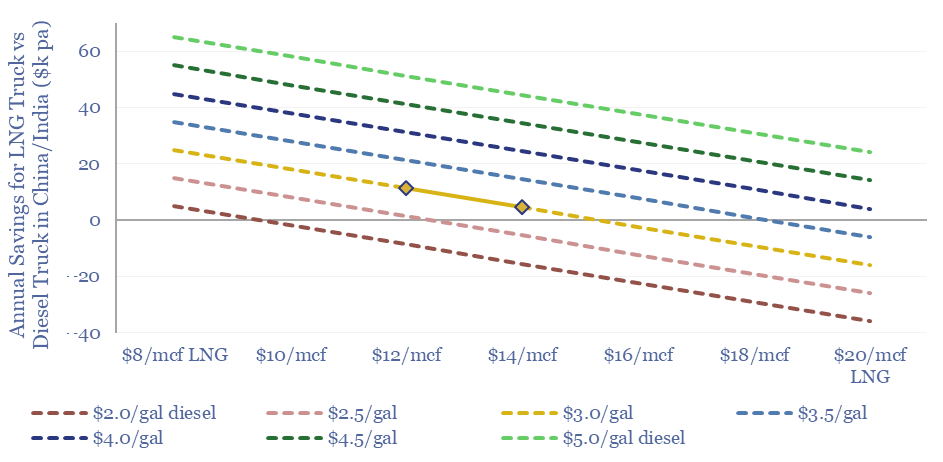

LNG trucks: Asian equation?

LNG trucking is more expensive than diesel trucking in the developed world. But Asian trucking markets are different, especially in China, where LNG truck adoption is accelerating exponentially and will displace 150kbpd of oil demand in 2024. This 8-page note explores the costs of LNG trucking and sees 45MTpa of LNG displacing 1Mbpd of diesel?
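A rough energy-equivalence check on the 45MTpa-per-1Mbpd ratio follows. The heating values are approximate, and the engine-efficiency penalty for LNG trucks is a hypothetical parameter. The note's own derivation is not shown here, so this is a plausibility sketch rather than its method.

```python
# Energy-equivalence check: how much LNG replaces 1 Mbpd of diesel?
DIESEL_MMBTU_PER_BBL = 5.77  # approx. (c.137,400 Btu/gal x 42 gal)
LNG_MMBTU_PER_TONNE = 52.0   # approx. heating value of LNG
DAYS = 365


def lng_mtpa_for_diesel(diesel_bpd: float, efficiency_penalty: float = 0.0) -> float:
    """Million tonnes per year of LNG needed to replace `diesel_bpd` barrels/day of diesel.

    efficiency_penalty: hypothetical fractional efficiency loss of LNG engines vs diesel.
    """
    diesel_mmbtu = diesel_bpd * DAYS * DIESEL_MMBTU_PER_BBL
    lng_tonnes = diesel_mmbtu / LNG_MMBTU_PER_TONNE / (1 - efficiency_penalty)
    return lng_tonnes / 1e6


print(f"{lng_mtpa_for_diesel(1e6):.1f} MTpa")        # pure energy equivalence
print(f"{lng_mtpa_for_diesel(1e6, 0.10):.1f} MTpa")  # with an assumed 10% efficiency penalty
```

Pure energy equivalence gives about 40MTpa. An assumed ~10% efficiency penalty for LNG engines lands close to the 45MTpa cited, so the ratio looks plausible.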

-

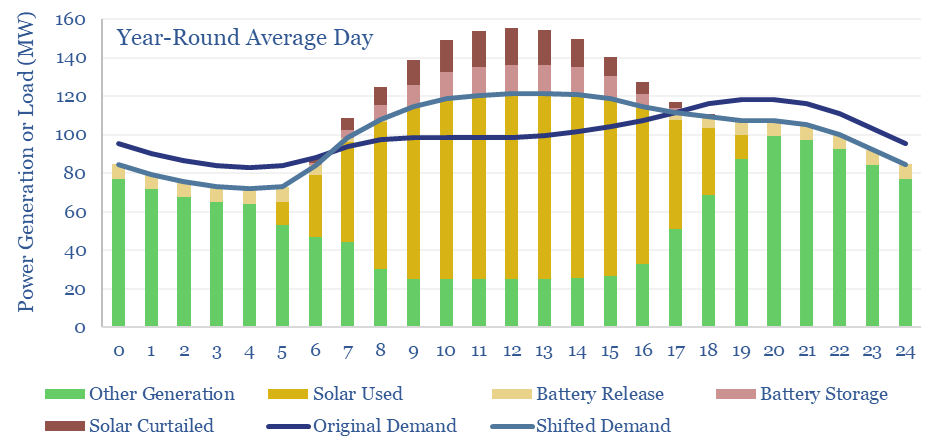

Can solar reach 45% of a power grid?

Can solar reach 45% of a power grid? This has been one of the biggest pushbacks we received on a recent research note scoring solar potential by country, in which we argued that the best regions (California, Australia) would reach 45% solar by 2050. Hence today’s short article explores what a 45% solar grid…
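One reason a 45% solar share meets pushback is the nameplate capacity it implies. If solar supplies a share *s* of annual energy at capacity factor *CF*, then nameplate capacity divided by average load equals *s* / *CF*. The sketch assumes no curtailment (which would raise the requirement), and the 25% capacity factor is a hypothetical value for a sunny region.

```python
def solar_nameplate_multiple(solar_share: float, capacity_factor: float) -> float:
    """Solar nameplate (GW) per GW of average load needed to supply `solar_share` of annual energy.

    share = (C * CF * 8760) / (L_avg * 8760)  =>  C / L_avg = share / CF.
    Assumes no curtailment.
    """
    return solar_share / capacity_factor


# Hypothetical: 25% capacity factor
multiple = solar_nameplate_multiple(0.45, 0.25)
print(f"Solar nameplate = {multiple:.1f}x average load")
```

Because output on a clear day can approach nameplate around noon, a 45% solar grid would see midday solar well above average demand, which must be absorbed by storage, exports, flexible demand or curtailment.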

-

AI and power grid bottlenecks?

The number one topic in energy this year has been the rise of AI, which might not seem like an energy topic. Yet it is inextricably linked with power grid bottlenecks, the single biggest issue for energy markets in the mid-to-late 2020s. The goal of today’s video is to recap our key conclusions. There is…

-

Methane leaks: by gas source and use

Methane leakage rates in the gas industry vary by source and use. Across our build-ups, the best-placed value chains are using Marcellus gas in CCGTs (0.2% methane leakage, equivalent to 6kg CO2e/boe, 1kg CO2e/mcfe, or +2% on Scope 3 emissions) and/or Permian gas in LNG or blue hydrogen value chains (0.3%). Residential gas use is likely closer…
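The conversion from a leakage rate to these intensities can be reproduced roughly. The sketch makes several assumptions: the gas is treated as pure methane, the 100-year GWP is 25 (the IPCC AR4 value), 6 mcf equals one boe, and the "+2%" is measured against combustion CO2. The build-ups above may use different conventions.

```python
from typing import Dict

# Illustrative assumptions, not necessarily those behind the figures above
CH4_KG_PER_MCF = 19.2             # ~28.3 m3 x ~0.68 kg/m3, gas treated as pure methane
GWP_CH4 = 25                      # assumed 100-year GWP (IPCC AR4 value)
MCF_PER_BOE = 6.0                 # common convention
COMBUSTION_KG_CO2_PER_MCF = 55.0  # ~53 kg CO2/MMBtu x ~1.04 MMBtu/mcf


def leak_intensity(leak_rate: float) -> Dict[str, float]:
    """CO2e intensity of a methane leakage rate (fraction of gas produced)."""
    leaked_ch4_kg = leak_rate * CH4_KG_PER_MCF
    co2e_per_mcf = leaked_ch4_kg * GWP_CH4
    return {
        "kg_co2e_per_mcf": co2e_per_mcf,
        "kg_co2e_per_boe": co2e_per_mcf * MCF_PER_BOE,
        "uplift_vs_combustion": co2e_per_mcf / COMBUSTION_KG_CO2_PER_MCF,
    }


for name, value in leak_intensity(0.002).items():
    print(f"{name}: {value:.3f}")
```

With these assumptions, 0.2% leakage works out to roughly 1kg CO2e/mcf, 6kg CO2e/boe and a ~1.7% uplift on combustion emissions, close to the figures quoted. A 20-year GWP (c.80+) would more than triple each of them.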
