Data-center capex costs are typically $10M/MW, with opex costs dominated by maintenance (c40%) and electricity (c15-25%), followed by labor, water, G&A and other costs. A 30MW data-center must generate $100M pa of revenues for a 10% IRR, while an AI data-center in 2024 may need to charge $5/EFLOP of compute.

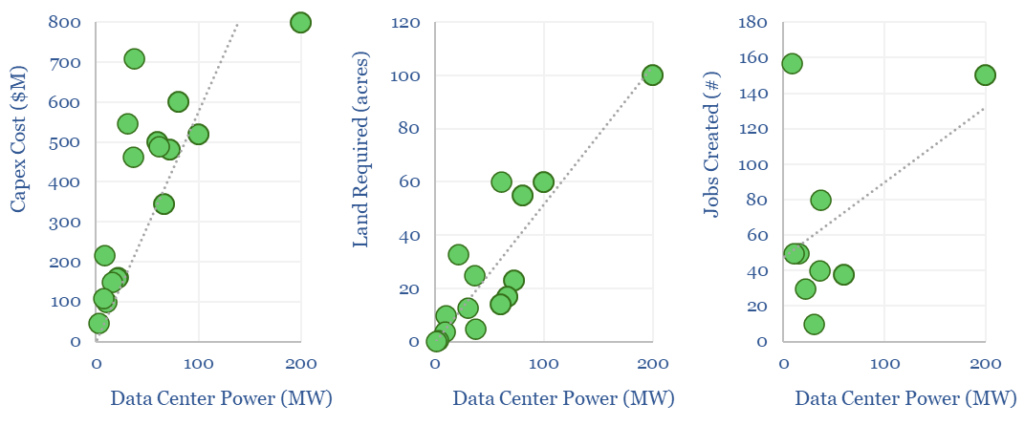

Data-centers underpin the rise of the internet and of AI. Hence this model captures the costs of data-centers from first principles, across capex, opex, land use and other input variables (see below).

In 2023, the global data-center industry was worth $250bn, across 500 large facilities, 20,000 total facilities, and around 40 GW of capacity, which is likely to rise by 2-5x by 2030.

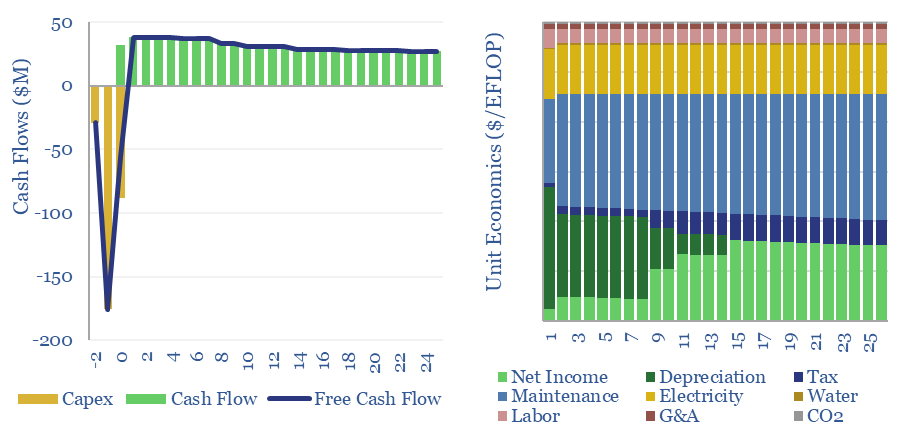

A 30MW mid-scale data-center, costing $10M/MW of capex, must generate $100M pa of revenues, in order to earn a 10% IRR, after deducting electricity costs and maintenance.
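As a rough cross-check on the numbers above, the sketch below uses a standard capital recovery factor to estimate the annual net cash flow needed for a 10% IRR on $300M of capex (30MW at $10M/MW). The 15-year life is a hypothetical assumption for illustration, not a figure from the data-file, and the sketch ignores taxes, construction time and hardware replacement cycles, all of which the full model may treat differently.

```python
# Minimal sketch: net cash flow needed for a target IRR on data-center capex.
# ASSUMED_LIFE_YEARS is a hypothetical input, not taken from the data-file.

def capital_recovery_factor(rate: float, years: int) -> float:
    """Level annual cash flow per $1 of upfront capex that yields `rate` as the IRR."""
    return rate / (1.0 - (1.0 + rate) ** -years)


def npv(rate: float, capex: float, annual_cash_flow: float, years: int) -> float:
    """Net present value of an upfront outlay followed by level annual cash flows."""
    inflows = sum(annual_cash_flow / (1.0 + rate) ** t for t in range(1, years + 1))
    return inflows - capex


CAPACITY_MW = 30
CAPEX_USD_PER_MW = 10e6
TARGET_IRR = 0.10
ANNUAL_REVENUE_USD = 100e6
ASSUMED_LIFE_YEARS = 15  # assumption for illustration only

capex = CAPACITY_MW * CAPEX_USD_PER_MW
required_net_cash_flow = capex * capital_recovery_factor(TARGET_IRR, ASSUMED_LIFE_YEARS)

# Whatever remains of revenue is the budget for all annual costs
# (electricity, maintenance, labor, taxes and so on).
implied_cost_budget = ANNUAL_REVENUE_USD - required_net_cash_flow

print(f"Capex: ${capex / 1e6:.0f}M")
print(f"Net cash flow needed for {TARGET_IRR:.0%} IRR: ${required_net_cash_flow / 1e6:.1f}M pa")
print(f"Implied budget for annual costs: ${implied_cost_budget / 1e6:.1f}M pa")
```

Changing `ASSUMED_LIFE_YEARS` or `TARGET_IRR` shows how sensitive the required cash flow is to the payback horizon, which is one reason to stress-test the inputs in the data-file rather than rely on a single headline number.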

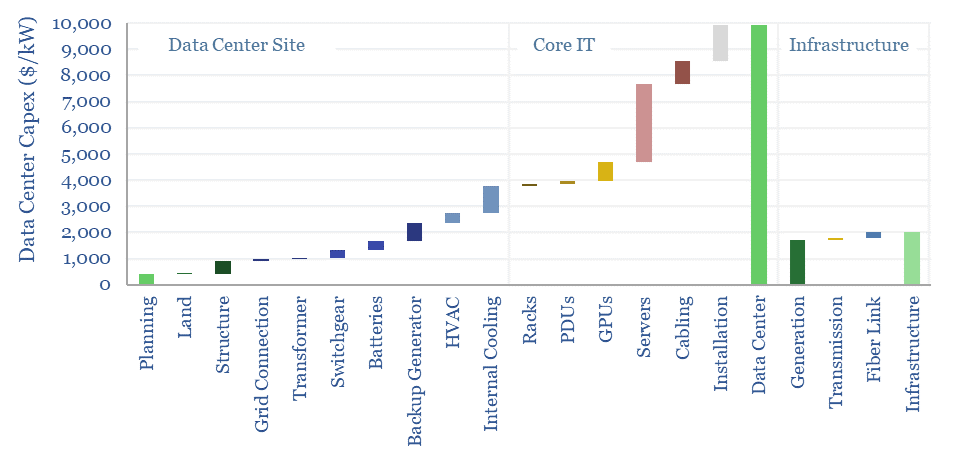

The capex breakdown for a typical non-AI data center is built up in the data-file, drawing on cost data for various IT components, cooling, chillers, transformers, switchgear, battery UPS, backup generators, plus broader infrastructure such as generation, transmission and fiber links.

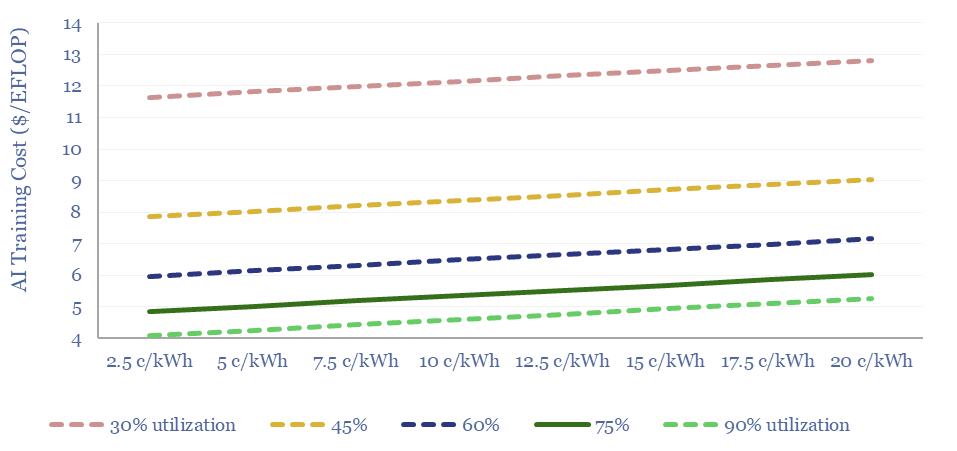

If the data-center is computation heavy, e.g., for AI applications, this might equate to a cost of around $5/EFLOP of compute in 2023. This is the same order of magnitude as disclosures from OpenAI, stating that training GPT-4 had a total compute of 60M EFLOPs and a training cost of around $160M, which implies roughly $2.7/EFLOP.
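The comparison with the GPT-4 disclosure is simple arithmetic, sketched below using only the figures quoted in the text. The variable names are illustrative and not taken from the data-file.

```python
# Minimal sketch: compare the modelled compute cost with the cost implied
# by the GPT-4 training figures quoted in the text.

GPT4_TRAINING_COMPUTE_EFLOP = 60e6   # 60M EFLOPs of total training compute
GPT4_TRAINING_COST_USD = 160e6       # around $160M of training cost
MODELLED_COST_USD_PER_EFLOP = 5.0    # around $5/EFLOP from the data-center model

implied_cost_per_eflop = GPT4_TRAINING_COST_USD / GPT4_TRAINING_COMPUTE_EFLOP
modelled_training_cost = MODELLED_COST_USD_PER_EFLOP * GPT4_TRAINING_COMPUTE_EFLOP
modelled_vs_implied = MODELLED_COST_USD_PER_EFLOP / implied_cost_per_eflop

print(f"Implied by disclosure: ${implied_cost_per_eflop:.2f}/EFLOP")
print(f"Same compute at the modelled rate: ${modelled_training_cost / 1e6:.0f}M")
print(f"Modelled / implied: {modelled_vs_implied:.2f}x")
```

The two estimates differ by a little under 2x, which is a reasonable level of agreement given that the disclosed training cost and the modelled full-cycle cost need not cover the same items.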

However, new generations of chips from NVIDIA will increase the proportionate hardware costs and may lower the proportionate energy costs (see ComputePerformance tab). An AI-enabled data-center can easily cost 2x more than a conventional one, surpassing $20M/MW.

Reliability is also crucial to the economics of data-centers: uptime and utilization have a 5x higher impact on overall economics than electricity prices. This makes it less likely that AI data-centers will be demand-flexed to run on the raw output of renewable electricity sources, such as wind and solar.
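The intuition can be illustrated with a toy sensitivity, sketched below. It assumes that the $100M pa of revenues for a 30MW facility is earned at full utilization, that revenue and electricity use both scale linearly with utilization, and a hypothetical power price of $0.08/kWh. None of these assumptions come from the data-file, and the sketch is not meant to reproduce the 5x figure, only to show why a given percentage change in utilization moves the margin by more than the same percentage change in power prices.

```python
# Toy sensitivity: a 10% drop in utilization versus a 10% rise in power price.
# The power price and the linear scaling are illustrative assumptions only.

HOURS_PER_YEAR = 8760


def annual_margin(
    utilization: float,
    power_price_usd_per_kwh: float,
    capacity_mw: float = 30.0,
    revenue_at_full_utilization_usd: float = 100e6,
) -> float:
    """Revenue minus electricity cost, both scaled linearly by utilization."""
    revenue = revenue_at_full_utilization_usd * utilization
    energy_kwh = capacity_mw * 1000.0 * HOURS_PER_YEAR * utilization
    return revenue - energy_kwh * power_price_usd_per_kwh


ASSUMED_POWER_PRICE = 0.08  # $/kWh, hypothetical

base = annual_margin(1.0, ASSUMED_POWER_PRICE)
utilization_impact = base - annual_margin(0.9, ASSUMED_POWER_PRICE)
price_impact = base - annual_margin(1.0, ASSUMED_POWER_PRICE * 1.1)
impact_ratio = utilization_impact / price_impact

print(f"10% lower utilization costs ${utilization_impact / 1e6:.1f}M pa")
print(f"10% higher power price costs ${price_impact / 1e6:.1f}M pa")
print(f"Utilization impact is {impact_ratio:.1f}x the price impact")
```

The ratio depends heavily on the assumed power price and on how much of the cost base is fixed, so the full model in the data-file remains the better guide to the actual sensitivity.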

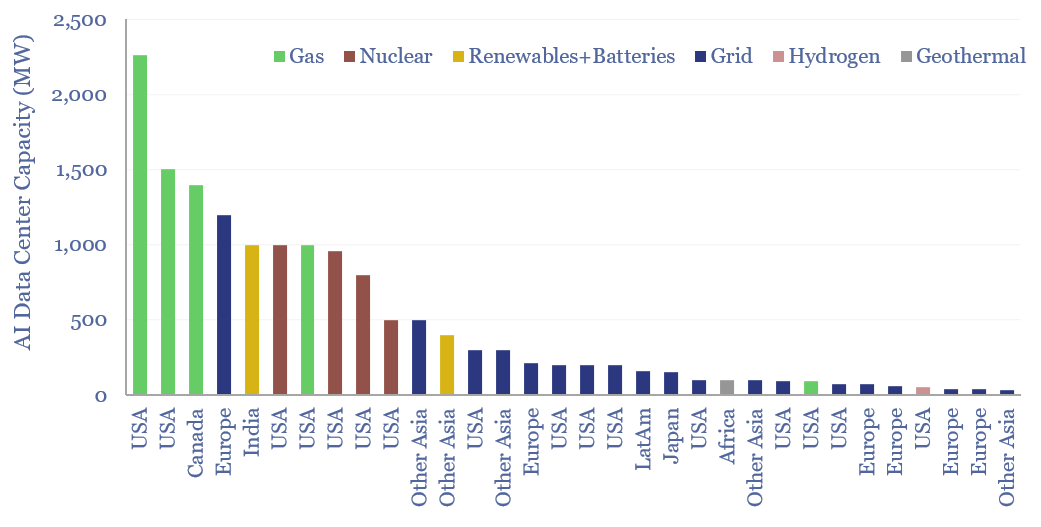

Economic considerations may tip the market towards sourcing the most reliable power possible, especially amidst grid bottlenecks, which also explains the routine use of backup power generation. In early 2025, we added to the data-file by showing how 30 of the AI data centers announced in 2024 intend to procure their power (chart below).

Another major theme is the growing power density per rack, rising from 4-10kW to >100kW, which requires closed-loop liquid cooling, although there are also interesting concepts to combine the cooling with fuel cells and absorption chillers.

Please download the data-file to stress-test the costs of a data-center and the performance of an AI data-center. We will also continue adding to this model over time. Notes from recent technical papers are in the final tab.