The Second World War was decided by oil. Each country’s war-time strategy was dictated by oil’s availability, its quality and attempts to secure more of it, including by rationing non-critical uses. Ultimately, limiting the oil meant limiting the war. This would all re-shape the future of the oil, gas and midstream industries, and also the whole world. Today’s short essay about oil and war outlines our top ten conclusions from reviewing the history.

(1) War machines run on oil products

Fighter planes, bombers, tanks, battleships, submarines and supply trucks are all highly energy intensive. For example, a tank achieves a fuel economy of around 0.5 miles per gallon. Thus, Erwin Rommel wrote that “neither guns nor ammunition are of much use in modern warfare unless there is sufficient petrol to haul them around… a shortage of petrol is enough to make one weep”.

If the First World War was a war of stagnation, then the Second World War was one of motion. Overall, America’s forces in Europe would use 100x more gasoline in World War II than in World War I. Thus in 1944, General Patton berated Eisenhower that “my men can eat their belts, but my tanks have gotta have gas”.

The fuel for Germany’s war machine was imported from Romania’s Ploiesti fields (c30-40% of total use) and earlier in the War, from the Soviet Union (10-20%). Another achievement of ‘blitzkrieg’ warfare was that the German army initially captured more fuel than it used. Its remaining oil was produced in Germany, as synfuel (c50-60% of total).

Synfuel. Germany had always been an oil-poor, coal-rich nation, relying on the latter for 90% of its energy in the 1930s. But it could manufacture synthetic gasoline by hydrogenating the coal at high temperatures and pressures. The industrial methods were developed by IG Farben, with massive state subsidies (Hitler stated “the production cost [is] of no importance”). In 1936, Hitler redoubled the subsidies, expecting to be at war by 1940. By that time, 14 hydrogenation plants were producing 72kbpd. By 1943, this had increased to 124kbpd. Synfuel provided over half of Germany’s total war-time oil use and 90% of the aviation gasoline for the Luftwaffe.

On the other side, America provided 85% of the Allies’ total oil. US output rose from 3.7Mbpd to 4.7Mbpd. 7bn bbls were consumed by the US and its allies from 1941-45, of which 6bn bbls were produced in the US.

(2) Securing oil dictated each country’s war strategy.

In 1939, Hitler and Stalin had carved up Europe via the Molotov-Ribbentrop pact, declaring mutual non-aggression against one another. But oil was a key reason that Hitler reneged, and went to war with the Soviet Union, in Operation Barbarossa, in June 1941. Stalin had already occupied northern Romania, which was too close for comfort to Ploiesti. Hitler would tell Mussolini that “the life of the Axis depends on those oilfields”.

Moreover, Hitler wanted the oilfields of the Caucasus, at Maikop, Grozny and Baku. They were crucial. At the end of 1942, Hitler wrote “unless we get the Baku oil, the war is lost”. Even Rommel’s campaign in North Africa was the other arm of a large pincer movement, designed to converge on Baku.

Similarly for Japan, the entire Pacific War (and the necessarily antecedent attack on Pearl Harbor) was aimed at capturing the crucial oil fields of the Dutch East Indies, to which Japan would then commit 4,000 oilfield workers.

For the Allies, one of the most pressing needs was to ensure that American oil could cross the Atlantic without being sunk by German U-boats. Hence the massive step-up of cryptography at Bletchley Park under Alan Turing. In March-1943, the Allies broke the U-boat codes, allowing a counter-offensive. In May-1943 alone, 30% of the U-boats in the Atlantic were sunk. Increased arrivals of American oil would be a turning point in the war.

(3) Limiting the oil meant limiting the war.

Germany’s initial blitzkrieg warfare was particularly effective, as the Germans captured more fuel than they used. But they had less luck on their eastward offensives. Soviet tanks ran on diesel, whereas the German Panzers ran on gasoline, so captured Soviet fuel was of little use to them. And it became increasingly difficult to sustain long, eastward supply lines. Stalingrad became Germany’s first clear ‘defeat’ in Europe in 1942-43.

Fuel shortages were also illustrated in North Africa, where Rommel later said his tactics were “decided more by the petrol gauge than by tactical requirements”. He wrote home to his wife about recurring nightmares of running out of fuel. To make his tank numbers look more intimidating, he even had ‘dummy tanks’ built at workshops in Tripoli, which were then mounted on more fuel-efficient Volkswagens.

Similarly in Japan, oil shortages limited military possibilities. ‘Kamikaze’ tactics were named after the ‘divine wind’, a typhoon which disrupted Kublai Khan’s 13th-century invasion fleet. But they were motivated by fuel shortages: no return journey was necessary. And an American warship could be sunk with 1-3 kamikaze planes, versus 8-24 bombers and fighters. It made sense for a force with an excess of personnel and planes, and a shortage of fuel.

Similarly, in 1944, in the Marianas campaign’s “great turkey shoot”, Japan lost 273 planes and the US lost 29, which has been attributed to a lack of fuel, forcing the Japanese planes to fly directly at the enemy, rather than more tactically or evasively.

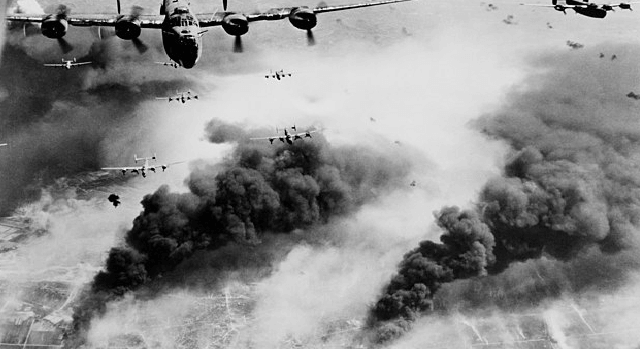

Remarkably, back in Europe, it took until May-1944, for Allied bombers to start knocking out Germany’s synthetic fuels industry, in specifically targeted bombing missions, including the largest such facility, run by IG Farben at Leuna. “It was on that day the technological war was decided”, according to Hitler’s Minister of War Production. In the same vein, this note’s title image above shows B-24s bombing the Ploiesti oilfields in May-1944.

By September-1944, Germany’s synthetic fuel output had fallen to 5kbpd. Air operations became impossible. In the final weeks of the War, there simply was no fuel. Hitler was still dictating war plans from his bunker, committing divisions long immobilized by their lack of fuel. In the final days of the War, German army trucks were seen being dragged by oxen.

Swiftly halting oil might even have prevented war. Japan had first attacked Manchuria in 1931. As tensions escalated, in 1934, executives from Royal Dutch and Standard of New Jersey suggested that the mere hint of an oil embargo would moderate Japanese aggression, as Japan imported 93% of its oil needs, of which 80% was from the US. In 1937, an embargo was proposed again, when a Japanese air strike damaged four American ships in the Yangtze River. It was 1939 before the policy gained support, as US outrage grew over Japan’s civilian bombings in China. By then it was too late. In early 1941, Roosevelt admitted “If we stopped all oil [to Japan]… it would mean War in the Pacific”. On December 7th, 1941, a Japanese attack on Pearl Harbor forced the Americans’ hand.

(4) Fuel quality swayed the Battle of Britain?

The Messerschmitt 109s in the Luftwaffe were fueled by aviation gasoline derived from coal hydrogenation. This had an octane rating of 87. However, British Spitfires often had access to higher-grade fuel, 100-octane aviation gasoline, supplied by the United States. It was produced using catalytic cracking technology, pioneered in the 1930s, and deployed in vast, 15-story refinery units, at complex US refineries. The US ramped its production of 100-octane gasoline from 40kbpd in 1940 to 514kbpd in 1945. Some sources have suggested the 100-octane fuel enabled greater bursts of speed and greater maneuverability, which may have swung the balance in the Battle of Britain.

(5) The modern midstream industry was born.

Moving oil by tankers turned out to be a terrible war-time strategy. In 1942, the US lost one-quarter of all its oil tanker tonnage, as German U-boats sank 4x more oil tankers than were built. This was not just on trans-Atlantic shipments, but on domestic routes from the Gulf Coast, round Florida, and up the East Coast. Likewise, by 1944-45, Japan was fairly certain that any tanker from the East Indies would be sunk shortly after leaving port.

The first truly long-distance, cross-country pipelines were the result. In 1943, ‘Big Inch’ was brought into service, a 1,254-mile x 24” line carrying oil from East Texas, via Illinois, to New Jersey. In 1944, ‘Little Inch’ started up, carrying gasoline and oil products along the same route, but starting even further south, at the US Gulf Coast refining hub, between Texas and Louisiana. The share of East Coast oil arriving by pipeline increased from 4% in 1942 to 40% by the end of 1944.

The first subsea pipeline was also deployed in the Second World War, known as PLUTO (the Pipeline Under the Ocean). It ran under the English Channel and was intended to supply half of the fuel needs for the Allies to re-take Europe. One of the pumping stations, on the Isle of Wight, was disguised as an ice cream shop, to protect it from German bombers. However, PLUTO was beset by technical issues, and only flowed 150bpd in 1944, around 0.15% of the Allied Forces’ needs.

Other mid-downstream innovations were small portable pipeline systems, invented by Shell, to transport fuel to the front without using trucks; and the five-gallon ‘jerry can’. The Allies initially used 10-gallon portable fuel canisters, but they were too heavy for a single man to wield. The smaller German convention was adopted, and improved with a spout that prevented dirt from being transferred into vehicle engines.

(6) The modern gas industry was also born.

As the US tried to free up oil supplies from its residential heating sector, Roosevelt wrote to Harold Ickes, his Secretary of the Interior, in 1942, “I wish you would get some of your people to look into the possibility of using natural gas… I am told there are a number of fields in the West and the Southwest where practically no oil has been discovered, but where an enormous amount of natural gas is lying idle in the ground because it is too far to pipe”.

(7) Rationing fuel became necessary everywhere.

In the UK, war-time rationing began almost immediately, with a ‘basic ration’ set at 1,800 miles per year. As supplies dwindled, so did the ration, eventually towards nil. The result was a frenzy of war-time bicycling.

In Japan, there was no domestic oil use at all. Even household supplies of spirits or vegetable oils were commandeered to turn into fuel. Bizarrely, millions were sent to dig up pine roots, deforesting entire hillsides, in the hope that they could be pyrolyzed into a fuel substitute.

Curtailing US demand was slower. In 1941, Ickes did start implementing measures to lower demand. He recommended a return to the ‘Gasoline-less Sundays’ policy of WWI and ultimately pressed oil companies to cut service station deliveries by 10-15%. Homeowners who heated their houses with oil were politely asked to keep their temperatures below 65ºF in the day, 55ºF at night.

Outright US rationing occurred later, starting in early-1942. First, gasoline use was banned for auto-racing. Then general rationing of gasoline started on the East Coast. Even later, nationwide rationing was brought in at 1.5-4 gallons per week, alongside a 35mph speed limit and an outright ban on “non-essential driving” in 1943.

General US oil rationing provoked outrage. Interestingly, it was motivated just as much by rubber shortages as oil shortages. Japan’s capture of the East Indies had cut off 90% of the US’s rubber imports, and what little rubber was available was largely needed for military vehicles. Ultimately, the consumption of fuel per passenger vehicle was 30% less in 1943 than in 1941.

(8) War-time measures tested civilian resolve.

In WWII, ambivalence was most clearly seen in the US, where support for the War was initially marginal, and conflicted with domestic economic interests.

The State of New Jersey denounced fuel rationing, lest it hamper tourism at its summer resorts. Likewise, in Miami, the tourism industry rebuffed a campaign to turn off six miles of beach-front neon lights, which were literally lighting up the coastal waters, so German U-boats could easily pick off the oil tankers.

In direct opposition to war-time interests, some US gasoline stations openly declared they would make as much fuel available to motorists as required, advertising that motorists should come “fill it up”. There will always be a few idiots who go joy-riding during a crisis.

(9) The map of the modern World

The entire future of the 20th century would also be partly decided by ‘who got there first’ in the liberation of Nazi Europe. Thus, Russia’s sphere of influence was decided in particular by oil supplies in the final months of the War.

The Allies’ path to Berlin in 1944-45 was eight months slower than it should have been, hampered by the logistical challenges of fueling three separate forces, on their path to the heart of Europe. General Patton wrote home in 1944 that “my chief difficulty is not the Germans, but gasoline”.

The lost time was important. It is what allowed the Soviet Union to capture as much ground as it did, including reaching Berlin before the Western Allies. This would help decide the fate of republics such as East Germany, Poland, Czechoslovakia, Hungary and Yugoslavia. All ended up being ‘liberated’ by the Soviets. This sealed their fate, as they ended up as part of the greater Soviet Empire.

Further East, oil-short Japan also approached the Soviet Union as a potential seller of crude. However, Churchill and Roosevelt made Stalin a better offer: the return of territories that Czarist Russia had lost to Japan in the humiliating War of 1905, such as Northern Manchuria and the Sakhalin Islands. The latter, ironically, now produces 300kbpd of oil and 12MTpa of LNG.

(10) Scorched Earth after capture (but NOT BEFORE)

Scorched Earth is a phrase that now conjures images of giant plumes of smoke, rising into the air from 600 large Kuwaiti oil wells, as Iraqi forces retreated during the 1990-91 Gulf War.

However, scorched earth policies were implemented everywhere in the Second World War. The Soviets absolutely destroyed Maikop before it was captured, so the Germans could only produce 70bpd there by the following year.

In 1940-42, in the Dutch East Indies, a Shell team was drafted in to obliterate the oil fields and refinery complex at Balikpapan before it could fall into Japanese hands, with fifteen sticks of TNT affixed to each tank in the tank farm. It burned for days.

Back at Shell-Mex House, the British also drew up plans to destroy their fuel stocks if invaded. Most incredibly, at the start of World War II, France even offered Romania $60M to destroy its oilfields and deny the Germans their prize.

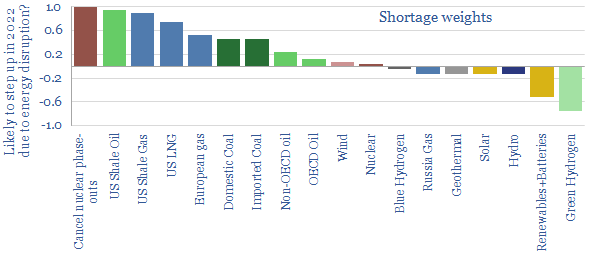

Strangely, some policymakers and investors appear to have had something of a ‘scorched earth’ policy towards the West’s oil and gas industry in recent years. As war re-erupts in the Western world, the history may be a reminder of the strategic need for a well-functioning energy industry. Energy availability has a historical habit of determining the course of wars.

End note. The world’s best history book has provided the majority of anecdotes and data-points for this article. Source: Yergin, D. (1990). The Prize: The Epic Quest for Oil, Money & Power. Simon & Schuster. London. I cannot recommend this book highly enough. The cover image is from Wikimedia Commons.