-

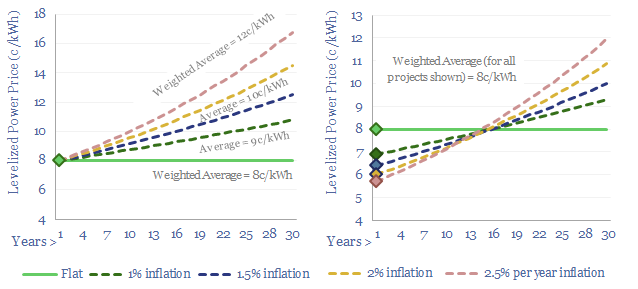

Levelized costs: real issues?

Real levelized costs can be a misleading metric. The purpose of today’s short note is simply to inform decision-makers who care about levelized costs. Our own modelling preference is to compare costs, on a flat pricing basis, using apples-to-apples assumptions across our economic models.

-

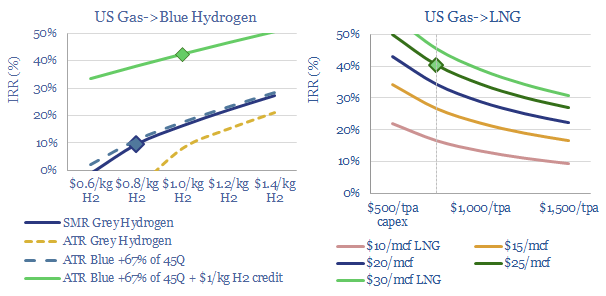

US natural gas: blue hydrogen tightens global LNG markets?

Blue hydrogen value chains are starting to boom in the US, as they are technically ready, low cost, and now receiving enormous economic support from the Inflation Reduction Act. But will this divert gas away from expanding US LNG, raise global LNG prices above $20/mcf and impact global energy markets more than expected?

-

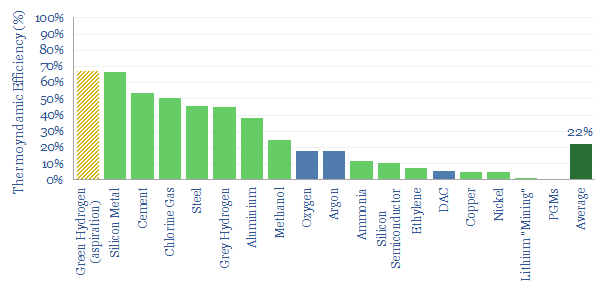

Industrial materials: thermodynamic efficiency?

The thermodynamic efficiency of materials production averages 20%, within an interquartile range of 5% to 50%. The most room for improvement is in complex value chains. Energy costs also differ sharply between blue and green H2.

-

Energy economics: engineering wonders?

Many questions that matter in the energy transition are engineering questions, which flow through to energy economics: which technologies work, what do they cost, what energy penalties do they have, and which materials do they use? We see an intersection of economics and engineering in our energy transition research.

-

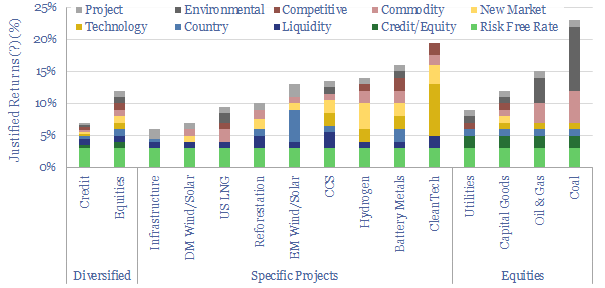

Energy transition: investment strategies?

Investing involves being paid to take risk. And we think energy transition investing involves being paid to take ten distinct risks, which determine justified returns. This note argues that investors should consider these risk premia, which ones they will seek out, and which ones they will avoid.

-

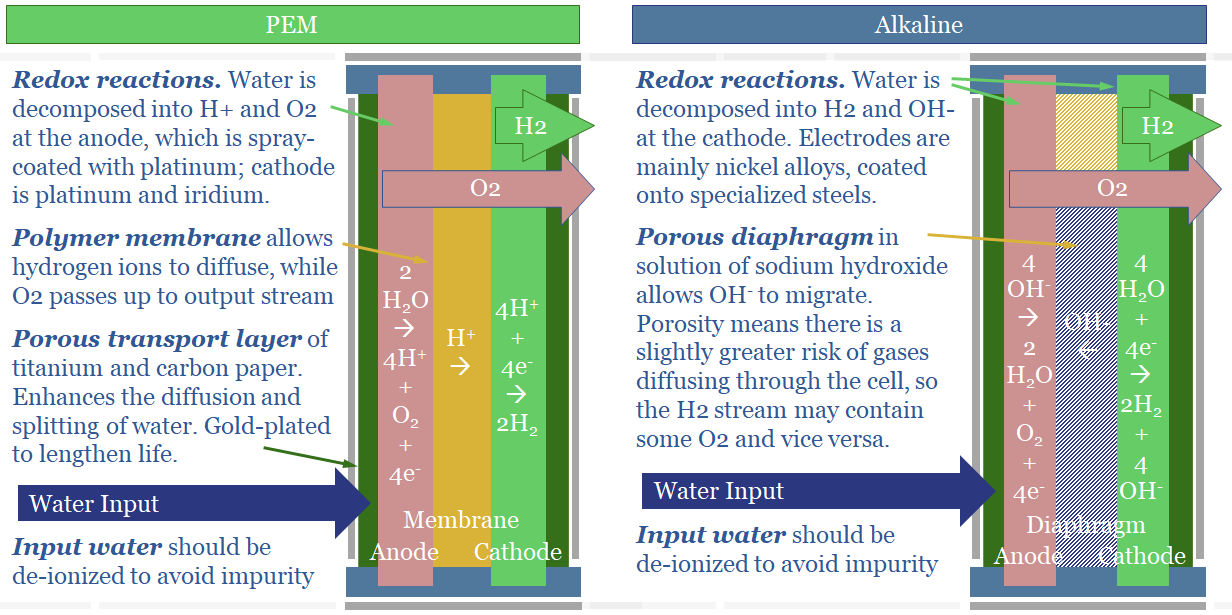

Green hydrogen: alkaline versus PEM electrolysers?

This note spells out the top ten differences between alkaline and PEM electrolysers. The lowest cost green hydrogen will likely come from alkaline electrolysers in nuclear/hydro-heavy grids. If hydrogen is to back up wind/solar, it would likely require PEMs.

-

How does methane increase global temperature?

How does methane increase global temperature? This article outlines the theory. The formulae suggest that 0.7 W/m² of radiative forcing and 0.35°C of warming have occurred due to methane leaks, which is 20-30% of the total. There are controversies and uncertainties. But ramping gas is still heavily justified in a practical roadmap to net zero.
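
The arithmetic behind these figures can be sketched as follows. This is a minimal illustration, not the article's own model. It assumes that "20-30% of the total" refers to methane's share of total warming, which is one reading of the teaser, and the function names are hypothetical.

```python
# Figures quoted in the teaser above.
METHANE_FORCING_W_M2 = 0.7   # radiative forcing attributed to methane
METHANE_WARMING_C = 0.35     # warming attributed to methane


def implied_sensitivity(warming_c: float, forcing_w_m2: float) -> float:
    """Warming per unit of radiative forcing, in degrees C per W/m2."""
    return warming_c / forcing_w_m2


def implied_total_warming(warming_c: float, share: float) -> float:
    """Total warming implied if `warming_c` is `share` of the total.

    Assumption: the 20-30% share applies to warming, not to forcing.
    """
    return warming_c / share


sensitivity = implied_sensitivity(METHANE_WARMING_C, METHANE_FORCING_W_M2)
total_if_30pct = implied_total_warming(METHANE_WARMING_C, 0.30)
total_if_20pct = implied_total_warming(METHANE_WARMING_C, 0.20)

print(f"Implied sensitivity: {sensitivity:.2f} C per W/m2")
print(f"Implied total warming: {total_if_30pct:.2f} to {total_if_20pct:.2f} C")
```

Under that reading, the quoted numbers imply roughly 0.5°C of warming per W/m² of forcing.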

-

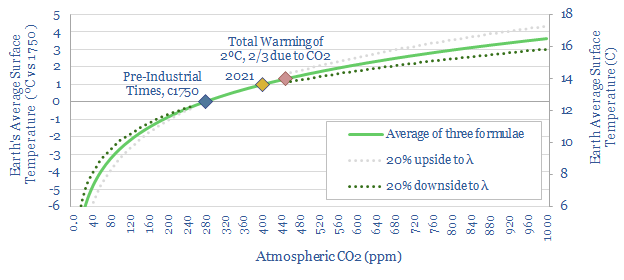

How does CO2 increase global temperature?

The purpose of this short article is to explain mathematical formulas linking global temperature to the concentration of CO2 in the atmosphere. In other words, our goal is to settle upon a simple equation, explaining how CO2 causes global warming. In turn, this is why our roadmap to net zero aims to reach ‘net zero’…
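
As a rough illustration of what such an equation can look like, the sketch below uses the widely cited simplified expression ΔF = 5.35 × ln(C/C0) W/m² (Myhre et al., 1998). This is an assumption on our part and may differ from the equation the article settles on. The 280 ppm baseline is the commonly used pre-industrial concentration, and the default sensitivity of 0.5°C per W/m² is simply the ratio implied by the methane figures quoted elsewhere on this page (0.35°C / 0.7 W/m²). All function and parameter names are hypothetical.

```python
import math


def co2_forcing_w_m2(c_ppm: float, c0_ppm: float = 280.0) -> float:
    """Radiative forcing from CO2, using the simplified logarithmic form.

    Assumption: dF = 5.35 * ln(C / C0), with C0 = 280 ppm as the
    pre-industrial baseline. Not necessarily the article's equation.
    """
    if c_ppm <= 0 or c0_ppm <= 0:
        raise ValueError("concentrations must be positive")
    return 5.35 * math.log(c_ppm / c0_ppm)


def warming_c(forcing_w_m2: float, sensitivity_c_per_w_m2: float = 0.5) -> float:
    """Linear warming response to forcing.

    Assumption: 0.5 C per W/m2, an illustrative value only.
    """
    return sensitivity_c_per_w_m2 * forcing_w_m2


# Example: forcing and warming for a doubling of CO2 from the baseline.
doubling_forcing = co2_forcing_w_m2(560.0)
doubling_warming = warming_c(doubling_forcing)
print(f"Forcing at 560 ppm: {doubling_forcing:.2f} W/m2")
print(f"Warming at 560 ppm: {doubling_warming:.2f} C")
```

The logarithm is the key design point: each doubling of concentration adds the same increment of forcing, so the first 280 ppm added above the baseline has the same effect as the next 560 ppm.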

-

Energy transition: a six page summary from summer-2022?

“It provokes the desire, but it takes away the performance.” That is the porter’s view of alcohol in Act II Scene III of Macbeth. It is also our view of 2022’s impact on the energy transition. Our resultant outlook is captured in six concise pages, published in the Walter Scott Journal in Summer-2022.

-

Energy transition: five reflections after 3.5 years?

This video covers our top five reflections after 3.5 years of running a research firm focused on energy transition. The greatest value is found in low-cost decarbonization technologies, resource bottlenecks, hidden nuances and bottom-up opportunities.

Content by Category

- Batteries (87)

- Biofuels (44)

- Carbon Intensity (49)

- CCS (63)

- CO2 Removals (9)

- Coal (38)

- Company Diligence (92)

- Data Models (828)

- Decarbonization (159)

- Demand (110)

- Digital (58)

- Downstream (44)

- Economic Model (202)

- Energy Efficiency (75)

- Hydrogen (63)

- Industry Data (277)

- LNG (48)

- Materials (81)

- Metals (77)

- Midstream (43)

- Natural Gas (146)

- Nature (76)

- Nuclear (23)

- Oil (164)

- Patents (38)

- Plastics (44)

- Power Grids (126)

- Renewables (149)

- Screen (114)

- Semiconductors (30)

- Shale (51)

- Solar (67)

- Supply-Demand (45)

- Vehicles (90)

- Wind (43)

- Written Research (350)