-

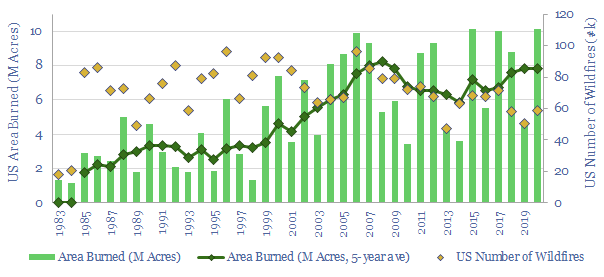

Forest fires: what climate conclusions?

Global CO2 from wildfires could be around 25% as large as anthropogenic CO2, while US wildfires reached an all-time record in 2020. Hence this note reconsiders nature-based solutions. Hands-off conservation may do more harm than good. Sustainable forestry, carbon-negative materials, biochar and biomass energy also look more favorable.

-

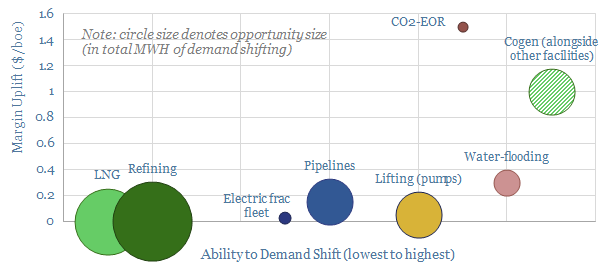

Renewables: can oil and gas assets “demand shift”?

Industrial facilities that can shift electricity demand to coincide with cheap renewables can effectively start printing money as renewables get over-built. Oil and gas assets are generally less able to demand-shift than other industries. But this note outlines the best opportunities, capable of uplifting cash margins by 3-10%.

-

Carbon accounting: philosophical investigations?

Current carbon accounting frameworks are not entirely helpful: simplistic rules can lack comparability, completeness and generality, and they can be gamed. We prefer granular carbon accounting at the process- or asset-level, which can be compared to competitors and counterfactuals.

-

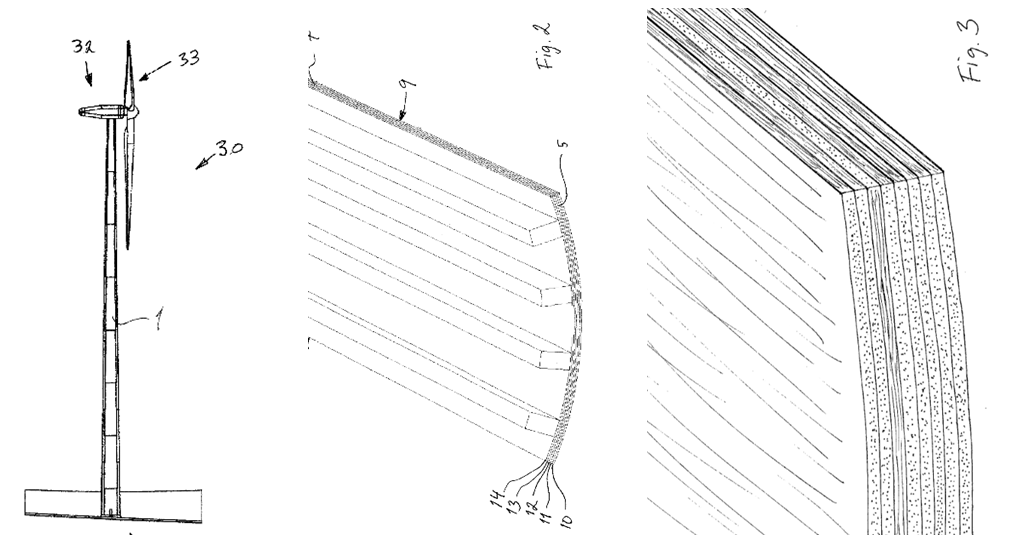

Wooden wind turbines?

Carbon-negative construction materials derived from wood could be used to deflate the levelized costs of a wind turbine by 2.5-10%, while sequestering around 175 tons of CO2-equivalents per turbine. The opportunity is being progressed by Modvion and Vestas, as discussed in this short note.

-

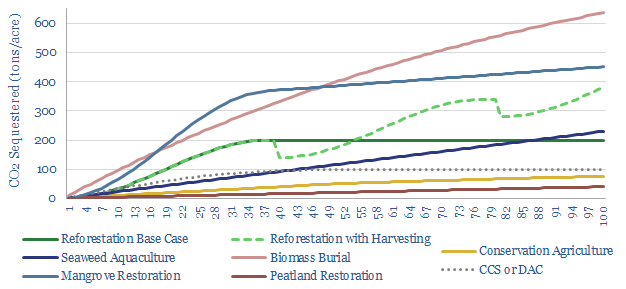

One hundred years of carbon offsetting?

An acre of land can absorb 40-800 tons of CO2 over the course of a century. Today’s note ranks different options for CO2 sequestration over 100-year timeframes. Active management techniques and blue carbon ecosystems are most effective.
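As a rough illustration of the range above, the century totals can be converted into average annual rates. This is a minimal sketch that assumes sequestration is spread evenly across the 100 years, which is a simplification not stated in the note; the function name `annual_rate` is hypothetical.

```python
# Assumption: CO2 uptake is spread evenly over the century (a simplification).
YEARS = 100
low_total, high_total = 40, 800  # tons of CO2 per acre over 100 years, from the text


def annual_rate(total_tons, years=YEARS):
    """Average tons of CO2 absorbed per acre per year."""
    return total_tons / years


low_rate = annual_rate(low_total)
high_rate = annual_rate(high_total)
print(f"{low_rate:.1f}-{high_rate:.1f} tons of CO2 per acre per year")
```

On these assumptions, the range works out to roughly 0.4-8 tons of CO2 per acre per year.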

-

Renewable Energy Certificates?

Renewable Energy Certificates are legal contracts where all parties agree to pretend that gas and coal electrons are wind and solar electrons and vice versa. This note discusses the merits, drawbacks, and some strange implications for energy analysts. Our view is that nature-based carbon credits may come to be seen as ‘more valid’.

-

The laws of thermodynamics: what role in the energy transition?

The laws of thermodynamics are often framed in such arcane terms that they are overlooked. This note outlines these three fundamental laws of physics and why they matter for the energy transition. Renewables and efficiency gains are prioritized, while hydrogen scores poorly.

-

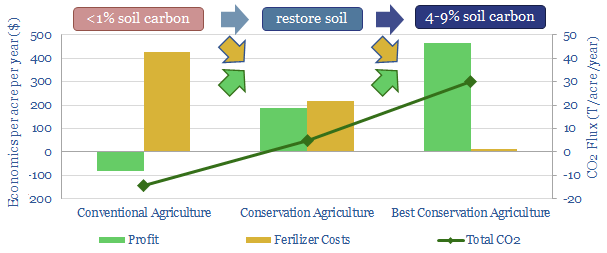

Farming carbon into soils: a case study?

“As a no till farmer, I’m doing my part [on climate change… if the carbon market grows] farmers will change their practices”. These were the comments of an Iowa farmer who has now commercialized $330,000 of carbon credits from conservation agriculture. The case study shows how the carbon market is causing CO2-farming to expand and…

-

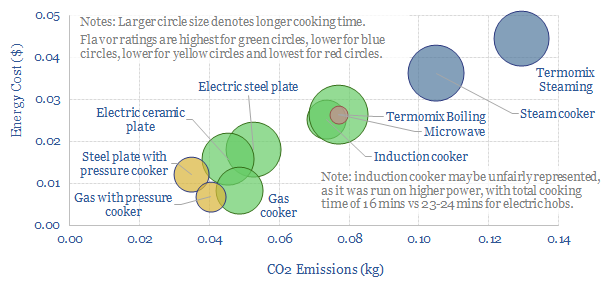

Illustrating industrial energy efficiency in the context of cooking?

Industrial energy efficiency is an overwhelmingly complex concept. Hence economy-wide CO2 prices may be the most effective way to maximize efficiency gains. This note illustrates the argument in the context of ‘home cooking’, a process technology with which most readers are likely familiar.

-

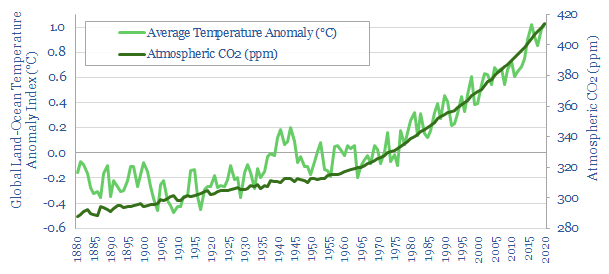

Global average temperature data?

Global average surface temperatures have risen by 1.2-1.3C since pre-industrial times and continue rising at 0.02-0.03C per year, according to most datasets. This note assesses their methodologies and controversies. Uncertainty in the data is likely higher than admitted. But the strong upward warming trend is robust.
