The costs to power a real-world load (e.g., a data center) with solar+gas will often be higher than with a standalone gas CCGT in the US today. But what about internationally, or in the future? This 9-page note shows how solar deflation and load shifting can boost solar to >40% of future grids.

Solar deflation has been amazing. The costs of utility-scale projects have fallen by 75% in the past decade, via rising module efficiency and manufacturing scale, and we see costs falling to $400/kW and 1-3c/kWh in the future (per pages 2-3).
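
As a rough illustration of how a capex figure maps onto a levelized cost, the sketch below annualizes capex with a capital recovery factor and divides by annual generation. Only the $400/kW capex is taken from the text above. The discount rate, project life, capacity factor and fixed opex are placeholder assumptions chosen for illustration, not inputs from the note, and the function names are hypothetical.

```python
HOURS_PER_YEAR = 8760


def capital_recovery_factor(rate: float, years: int) -> float:
    """Annual payment per $1 of upfront capex at a given discount rate and life."""
    growth = (1 + rate) ** years
    return rate * growth / (growth - 1)


def lcoe_cents_per_kwh(
    capex_per_kw: float,
    capacity_factor: float,
    rate: float,
    years: int,
    fixed_opex_per_kw_yr: float = 0.0,
) -> float:
    """Levelized cost in c/kWh generated, per kW of installed capacity."""
    annual_cost = capex_per_kw * capital_recovery_factor(rate, years) + fixed_opex_per_kw_yr
    annual_kwh = HOURS_PER_YEAR * capacity_factor
    return 100 * annual_cost / annual_kwh


# $400/kW is the future capex cited above; every other input is an assumption.
solar_lcoe = lcoe_cents_per_kwh(
    capex_per_kw=400,
    capacity_factor=0.25,     # assumed
    rate=0.07,                # assumed discount rate
    years=30,                 # assumed project life
    fixed_opex_per_kw_yr=10,  # assumed
)
print(f"Illustrative solar LCOE: {solar_lcoe:.2f} c/kWh")
```

Under these placeholder inputs the result falls inside the 1-3c/kWh range quoted above, but it is highly sensitive to the assumed capacity factor and discount rate.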

However, the LCOEs quoted above are on a partial electricity basis only. They assume an idealized offtaker that happens to consume power always, and only, when a utility-scale solar project happens to generate. That generation in turn features hour-by-hour, day-by-day, season-by-season and even year-by-year volatility.

System costs depend on the system, which can have different load requirements and different abilities to load shift, ranging from loads at, say, data centers to e-LNG plants.

The LCOEs for energizing a 100MW round-the-clock load, with 70% gas and 30% solar (i.e., solar+gas) are compared and contrasted with a standalone CCGT, in the case study on pages 4-5.

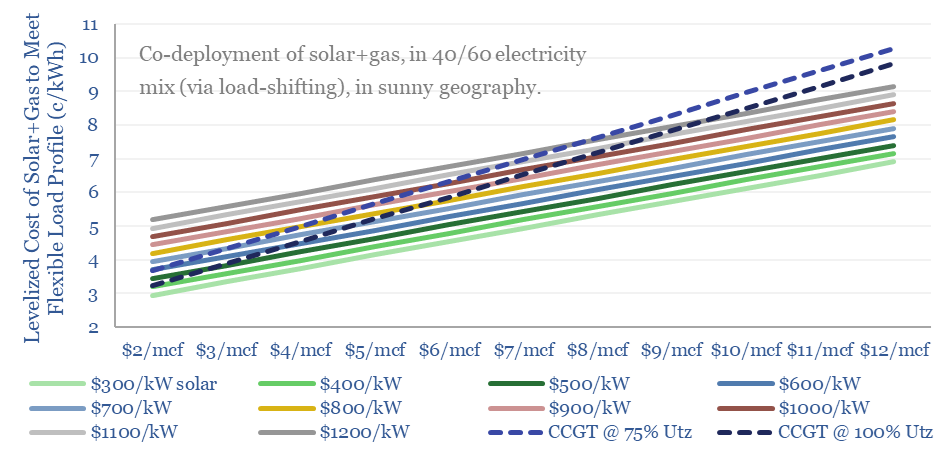

The LCOEs for energizing a more flexible load, with 60% gas and 40% solar (solar+gas) are compared and contrasted with a standalone CCGT, in the case study on pages 6-7.

The numbers depend on gas prices and solar capex, and vary interestingly in different geographies and contexts.
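
One way to see why gas prices and solar capex matter so much is a deliberately simplified comparison, sketched below. It assumes the CCGT must be built at full size in both cases (so its fixed cost cancels out), that solar only displaces fuel, and that no solar output is curtailed. This is not the model used in the note's case studies. The heat rate, capacity factor, discount rate, gas prices and capex values are all hypothetical placeholders.

```python
HOURS_PER_YEAR = 8760


def crf(rate: float, years: int) -> float:
    """Capital recovery factor: annual payment per $1 of upfront capex."""
    growth = (1 + rate) ** years
    return rate * growth / (growth - 1)


def solar_lcoe(capex_per_kw: float, capacity_factor: float,
               rate: float = 0.07, years: int = 30) -> float:
    """Solar cost in c/kWh generated, capex only (opex ignored for brevity)."""
    return 100 * capex_per_kw * crf(rate, years) / (HOURS_PER_YEAR * capacity_factor)


def fuel_cost(gas_price_per_mmbtu: float, heat_rate_mmbtu_per_mwh: float = 6.5) -> float:
    """CCGT fuel cost in c/kWh; the heat rate is an assumed round number."""
    return gas_price_per_mmbtu * heat_rate_mmbtu_per_mwh / 10  # $/MWh -> c/kWh


def hybrid_premium(solar_share: float, gas_price: float, solar_capex: float,
                   capacity_factor: float = 0.25) -> float:
    """Cost of solar+gas minus cost of a standalone CCGT, in c/kWh of load.

    The CCGT's fixed cost appears in both cases and cancels, so the sign
    depends only on whether solar is cheaper than the fuel it displaces.
    """
    return solar_share * (solar_lcoe(solar_capex, capacity_factor) - fuel_cost(gas_price))


# Hypothetical scenarios: low vs high gas price ($/mmbtu), high vs low solar capex ($/kW).
for gas_price in (3, 10):
    for solar_capex in (1000, 400):
        premium = hybrid_premium(0.30, gas_price, solar_capex)
        print(f"gas ${gas_price}/mmbtu, solar ${solar_capex}/kW: {premium:+.2f} c/kWh")
```

In this sketch the hybrid only carries a premium when gas is cheap and solar capex is high. Adding curtailment or backup costs would raise the effective cost of solar and shift the breakeven.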

The “optimal” share of solar to meet real-world loads is discussed on page 8. And maybe in the future, demand sources will scale up in the energy system that really are designed to absorb all and only solar output profiles, per our sci-fi fantasies here.

Our long-term forecasts for global electricity and global useful energy see both solar and gas gaining share, due to the economics above.