-

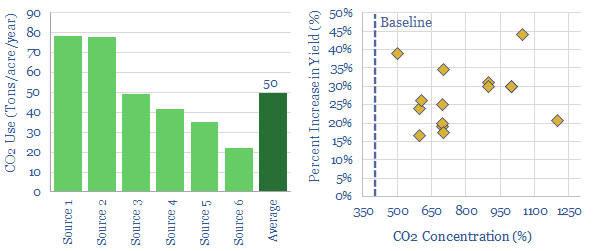

Greenhouse gas: use CO2 in agriculture?

Enriching CO2 levels in greenhouses can improve crop yields by c30%. It costs $4-60/ton to supply this CO2, while $100-500/ton of value is unlocked. The challenge is scale: the opportunity is limited to 50MTpa globally. Around 50Tpa of CO2 is supplied to each acre of greenhouses, but only c10% of it is sequestered.
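The per-acre economics follow from the figures quoted above. The short sketch below multiplies the cost and value ranges by the 50Tpa supplied to each acre and applies the c10% sequestration share. Treating these as fixed constants, and the function name `per_acre_economics`, are illustrative assumptions, not a model from the note.

```python
# Illustrative per-acre arithmetic using the ranges quoted in the greenhouse note.
# Treating the quoted figures as fixed constants is an assumption for this sketch.

CO2_PER_ACRE_TPA = 50          # tons of CO2 supplied per acre of greenhouse per year
SEQUESTERED_SHARE = 0.10       # c10% of the supplied CO2 ends up sequestered
SUPPLY_COST_PER_TON = (4, 60)  # $/ton to supply the CO2 (low, high)
VALUE_PER_TON = (100, 500)     # $/ton of value unlocked (low, high)


def per_acre_economics(co2_tpa: float = CO2_PER_ACRE_TPA) -> dict:
    """Return annual per-acre supply cost and value as (low, high) $ ranges,
    plus tons of CO2 sequestered per acre per year."""
    cost = tuple(c * co2_tpa for c in SUPPLY_COST_PER_TON)
    value = tuple(v * co2_tpa for v in VALUE_PER_TON)
    sequestered = co2_tpa * SEQUESTERED_SHARE
    return {"cost_usd": cost, "value_usd": value, "sequestered_t": sequestered}


if __name__ == "__main__":
    print(per_acre_economics())
```

On these inputs, each acre costs $200-3,000 per year to supply, unlocks $5,000-25,000 of value, and sequesters about 5 tons of CO2.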

-

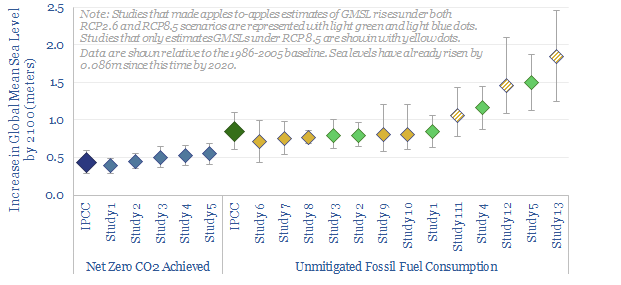

Sea levels: what implications amidst climate change?

Global mean sea levels will rise materially by 2100, irrespective of future emissions pathways. This note contains our top ten facts for decision makers, covering the numbers, the negative consequences and the potential mitigation opportunities.

-

US shale: our outlook in the energy transition?

This presentation, given at a recent investor conference, sets out our outlook for the US shale industry in the 2020s. It covers the importance of shale oil supplies in balancing future oil markets, our outlook for 5% annual productivity growth, and the opportunity for carbon-neutrality to attract capital.
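To show why a 5% annual productivity gain matters over a decade, the sketch below compounds it year by year. The ten-year horizon and the baseline index of 1.0 are illustrative assumptions; only the 5% rate comes from the presentation summary.

```python
# Sketch: how a constant annual productivity gain compounds over time.
# The 5% rate is from the summary above; the 10-year horizon and the
# baseline index of 1.0 are illustrative assumptions.

def compound_index(rate: float, years: int, base: float = 1.0) -> list[float]:
    """Return the productivity index for years 0..years at a constant growth rate."""
    return [base * (1 + rate) ** t for t in range(years + 1)]


if __name__ == "__main__":
    for year, value in enumerate(compound_index(0.05, 10)):
        print(f"year {year:2d}: {value:.3f}")
```

Compounded over ten years, 5% per year lifts productivity by roughly 63% (an index of c1.63), rather than the 50% a simple sum would suggest.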

-

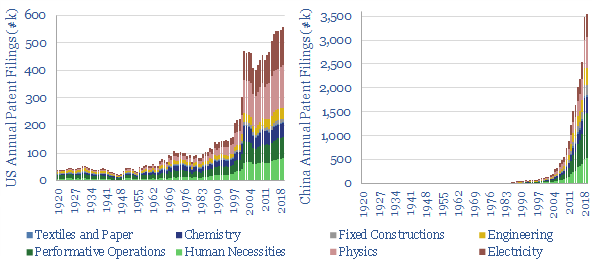

Rise of China: the battle is trade, the war is technology?

This short, 7-page note finds that China’s pace of technology development is now 6x faster than the US, as measured across 40M patent filings dating back to 1920. The implications are frightening. They raise questions over the Western world’s long-term competitiveness, especially in manufacturing, and over the consequences of decarbonization policies that hurt competitiveness.

-

The green hydrogen economy: a summary?

We have looked extensively for economic hydrogen opportunities to advance the energy transition. Pessimistically stated, we fear that the ‘green hydrogen economy’ may fail to be green, fail to deliver hydrogen, and fail to be economical. This short note summarizes our work.

-

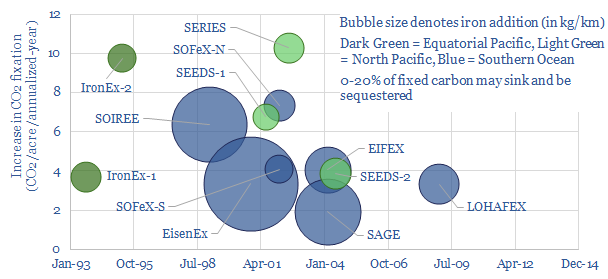

Carbon offsets: ocean iron fertilization?

Nature-based solutions to climate change could extend beyond the world’s land (37bn acres) and into the world’s oceans (85bn acres). This short article explores one option, ocean iron fertilization, based on technical papers. While the best studies indicate a vast opportunity, uncertainty remains high on CO2 absorption, sequestration, scale, cost and side-effects. Unhelpfully,…

-

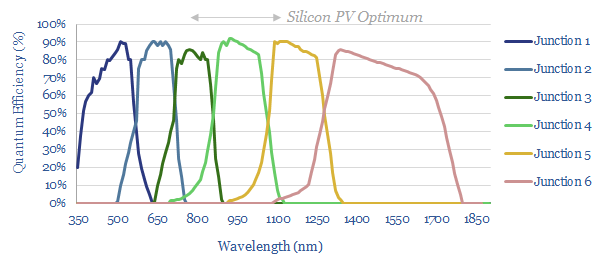

Solar energy: is 50% efficiency now attainable?

A new record was published in 2020: 47.1% conversion efficiency in a solar cell, using “a monolithic, series-connected, six-junction inverted metamorphic structure under 143 suns concentration”. Our goal in this note is to explain the achievement and its implications.
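To put the record in physical terms, the sketch below converts efficiency and concentration into electrical output per square metre of cell area. It assumes the common convention that "1 sun" is 1,000 W/m2; it ignores optical losses in the concentrator and refers to cell area, not collector area. The function name is hypothetical.

```python
# Sketch: electrical output per m2 of *cell* area for a concentrator solar cell.
# Assumption: "1 sun" = 1,000 W/m2 (the usual standard-test irradiance).
# Optical losses in the concentrating optics are ignored.

ONE_SUN_W_PER_M2 = 1_000.0


def cell_output_w_per_m2(efficiency: float, suns: float) -> float:
    """Output per m2 of cell area = efficiency x concentration x 1-sun irradiance."""
    return efficiency * suns * ONE_SUN_W_PER_M2


if __name__ == "__main__":
    out = cell_output_w_per_m2(0.471, 143)
    print(f"{out / 1_000:.1f} kW per m2 of cell area")
```

On these assumptions, each square metre of cell delivers c67kW, which is why multi-junction cells are paired with concentrating optics so that only a small cell area is needed.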

-

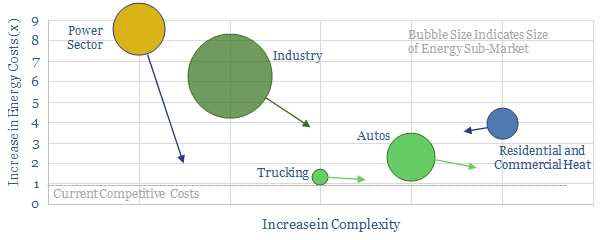

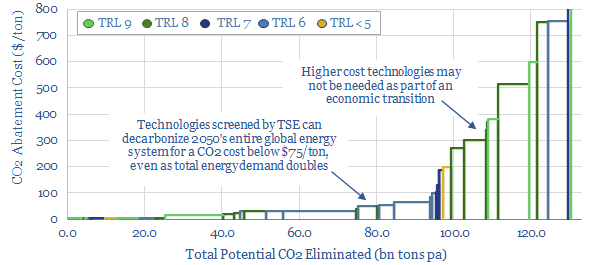

Energy Technologies and Energy Transition

Below is an overview of our written research into energy technologies and the energy transition, organized by topic to help you navigate our work. The summary captures 1,000 pages of output, across 50 research notes and 40 online articles since April 2019.

Energy Transition Technologies

The most economic route to ‘net zero’ is to ramp renewables…

-

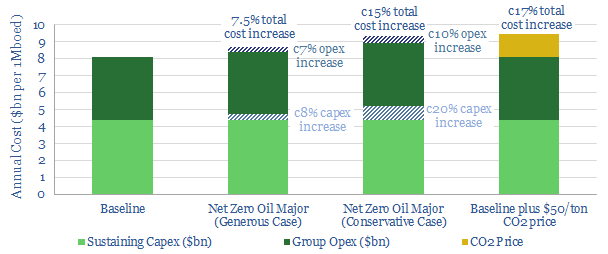

Net zero Oil Majors: worth the cost?

A typical Oil Major can uplift its valuation by 50% by targeting net zero. But how much will it cost? We find that total costs are 7.5-15% higher over the next decade. This investment is justified: a $50/ton CO2 price would otherwise have inflated costs by 17%.
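The comparison above can be made explicit. The sketch below subtracts the net-zero cost uplift from the cost inflation under a $50/ton CO2 price; treating both as percentage uplifts on the same cost base is a simplifying assumption, and the function name is hypothetical.

```python
# Sketch comparing the two cost uplifts quoted above (percent of total costs).
# Assumption: both uplifts apply to the same underlying cost base.

NET_ZERO_UPLIFT_PCT = (7.5, 15.0)  # extra total costs from targeting net zero, next decade
CARBON_PRICE_UPLIFT_PCT = 17.0     # cost inflation from a $50/ton CO2 price otherwise


def avoided_cost_pct(net_zero_pct: float,
                     carbon_price_pct: float = CARBON_PRICE_UPLIFT_PCT) -> float:
    """Percentage points of cost avoided by decarbonizing instead of paying the CO2 price."""
    return carbon_price_pct - net_zero_pct


if __name__ == "__main__":
    for pct in NET_ZERO_UPLIFT_PCT:
        print(f"net-zero uplift {pct}%: {avoided_cost_pct(pct):.1f}pp below the CO2-price case")
```

On these figures, targeting net zero leaves total costs 2-9.5 percentage points lower than absorbing the CO2 price.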

-

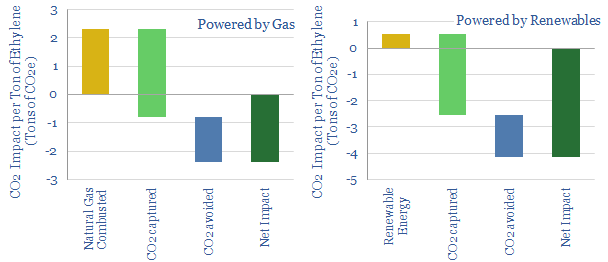

Carbon-negative plastics: a breakthrough?

This short note describes a potential, albeit early-stage, breakthrough that converts waste CO2 into polyethylene, based on a recent TOTAL patent. We estimate the process could sequester a net 0.8T of CO2 per ton of polyethylene. This matters because the world consumes c140MTpa of PE, 30% of the global plastics market, whose cracking and polymerisation emits 1.6T…
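A quick way to gauge the scale is to multiply the net sequestration per ton by global PE demand. The sketch below does this; assuming that all, or any given share, of global PE switches to the CO2-based route is purely illustrative, and the function name is hypothetical.

```python
# Sketch: theoretical CO2 sequestration if a share of global polyethylene were
# made via the CO2-to-PE route. Inputs are the figures quoted above; the
# conversion share is an illustrative assumption.

NET_CO2_PER_TON_PE = 0.8     # tons of CO2 sequestered per ton of PE (our estimate)
GLOBAL_PE_MTPA = 140.0       # c140MTpa of PE consumed globally
PE_SHARE_OF_PLASTICS = 0.30  # PE is c30% of the global plastics market


def sequestration_mtpa(pe_mtpa: float, share_converted: float = 1.0) -> float:
    """Million tons of CO2 per year sequestered for a given share of PE converted."""
    return pe_mtpa * share_converted * NET_CO2_PER_TON_PE


if __name__ == "__main__":
    print(f"upper bound: {sequestration_mtpa(GLOBAL_PE_MTPA):.0f} MTpa of CO2")
    print(f"implied plastics market: {GLOBAL_PE_MTPA / PE_SHARE_OF_PLASTICS:.0f} MTpa")
```

Even as a theoretical ceiling, converting all PE would sequester c112MTpa of CO2; the same inputs imply a total plastics market of c470MTpa.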
