-

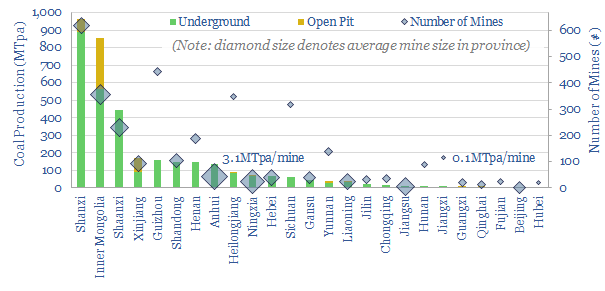

All the coal in China: our top ten charts?

Chinese coal provides 15% of the world’s energy, equivalent to four Saudi Arabias’ worth of oil. Global energy markets may become 10% under-supplied if this output plateaus, per our ‘net zero’ scenario. Alternatively, might China ramp up its coal output, especially as Europe bids harder for renewables and LNG post-Russia? This note presents our ‘top ten’ charts.

-

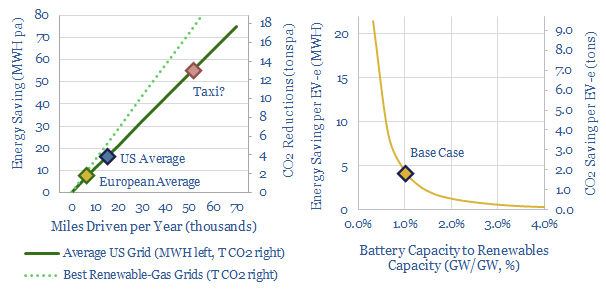

Battle of the batteries: EVs vs grid storage?

Who will ‘win’ the intensifying competition for finite lithium-ion batteries, in a world hindered by shortages of lithium, graphite, nickel and cobalt in 2022-25? Today’s note argues EVs should outcompete grid-scale storage by a factor of 2-4x.

-

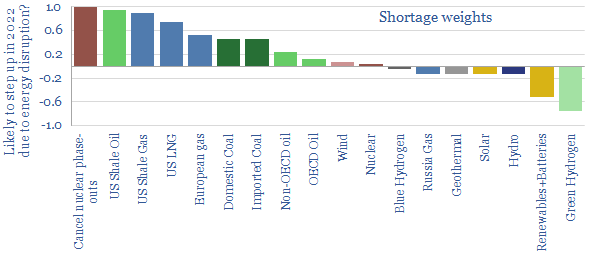

Energy shortages: medieval horrors?

Energy shortages are gripping the world in 2022. The 1970s are one analogy. But the 14th century was truly medieval. Today’s note reviews the top ten features of 14th-century life. This is not a romantic portrayal of pre-industrial civilization, or of some simpler time “before fossil fuels”. It is a horror show of deficiencies. Avoiding energy shortages should be a…

-

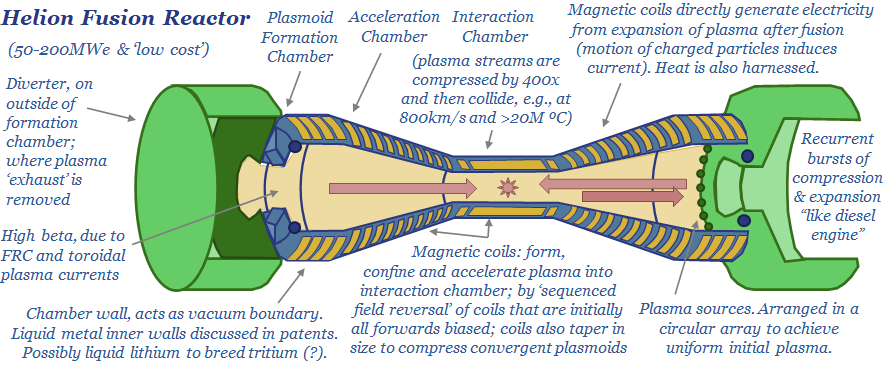

Helion: linear fusion breakthrough?

Helion is developing a linear fusion reactor, which entirely rethinks the technology (like a ‘Tesla of nuclear fusion’). It could achieve costs of 1-6c/kWh, be deployed at 50-200MWe modular scale and overcome many of the challenges of tokamaks. Progress so far includes reaching 100M°C and a $2.2bn fund-raise, the largest of any private fusion company to date. This…

-

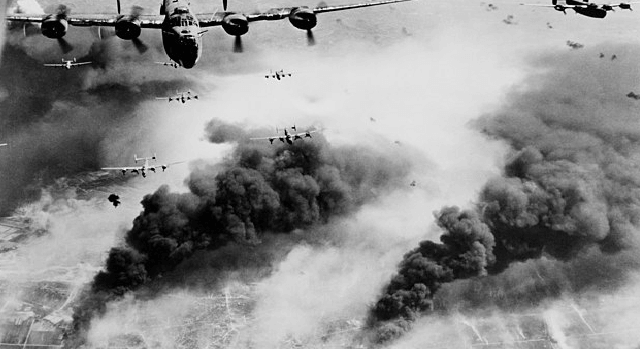

Oil and War: ten conclusions from WWII?

The Second World War was decided by oil. Each country’s war-time strategy was dictated by oil’s availability, its quality and attempts to secure more of it, including by rationing non-critical uses. Ultimately, halting the oil meant halting the war. Today’s short note outlines our top ten conclusions from reviewing the history.

-

Russia conflict: pain and suffering?

This 13-page note presents 10 hypotheses on Russia’s horrific conflict. Energy supplies will very likely be disrupted, as Putin now needs to break the will not only of Ukraine but also of the West. Results include energy rationing and economic pain. Climate goals get shelved in this war-time scramble. Pragmatism, nuclear and LNG emerge from the ashes.

-

Energy transition: hierarchy of needs?

This gloomy video explores growing fears that the energy transition could ‘fall apart’ in the mid-to-late 2020s, due to energy shortages and geopolitical discord. Constructive solutions will include debottlenecking resource constraints, efficiency technologies and natural gas pragmatism.

-

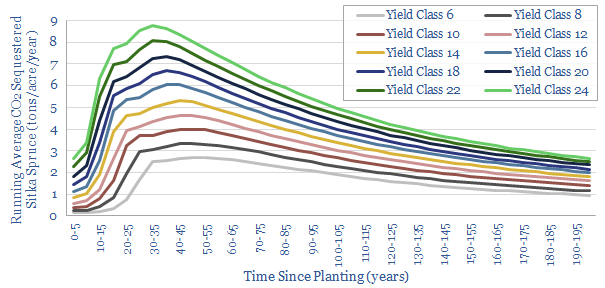

Sitka spruce: our top ten facts?

Sitka spruce is a fast-growing conifer, which now dominates UK forestry, and sequesters net CO2 up to 2x faster than mixed broadleaves. It can absorb 6-10 tons of CO2 per acre per year, at Yield Classes 16-30+, on 40 year rotations. This short note lays out our top ten conclusions, including benefits, drawbacks and implications.
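The link between Yield Class and CO2 uptake can be sketched with a short calculation. Yield Class is read here as cubic metres of stemwood grown per hectare per year; the wood density, carbon fraction and whole-tree expansion factor below are illustrative assumptions, not figures from the note, and the function name is hypothetical.

```python
# Minimal sketch: approximate CO2 uptake from a forestry Yield Class.
# ASSUMPTIONS (illustrative, not from the note): wood density, carbon
# fraction and expansion factor defaults below.

HA_PER_ACRE = 1 / 2.471          # 1 hectare = 2.471 acres
CO2_PER_C = 44.01 / 12.011       # mass of CO2 formed per unit mass of carbon


def co2_tons_per_acre_year(
    yield_class: float,
    basic_density: float = 0.36,    # assumed dry tonnes per m3 of stemwood
    carbon_fraction: float = 0.5,   # assumed carbon share of dry biomass
    expansion_factor: float = 1.3,  # assumed whole-tree (branches, roots) / stem ratio
) -> float:
    """Yield Class is taken as m3 of stemwood per hectare per year."""
    stem_dry_t_per_ha = yield_class * basic_density
    tree_dry_t_per_ha = stem_dry_t_per_ha * expansion_factor
    co2_t_per_ha = tree_dry_t_per_ha * carbon_fraction * CO2_PER_C
    return co2_t_per_ha * HA_PER_ACRE  # convert per-hectare to per-acre


if __name__ == "__main__":
    for yc in (16, 24, 30):
        print(f"YC {yc}: {co2_tons_per_acre_year(yc):.1f} t CO2/acre/yr")
```

Under these assumptions, Yield Classes 16 to 30 land at roughly 5.5 to 10.4 tons of CO2 per acre per year, in the same range as the note. The sketch ignores thinning losses, soil carbon and the fate of harvested wood, all of which shift the net figure.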

-

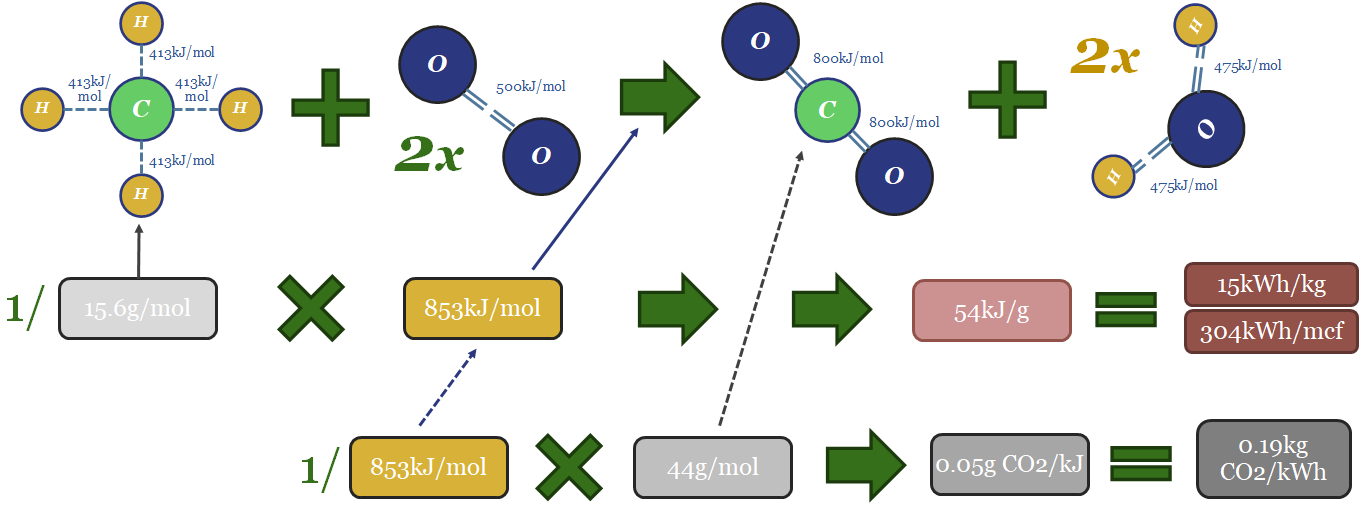

Coal versus gas: explaining the CO2 intensity?

Coal and gas each provide c25% of global primary energy. But gas’s CO2 intensity is 50% lower than coal’s. This short note explains the different carbon intensities from first principles, including bond enthalpies, production processes and efficiency factors.
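The combustion-chemistry step of that first-principles argument can be sketched numerically. This assumes idealized fuels (methane as a proxy for gas, pure carbon as a proxy for coal) and textbook standard enthalpies of combustion on a higher-heating-value basis; it leaves out the production-process and efficiency factors the note also covers. In methane, much of the heat comes from oxidizing hydrogen to water, which releases energy without producing CO2.

```python
# Minimal sketch: CO2 released per GJ of combustion heat for idealized fuels.
# ASSUMPTION: methane stands in for natural gas, graphite for coal.

M_CO2_G_PER_MOL = 44.01

# Standard enthalpies of combustion at 25 C, HHV basis (liquid water product),
# as magnitudes in kJ per mol of fuel. Each reaction yields one mol of CO2.
FUELS = {
    "methane (gas proxy)": 890.3,   # CH4 + 2 O2 -> CO2 + 2 H2O
    "graphite (coal proxy)": 393.5,  # C + O2 -> CO2
}


def kg_co2_per_gj(heat_kj_per_mol: float, mol_co2_per_mol_fuel: float = 1.0) -> float:
    """CO2 mass emitted per GJ of heat released."""
    g_co2 = mol_co2_per_mol_fuel * M_CO2_G_PER_MOL
    # grams per kJ equals kg per MJ; multiply by 1000 for kg per GJ
    return g_co2 / heat_kj_per_mol * 1000.0


if __name__ == "__main__":
    intensity = {name: kg_co2_per_gj(h) for name, h in FUELS.items()}
    for name, value in intensity.items():
        print(f"{name}: {value:.1f} kg CO2/GJ")
    gas = intensity["methane (gas proxy)"]
    coal = intensity["graphite (coal proxy)"]
    print(f"gas emits {1 - gas / coal:.0%} less CO2 per GJ than pure carbon")
```

This prints about 49 kg/GJ for methane against about 112 kg/GJ for pure carbon. Real coal contains some hydrogen, moisture and ash, so pure carbon is only an upper-bound proxy for coal's intensity.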

-

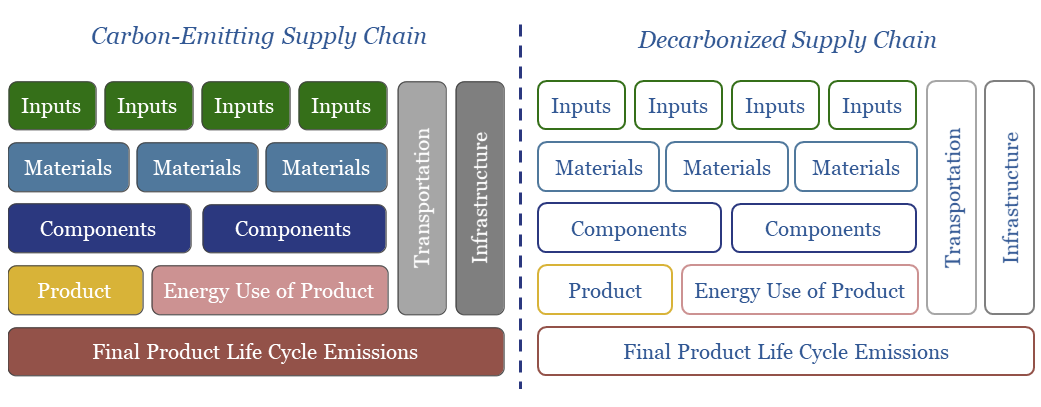

Decarbonized supply chains: first invent the universe?

“If you wish to make an apple pie from scratch, first you must invent the universe”. This captures the challenge of decarbonizing complex global supply chains. This note argues that each company in a supply chain should drive its own Scope 1 and 2 CO2 emissions towards zero on a net basis. Resulting products can be described…
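Why per-company net zero propagates down a supply chain can be sketched as a recursive roll-up: a product's embodied CO2 is the producer's own net Scope 1 and 2 emissions plus the embodied CO2 of the inputs it buys. The companies, quantities and emission figures below are hypothetical, chosen only to illustrate the logic, and the recursion assumes the supply chain has no cycles.

```python
# Minimal sketch: roll up embodied CO2 through a HYPOTHETICAL supply chain.
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Company:
    """One tier of a hypothetical supply chain; figures are per unit of output."""
    name: str
    scope12_t: float             # own Scope 1 and 2 emissions, tCO2 per unit
    offsets_t: float = 0.0       # removals/offsets netted against them, tCO2 per unit
    # (supplier, units of the supplier's product consumed per unit of output)
    inputs: list[tuple[Company, float]] = field(default_factory=list)


def embodied_co2(company: Company) -> float:
    """Net tCO2 per unit: own net Scope 1 and 2 plus inputs' embodied CO2.

    Assumes an acyclic supply chain (no company buys from itself, even indirectly).
    """
    own_net = company.scope12_t - company.offsets_t
    upstream = sum(qty * embodied_co2(supplier) for supplier, qty in company.inputs)
    return own_net + upstream


def build_chain(upstream_net_zero: bool) -> Company:
    """Hypothetical three-tier chain: miner -> mill -> carmaker."""
    miner = Company("miner", 0.2, 0.2 if upstream_net_zero else 0.0)
    mill = Company("mill", 1.8, 1.8 if upstream_net_zero else 0.0, [(miner, 1.6)])
    return Company("carmaker", 0.5, 0.5, [(mill, 1.2)])


if __name__ == "__main__":
    print(embodied_co2(build_chain(upstream_net_zero=True)))   # every tier nets to zero
    print(embodied_co2(build_chain(upstream_net_zero=False)))  # only the carmaker nets
```

When every tier nets its own Scope 1 and 2 to zero, the end product's embodied CO2 is zero by construction. When only the final company does, about 2.5 tCO2 per unit is still inherited from upstream.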

Content by Category

- Batteries (89)

- Biofuels (44)

- Carbon Intensity (49)

- CCS (63)

- CO2 Removals (9)

- Coal (38)

- Company Diligence (95)

- Data Models (840)

- Decarbonization (160)

- Demand (110)

- Digital (60)

- Downstream (44)

- Economic Model (205)

- Energy Efficiency (75)

- Hydrogen (63)

- Industry Data (279)

- LNG (48)

- Materials (82)

- Metals (80)

- Midstream (43)

- Natural Gas (149)

- Nature (76)

- Nuclear (23)

- Oil (164)

- Patents (38)

- Plastics (44)

- Power Grids (130)

- Renewables (149)

- Screen (117)

- Semiconductors (32)

- Shale (51)

- Solar (68)

- Supply-Demand (45)

- Vehicles (90)

- Wind (44)

- Written Research (355)