-

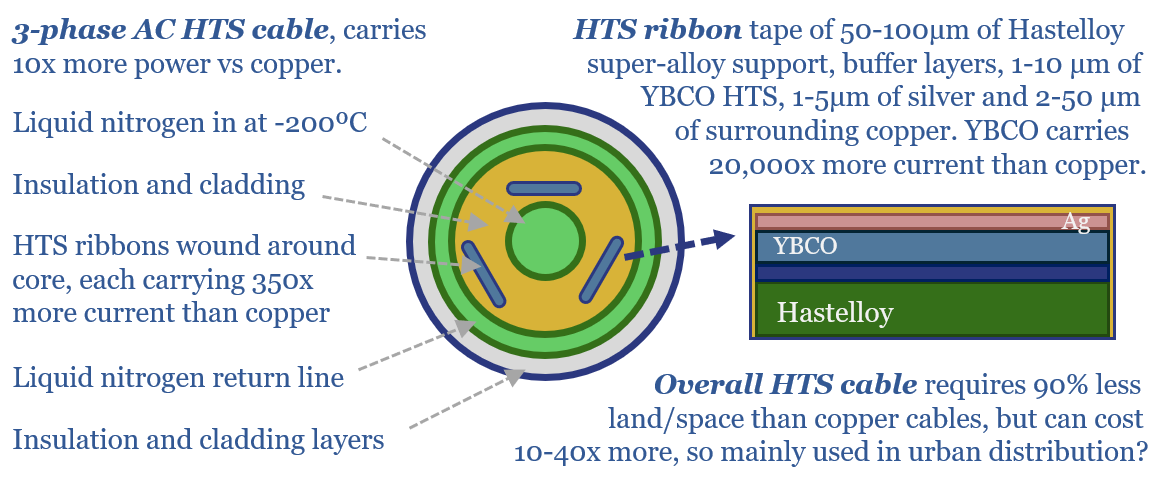

Superconductors: distribution class?

High-temperature superconductors (HTSs) carry 20,000x more current than copper, with almost no electrical resistance. But they must be cooled to -200°C, so costs have been moderately high across 35 past projects. This 16-page report explores whether HTS cables will now accelerate to help relieve power grid bottlenecks. And who benefits within the supply chain?

-

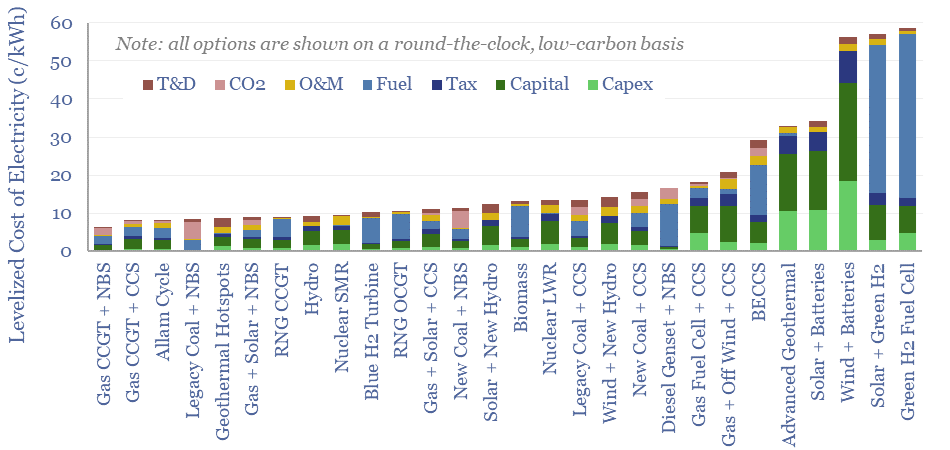

Low-carbon baseload: walking through fire?

This 16-page report appraises 30 different options for low-carbon, round-the-clock power generation. Their costs range from 6 to 60 c/kWh. We also consider true CO2 intensity, time-to-market, land use, scalability and power quality. Seven insights follow for powering new grid loads, especially AI data centers.

-

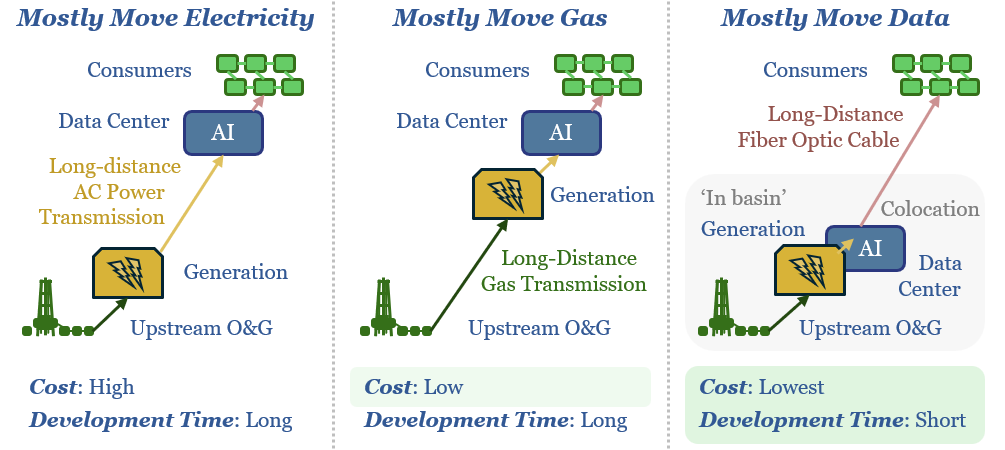

Moving targets: molecules, electrons or data?!

New AI data-centers are facing bottlenecked power grids. Hence this 15-page note compares the costs of constructing new power lines, gas pipelines or fiber optic links for GW-scale computing. The last option is best, and latency is a non-issue. This work suggests the best locations for AI data-centers, and the impacts on US shale, midstream and fiber-optics.

-

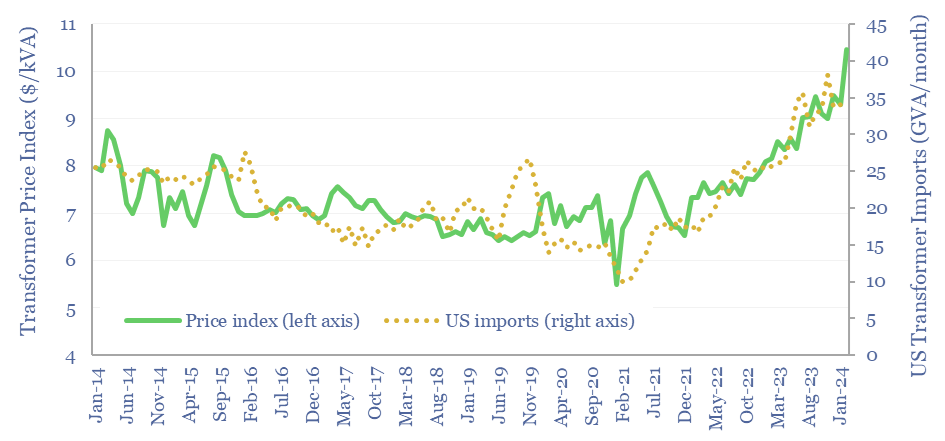

Transformer shortages: at their core?

Transformers are needed every time voltage steps up or down in the power grid. But lead times have now risen from 12-24 weeks to 1-3 years. And prices have risen 70%. Will these shortages structurally slow new energies and AI? Or improve transformer margins? Or is it just another boom-bust cycle? Answers are in this…

-

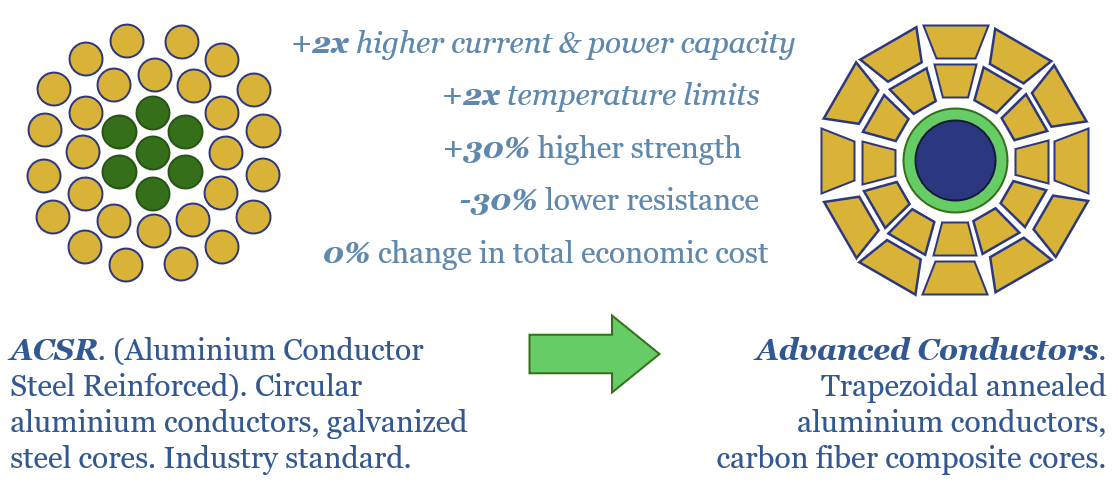

Advanced Conductors: current affairs?

Reconductoring today’s 7M circuit kilometers of transmission lines may help relieve power grid bottlenecks, while avoiding the 10-year ordeal of permitting new lines? Raising voltage may have hidden challenges. But Advanced Conductors stand out in this 18-page report. And the theme could double carbon fiber demand?

-

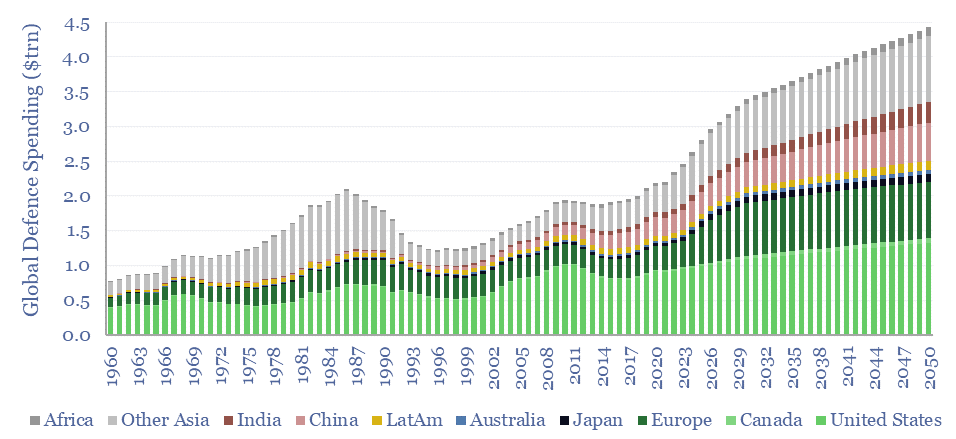

Arms race: defence versus decarbonization?

Does defence displace decarbonization as the developed world’s #1 policy goal through 2030, re-allocating $1trn pa of funds? Perhaps, but this 10-page note also finds a surprisingly large overlap between the two themes. European capital goods re-accelerate most? Some clean-tech does risk deprioritization?

-

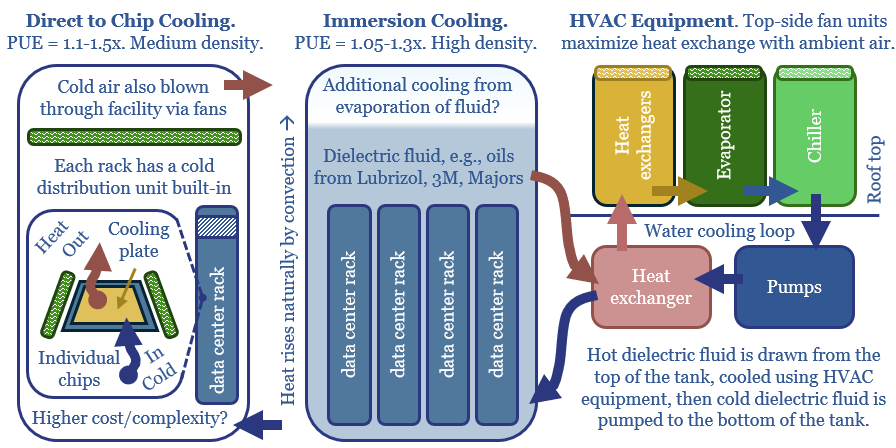

Cool customers: AI data-centers and industrial HVAC?

Chips must usually be kept below 27°C, hence cooling accounts for 10-20% of both the capex and the energy consumption of a typical data-center, as explored in this 14-page report. How much does climate matter? What changes lie ahead? And which companies sell into this soon-to-double market for cooling equipment?

-

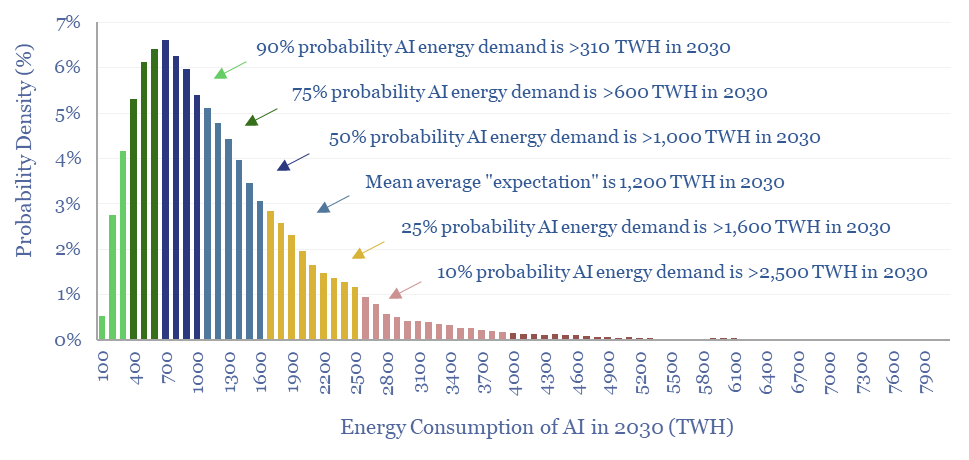

Energy intensity of AI: chomping at the bit?

The rising energy demands of AI are now the biggest uncertainty in all of global energy. To understand why, this 17-page note explains AI computing from first principles, across transistors, DRAM, GPUs and deep learning. GPU efficiency will inevitably increase, but compute increases faster. AI most likely uses 300-2,500 TWh in 2030, with a base case…

-

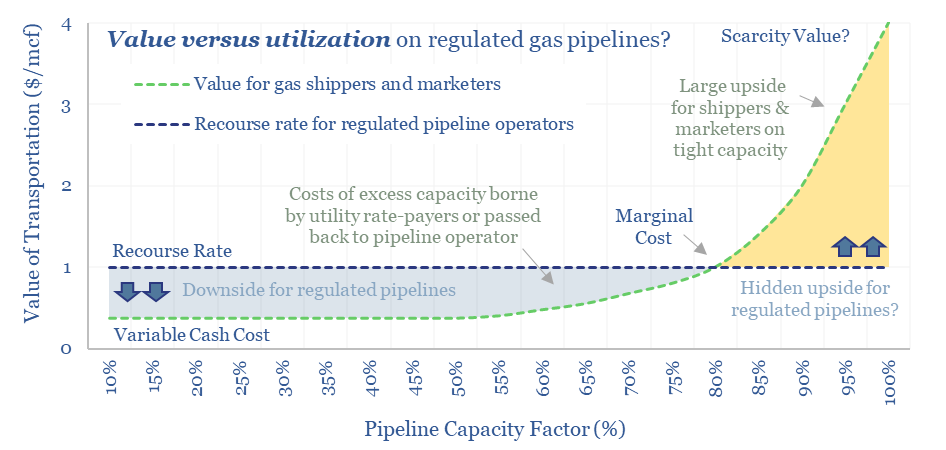

Midstream gas: pipelines have pricing power?!

FERC regulations are surprisingly interesting!! In theory, gas pipelines are not allowed to have market power. But increasingly, they do have it: gas use is rising, due to grid bottlenecks, volatile renewables and AI, while new pipeline investments are being hindered. So who benefits here? Answers are explored in this report.

-

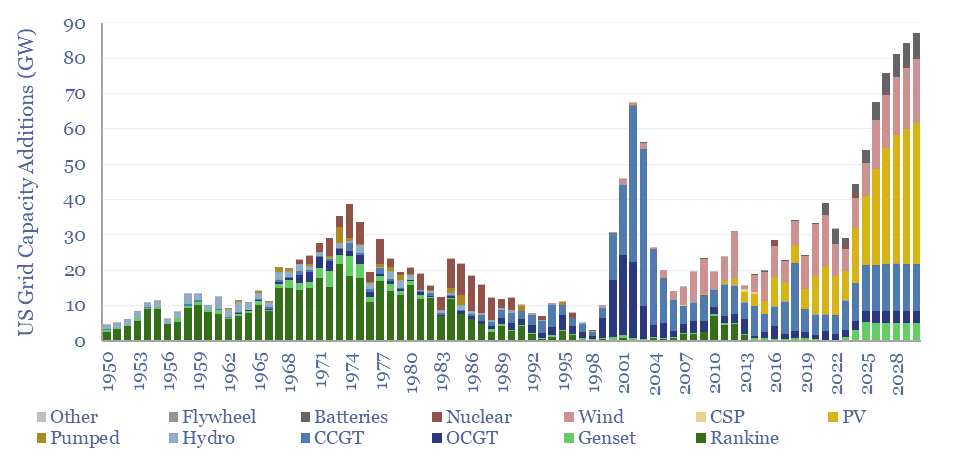

Energy and AI: the power and the glory?

The power demands of AI will contribute to the largest growth of new generation capacity in history. This 18-page note evaluates the power implications of AI data-centers. Reliability is crucial. Gas demand grows. Annual sales of CCGTs and back-up gensets in the US both rise by 2.5x? This is our most detailed AI report to…

Content by Category

- Batteries (89)

- Biofuels (44)

- Carbon Intensity (49)

- CCS (63)

- CO2 Removals (9)

- Coal (38)

- Company Diligence (94)

- Data Models (838)

- Decarbonization (160)

- Demand (110)

- Digital (59)

- Downstream (44)

- Economic Model (204)

- Energy Efficiency (75)

- Hydrogen (63)

- Industry Data (279)

- LNG (48)

- Materials (82)

- Metals (80)

- Midstream (43)

- Natural Gas (148)

- Nature (76)

- Nuclear (23)

- Oil (164)

- Patents (38)

- Plastics (44)

- Power Grids (130)

- Renewables (149)

- Screen (117)

- Semiconductors (32)

- Shale (51)

- Solar (68)

- Supply-Demand (45)

- Vehicles (90)

- Wind (44)

- Written Research (354)