Carbon Intensity


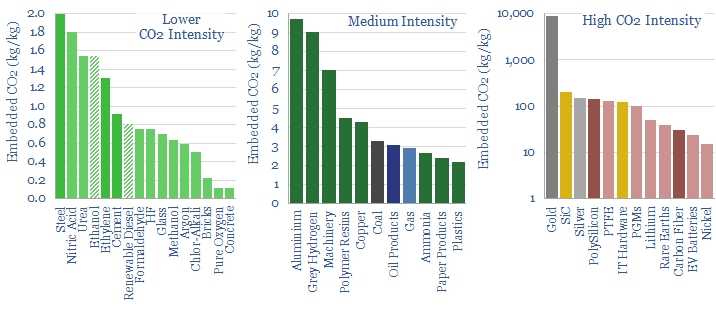

CO2 intensity of materials: an overview?

This data-file tabulates the energy intensity and CO2 intensity of materials, in tons of CO2, kWh of electricity and kWh of total energy use per ton of material. The build-ups are based on 160 economic models that we have constructed to date, and are simply intended as a helpful summary reference. Our key conclusions on CO2…


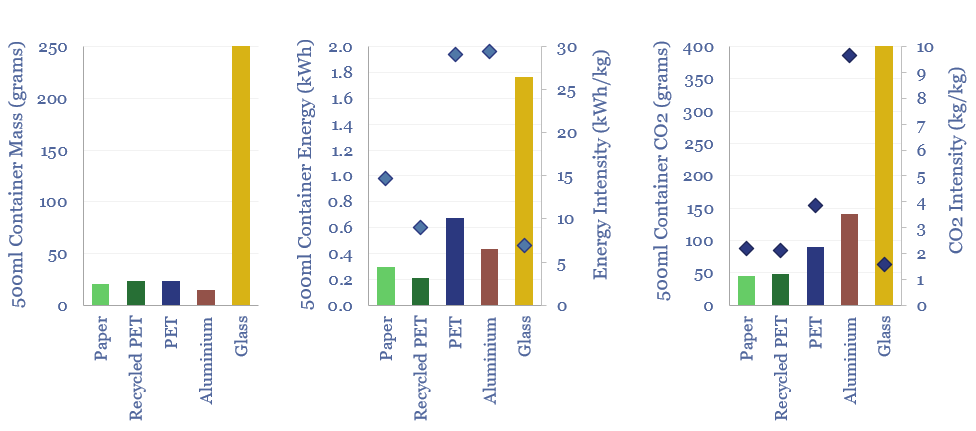

Plastic products: energy and CO2 intensity of plastics?

The energy intensity of plastic products and the CO2 intensity of plastics are built up from first principles in this data-file. Virgin plastic typically embeds 3-4 kg/kg of CO2e. But compared against glass, PET bottles embed 60% less energy and 80% less CO2. Compared against virgin PET, recycled PET embeds 70% less energy and 45%…


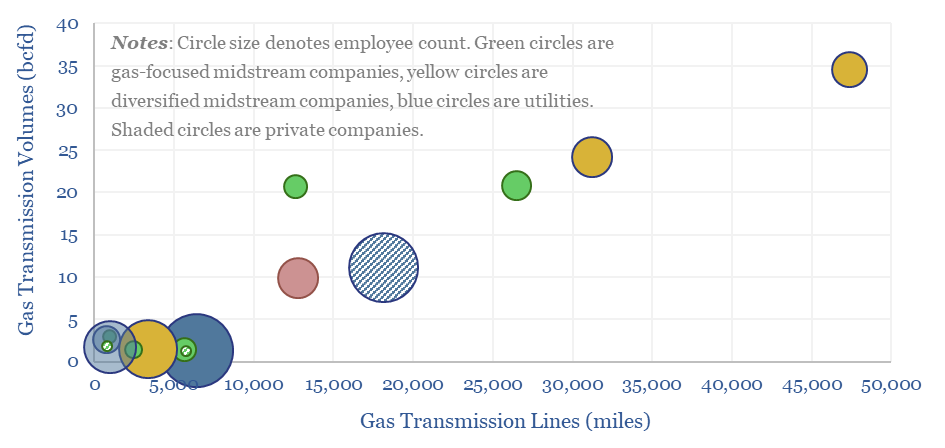

US gas transmission: by company and by pipeline?

This data-file aggregates granular data on US gas transmission, by company and by pipeline, for 40 major US gas pipelines, which transport 45 TCF of gas per annum across 185,000 miles, and for 3,200 compressors at 640 related compressor stations.


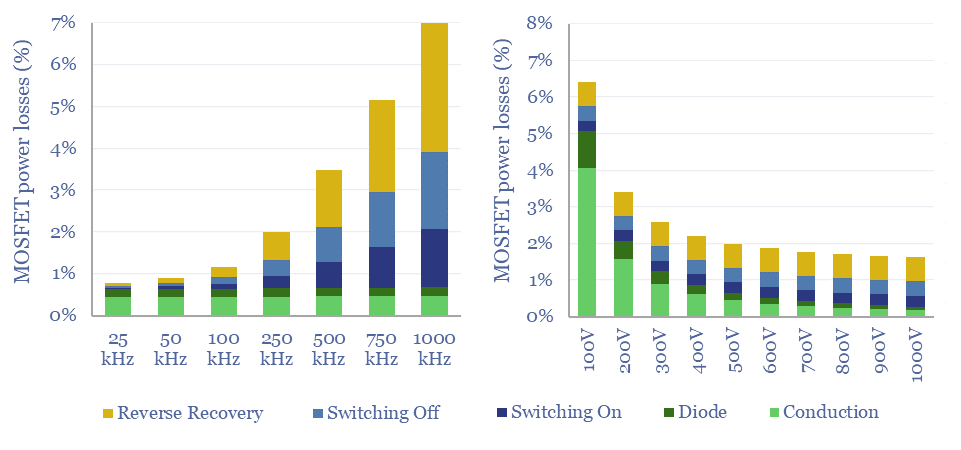

MOSFETs: energy use and power loss calculator?

MOSFETs are fast-acting digital switches, used to transform electricity across new energies and digital devices. MOSFET power losses are built up from first principles in this data-file, averaging 2% per MOSFET, with a range of 1-10% depending on voltage, switching, on-resistance, operating temperature and reverse recovery charge.


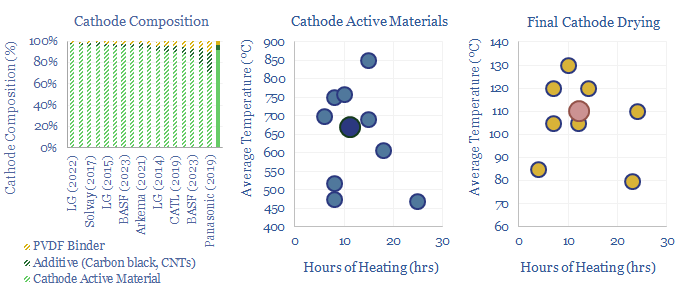

Battery cathode active materials and manufacturing?

Lithium ion batteries famously have cathodes containing lithium, nickel, manganese, cobalt, aluminium and/or iron phosphate. But how are these cathode active materials manufactured? This data-file gathers specific details from technical papers and patents by leading companies such as BASF, LG, CATL, Panasonic, Solvay and Arkema.


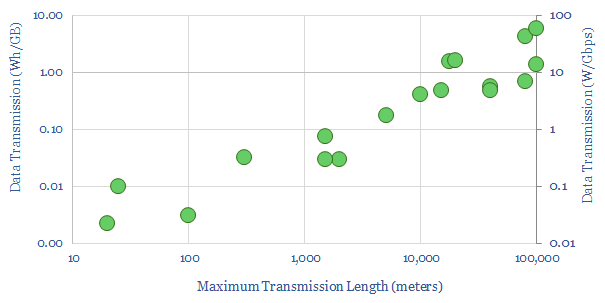

Energy intensity of fiber optic cables?

What is the energy intensity of fiber optic cables? Our best estimate is that moving each GB of internet traffic through the fixed network requires 40 Wh/GB of energy, across 20 hops, spanning 800 km and requiring an average of 0.05 Wh/GB/km. Generally, long-distance transmission is 1-2 orders of magnitude more energy efficient than short-distance.
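The headline figures above are mutually consistent, which can be checked with a few lines of arithmetic. The sketch below is only illustrative: the function name is hypothetical, and the per-hop averages are derived here by simple division, not taken from the data-file.

```python
def network_energy_wh_per_gb(distance_km: float, wh_per_gb_km: float) -> float:
    """Total energy per GB as distance times average intensity per km."""
    return distance_km * wh_per_gb_km


# Figures quoted in the summary above.
DISTANCE_KM = 800.0
HOPS = 20
WH_PER_GB_KM = 0.05

total_wh_per_gb = network_energy_wh_per_gb(DISTANCE_KM, WH_PER_GB_KM)

# Simple averages derived here for illustration (an assumption: the
# data-file may weight hops differently, since short hops are less efficient).
avg_km_per_hop = DISTANCE_KM / HOPS
avg_wh_per_gb_per_hop = total_wh_per_gb / HOPS

print(f"Total: {total_wh_per_gb:.1f} Wh/GB")
print(f"Average hop: {avg_km_per_hop:.0f} km, {avg_wh_per_gb_per_hop:.1f} Wh/GB")
```

Because short-distance hops are stated to be far less efficient than long-distance ones, the simple per-hop average is best read as a rough orientation rather than a description of any single hop.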


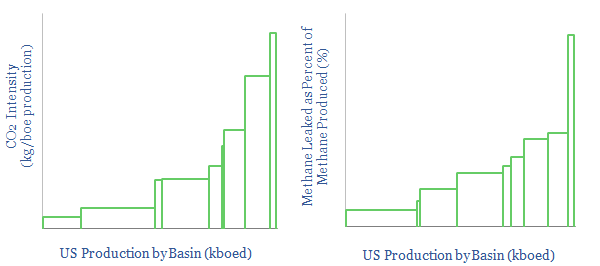

US CO2 and Methane Intensity by Basin

The CO2 intensity of oil and gas production is tabulated for 425 distinct company positions across 12 distinct US onshore basins in this data-file. Using the data, we can aggregate the total upstream CO2 intensity (in kg/boe), methane leakage rates (in %) and flaring intensity (in mcf/boe), by company, by basin and across the US Lower 48.


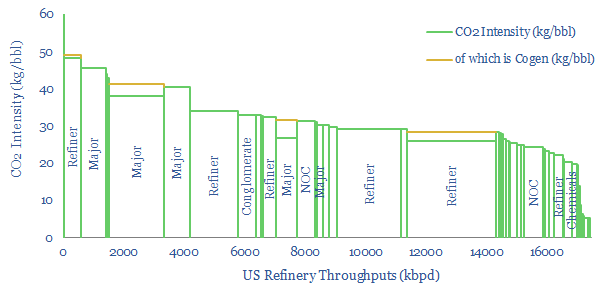

US Refinery Database: CO2 intensity by facility?

This US refinery database covers 125 US refining facilities, with an average capacity of 150kbpd, and an average CO2 intensity of 33 kg/bbl. Upper quartile performers emitted less than 20 kg/bbl, while lower quartile performers emitted over 40 kg/bbl. The goal of this refinery database is to disaggregate US refining CO2 intensity by company and…


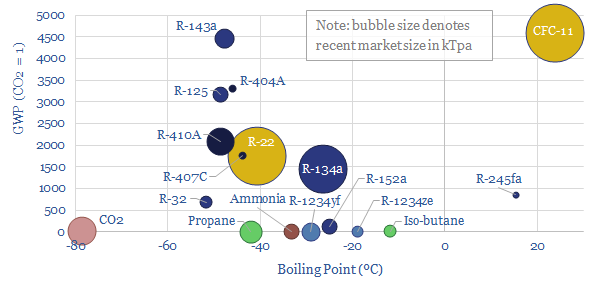

Refrigerants: leading chemicals for the rise of heat pumps?

This data-file is a breakdown of c.1 MTpa of refrigerants used in the recent past for cooling, across refrigerators, air conditioners, vehicles, industrial chillers and, increasingly, heat pumps. The market is shifting rapidly towards lower-carbon products, including HFOs, propane, iso-butane and even CO2 itself. We still see fluorinated chemicals markets tightening.


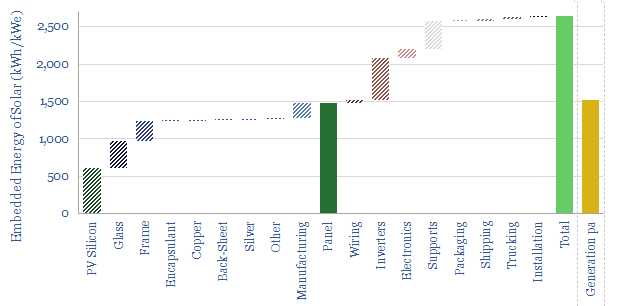

Solar: energy payback and embedded energy?

What is the energy payback and embedded energy of solar? We have aggregated the consumption of 10 different materials (in kg/kW) and around 10 other energy-consuming line-items (in kWh/kW). Our base case estimate is 2.5 MWh/kWe of solar and an energy payback of 1.5 years. Numbers and sensitivities can be stress-tested in the data-file.
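As a rough illustration of how the two base case numbers relate, the sketch below assumes a simple payback definition: embedded energy divided by annual generation. That definition, the function names and the implied capacity factor are assumptions made here for illustration; the data-file may calculate payback differently.

```python
HOURS_PER_YEAR = 8760


def energy_payback_years(embedded_mwh_per_kw: float, capacity_factor: float) -> float:
    """Years for 1 kW of solar to generate its own embedded energy."""
    annual_mwh_per_kw = capacity_factor * HOURS_PER_YEAR / 1000.0
    return embedded_mwh_per_kw / annual_mwh_per_kw


def implied_capacity_factor(embedded_mwh_per_kw: float, payback_years: float) -> float:
    """Capacity factor that reconciles an embedded energy and a payback period."""
    annual_mwh_per_kw = embedded_mwh_per_kw / payback_years
    return annual_mwh_per_kw * 1000.0 / HOURS_PER_YEAR


# Base case figures quoted in the summary above.
EMBEDDED_MWH_PER_KW = 2.5
PAYBACK_YEARS = 1.5

cf = implied_capacity_factor(EMBEDDED_MWH_PER_KW, PAYBACK_YEARS)
print(f"Implied capacity factor: {cf:.1%}")

# Sensitivity: payback at some hypothetical capacity factors.
for test_cf in (0.15, 0.20, 0.25):
    years = energy_payback_years(EMBEDDED_MWH_PER_KW, test_cf)
    print(f"Capacity factor {test_cf:.0%}: payback {years:.2f} years")
```

Under this simple definition, the quoted figures imply annual generation of about 1.7 MWh per kW, so payback lengthens in lower-yield locations and shortens in sunnier ones.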
