-

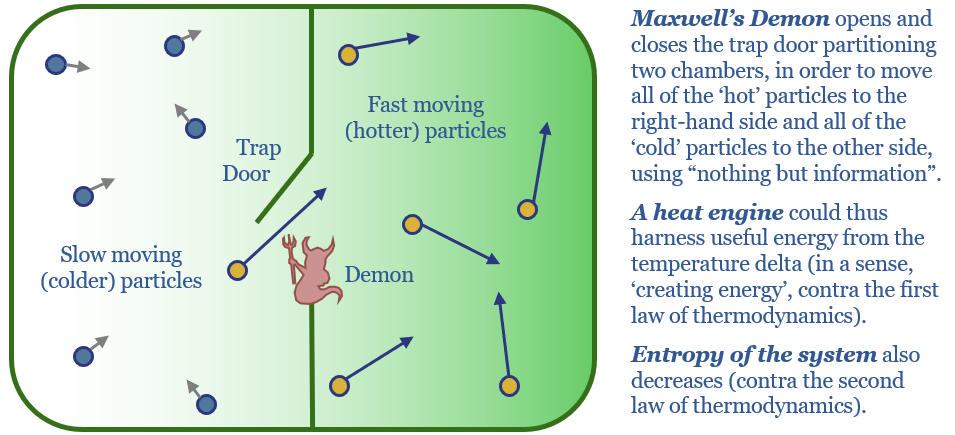

Maxwell’s demon: computation is energy?

Computation, the internet and AI are inextricably linked to energy. Information processing literally is an energy flow. This note explains the physics, from Maxwell’s demon, to the entropy of information, to the efficiency of computers.
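One well-known result linking information to energy is Landauer's bound, which puts the minimum energy to erase one bit at k·T·ln(2). Whether the note frames its argument around this bound is an assumption; the sketch below simply evaluates the formula at an assumed room temperature of 300 K. The function name is illustrative.

```python
import math

BOLTZMANN_J_PER_K = 1.380649e-23  # Boltzmann constant (exact SI value)


def landauer_limit_joules(temperature_k: float) -> float:
    """Minimum energy to erase one bit at a given temperature (Landauer's bound)."""
    return BOLTZMANN_J_PER_K * temperature_k * math.log(2)


# 300 K is an assumed room temperature, not a figure from the note.
room_temp_limit = landauer_limit_joules(300.0)
print(f"{room_temp_limit:.2e} J per bit erased")
```

Real computers dissipate many orders of magnitude more than this per logical operation, which is why the bound is usually treated as a theoretical floor rather than a practical target.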

-

Electric adventures: conclusions from an EV road trip?

It is a rite of passage for every energy analyst to rent an electric vehicle for an EV road trip, then document their observations and experiences. Our conclusions are that range anxiety is real, chargers benefit retailers, economics are debatable, power grids will be the biggest bottleneck and our EV growth forecasts are not overly…

-

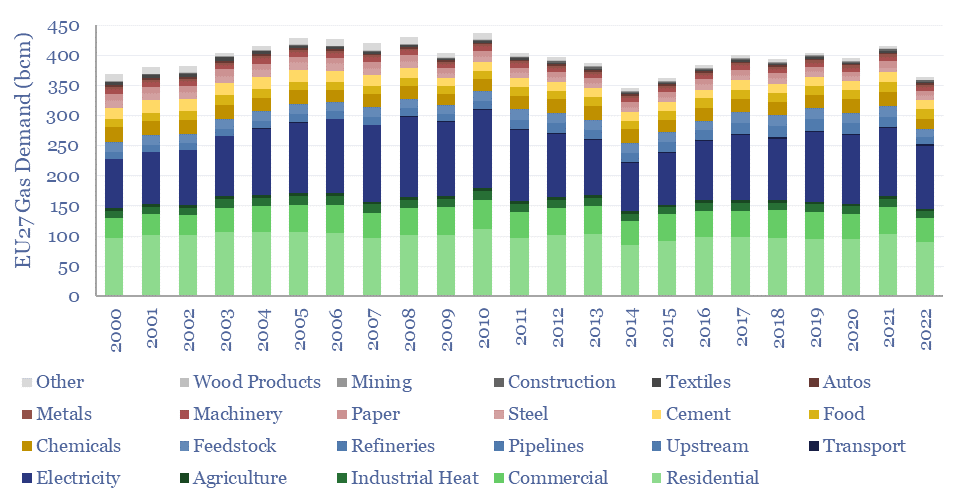

European gas: anatomy of an energy crisis?

Europe suffered a full-blown energy crisis in 2022. So what happened to gas demand as prices rose 5x from 2019 levels? European gas demand fell 13% overall in 2022, including 13% for heating, 6% for electricity and 17% for industry. The data suggest upside for European gas, global LNG and gas as the leading…

-

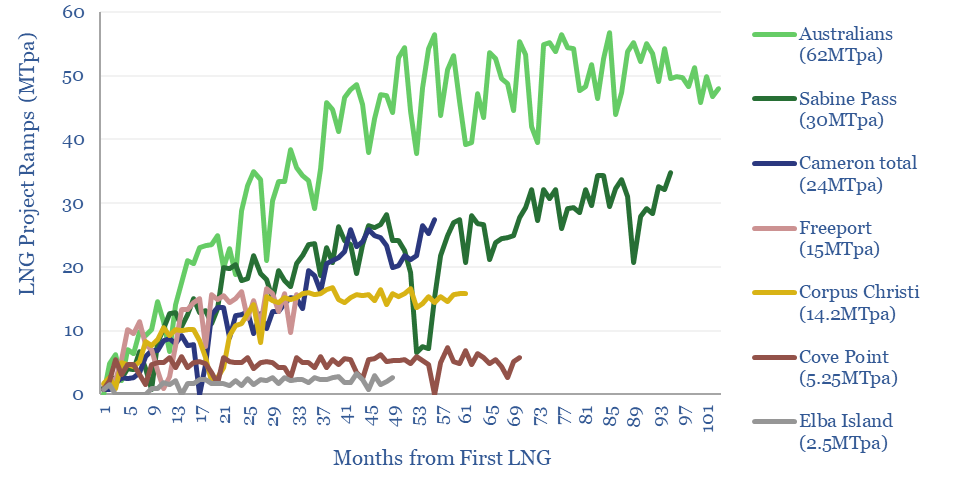

LNG ramp-rates: MTpa per month and volatility?

What are the typical ramp-rates of LNG plants, and how volatile are these ramp-ups? We have monthly data on several facilities in our LNG models, implying 4-5MTpa LNG trains ramp at +0.7MTpa/month, with ±35% monthly volatility around this trajectory. Thus do LNG ramps create upside for energy traders?
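As a rough illustration of the arithmetic, the sketch below converts the quoted +0.7MTpa/month ramp rate into months to reach nameplate capacity for 4MTpa and 5MTpa trains. It assumes a linear ramp, and it reads the 35% volatility as a simple band around the monthly increment; both are simplifying assumptions, and the function names are hypothetical.

```python
def months_to_nameplate(capacity_mtpa: float, ramp_mtpa_per_month: float = 0.7) -> float:
    """Months for a train to reach nameplate capacity, assuming a linear ramp."""
    return capacity_mtpa / ramp_mtpa_per_month


def monthly_ramp_band(ramp_mtpa_per_month: float = 0.7, volatility: float = 0.35):
    """Low and high monthly increments, treating volatility as a +/- band (an assumption)."""
    low = ramp_mtpa_per_month * (1 - volatility)
    high = ramp_mtpa_per_month * (1 + volatility)
    return low, high


for capacity in (4.0, 5.0):
    print(f"{capacity:.0f} MTpa train: {months_to_nameplate(capacity):.1f} months to nameplate")

low, high = monthly_ramp_band()
print(f"Monthly increment band: {low:.3f} to {high:.3f} MTpa/month")
```

On these assumptions, a 4-5MTpa train takes roughly six to seven months to ramp, with individual months landing anywhere from about 0.46 to 0.95 MTpa of added capacity.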

-

Email deliverability: who broke the internet?

This video explains email mailing lists, SPF, DKIM, DMARC, lessons learned over 15 years, and an unfortunate issue from December that prevented 4,000 subscribers from receiving our research. We’re sorry. We’ve fixed it! And some comments follow below to make sure important research reaches you.

-

Energy transition from first principles?

Our top three questions in the energy transition are depicted above. Hence we have become somewhat obsessed with analyzing the energy transition from first principles, to help our clients understand the global energy system, understand new energy technologies and understand key industries.

-

Energy transition: three reflections on 2023?

In October-2022, we wrote that high interest rates could create an ‘unbridled disaster’ for new energies in 2023. So where could we have done better in helping our clients to navigate this challenging year? Our new year’s resolutions are clearer conclusions, predictions over moralizations, and looking through macro noise to keep long-term mega-trends in mind.

-

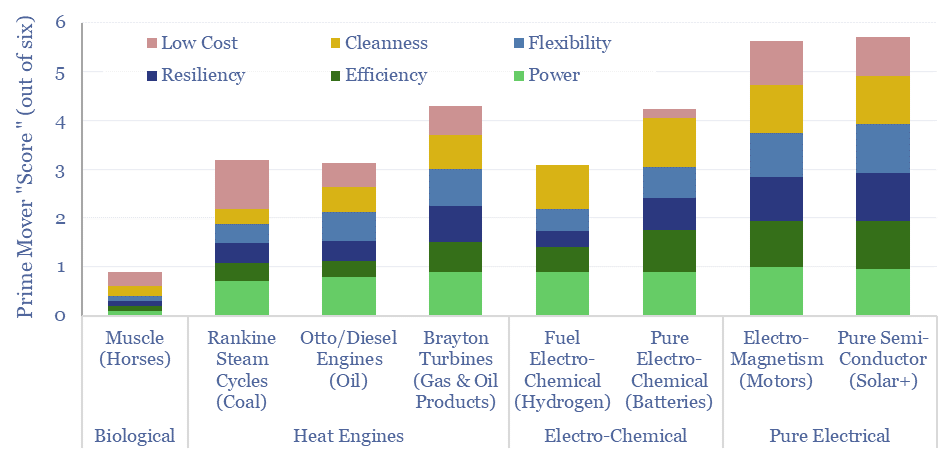

Thermodynamics of prime movers: energy from first principles?

A highlight of 2023 has been going back to first principles, to explain the underpinnings of prime movers in the global energy system. If you understand the thermodynamics of prime movers, you will inevitably conclude that the world is evolving towards solar, semiconductors, electromagnetic motors, lithium batteries and high-grade gas turbines.

-

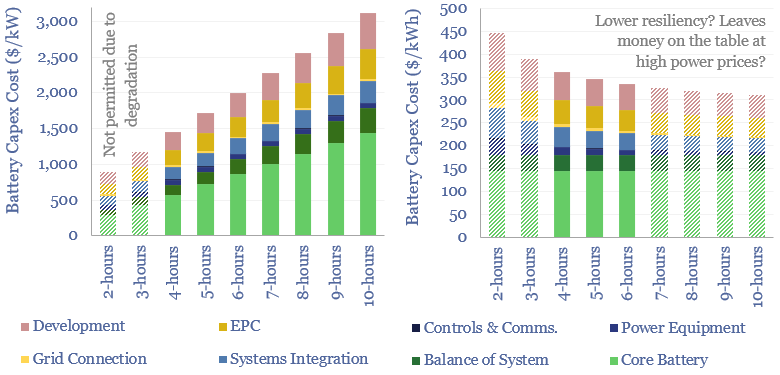

Grid-scale battery costs: $/kW or $/kWh?

Grid-scale battery costs can be measured in $/kW or $/kWh terms. Thinking in kW terms is more helpful for modelling grid resiliency. A good rule of thumb is that grid-scale lithium-ion batteries will have 4 hours of storage duration, as this minimizes per kW costs and maximizes the revenue potential from power price arbitrage.
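The two cost metrics describe the same installed cost divided by different denominators, so they are linked by storage duration. The minimal sketch below shows the conversion for a hypothetical 100 MW / 400 MWh system; the $120M installed cost is a made-up illustrative figure, not a number from our models, and the function name is hypothetical.

```python
def battery_cost_metrics(total_cost_usd: float, power_mw: float, energy_mwh: float) -> dict:
    """Express one installed cost in both $/kW and $/kWh terms."""
    return {
        "duration_hours": energy_mwh / power_mw,
        "usd_per_kw": total_cost_usd / (power_mw * 1_000),
        "usd_per_kwh": total_cost_usd / (energy_mwh * 1_000),
    }


# Hypothetical system: 100 MW of power, 400 MWh of energy, $120M installed cost.
metrics = battery_cost_metrics(total_cost_usd=120e6, power_mw=100.0, energy_mwh=400.0)
print(metrics)
```

For any given system, $/kW equals $/kWh multiplied by the duration in hours, so a cost figure is only meaningful alongside the storage duration it assumes.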

-

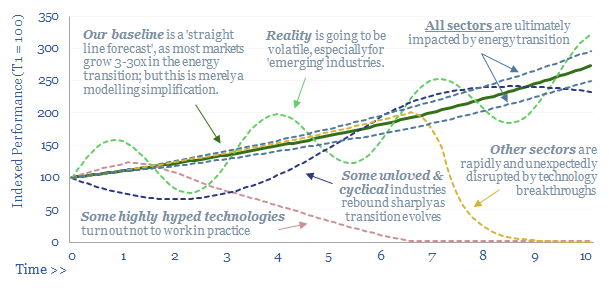

Energy transition: active duty?

After five years researching the energy transition, we believe it favors active managers. Within the energy transition, active managers can add value by ranging across this vast mega-trend, balancing risk factors in a portfolio, timing volatility, understanding complexity, unearthing specific opportunities and benchmarking ESG leaders and laggards.

Content by Category

- Batteries (89)

- Biofuels (44)

- Carbon Intensity (49)

- CCS (63)

- CO2 Removals (9)

- Coal (38)

- Company Diligence (95)

- Data Models (840)

- Decarbonization (160)

- Demand (110)

- Digital (60)

- Downstream (44)

- Economic Model (205)

- Energy Efficiency (75)

- Hydrogen (63)

- Industry Data (279)

- LNG (48)

- Materials (82)

- Metals (80)

- Midstream (43)

- Natural Gas (149)

- Nature (76)

- Nuclear (23)

- Oil (164)

- Patents (38)

- Plastics (44)

- Power Grids (130)

- Renewables (149)

- Screen (117)

- Semiconductors (32)

- Shale (51)

- Solar (68)

- Supply-Demand (45)

- Vehicles (90)

- Wind (44)

- Written Research (354)