-

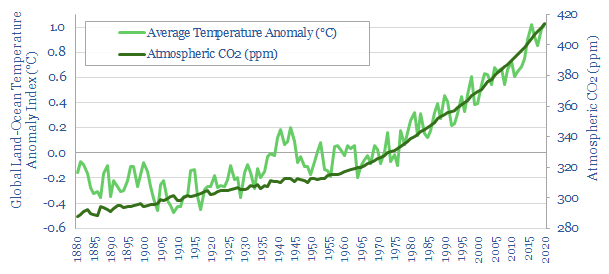

Global average temperature data?

Global average surface temperatures have risen by 1.2-1.3°C since pre-industrial times and continue rising at 0.02-0.03°C per year, according to most data-sets. This note assesses their methodologies and controversies. Uncertainty in the data is likely higher than admitted, but the strong upward warming trend is robust.

-

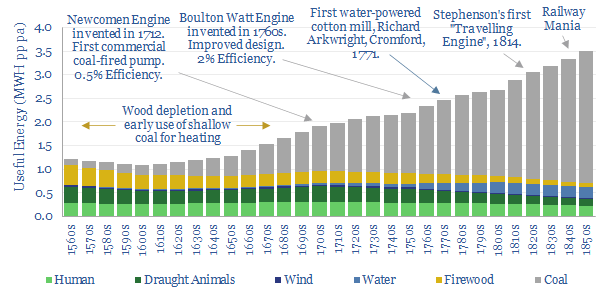

Britain’s industrial revolution: what happened to energy demand?

Britain’s remarkable industrialization in the 18th and 19th centuries was part of the world’s first great energy transition. In this short note, we have aggregated data, estimated the end uses of different energy sources in the Industrial Revolution, and drawn five key conclusions for the current Energy Transition.

-

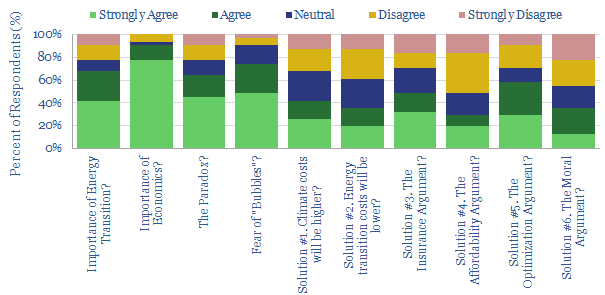

Costs of climate change: solving the paradox?

It is fully possible to reach ‘net zero’ by 2050. The costs ratchet up to $3trn per year. Paradoxically, this is 2x higher than the unmitigated cost of climate change. This note outlines different decision-makers’ perspectives on resolving the paradox.

-

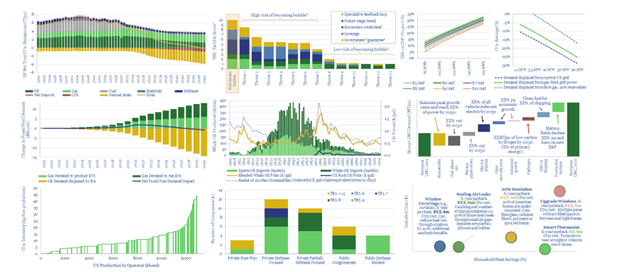

Our Top Ten Research Notes of 2020

We have published 250 new research notes and data-files on our website in 2020. The purpose of this review is to highlight the ‘top ten’ reports, summarizing all of our work, and drawing out the best opportunities.

-

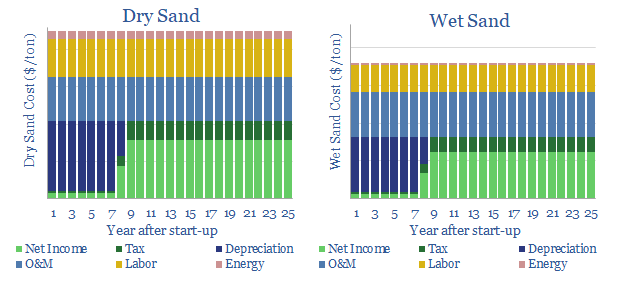

Wet sand: what impacts on shale breakevens and CO2?

This note presents the exciting new prospect of using ‘wet sand’ for hydraulic fracturing in shale plays. This can reduce breakeven costs by up to $1/bbl and CO2 intensity by up to 0.6kg/bbl.

-

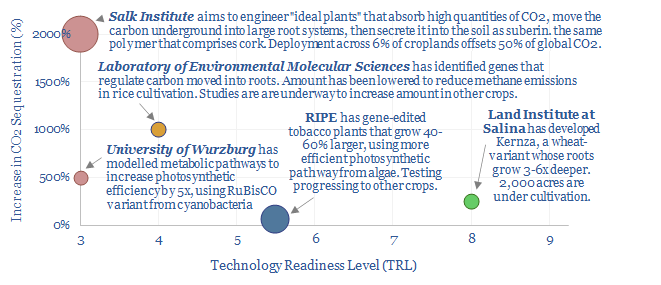

Bio-engineer plants to absorb more CO2?

Our roadmap towards ‘net zero’ requires 20-30GTpa of carbon offsets using nature-based solutions, including reforestation and soil carbon. This short note considers whether the task could be facilitated by bio-engineering plants to sequester more CO2. We find exciting ambitions and promising pilots, but the space is not yet investable.

-

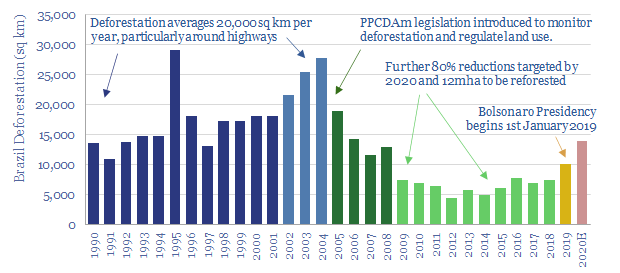

The Amazon tipping point theory?

Another 2-10% deforestation could make the Amazon rainforest too dry to sustain itself. 80GT of carbon could thus be released, inflating atmospheric CO2 above 450ppm. This matters as Amazon deforestation rates have already doubled under Jair Bolsonaro’s presidency. We explore policy implications.

-

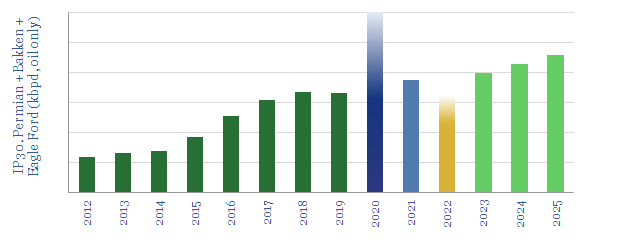

Shale productivity: snakes and ladders?

Unprecedented high-grading is now occurring in the US shale industry, amidst challenging industry conditions. This means production surprises to the upside in 2020-21 and disappoints during the recovery. Our 7-page note explores the causes and consequences of the whipsaw effect.

-

Biden presidency: our top ten research reports?

Joe Biden’s presidency will prioritize energy transition among its top four focus areas. Thus we present our top ten pieces of research that gain increasing importance as the new landscape unfolds. We are cautious on bubbles and supply shortages. But decision-makers will become more discerning around CO2.

-

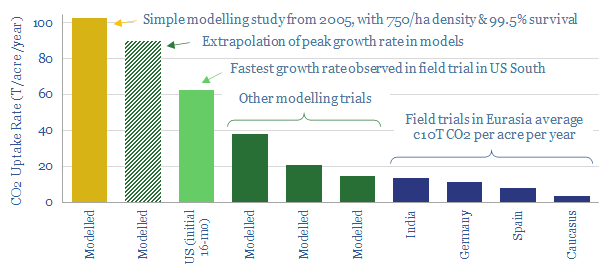

Paulownia tomentosa: the miracle tree?

The ‘Empress Tree’ has been highlighted as a miracle solution to climate change, with potential to absorb 10x more CO2 than other tree species, while its strong, light-weight timber is prized as the “aluminium of woods”. This note investigates the potential. There is clear room to optimise nature-based solutions. But there may be downside…
